Beyond Fact Retrieval: Episodic Memory for RAG with Generative Semantic Workspaces (2511.07587v1)

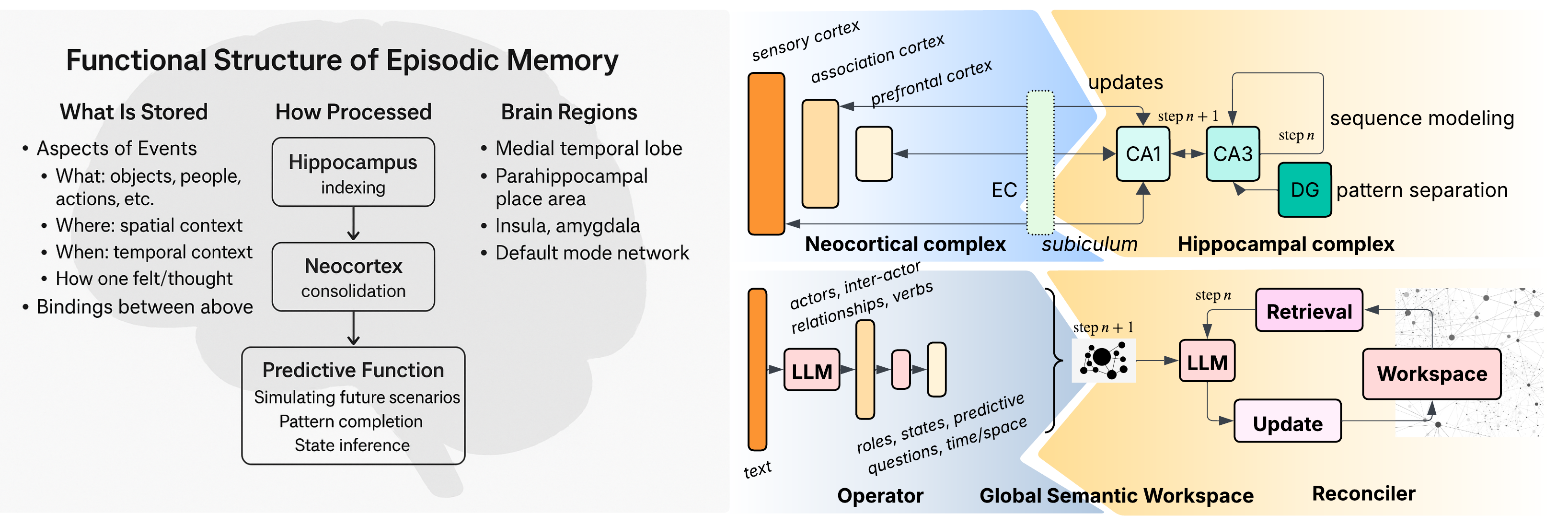

Abstract: LLMs face fundamental challenges in long-context reasoning: many documents exceed their finite context windows, and performance on texts that do fit degrades with sequence length, so LLMs must be augmented with external memory frameworks. Current solutions, which have evolved from retrieval over semantic embeddings to more sophisticated structured knowledge-graph representations for improved sense-making and associativity, are tailored for fact-based retrieval and fail to build the space-time-anchored narrative representations required for tracking entities through episodic events. To bridge this gap, we propose the Generative Semantic Workspace (GSW), a neuro-inspired generative memory framework that builds structured, interpretable representations of evolving situations, enabling LLMs to reason over changing roles, actions, and spatiotemporal contexts. Our framework comprises an Operator, which maps incoming observations to intermediate semantic structures, and a Reconciler, which integrates these into a persistent workspace that enforces temporal, spatial, and logical coherence. On the Episodic Memory Benchmark (EpBench) (Huet et al., 2025), comprising corpora ranging from 100k to 1M tokens in length, GSW outperforms existing RAG-based baselines by up to 20%. Furthermore, GSW is highly efficient: it reduces query-time context tokens by 51% compared to the next most token-efficient baseline, considerably lowering inference-time costs. More broadly, GSW offers a concrete blueprint for endowing LLMs with human-like episodic memory, paving the way for more capable agents that can reason over long horizons.

Explain it Like I'm 14

Beyond Fact Retrieval: A simple explanation of “Episodic Memory for RAG with Generative Semantic Workspaces”

1) What is this paper about?

This paper tries to help AI systems, like chatbots, remember and reason about long, story-like information. Instead of just looking up facts, the authors want AI to track who did what, where, and when—across many documents and long time periods—much like how people remember episodes in their lives. They introduce a new memory system called the Generative Semantic Workspace (GSW) that helps AI build and keep a “story map” it can use to answer questions later.

2) What questions are the researchers trying to answer?

The paper focuses on three big questions:

- Can we give AI a memory that tracks people, places, times, and actions across long stories or reports, not just short facts?

- Can this memory help AI answer questions that require connecting details spread over many chapters or documents?

- Can it do this both accurately and efficiently (using fewer tokens, which saves time and money)?

3) How does their method work?

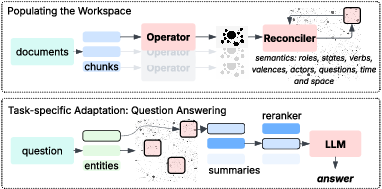

Think of GSW as a neat, organized “world model” or scrapbook of events. It builds and updates a structured memory while reading. It has two main parts:

- The Operator: This is like a careful note‑taker. When the AI reads a new document, the Operator pulls out the key pieces—who is involved (actors), what roles they play (like “presenter” or “police officer”), what they did (verbs/actions), where it happened (location), and when it happened (time). It turns messy text into clean, labeled notes.

- The Reconciler: This is like a super‑organizer. It takes the new notes and merges them into the big memory, making sure everything fits together logically and consistently over time and space. For example, if two mentions of “Carter Stewart” refer to the same person at different times, the Reconciler links them to a single entity and keeps that person's timeline in order, so events from different times are not mixed up.
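To make the two roles concrete, here is a minimal Python sketch under simplifying assumptions. The `Note` and `Workspace` classes and the `reconcile` method are hypothetical names, not the authors' code, and entity resolution is reduced to matching normalized names, whereas the paper's Reconciler also enforces temporal, spatial, and logical coherence. It shows an Operator-style note with actor, role, action, location, and time fields, and a Reconciler step that files each note under one actor and keeps that actor's events in time order.

```python
from dataclasses import dataclass, field


@dataclass
class Note:
    """One structured note, as a hypothetical Operator might emit it."""
    actor: str     # who is involved
    role: str      # e.g. "presenter" or "police officer"
    action: str    # what they did
    location: str  # where it happened
    time: str      # when it happened; ISO dates (YYYY-MM-DD) are assumed
    chapter: int   # which chapter the note was extracted from


@dataclass
class Workspace:
    """Hypothetical persistent memory holding one timeline per actor."""
    timelines: dict[str, list[Note]] = field(default_factory=dict)

    def reconcile(self, notes: list[Note]) -> None:
        for note in notes:
            # Naive entity resolution: mentions whose names match after
            # lowercasing and whitespace cleanup are treated as one actor.
            key = " ".join(note.actor.lower().split())
            timeline = self.timelines.setdefault(key, [])
            # Skip exact duplicates so re-reading a chapter adds nothing new.
            if note not in timeline:
                timeline.append(note)
            # Keep the actor's events in time order (ISO dates sort as text);
            # the chapter number breaks ties between same-day events.
            timeline.sort(key=lambda n: (n.time, n.chapter))


# Two mentions of the same person, read out of chronological order.
ws = Workspace()
ws.reconcile([Note("Carter Stewart", "presenter", "gave a talk", "museum", "2024-09-15", 12)])
ws.reconcile([Note("carter  stewart", "witness", "spoke to police", "harbor", "2024-09-10", 40)])
print([n.action for n in ws.timelines["carter stewart"]])
# prints ['spoke to police', 'gave a talk']
```

Matching on names alone would wrongly merge two different people who share a name; handling that kind of ambiguity is the job of a more context-aware Reconciler.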

Together, these parts create a “workspace” that:

- Tracks actors, roles, actions, times, and locations.

- Connects events in the right order.

- Helps predict what might happen next (like real episodic memory).

How the system answers questions:

- It finds the people or places mentioned in the question.

- It pulls short, focused summaries about those matches from the memory.

- It re-ranks those summaries by relevance.

- It sends only the most helpful summaries to an AI model to produce the final answer.
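The four query steps can be sketched as a small Python function. This is a hypothetical illustration, not the paper's implementation: memory is assumed to be a dictionary from entity names to short summaries, entity matching is a plain substring check, and word overlap stands in for whatever reranker the real system uses. The `build_prompt` and `tokenize` names are made up for this example.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for a real tokenizer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_prompt(question: str, memory: dict[str, list[str]], k: int = 2) -> str:
    """Hypothetical sketch of the query steps: match, pull, re-rank, trim."""
    q_lower = question.lower()
    # 1) Find entities stored in memory that the question mentions.
    matched = [name for name in memory if name.lower() in q_lower]
    # 2) Pull the short summaries stored for those entities.
    candidates = [s for name in matched for s in memory[name]]
    # 3) Re-rank by word overlap with the question (stand-in for a reranker).
    q_words = tokenize(question)
    ranked = sorted(candidates, key=lambda s: len(q_words & tokenize(s)), reverse=True)
    # 4) Keep only the top-k summaries as context for the answering LLM.
    context = "\n".join(ranked[:k])
    return f"Context:\n{context}\n\nQuestion: {question}"


memory = {
    "Carter Stewart": [
        "Carter Stewart presented at the museum on September 15.",
        "Carter Stewart met a police officer at the harbor.",
    ],
    "Harbor": ["The harbor was closed for repairs in October."],
}
print(build_prompt("Where did Carter Stewart meet the police officer?", memory, k=1))
```

Passing only the top `k` summaries, rather than whole chapters, is what keeps the number of tokens sent to the model small.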

To test the system, the authors used a dataset called EpBench. It contains long, story‑like “books” with hundreds to thousands of chapters, along with questions, some of which require combining details from as many as 17 different chapters.

4) What did they find, and why does it matter?

Key findings:

- Better accuracy: On the smaller EpBench set (200 chapters), GSW achieved the best overall score (F1 ≈ 0.85) and the best precision and recall, beating strong baselines. It performed especially well on hard questions that required pulling together details from many chapters (up to 17), improving recall by up to 20% over the next best method.

- Works at larger scale: On a much bigger set (2000 chapters), GSW still led with an overall F1 ≈ 0.773, about 15% better than the best traditional retrieval baseline.

- More efficient: GSW uses far fewer tokens per question (about half as many as the next most efficient method). That means lower costs, faster answers, and fewer errors because the AI sees only what it needs.
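Precision, recall, and F1 are related by a simple formula: precision is the share of the system's answers that are correct, recall is the share of the correct answers the system found, and F1 = 2 · precision · recall / (precision + recall). The sketch below assumes each answer is scored as a set of items (for example, a list of places or dates); this set-based scoring and the `precision_recall_f1` name are assumptions for illustration, not EpBench's exact evaluation code.

```python
def precision_recall_f1(predicted: set[str], gold: set[str]) -> tuple[float, float, float]:
    """Set-based precision, recall and F1 for one question (assumed scoring scheme)."""
    if not predicted and not gold:
        # Assumption: correctly answering "nothing" counts as a perfect score.
        return 1.0, 1.0, 1.0
    hits = len(predicted & gold)
    precision = hits / len(predicted) if predicted else 0.0
    recall = hits / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# The true answer has 4 places; the system names 3, and 2 of those are right.
p, r, f = precision_recall_f1(
    {"harbor", "museum", "airport"},
    {"harbor", "museum", "library", "park"},
)
print(round(p, 3), round(r, 3), round(f, 3))
# prints 0.667 0.5 0.571
```

How per-question scores are combined into the overall figures reported above is not described in this summary.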

Why it matters:

- Many real documents are long narratives—news coverage over days, legal records, military reports, corporate filings. You need more than fact lookup; you need a memory of episodes.

- GSW helps AI handle these by building a clear, structured story map, reducing confusion and hallucinations.

- It’s both accurate and efficient, which is practical for real systems.

5) What’s the bigger impact?

If AI can remember and reason about long-running situations more like humans do, it can:

- Become a more reliable assistant for research, journalism, law, and business, where tracking evolving events is essential.

- Handle long‑term tasks (like project management or investigations) by recalling who did what, where, and when over time.

- Reduce costs and mistakes by only using the most relevant information when answering questions.

In short, this paper shows a promising way to give AI “episodic memory,” helping it understand and reason across long stories—not just retrieve single facts. That could make future AI agents more trustworthy and better at complex, real‑world tasks.