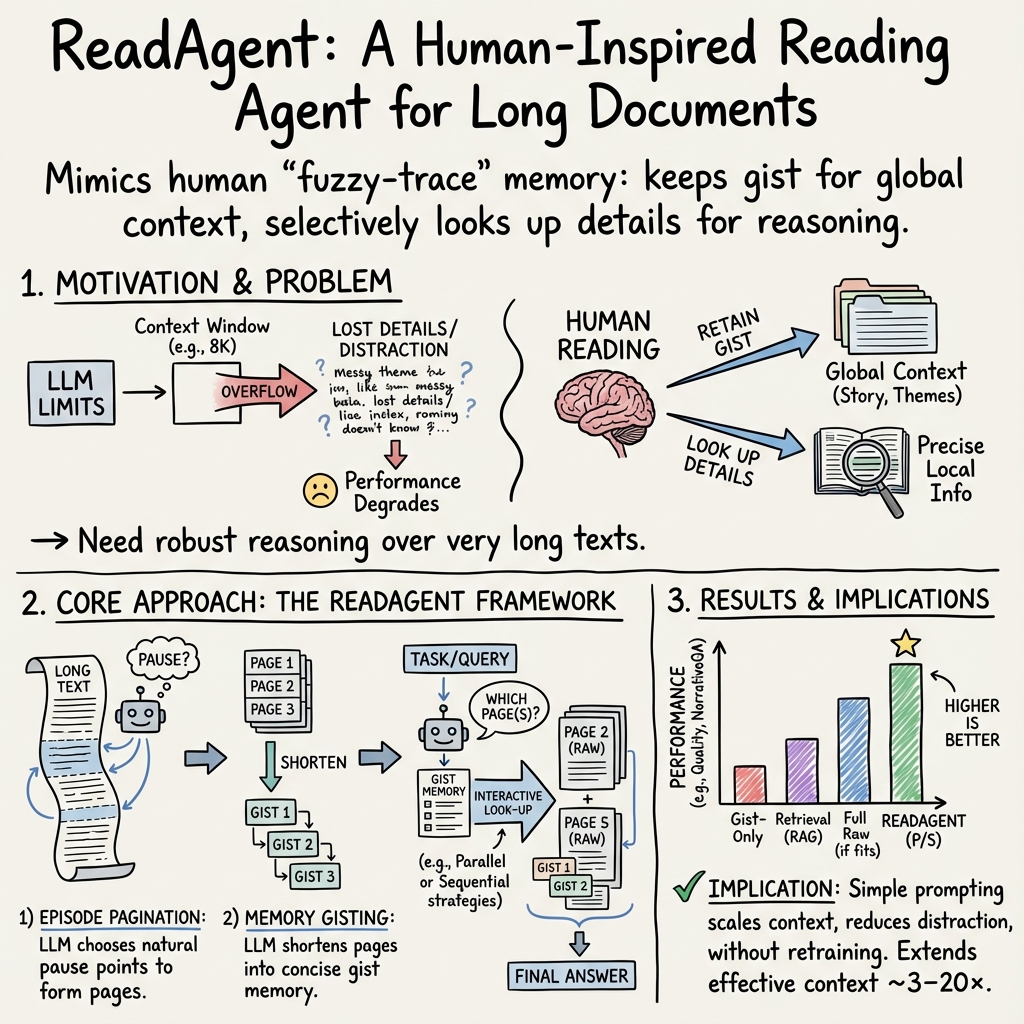

- The paper presents ReadAgent, which extends the effective context length of LLMs by up to 20× by mimicking human gist memory strategies.

- It groups document content into memory episodes, compresses each episode into a brief gist, and interactively looks up the original passages when details are needed.

- On the QuALITY, NarrativeQA, and QMSum benchmarks, ReadAgent improves over baseline models on long-document comprehension tasks.

A Human-Inspired Reading Agent with Gist Memory of Very Long Contexts

Introduction

Current LLMs struggle with long documents for two reasons: they have a maximum context length, and their performance degrades on long inputs even within that limit. This paper introduces ReadAgent, an LLM agent system designed to mimic how humans read long documents. People tend to retain the gist of what they read and go back to the text for details when necessary. ReadAgent implements the same idea with a simple prompting system, significantly extending the effective context length an LLM can handle.

Core Contributions

The paper's primary contribution is the development of ReadAgent, which demonstrates a human-like reading capability by:

- Deciding what content to group together into a memory episode,

- Compressing these episodes into short, gist-like memories,

- Interactively looking up detailed passages when required for task completion.
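
The three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: `llm` is a hypothetical callable that maps a prompt string to a completion string, the prompt wording is invented for the example, and `paginate` uses a simple word budget as a stand-in for the step where the model decides where an episode ends.

```python
import re
from typing import Callable, List

# Hypothetical interface: any function that takes a prompt and returns text.
LLM = Callable[[str], str]


def paginate(paragraphs: List[str], max_words: int) -> List[str]:
    """Group consecutive paragraphs into pages (episodes).

    Assumption: a word budget replaces the LLM's choice of break point.
    """
    pages, current, count = [], [], 0
    for para in paragraphs:
        n = len(para.split())
        # Close the current page if adding this paragraph would exceed the budget.
        if current and count + n > max_words:
            pages.append("\n".join(current))
            current, count = [], 0
        current.append(para)
        count += n
    if current:
        pages.append("\n".join(current))
    return pages


def gist_pages(pages: List[str], llm: LLM) -> List[str]:
    """Compress each page into a short gist with one LLM call per page."""
    return [llm(f"Shorten the following passage:\n{page}") for page in pages]


def answer(question: str, pages: List[str], gists: List[str],
           llm: LLM, max_lookups: int = 2) -> str:
    """Ask which pages to re-read, then answer from gists plus those pages."""
    memory = "\n".join(f"<Page {i}> {g}" for i, g in enumerate(gists))
    reply = llm(
        f"Gists:\n{memory}\nQuestion: {question}\n"
        "Which pages should be re-read? Reply with page numbers."
    )
    # Keep only valid, de-duplicated page indices, capped at max_lookups.
    wanted = [int(tok) for tok in re.findall(r"\d+", reply)]
    wanted = [i for i in dict.fromkeys(wanted) if 0 <= i < len(pages)][:max_lookups]
    # Swap the requested gists for their original pages; keep the rest as gists.
    context = "\n".join(
        f"<Page {i}> {pages[i] if i in wanted else gists[i]}"
        for i in range(len(pages))
    )
    return llm(f"Context:\n{context}\nQuestion: {question}\nAnswer:")
```

Only the requested pages are expanded back to full text, so the final prompt stays close to the size of the gist memory rather than the size of the original document.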

With a straightforward implementation, ReadAgent extends the effective context length by up to 20× compared to baseline models on long-document comprehension tasks. It improves performance on all three evaluated benchmarks (QuALITY, NarrativeQA, and QMSum), which supports the practical value of combining episodic gist memories with interactive retrieval.

Theoretical Implications

ReadAgent's approach suggests that scaling LLMs to long contexts doesn't necessarily require architectural modifications or extensive training. Strategic prompting and memory management, built on the model's existing language capabilities, can also be effective. The method aligns with fuzzy-trace theory, which holds that gist-based processing and retrieval from episodic memories are central to human comprehension and reasoning over extended contexts.

The findings also indicate the potential for LLMs to process information more effectively using human-like reading strategies, raising interesting questions about the nature of comprehension and the role of memory in AI systems. This could have considerable implications for future research in understanding and improving LLM performance on complex tasks.

Practical Applications and Future Directions

Beyond its theoretical implications, ReadAgent offers a practical method for extending LLM capacity in real-world applications. Examples include legal document analysis, exhaustive literature reviews, and detailed narrative comprehension, none of which would require architectural changes to the underlying models.

Moreover, the successful implementation for web navigation tasks illustrates ReadAgent's versatility and its potential as a springboard for developing more sophisticated interactive systems. Future investigations might explore conditional gisting dependent on known tasks or iterative gisting for managing exceptionally long context histories, further enhancing the practical utility of this approach.

Conclusion

Overall, ReadAgent advances the use of LLMs for long-context tasks, demonstrating both the practical feasibility and the theoretical relevance of incorporating human-inspired reading strategies into AI systems. By combining gist memory generation with interactive lookup, ReadAgent improves performance on demanding benchmarks and opens new avenues for research in AI, cognition, and memory.