ComoRAG: A Cognitive-Inspired Memory-Organized RAG for Stateful Long Narrative Reasoning (2508.10419v1)

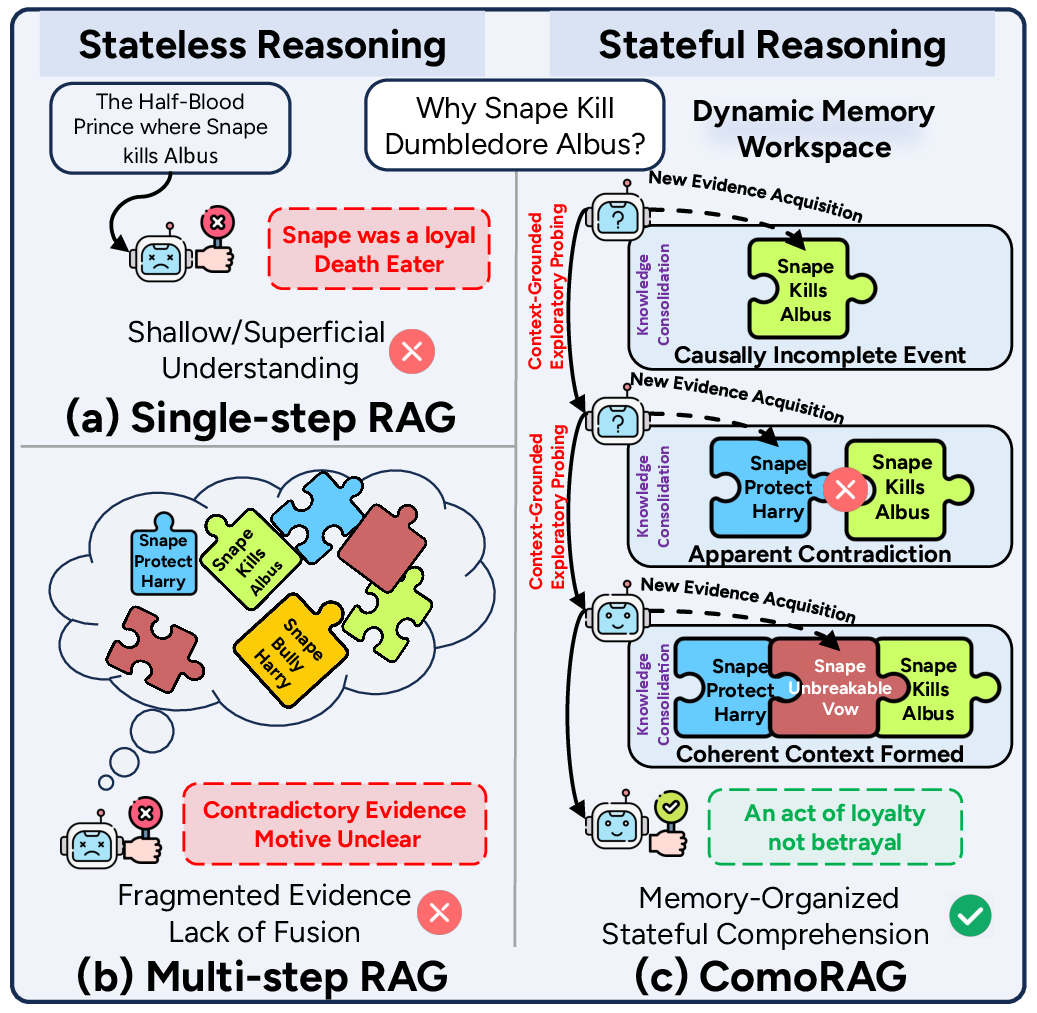

Abstract: Narrative comprehension on long stories and novels has been a challenging domain attributed to their intricate plotlines and entangled, often evolving relations among characters and entities. Given the LLM's diminished reasoning over extended context and high computational cost, retrieval-based approaches continue to play a pivotal role in practice. However, traditional RAG methods can fall short due to their stateless, single-step retrieval process, which often overlooks the dynamic nature of capturing interconnected relations within long-range context. In this work, we propose ComoRAG, holding the principle that narrative reasoning is not a one-shot process, but a dynamic, evolving interplay between new evidence acquisition and past knowledge consolidation, analogous to human cognition when reasoning with memory-related signals in the brain. Specifically, when encountering a reasoning impasse, ComoRAG undergoes iterative reasoning cycles while interacting with a dynamic memory workspace. In each cycle, it generates probing queries to devise new exploratory paths, then integrates the retrieved evidence of new aspects into a global memory pool, thereby supporting the emergence of a coherent context for the query resolution. Across four challenging long-context narrative benchmarks (200K+ tokens), ComoRAG outperforms strong RAG baselines with consistent relative gains up to 11% compared to the strongest baseline. Further analysis reveals that ComoRAG is particularly advantageous for complex queries requiring global comprehension, offering a principled, cognitively motivated paradigm for retrieval-based long context comprehension towards stateful reasoning. Our code is publicly released at https://github.com/EternityJune25/ComoRAG

Explain it Like I'm 14

What’s this paper about?

This paper introduces ComoRAG, a new way for AI to understand very long stories (like novels) and answer questions about them. Instead of trying to read everything at once, ComoRAG acts more like a thoughtful human reader: it remembers what it has learned, asks itself smaller follow-up questions when stuck, finds new clues, and keeps updating its understanding until it can explain the answer clearly.

The name “ComoRAG” comes from “Cognitive-Inspired Memory-Organized RAG”:

- “Cognitive-inspired” means it takes ideas from how the human brain reasons.

- “Memory-organized” means it keeps and uses a smart memory of what it has found.

- “RAG” stands for Retrieval-Augmented Generation, which is a technique where an AI looks up relevant text before answering.

What problem are they trying to solve?

Modern AI can read long texts, but:

- It has trouble keeping track of details across hundreds of pages.

- It often “forgets” important parts in the middle of a long input (the “lost in the middle” problem).

- Traditional RAG finds evidence in one quick step, which can miss how events and relationships change over time in a story.

The paper asks: How can an AI perform “stateful reasoning” — building and updating a mental model of the story — like a human reader does, especially for complex questions that rely on clues spread across the entire narrative?

What are the key goals?

In simple terms, the researchers want the AI to:

- Understand long stories by combining facts, themes, and the timeline of events.

- Keep a dynamic memory that grows and improves with each step.

- Ask smarter follow-up questions when it hits a dead end.

- Piece together clues into a coherent explanation (not just spot a single fact).

How does ComoRAG work?

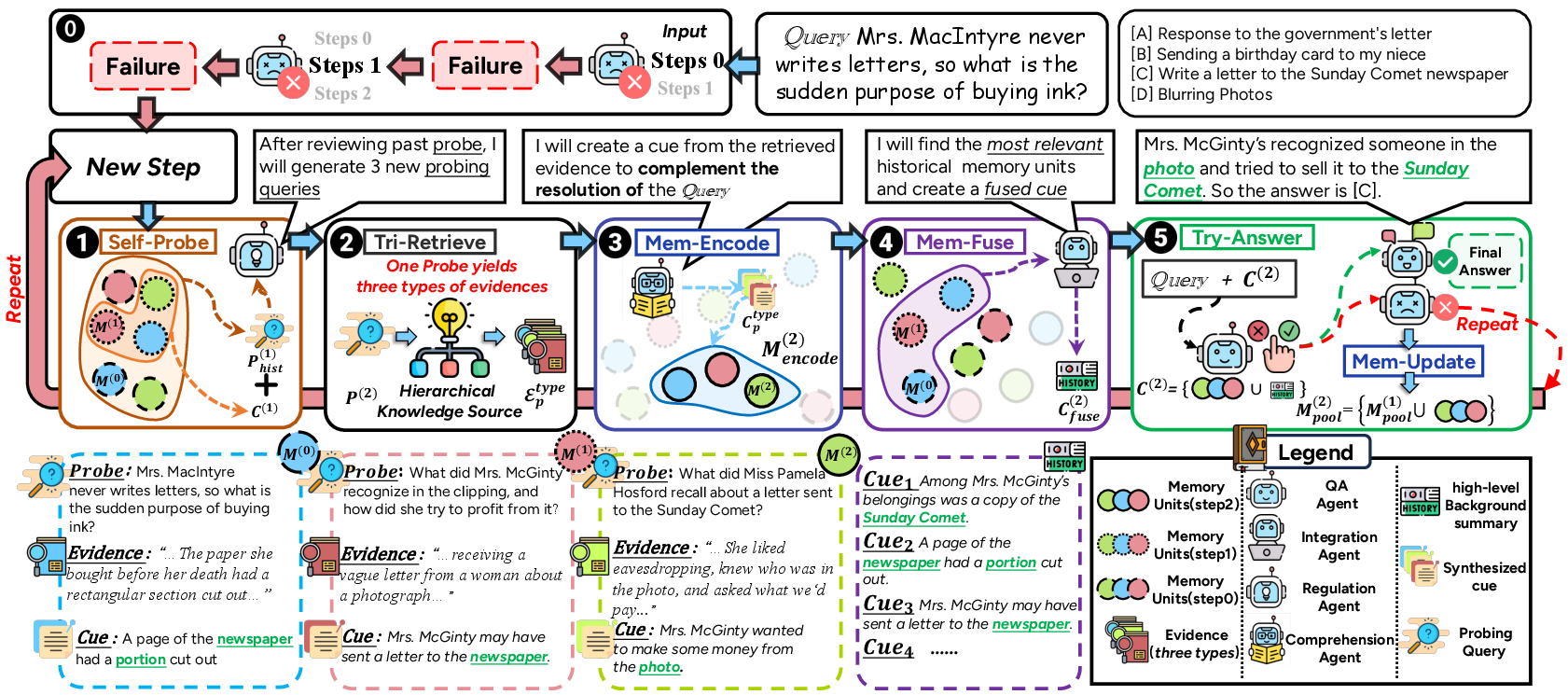

Think of ComoRAG as a detective reading a big book:

- It keeps a “workspace” (like a notebook) that stores clues and thoughts.

- When it can’t answer a question, it plans what to look for next, finds new evidence, and updates its notes.

To do this, it uses two big ideas:

1) A layered “library” of the story (Hierarchical Knowledge Source)

ComoRAG organizes the story in three helpful layers:

- Facts (Veridical Layer): The exact text and who-did-what details. This keeps answers grounded in evidence.

- Themes (Semantic Layer): Summaries of related chunks, grouped by meaning. This helps find the big ideas across many pages.

- Timeline (Episodic Layer): Summaries that follow the plot over time. This captures how events and motivations change.

Analogy: Imagine sorting a huge novel into:

- Sticky notes for quotes and facts,

- Chapter summaries by theme,

- A timeline chart showing what happened when.
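
The three layers can be pictured as one small data structure. The sketch below is only an illustration: the class name `KnowledgeSource`, the `retrieve` method, and the word-overlap score are assumptions made for this example, not the paper's implementation (a real system would use embedding-based retrieval rather than counting shared words).

```python
from dataclasses import dataclass, field


def overlap(query: str, text: str) -> int:
    """Toy relevance score: number of distinct words shared by query and text."""
    return len(set(query.lower().split()) & set(text.lower().split()))


@dataclass
class KnowledgeSource:
    """Hypothetical container for the three layers described above."""
    veridical: list[str] = field(default_factory=list)  # facts: raw text chunks
    semantic: list[str] = field(default_factory=list)   # themes: summaries grouped by meaning
    episodic: list[str] = field(default_factory=list)   # timeline: summaries in plot order

    def retrieve(self, query: str, top_k: int = 1) -> dict[str, list[str]]:
        """Return the top_k best-matching entries from each layer."""
        layers = {
            "veridical": self.veridical,
            "semantic": self.semantic,
            "episodic": self.episodic,
        }
        return {
            name: sorted(entries, key=lambda e: overlap(query, e), reverse=True)[:top_k]
            for name, entries in layers.items()
        }


# Made-up story snippets, used only to exercise the sketch.
source = KnowledgeSource(
    veridical=["The butler hid the key under the stairs.", "Rain fell all night."],
    semantic=["Theme: servants keep secrets from the family."],
    episodic=["Early chapters: the key goes missing; later the butler confesses."],
)
hits = source.retrieve("Where did the butler hide the key?")
```

Each query returns evidence from all three layers at once, so an answer can draw on an exact quote, a theme, and the order of events together.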

2) A “metacognitive” loop (reasoning with memory)

When the AI can’t answer a question right away, it enters an iterative loop:

- Plan: Decide on a few small, targeted follow-up questions (“What should I check next to better understand the main question?”).

- Retrieve: Look up evidence from the facts, themes, and timeline layers.

- Reflect: Summarize why the new evidence matters for the original question.

- Fuse: Combine new clues with what it already knows.

- Try Answering: Attempt the final answer. If it’s still not enough, repeat the loop.

This loop continues for a few rounds (usually 2–3) until the AI builds a coherent view of the story that supports a correct answer.
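
The loop can be sketched in a few lines of code. This is a simplified illustration under stated assumptions: `reasoning_loop` and its four callback parameters are hypothetical names, the Fuse step is reduced to appending notes to a list, and the toy callbacks stand in for what would be LLM and retriever calls in a real system.

```python
from typing import Callable, Optional


def reasoning_loop(
    question: str,
    plan: Callable[[str, list[str]], list[str]],
    retrieve: Callable[[str], str],
    reflect: Callable[[str, str, str], str],
    try_answer: Callable[[str, list[str]], Optional[str]],
    max_cycles: int = 3,
) -> Optional[str]:
    memory: list[str] = []                  # notes carried across cycles (the "state")
    answer = try_answer(question, memory)   # first attempt, before any probing
    cycles = 0
    while answer is None and cycles < max_cycles:
        for probe in plan(question, memory):            # Plan
            evidence = retrieve(probe)                  # Retrieve
            note = reflect(question, probe, evidence)   # Reflect
            memory.append(note)                         # Fuse (here: simple accumulation)
        answer = try_answer(question, memory)           # Try Answering
        cycles += 1
    return answer


# Toy stand-ins (assumptions for this example only).
FACTS = {
    "who entered the study": "The key owner entered the study.",
    "who owned the key": "The butler owned the key.",
}
PROBES = list(FACTS)


def toy_plan(question: str, memory: list[str]) -> list[str]:
    # Ask one new sub-question per cycle until the probes run out.
    return [PROBES[len(memory)]] if len(memory) < len(PROBES) else []


def toy_retrieve(probe: str) -> str:
    return FACTS.get(probe, "")


def toy_reflect(question: str, probe: str, evidence: str) -> str:
    return f"{probe} -> {evidence}"


def toy_try_answer(question: str, memory: list[str]) -> Optional[str]:
    # Pretend the question needs both clues before it can be answered.
    return "the butler" if len(memory) >= 2 else None


result = reasoning_loop(
    "Who entered the study?", toy_plan, toy_retrieve, toy_reflect, toy_try_answer
)
```

With these toy callbacks the first cycle collects one clue and fails to answer, and the second cycle collects the other clue and succeeds. This mirrors the idea that the answer emerges only after evidence from several probes has been combined.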

A memory “unit” is like a sticky note with:

- The sub-question it asked,

- The evidence it found,

- A brief cue explaining how this helps answer the main question.
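
As a rough sketch, one memory unit could be represented as a small record with those three fields. The names `MemoryUnit`, `probe`, `evidence`, `cue`, and `render_pool` are assumptions chosen for this illustration and may not match the released code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryUnit:
    probe: str     # the sub-question that was asked
    evidence: str  # the text retrieved for that sub-question
    cue: str       # short note on how the evidence helps the main question


def render_pool(pool: list[MemoryUnit]) -> str:
    """Format the memory pool as plain text that could be placed in a prompt."""
    return "\n".join(
        f"[{i}] Q: {u.probe} | Evidence: {u.evidence} | Cue: {u.cue}"
        for i, u in enumerate(pool, start=1)
    )


# Made-up example content.
pool = [
    MemoryUnit(
        probe="Who owned the key?",
        evidence="The butler owned the key.",
        cue="Only the key owner could have opened the study.",
    )
]
text = render_pool(pool)
```

Keeping the cue next to the evidence means later cycles can see not only what was found but also why it mattered.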

What did they find?

The team tested ComoRAG on four tough benchmarks with very long texts (often over 200,000 tokens, roughly hundreds of pages). They compared it to strong methods, including single-step RAG and multi-step RAG systems.

Main results:

- ComoRAG consistently beat all baselines across all datasets.

- On especially long stories, it was much more robust (it kept working well as texts got longer).

- It excelled at complex questions that require global understanding of the plot, not just fact lookup.

- Most of the improvement came from the iterative reasoning loop (doing 2–3 cycles made a big difference).

- It’s modular and flexible: adding ComoRAG’s loop on top of other RAG systems (like RAPTOR or HippoRAGv2) made those systems better.

- Using a stronger LLM (like GPT-4.1) inside the loop boosted performance even more.

In numbers (simplified highlights):

- On a multiple-choice benchmark for long novels (EN.MC), ComoRAG reached about 73% accuracy with a mid-size model, and up to about 78% with a stronger model. That’s notably higher than other advanced RAG methods.

- On free-form question answering, it improved both F1 score and exact matches, especially on global/narrative questions.
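
For readers unfamiliar with the two free-form metrics, here is a minimal sketch of how exact match and token-level F1 are commonly computed. The text normalization (lowercasing and whitespace splitting) is an assumption; the paper's evaluation scripts may normalize answers differently.

```python
from collections import Counter


def exact_match(prediction: str, reference: str) -> bool:
    """True when the two answers are identical after trimming and lowercasing."""
    return prediction.strip().lower() == reference.strip().lower()


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall between two answers."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    # Multiset intersection counts each shared token at most as often as it
    # appears in both answers.
    common = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if common == 0:
        return 0.0
    precision = common / len(pred_tokens)
    recall = common / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


score = token_f1("the old butler", "the butler")
match = exact_match("the old butler", "the butler")
```

In this example the prediction shares two of its three tokens with the reference (precision 2/3) and covers both reference tokens (recall 1), so F1 is 0.8 while exact match is false. F1 rewards partially correct answers that exact match would score as zero.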

Why is this important?

Understanding long stories is more than finding a quote; it requires building and updating a mental model of characters, motives, and events. ComoRAG shows that:

- AI can perform more “human-like” reading by probing for new clues and consolidating them in memory.

- A dynamic memory and an iterative reasoning process are key to handling long, interconnected narratives.

- This approach reduces mistakes caused by one-shot retrieval, which may grab misleading or incomplete evidence.

What’s the potential impact?

- Better reading assistants: AI that can truly understand novels, biographies, and reports—not just skim.

- Reliable research tools: Systems that can gather, link, and explain evidence across many sources.

- Smarter tutoring: Educational AIs that can guide students through complex texts, asking helpful follow-up questions and explaining reasoning.

- Strong building block: ComoRAG’s loop can be plugged into other RAG systems, making them more powerful without starting from scratch.

In short, ComoRAG pushes AI beyond “find a fact” toward “understand the story,” using memory and thoughtful, step-by-step reasoning—much like how a careful reader solves a mystery across many chapters.
