The paper introduces EM-LLM, a novel architecture that integrates principles of human episodic memory and event cognition into LLMs to improve their ability to process extended contexts. EM-LLM targets a known weakness of existing LLMs: they struggle to maintain coherence and accuracy over long sequences. This weakness stems from two properties of Transformer-based architectures: they extrapolate poorly to contexts longer than their training window, and softmax attention becomes computationally expensive over extended token sequences.

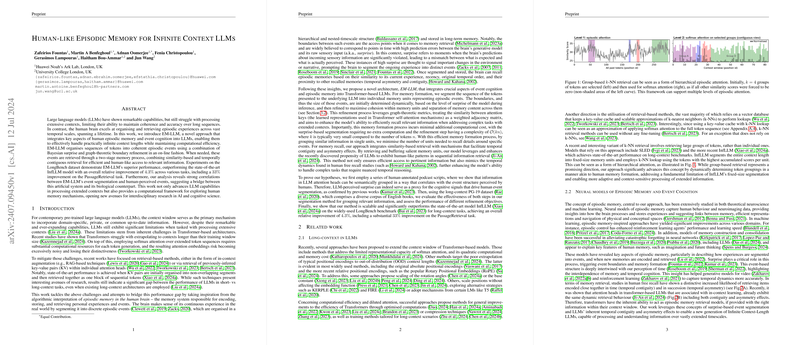

The core idea is to segment sequences of tokens into episodic events, drawing inspiration from how the human brain organizes and retrieves episodic experiences. This segmentation is achieved through a combination of Bayesian surprise and graph-theoretic boundary refinement. The episodic events are then retrieved using a two-stage memory process that combines similarity-based and temporally contiguous retrieval, mimicking the way humans access relevant information.

The architecture of EM-LLM divides the context into initial tokens, evicted tokens, and local context. The local context, which contains the most recent tokens, resides within the typical context window of the underlying LLM and utilizes full softmax attention. The evicted tokens, which constitute the majority of past tokens, are managed by the memory model, while the initial tokens act as attention sinks.
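To make the three-way split concrete, here is a minimal Python sketch, assuming the context is a flat list of token IDs. The function `split_context` and its parameters `n_init` (number of initial attention-sink tokens) and `n_local` (size of the local window) are hypothetical names chosen for illustration, not the paper's code:

```python
from typing import List, Tuple


def split_context(
    tokens: List[int], n_init: int, n_local: int
) -> Tuple[List[int], List[int], List[int]]:
    """Split a token sequence into (initial, evicted, local) segments.

    Hypothetical helper: n_init is the number of attention-sink tokens kept
    at the start, n_local the number of most recent tokens kept in the local
    window that receives full softmax attention.
    """
    if n_init < 0 or n_local < 0:
        raise ValueError("segment sizes must be non-negative")
    initial = tokens[:n_init]
    # The local window never overlaps the initial tokens.
    local_start = max(n_init, len(tokens) - n_local)
    local = tokens[local_start:]
    # Everything in between is evicted and handed to the episodic memory.
    evicted = tokens[n_init:local_start]
    return initial, evicted, local


initial, evicted, local = split_context(list(range(10)), n_init=2, n_local=3)
print(initial, evicted, local)  # [0, 1] [2, 3, 4, 5, 6] [7, 8, 9]
```

In this picture, the evicted segment is what the memory model segments and stores, while the local segment is the only part that receives full softmax attention.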

The memory formation process involves segmenting the sequence of tokens into individual memory units that represent episodic events. Boundaries are initially determined dynamically based on the surprise of the model during inference, quantified by the negative log-likelihood of observing the current token given the previous tokens:

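$$-\log P(x_t \mid x_1, \ldots, x_{t-1}; \theta) > T,$$

where:

- $P(x_t \mid x_1, \ldots, x_{t-1}; \theta)$ is the conditional probability of token $x_t$ given the preceding tokens $x_1$ to $x_{t-1}$ and model parameters $\theta$,

- $T$ is a threshold value.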
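Treating the threshold as a constant for the moment, the boundary rule can be sketched in Python as follows. The sketch assumes `token_probs[t]` already holds the probability the model assigned to the token actually observed at position `t`; the function names `surprise` and `fixed_threshold_boundaries` are hypothetical:

```python
import math
from typing import List, Sequence


def surprise(token_probs: Sequence[float]) -> List[float]:
    """Surprise of each observed token: -log P(x_t | x_1..x_{t-1}; theta).

    token_probs[t] is assumed to be the probability the model assigned to
    the token that actually appeared at position t.
    """
    return [-math.log(p) for p in token_probs]


def fixed_threshold_boundaries(token_probs: Sequence[float], T: float) -> List[int]:
    """Positions whose surprise exceeds the constant threshold T."""
    return [t for t, s in enumerate(surprise(token_probs)) if s > T]


print(fixed_threshold_boundaries([0.9, 0.8, 0.01, 0.7, 0.05], T=2.0))  # [2, 4]
```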

The threshold is defined as:

$$T = \mu_{t-\tau} + \gamma \sigma_{t-\tau},$$

where:

- $\mu_{t-\tau}$ is the mean surprise over a window offset by $\tau$,

- $\sigma^2_{t-\tau}$ is the variance of surprise over the same window,

- $\gamma$ is a scaling factor.
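The adaptive threshold can be sketched as below. How the window "offset by $\tau$" is positioned is an assumption here: the sketch uses the $\tau$ surprise values immediately preceding position $t$ and the population standard deviation. `adaptive_boundaries` is a hypothetical name:

```python
import math
from typing import List, Sequence


def adaptive_boundaries(surprises: Sequence[float], tau: int, gamma: float) -> List[int]:
    """Mark t as a boundary when surprises[t] > mu + gamma * sigma.

    Assumption: mu and sigma are the mean and standard deviation of the tau
    surprise values immediately before t (the window [t - tau, t)). The
    first tau positions lack a full window and are never marked.
    """
    if tau < 1:
        raise ValueError("tau must be at least 1")
    boundaries = []
    for t in range(tau, len(surprises)):
        window = surprises[t - tau:t]
        mu = sum(window) / tau
        var = sum((s - mu) ** 2 for s in window) / tau  # population variance
        if surprises[t] > mu + gamma * math.sqrt(var):
            boundaries.append(t)
    return boundaries


print(adaptive_boundaries([1.0, 2.0, 1.0, 2.0, 2.5], tau=4, gamma=1.0))  # [4]
print(adaptive_boundaries([1.0, 2.0, 1.0, 2.0, 2.5], tau=4, gamma=2.0))  # []
```

Raising $\gamma$ makes the rule stricter: the same spike in surprise counts as a boundary only if it stands out relative to how much surprise has recently fluctuated.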

These boundaries are then refined to maximize cohesion within memory units and separation of memory content across them. The refinement leverages graph-theoretic metrics, treating the similarity between attention keys as a weighted adjacency matrix.
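A minimal sketch of building such an adjacency matrix for one attention head from its key vectors. Using the raw dot product as the similarity and clipping negative values to zero (so that edge weights are non-negative, as graph-clustering metrics usually expect) are both assumptions, and `key_adjacency` is a hypothetical name:

```python
from typing import List, Sequence


def key_adjacency(keys: Sequence[Sequence[float]]) -> List[List[float]]:
    """Weighted adjacency matrix for one attention head.

    A[i][j] is the dot product of key vectors k_i and k_j, clipped at zero
    so that every edge weight is non-negative (clipping is an assumption).
    """
    n = len(keys)
    return [
        [max(0.0, sum(a * b for a, b in zip(keys[i], keys[j]))) for j in range(n)]
        for i in range(n)
    ]


print(key_adjacency([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]))
# [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0], [1.0, 1.0, 2.0]]
```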

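Two graph-clustering metrics are employed: modularity and conductance. Modularity, $f_M$, is defined as:

$$f_M(A^h, \mathcal{B}) = \frac{1}{2m} \sum_{i,j} \left[ A^h_{ij} - \frac{\left(\sum_{l} A^h_{il}\right)\left(\sum_{l} A^h_{lj}\right)}{2m} \right] \delta(c_i, c_j),$$

where:

- $A^h$ is the adjacency matrix for attention head $h$,

- $\mathcal{B}$ is the set of event boundaries,

- $m$ is the total edge weight in the graph,

- $c_i$ is the community to which node $i$ is assigned,

- $\delta$ is the Kronecker delta function.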
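The sketch below computes modularity for a segmentation and then applies one greedy refinement pass that moves each boundary, in turn, to the position between its neighbors that maximizes modularity. It assumes a symmetric adjacency matrix, boundaries given as the start indices of events (each in `1..n-1`), and the usual convention $2m = \sum_{i,j} A^h_{ij}$. The greedy pass and the names `community_labels`, `modularity` and `refine_boundaries` are illustrative assumptions, not necessarily the authors' exact refinement procedure:

```python
from bisect import bisect_right
from typing import List, Sequence


def community_labels(n: int, boundaries: Sequence[int]) -> List[int]:
    """c_i for each token, given event start positions in 1..n-1."""
    b = sorted(boundaries)
    return [bisect_right(b, i) for i in range(n)]


def modularity(A: Sequence[Sequence[float]], boundaries: Sequence[int]) -> float:
    """Newman modularity of the segmentation, with 2m = sum_ij A_ij."""
    n = len(A)
    c = community_labels(n, boundaries)
    two_m = sum(sum(row) for row in A)
    if two_m == 0:
        return 0.0
    k = [sum(row) for row in A]  # weighted degree (A assumed symmetric)
    q = 0.0
    for i in range(n):
        for j in range(n):
            if c[i] == c[j]:  # Kronecker delta on community labels
                q += A[i][j] - k[i] * k[j] / two_m
    return q / two_m


def refine_boundaries(A: Sequence[Sequence[float]], boundaries: Sequence[int]) -> List[int]:
    """Greedy sketch: move each boundary in turn to the position between its
    neighboring boundaries that maximizes modularity."""
    n = len(A)
    b = sorted(boundaries)
    for idx in range(len(b)):
        lo = b[idx - 1] + 1 if idx > 0 else 1
        hi = b[idx + 1] - 1 if idx + 1 < len(b) else n - 1
        b[idx] = max(range(lo, hi + 1),
                     key=lambda p: modularity(A, b[:idx] + [p] + b[idx + 1:]))
    return b


# Two blocks of mutually similar tokens: {0, 1, 2} and {3, 4}.
A = [[1.0 if (i < 3) == (j < 3) else 0.0 for j in range(5)] for i in range(5)]
print(refine_boundaries(A, [1]))  # [3]
```

Each modularity evaluation here is quadratic in the number of tokens, so a practical implementation would update the score incrementally; the sketch favors readability.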

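Conductance, $f_C$, is defined as:

$$f_C(A^h, \mathcal{B}) = \min_{S \subset V} \frac{\sum_{i \in S,\, j \notin S} A^h_{ij}}{\min\left(\mathrm{vol}(S), \mathrm{vol}(V \setminus S)\right)},$$

where:

- $S$ is a subset of $V$, the set of all nodes in the induced graph,

- $\mathrm{vol}(S) = \sum_{i \in S,\, j \in S} A^h_{ij}$,

- $\mathrm{vol}(V \setminus S) = \sum_{i \notin S,\, j \notin S} A^h_{ij}$.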
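A corresponding sketch for conductance, taking each event induced by the boundaries as a candidate subset $S$ and using the intra-set volume $\mathrm{vol}(S) = \sum_{i \in S, j \in S} A^h_{ij}$. Restricting $S$ to the events themselves, and skipping events whose denominator is zero, are assumptions of this sketch; `conductance` is a hypothetical name. Lower values indicate better-separated events:

```python
import math
from typing import Sequence


def conductance(A: Sequence[Sequence[float]], boundaries: Sequence[int]) -> float:
    """min over events S of cut(S) / min(vol(S), vol(V minus S)).

    vol(.) sums A over pairs inside a node set. Events whose denominator is
    zero are skipped; returns math.inf if every event is skipped (e.g. when
    there are no boundaries at all).
    """
    n = len(A)
    edges = [0] + sorted(boundaries) + [n]
    best = math.inf
    for start, end in zip(edges, edges[1:]):
        inside = set(range(start, end))
        outside = [j for j in range(n) if j not in inside]
        cut = sum(A[i][j] for i in inside for j in outside)
        vol_s = sum(A[i][j] for i in inside for j in inside)
        vol_rest = sum(A[i][j] for i in outside for j in outside)
        denom = min(vol_s, vol_rest)
        if denom > 0:
            best = min(best, cut / denom)
    return best


A = [[1.0, 1.0, 0.0, 0.0], [1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
print(conductance(A, [2]))  # 0.0  (boundary between the two blocks)
print(conductance(A, [1]))  # 1.0  (boundary cuts through a block)
```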

For memory retrieval, the approach integrates similarity-based retrieval with mechanisms that facilitate temporal contiguity and asymmetry effects. Retrieval proceeds in two stages: events are first retrieved with a k-NN search based on dot-product similarity to form a similarity buffer, and their temporally neighboring events are then enqueued into a contiguity buffer of size $k_c$.
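The two-stage retrieval can be sketched as follows. Each event is summarized by one representative vector, which is an assumption of this sketch, and the contiguity buffer is modeled as a bounded FIFO queue (`collections.deque` with a `maxlen`) that can be carried across decoding steps. The names `retrieve`, `event_reprs`, `k_s` (similarity-buffer size) and `k_c` (contiguity-buffer size) are illustrative:

```python
from collections import deque
from typing import Deque, List, Optional, Sequence, Tuple


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def retrieve(
    query: Sequence[float],
    event_reprs: Sequence[Sequence[float]],
    k_s: int,
    k_c: int,
    contiguity: Optional[Deque[int]] = None,
) -> Tuple[List[int], Deque[int]]:
    """Two-stage retrieval over events indexed in temporal order.

    Stage 1: k-NN by dot product fills the similarity buffer with k_s events.
    Stage 2: the temporal neighbors (i - 1, i + 1) of each retrieved event
    are enqueued into a FIFO contiguity buffer of at most k_c events. If an
    existing buffer is passed in, its own maxlen is kept.
    """
    scores = [dot(query, r) for r in event_reprs]
    order = sorted(range(len(event_reprs)), key=lambda i: scores[i], reverse=True)
    similarity = order[:k_s]
    if contiguity is None:
        contiguity = deque(maxlen=k_c)
    for i in similarity:
        for nb in (i - 1, i + 1):
            if 0 <= nb < len(event_reprs) and nb not in similarity and nb not in contiguity:
                contiguity.append(nb)  # oldest entry drops out once full
    return similarity, contiguity


reprs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0], [2.0, 0.0]]
sim, cont = retrieve([1.0, 0.0], reprs, k_s=2, k_c=3)
print(sim, list(cont))  # [4, 0] [3, 1]
```

Because neighbors are enqueued rather than ranked, an event that is only temporally adjacent to a strong match can still reach the context, which mirrors the temporal contiguity effect described above.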

The model was evaluated on the LongBench dataset, and it outperformed the state-of-the-art InfLLM model, achieving an overall relative improvement of . Notably, the model achieved a improvement on the PassageRetrieval task, which requires accurate recall of detailed information from a large context.

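The paper also presents an analysis of human-annotated podcast scripts, which reveals strong correlations between the LLM's attention heads and human-perceived event structures. Segmentation quality and correlation with human data were compared using modularity and conductance, as well as the ratio between intra- and inter-community similarity, defined as:

$$\frac{\mathrm{intra}(A^h, \mathcal{B})}{\mathrm{inter}(A^h, \mathcal{B})},$$

where:

- $\mathrm{intra}(A^h, \mathcal{B})$ measures the similarity $A^h_{ij}$ between tokens $i$ and $j$ that belong to the same community $C$,

- $\mathrm{inter}(A^h, \mathcal{B})$ measures the similarity between tokens that belong to different communities,

- $C$ represents the tokens in a community.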
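A sketch of this ratio, assuming both terms are plain means of $A^h_{ij}$ over token pairs (excluding self-pairs) within the same community and across different communities. The exact normalization used in the paper may differ, and `intra_inter_ratio` is a hypothetical name:

```python
import math
from bisect import bisect_right
from typing import Sequence


def intra_inter_ratio(A: Sequence[Sequence[float]], boundaries: Sequence[int]) -> float:
    """Mean within-community similarity divided by mean between-community
    similarity, excluding self-pairs (the use of plain means is assumed)."""
    n = len(A)
    b = sorted(boundaries)
    c = [bisect_right(b, i) for i in range(n)]  # community label of each token
    intra_sum = inter_sum = 0.0
    intra_cnt = inter_cnt = 0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if c[i] == c[j]:
                intra_sum += A[i][j]
                intra_cnt += 1
            else:
                inter_sum += A[i][j]
                inter_cnt += 1
    if intra_cnt == 0 or inter_cnt == 0:
        raise ValueError("need at least one within- and one between-community pair")
    if inter_sum == 0:
        return math.inf  # perfectly separated communities
    return (intra_sum / intra_cnt) / (inter_sum / inter_cnt)


A = [[1.0, 0.9, 0.1, 0.1], [0.9, 1.0, 0.1, 0.1],
     [0.1, 0.1, 1.0, 0.8], [0.1, 0.1, 0.8, 1.0]]
print(round(intra_inter_ratio(A, [2]), 3))  # 8.5
```

Higher values indicate more cohesive, better-separated events; moving the boundary in this example to position 1 drops the ratio below 1.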

The results indicate that surprise-only segmentation achieves scores similar to those of human segmentation on these metrics, and that adding the boundary refinement algorithm improves them further. Surprise-based methods also identify the event boundaries that are closest to those perceived by humans.

The authors compared their method with other long-context approaches, including methods that target the limitations of softmax attention, positional encodings, and computational efficiency. Unlike InfLLM, which segments the context into fixed-size memory units, EM-LLM determines token groupings dynamically, similar to human memory formation.

The authors suggest that future research could extend the surprise-based segmentation and boundary refinement to operate independently at each layer of the Transformer, and investigate how EM-LLM could be used to enable imagination and future thinking.