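- The paper presents OmniPianist, a robotic piano-playing agent trained without human demonstrations, in which an optimal transport (OT) formulation assigns fingers to keys automatically instead of relying on human-annotated fingering.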

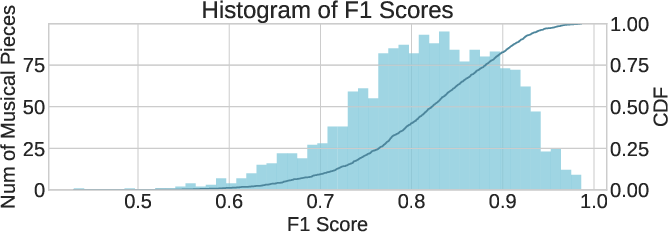

- It trains a Flow Matching Transformer by imitation on the RP1M++ dataset, achieving robust performance across many music pieces and generalization to new ones.

- The approach advances robotic dexterity by automating fingering decisions, which makes RL training on complex musical tasks more efficient.

Dexterous Robotic Piano Playing at Scale

Introduction

The paper "Dexterous Robotic Piano Playing at Scale" (2511.02504) tackles two long-standing challenges in robotics: dexterity and scalability. It presents OmniPianist, an agent that performs a large repertoire of music pieces without any human demonstrations by combining reinforcement learning (RL) specialists with large-scale imitation learning. The work is a step toward human-level robotic dexterity in complex tasks.

Core Components of the Method

The research introduces three key innovations:

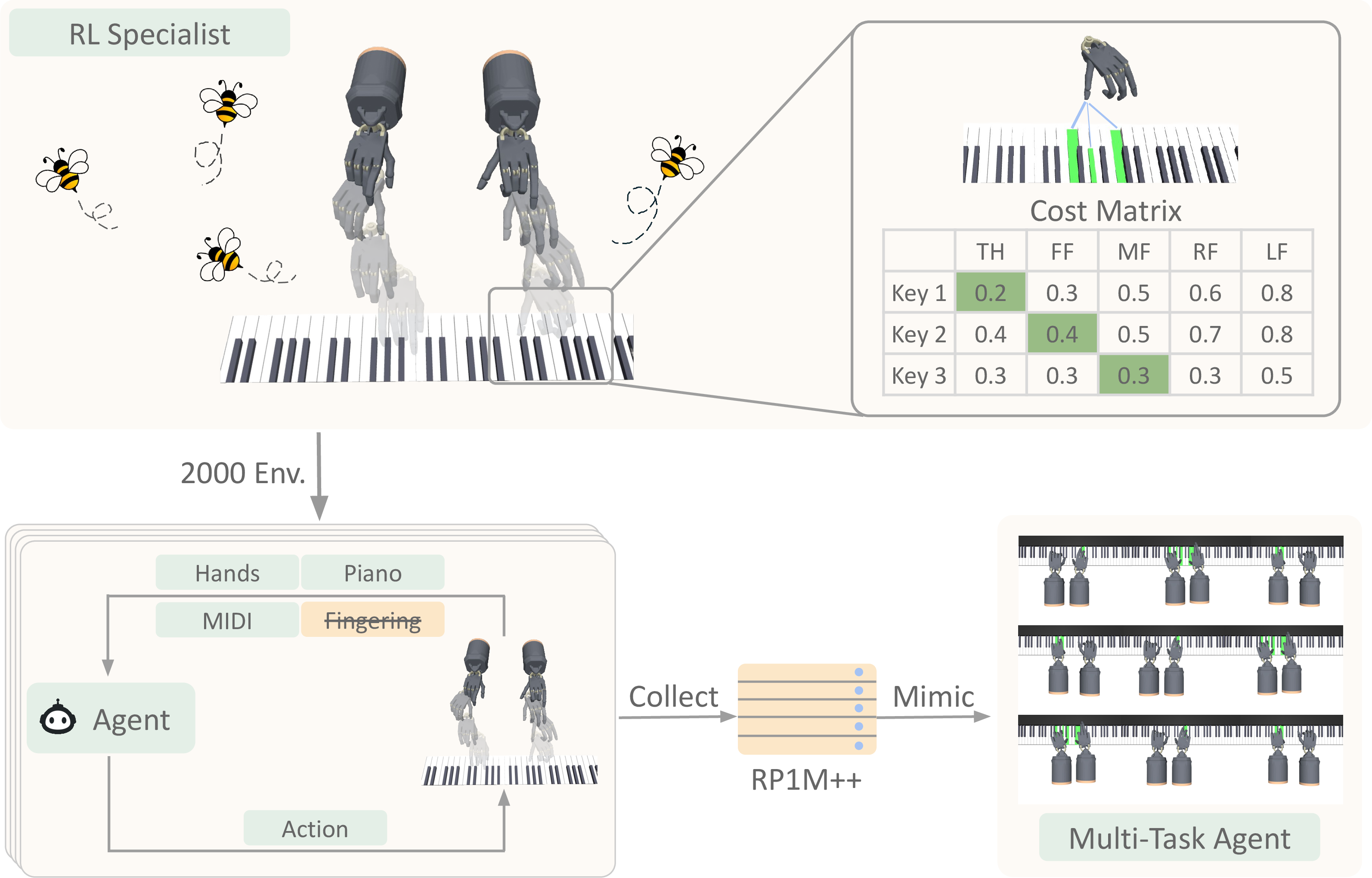

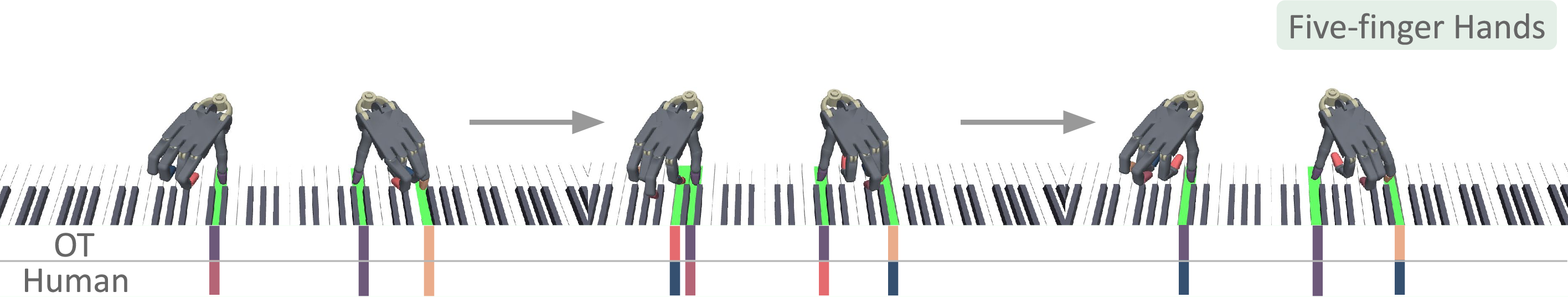

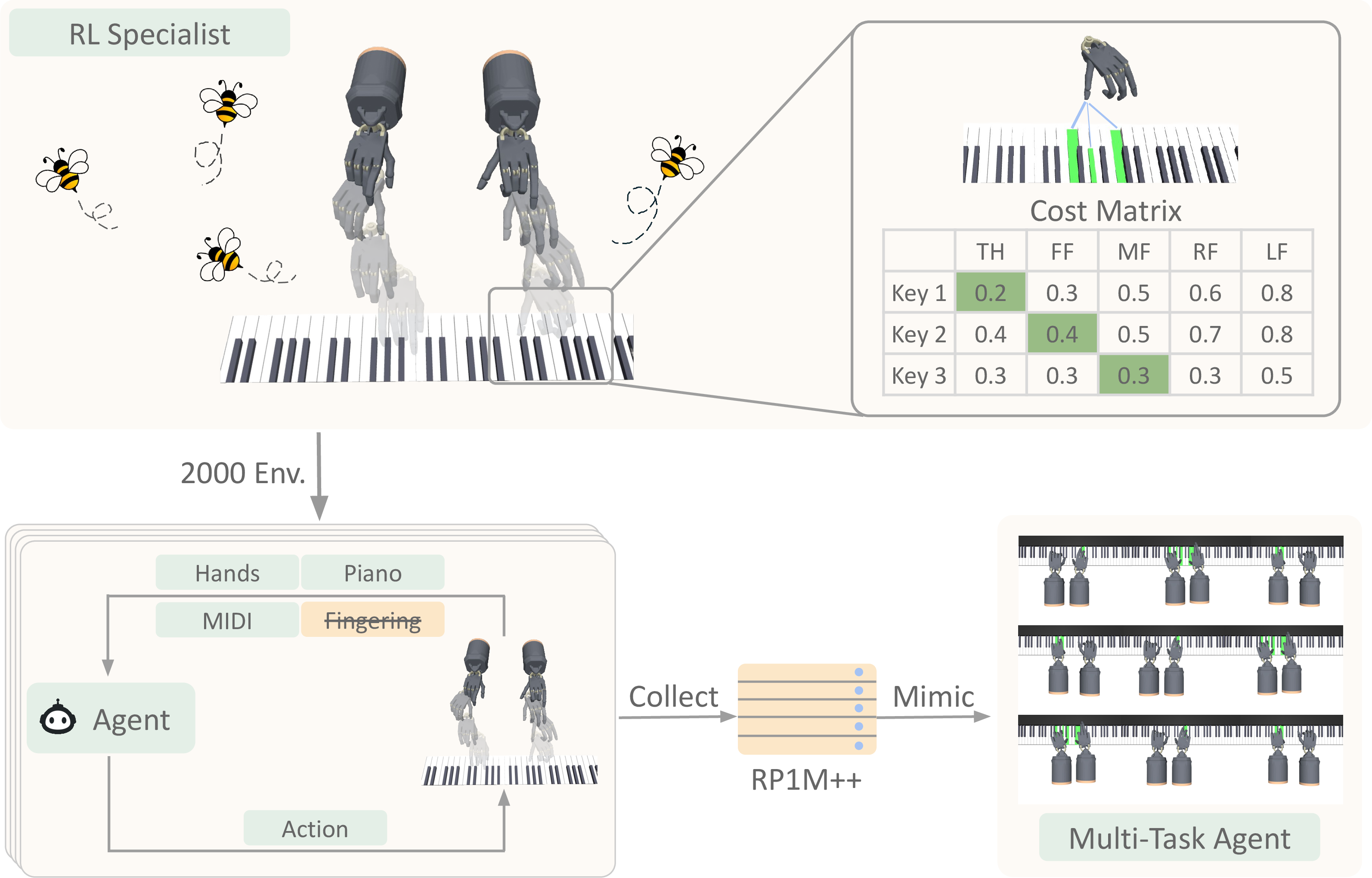

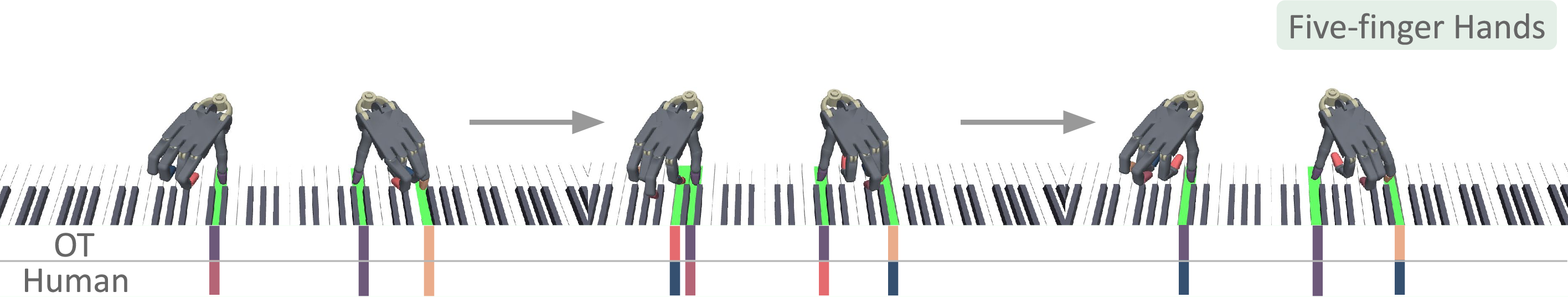

- Automatic Fingering Strategy: An Optimal Transport (OT) formulation removes the dependency on human-annotated fingering, so the robotic agent discovers efficient piano-playing strategies on its own (Figure 1).

Figure 1: Overview of the proposed RL agent using the OT formulation for autonomous piano playing.

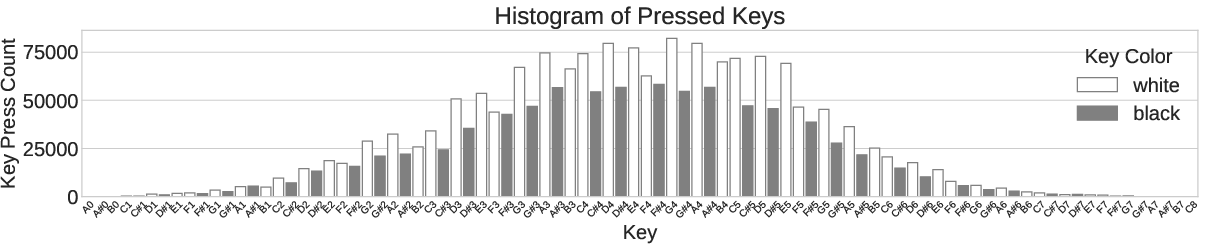

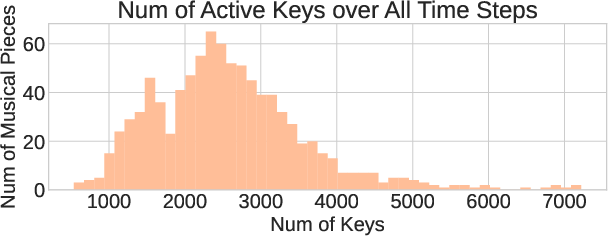

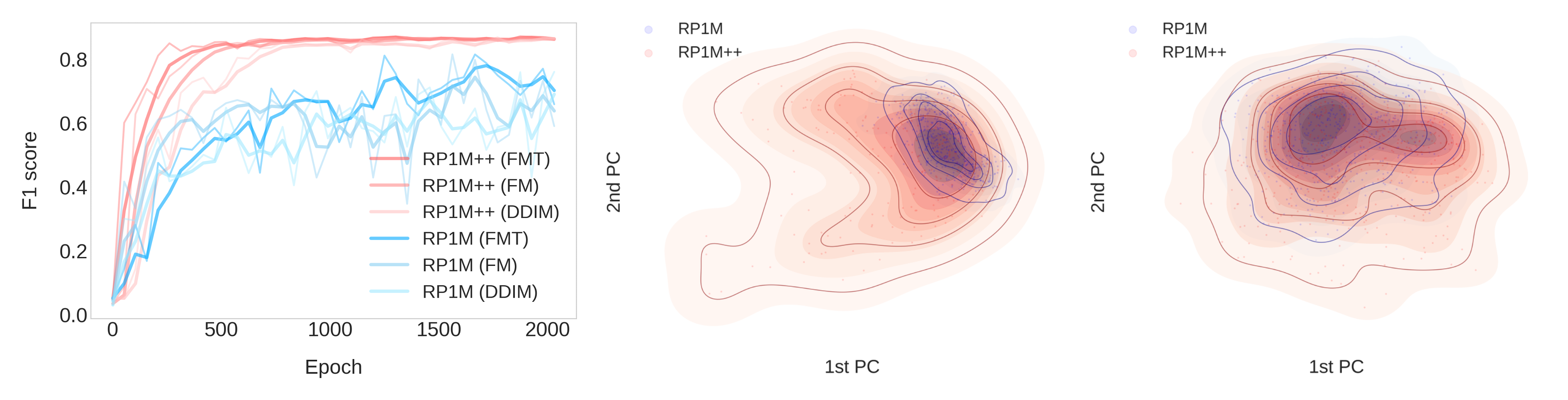

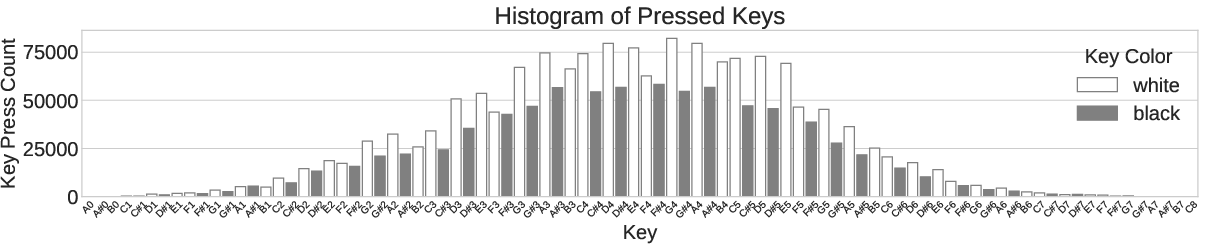

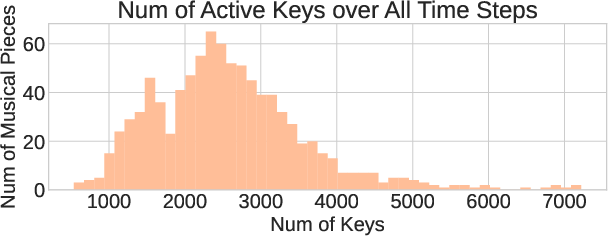

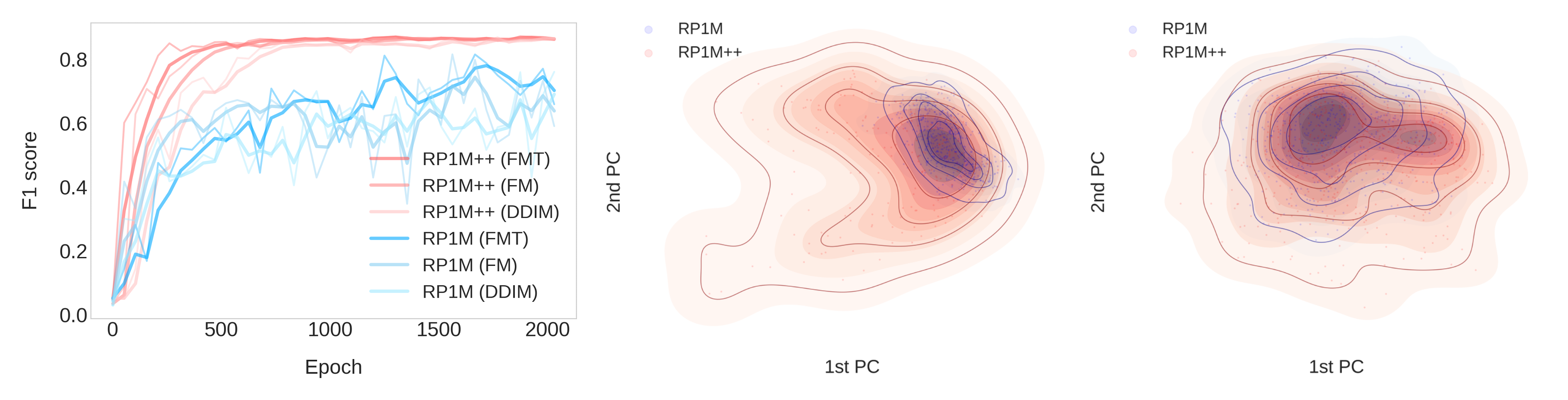

- Large-Scale Dataset (RP1M++): RP1M++ contains over one million trajectories collected from specialist RL agents, each trained on a distinct music piece. This breadth provides the coverage and diversity needed for robust performance (Figure 2).

Figure 2: Dataset statistics showing diversity and coverage in RP1M++.

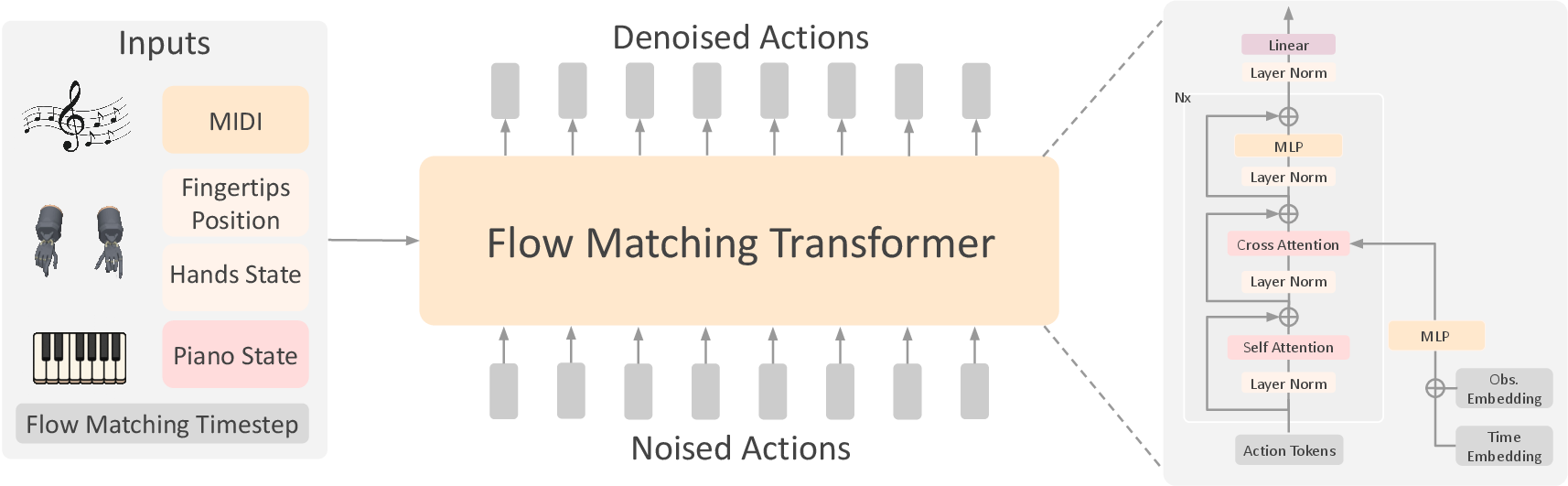

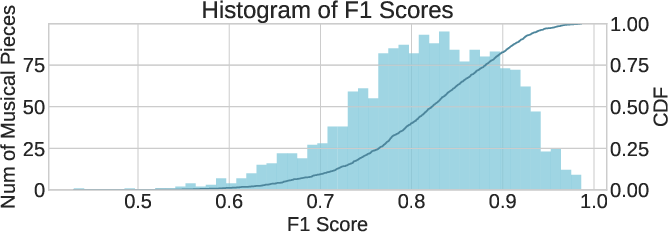

- Flow Matching Transformer: Trained by imitation learning on RP1M++, this model yields a single skilled agent that performs diverse pieces and generalizes well to new songs (Figure 3).

Figure 3: Flow Matching Transformer architecture used in OmniPianist.

Implementation Details

Optimal Transport for Fingering

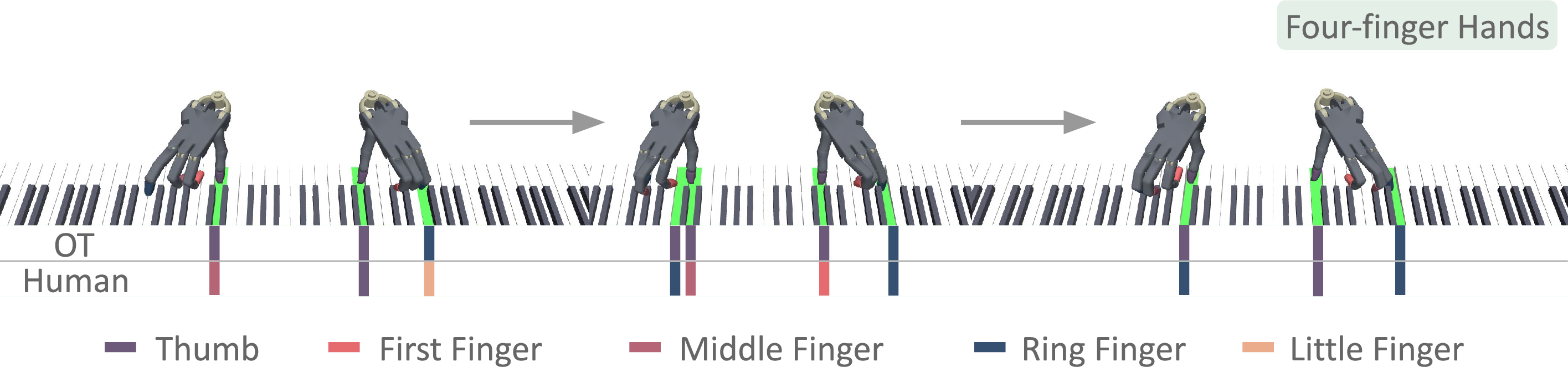

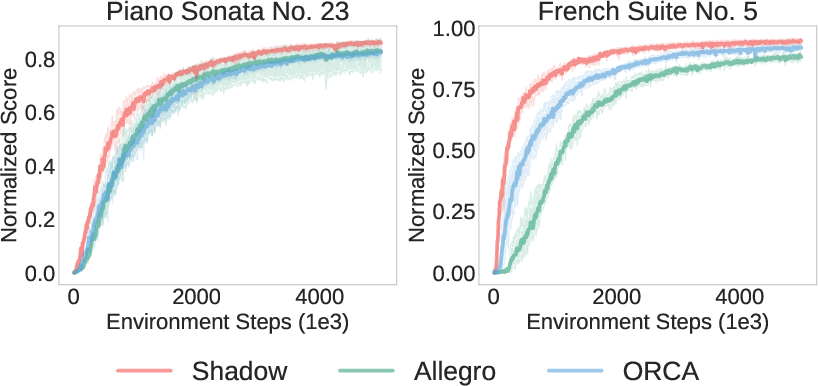

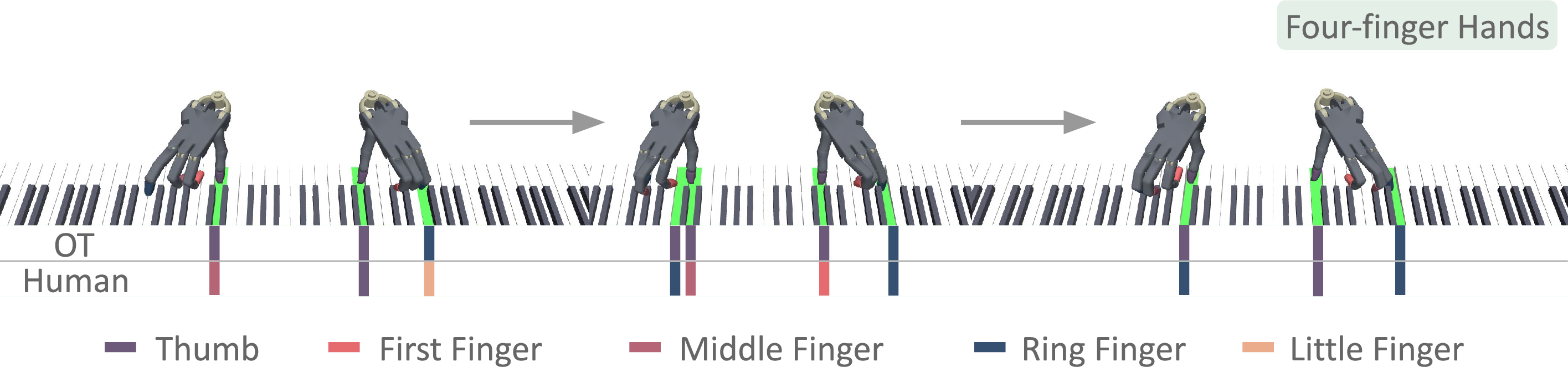

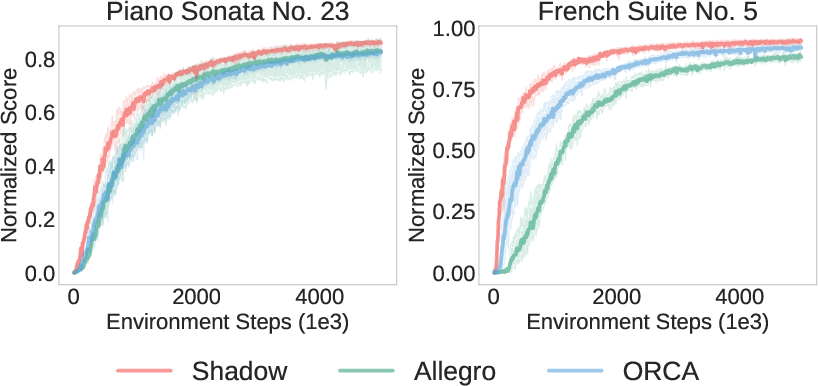

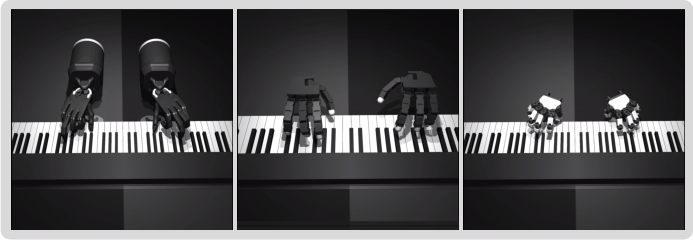

At each time step, the OT formulation determines which finger should press each required key by solving a transport problem between fingers and keys, which significantly reduces the exploration space for RL. Because fingering is computed rather than annotated by humans, the strategy adapts to different robotic hand designs and transfers across embodiments (Figure 4 and Figure 5).

Figure 4: Comparison of agent-discovered and human-annotated fingering.

Figure 5: Performance across different robotic embodiments.
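To make the mechanism concrete, here is a minimal Python sketch of OT-based fingering under simplifying assumptions that are ours, not the paper's: fingertips and keys are points, the transport cost is Euclidean distance, each key to press receives exactly one finger, and no finger is shared. With unit masses, the OT problem then reduces to a minimum-cost assignment. The reward function `fingering_reward` and its `scale` parameter are hypothetical, showing one way an assignment can give RL a dense signal and thereby narrow exploration.

```python
from itertools import permutations
from math import dist, exp


def ot_fingering(fingertips, keys):
    """Assign each key to press to a distinct finger at minimum total distance.

    Hypothetical setup: `fingertips` and `keys` are coordinate tuples, the
    transport cost is Euclidean distance, every key carries unit mass, and a
    finger presses at most one key. Under these assumptions the discrete OT
    problem reduces to a minimum-cost assignment, solved here by brute force
    (fine for the handful of keys pressed at once; use a real solver at scale).
    """
    if len(keys) > len(fingertips):
        raise ValueError("more keys to press than available fingers")
    best_cost, best_perm = float("inf"), ()
    # Each permutation is an ordered choice of fingers: finger perm[k] -> key k.
    for perm in permutations(range(len(fingertips)), len(keys)):
        cost = sum(dist(fingertips[f], keys[k]) for k, f in enumerate(perm))
        if cost < best_cost:
            best_cost, best_perm = cost, perm
    return dict(enumerate(best_perm)), best_cost


def fingering_reward(fingertips, keys, scale=0.01):
    """Hypothetical dense reward: approaches 1 as assigned fingers reach their keys."""
    assignment, _ = ot_fingering(fingertips, keys)
    if not assignment:
        return 1.0  # no key needs to be pressed at this time step
    closeness = [exp(-dist(fingertips[f], keys[k]) / scale) for k, f in assignment.items()]
    return sum(closeness) / len(closeness)


# Usage: three fingertips on a line (metres) and two keys to press.
fingertips = [(0.0, 0.0), (0.02, 0.0), (0.04, 0.0)]
keys = [(0.041, 0.0), (0.001, 0.0)]
assignment, cost = ot_fingering(fingertips, keys)
print(assignment)  # {0: 2, 1: 0}: key 0 -> finger 2, key 1 -> finger 0
print(round(fingering_reward(fingertips, keys), 3))
```

Brute force keeps the sketch dependency-free; for larger problems a dedicated assignment or OT solver would replace the permutation loop.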

Reinforcement Learning with RP1M++

OT-based fingering makes it efficient to train RL specialists, each dedicated to a single musical piece. RP1M++ aggregates trajectories from over 2,000 such specialists, increasing both data diversity and state-space coverage, which are essential for training a multi-song agent that generalizes to unseen pieces (Figure 6).

Figure 6: RP1M++ provides better coverage, leading to better training results.
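The aggregation step can be sketched as follows. The `Trajectory` record, its fields, and the grid-based `coverage` measure are hypothetical stand-ins chosen for illustration; the actual RP1M++ format and the paper's coverage analysis may differ. The sketch only shows that pooling rollouts from specialists trained on different songs enlarges the pool of (observation, goal, action) samples for imitation learning and tends to visit more distinct states than any single specialist.

```python
from dataclasses import dataclass


@dataclass
class Trajectory:
    """Hypothetical record of one specialist rollout (not the actual RP1M++ schema)."""
    song_id: str
    observations: list  # per-step robot state, e.g. joint positions
    goals: list         # per-step keys the score requires
    actions: list       # per-step actions chosen by the specialist


def aggregate(rollouts):
    """Flatten rollouts from many per-song specialists into (obs, goal, action) samples."""
    samples = []
    for traj in rollouts:
        samples.extend(zip(traj.observations, traj.goals, traj.actions))
    return samples


def coverage(samples, resolution=0.1):
    """Crude state-coverage proxy: count distinct grid cells visited by observations."""
    return len({tuple(round(x / resolution) for x in obs) for obs, _, _ in samples})


# Usage: adding a second song's specialist adds samples and visits a new state region.
song_a = Trajectory("song_a", [(0.0, 0.0), (0.1, 0.0)], [{60}, {62}], [(0.1,), (0.2,)])
song_b = Trajectory("song_b", [(0.5, 0.5)], [{64}], [(0.3,)])
print(len(aggregate([song_a])), coverage(aggregate([song_a])))                  # 2 2
print(len(aggregate([song_a, song_b])), coverage(aggregate([song_a, song_b])))  # 3 3
```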

Flow Matching Transformer

The Flow Matching Transformer processes complex music sequences and generates the agent's actions. It uses multi-head attention to capture the temporal and spatial dependencies inherent in piano playing, which helps a single agent perform diverse pieces with high fidelity (Figure 3).
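Flow matching trains a network to predict a velocity field that carries noise samples to expert actions; at inference time, integrating that field produces new actions. The sketch below shows the standard conditional flow-matching loss on a straight-line path, together with an Euler sampler. The transformer itself, its conditioning on robot state and upcoming notes, and any action chunking are assumptions left out here: an analytic oracle velocity (hypothetical) stands in for the trained network so the example runs on its own.

```python
import numpy as np


def cfm_loss(velocity_fn, x0, x1, t):
    """Conditional flow matching loss on the straight path x_t = (1 - t) * x0 + t * x1.

    x0: noise samples, x1: expert actions (shape [batch, action_dim]),
    t: per-sample times in [0, 1). The regression target is x1 - x0.
    """
    t = t[:, None]                # broadcast times over action dimensions
    xt = (1.0 - t) * x0 + t * x1  # point on the interpolation path
    target = x1 - x0              # constant velocity of that path
    return float(np.mean((velocity_fn(xt, t) - target) ** 2))


def sample(velocity_fn, x0, steps=10):
    """Generate actions by Euler-integrating dx/dt = v(x, t) from t = 0 to t = 1."""
    x = x0.copy()
    h = 1.0 / steps
    for k in range(steps):
        t = np.full((x.shape[0], 1), k * h)
        x = x + h * velocity_fn(x, t)
    return x


# Usage with an analytic "oracle" velocity in place of the trained transformer.
rng = np.random.default_rng(0)
expert = rng.normal(size=(4, 3))  # stand-in batch of expert action vectors
noise = rng.normal(size=(4, 3))


def oracle(x, t):
    # Exact velocity that carries x straight to `expert`; a learned network
    # would instead condition on robot state and the upcoming notes.
    return (expert - x) / (1.0 - t)


print(cfm_loss(oracle, noise, expert, rng.uniform(0.0, 0.9, size=4)))  # close to 0
print(np.allclose(sample(oracle, noise), expert))                       # True
```

Straight-line paths like this one are a common choice (as in rectified flow); the paper's exact probability path, number of integration steps, and conditioning inputs are not specified in this summary.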

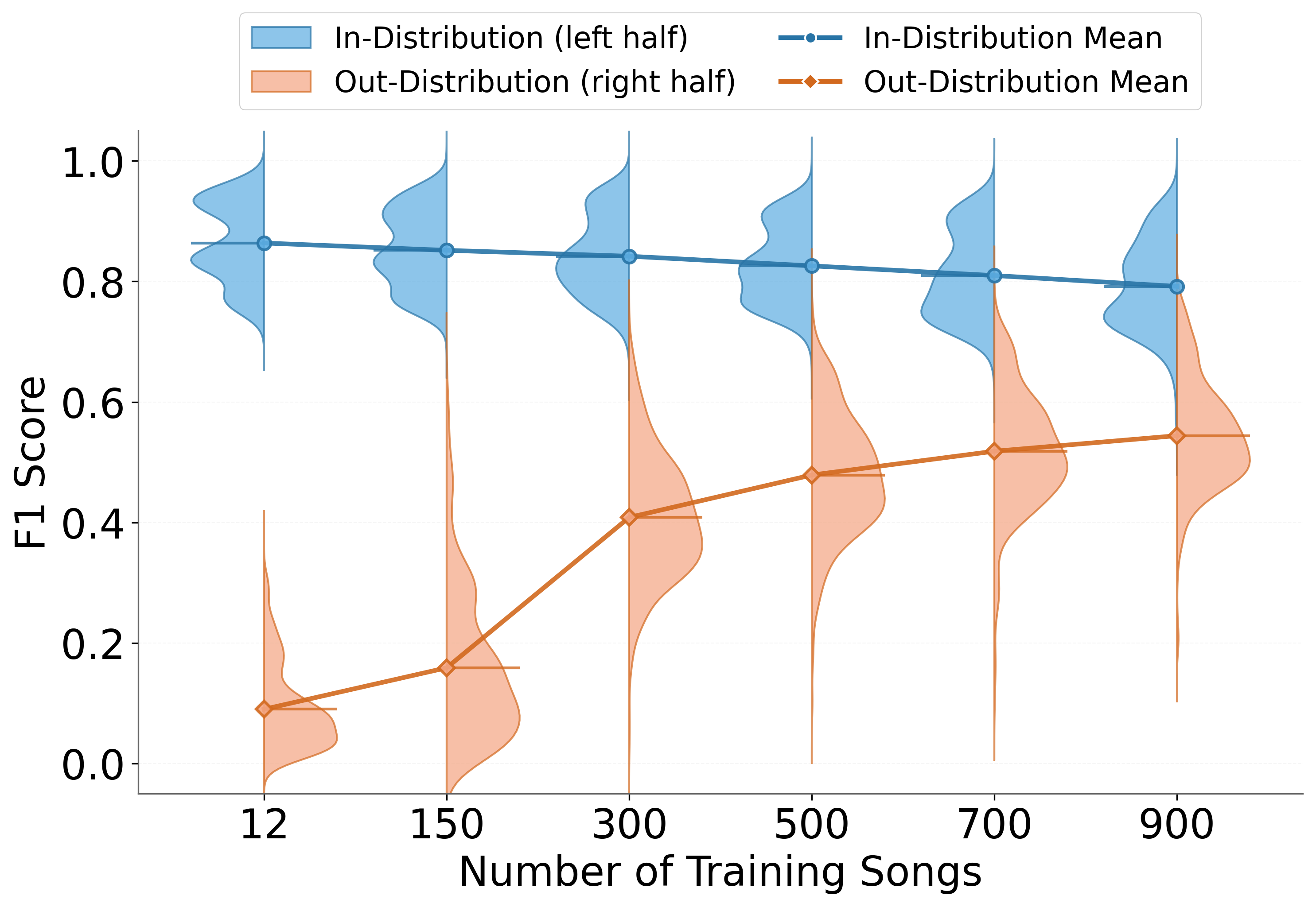

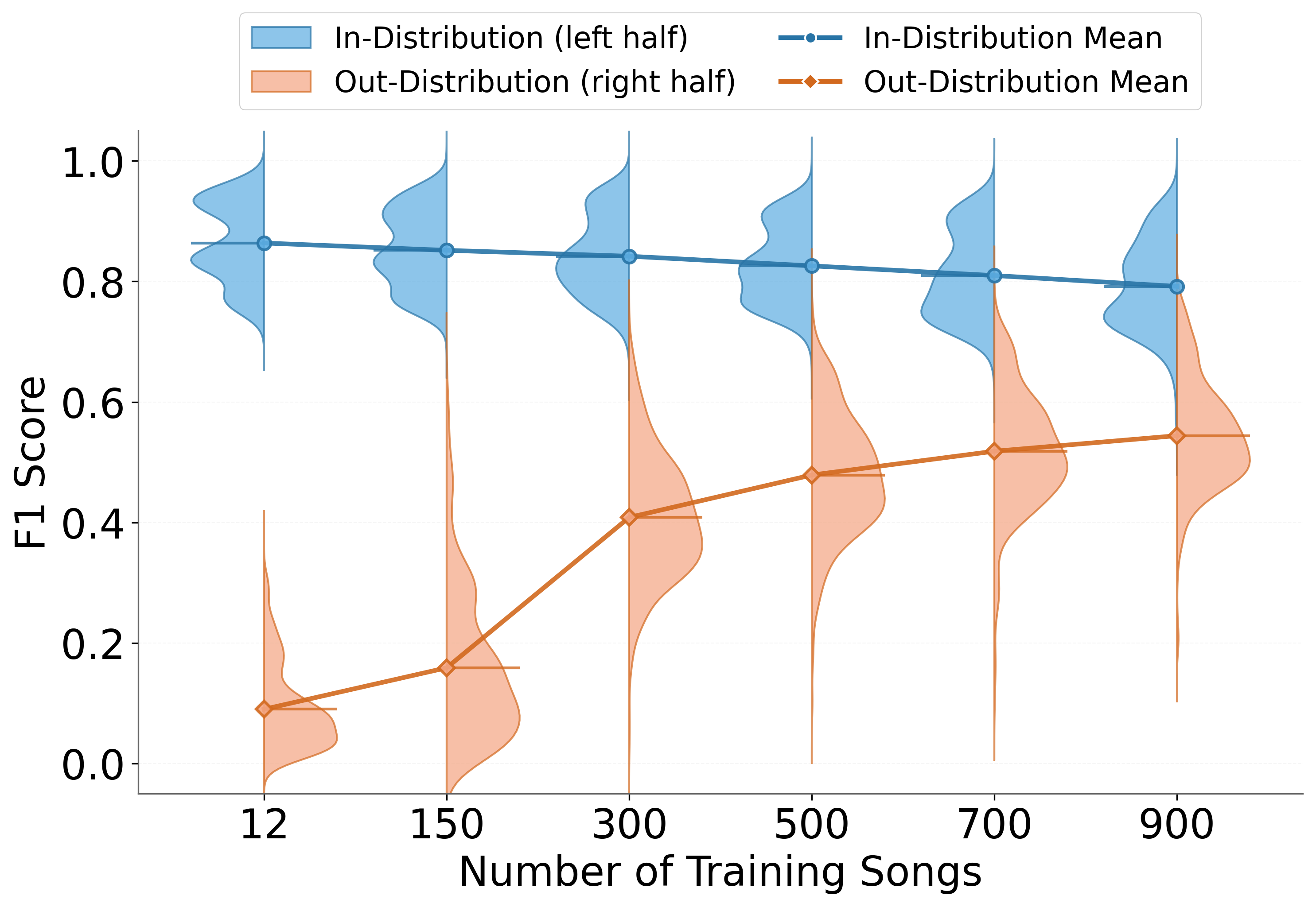

The method's scalability is demonstrated by training the multi-song agent, OmniPianist, on datasets of different sizes and evaluating it on both in-distribution and out-of-distribution song sets. Performance on both sets underscores the improvements brought by RP1M++ and supports the method's robustness and generalization (Figure 7).

Figure 7: Data scaling laws showing consistent improvements with larger datasets.
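The section reports in-distribution and out-of-distribution performance without naming the metric. As an assumption, the sketch below scores each episode with a key-press F1, the kind of metric used in the RoboPianist benchmark, by pooling true positives, false positives, and false negatives over all time steps and then averaging per split. The helper names and the pooling choice are illustrative, not the paper's evaluation code.

```python
def key_press_f1(pressed, target):
    """Episode-level F1 of key presses.

    pressed, target: sequences of per-time-step sets of key indices
    (keys the robot actually pressed vs. keys the score requires).
    """
    tp = sum(len(p & g) for p, g in zip(pressed, target))
    fp = sum(len(p - g) for p, g in zip(pressed, target))
    fn = sum(len(g - p) for p, g in zip(pressed, target))
    precision = tp / (tp + fp) if tp + fp else 1.0  # nothing pressed: no false alarms
    recall = tp / (tp + fn) if tp + fn else 1.0     # nothing required: nothing missed
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def split_scores(episodes, in_distribution_songs):
    """Average F1 separately over in-distribution and out-of-distribution songs."""
    groups = {"in_distribution": [], "out_of_distribution": []}
    for song, pressed, target in episodes:
        split = "in_distribution" if song in in_distribution_songs else "out_of_distribution"
        groups[split].append(key_press_f1(pressed, target))
    return {name: sum(s) / len(s) if s else float("nan") for name, s in groups.items()}


# Usage: one perfectly played training song and one imperfect unseen song.
episodes = [
    ("train_song", [{60}, {64}], [{60}, {64}]),
    ("unseen_song", [{60}, {62, 64}, set()], [{60}, {62}, {65}]),
]
print(split_scores(episodes, in_distribution_songs={"train_song"}))
# in-distribution 1.0, out-of-distribution 2/3
```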

Conclusion

The paper presents a scalable framework for dexterous robotic piano playing that avoids the limitations of methods relying on human demonstrations. By combining OT-based fingering, large-scale RL, and the Flow Matching Transformer, OmniPianist performs a wide range of complex pieces with high proficiency. Future work may extend the framework to real-world robots and integrate multimodal sensory inputs for more precise control during performances.