DeepSeek-OCR: Contexts Optical Compression (2510.18234v1)

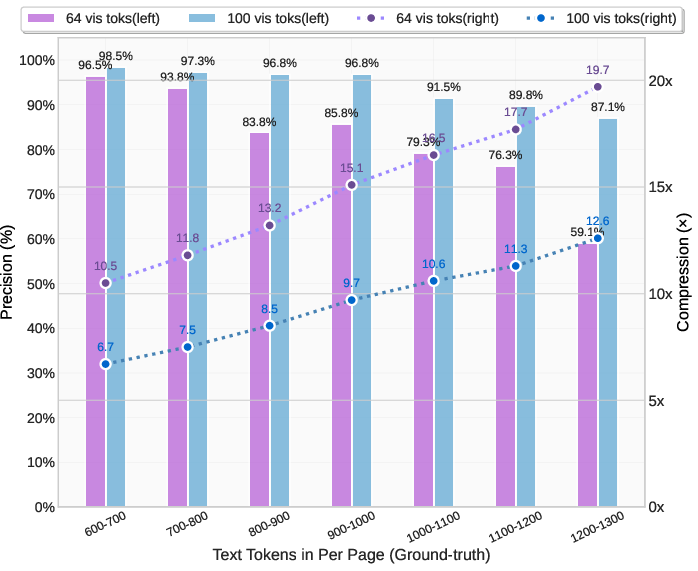

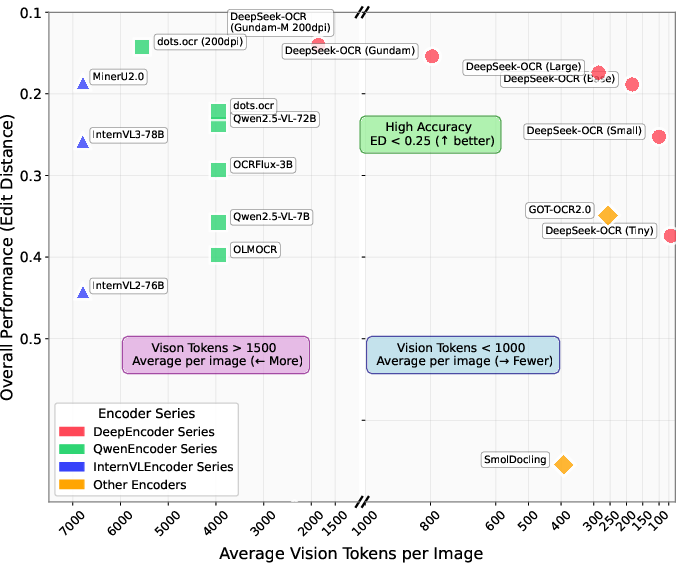

Abstract: We present DeepSeek-OCR as an initial investigation into the feasibility of compressing long contexts via optical 2D mapping. DeepSeek-OCR consists of two components: DeepEncoder and DeepSeek3B-MoE-A570M as the decoder. Specifically, DeepEncoder serves as the core engine, designed to maintain low activations under high-resolution input while achieving high compression ratios to ensure an optimal and manageable number of vision tokens. Experiments show that when the number of text tokens is within 10 times that of vision tokens (i.e., a compression ratio < 10x), the model can achieve decoding (OCR) precision of 97%. Even at a compression ratio of 20x, the OCR accuracy still remains at about 60%. This shows considerable promise for research areas such as historical long-context compression and memory forgetting mechanisms in LLMs. Beyond this, DeepSeek-OCR also demonstrates high practical value. On OmniDocBench, it surpasses GOT-OCR2.0 (256 tokens/page) using only 100 vision tokens, and outperforms MinerU2.0 (6000+ tokens per page on average) while utilizing fewer than 800 vision tokens. In production, DeepSeek-OCR can generate training data for LLMs/VLMs at a scale of 200k+ pages per day (a single A100-40G). Codes and model weights are publicly accessible at http://github.com/deepseek-ai/DeepSeek-OCR.

Explain it Like I'm 14

What is this paper about?

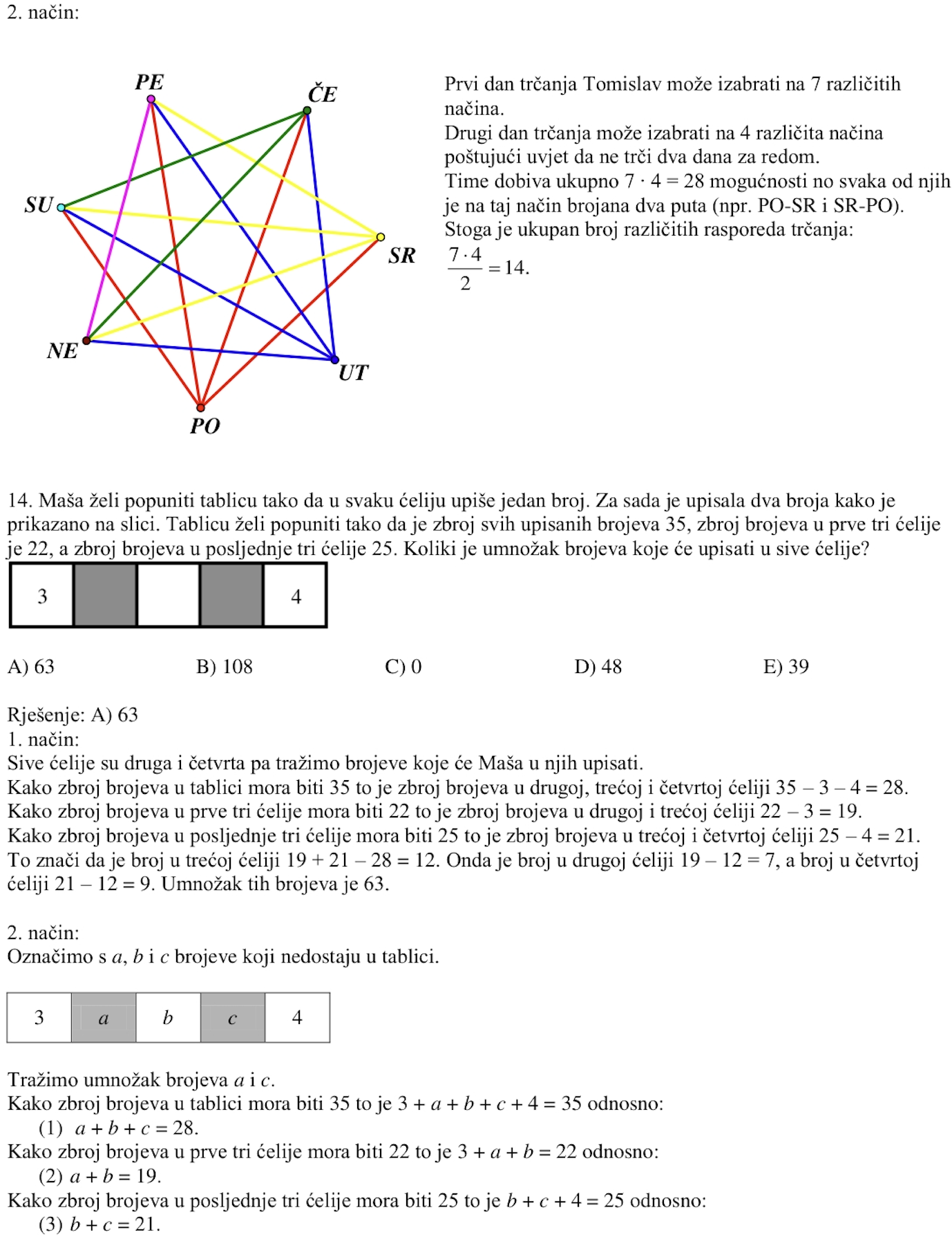

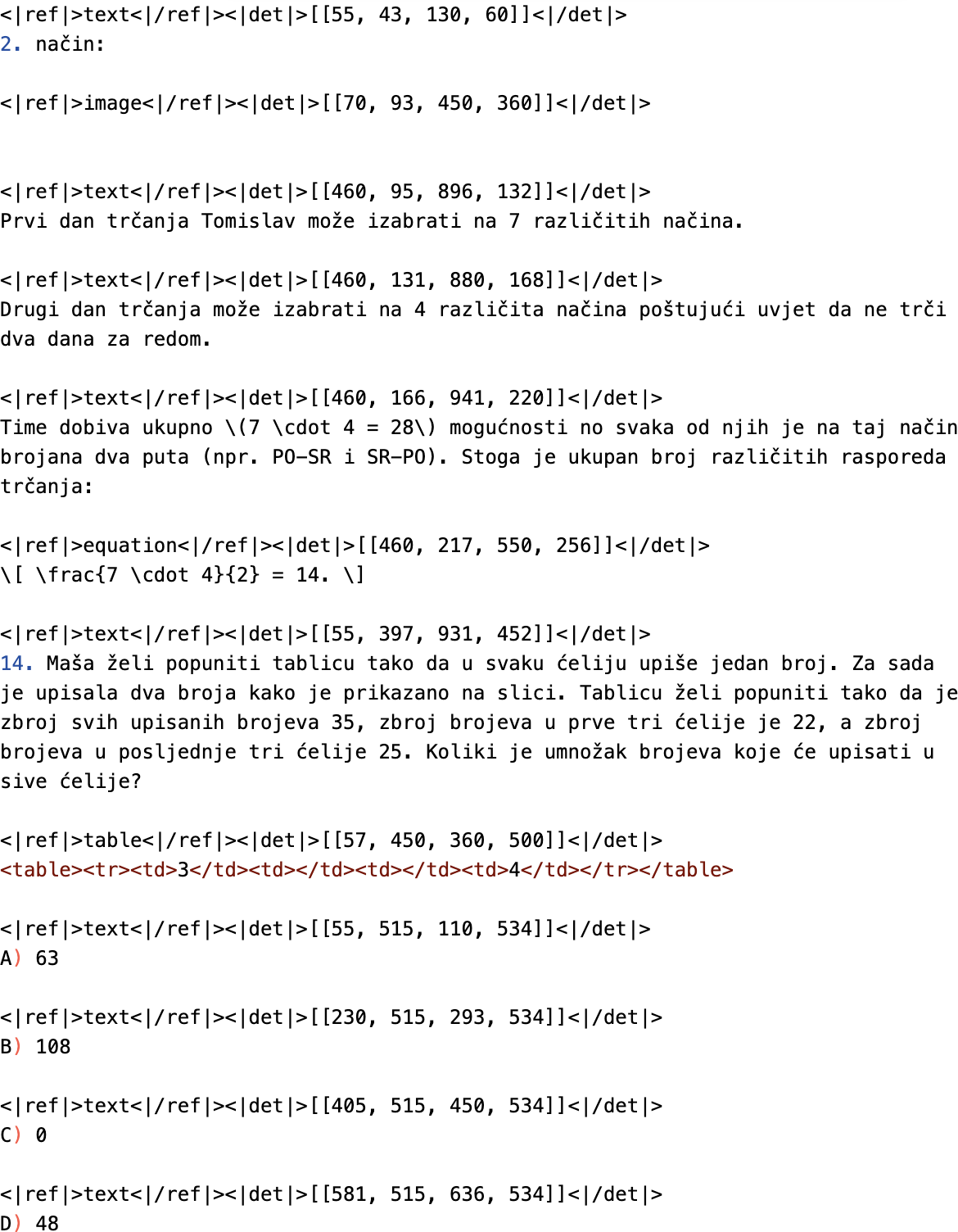

This paper introduces DeepSeek-OCR, a new system that turns pictures of documents into text very efficiently. The big idea is to use images as a smart way to “compress” long text so that AI models (like chatbots) can handle more information without using tons of computing power. The authors show that a small number of image pieces (called vision tokens) can hold the same information as many more text pieces (text tokens), and the AI can still read it back accurately.

What questions did the researchers ask?

To make the idea simple, think of a long document like a huge puzzle. Each small piece is a “token.” The researchers wanted to know:

- How few image pieces (vision tokens) do we need to rebuild the original text pieces (text tokens) correctly?

- Can we design an image reader (encoder) that handles big, high-resolution pages without using too much memory?

- Will this approach work well on real-world document tasks (like PDFs with text, tables, charts, formulas), and can it be fast enough for practical use?

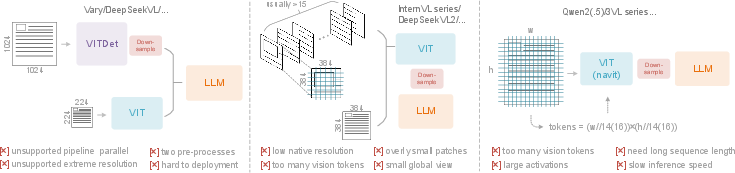

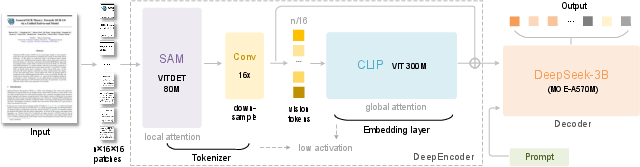

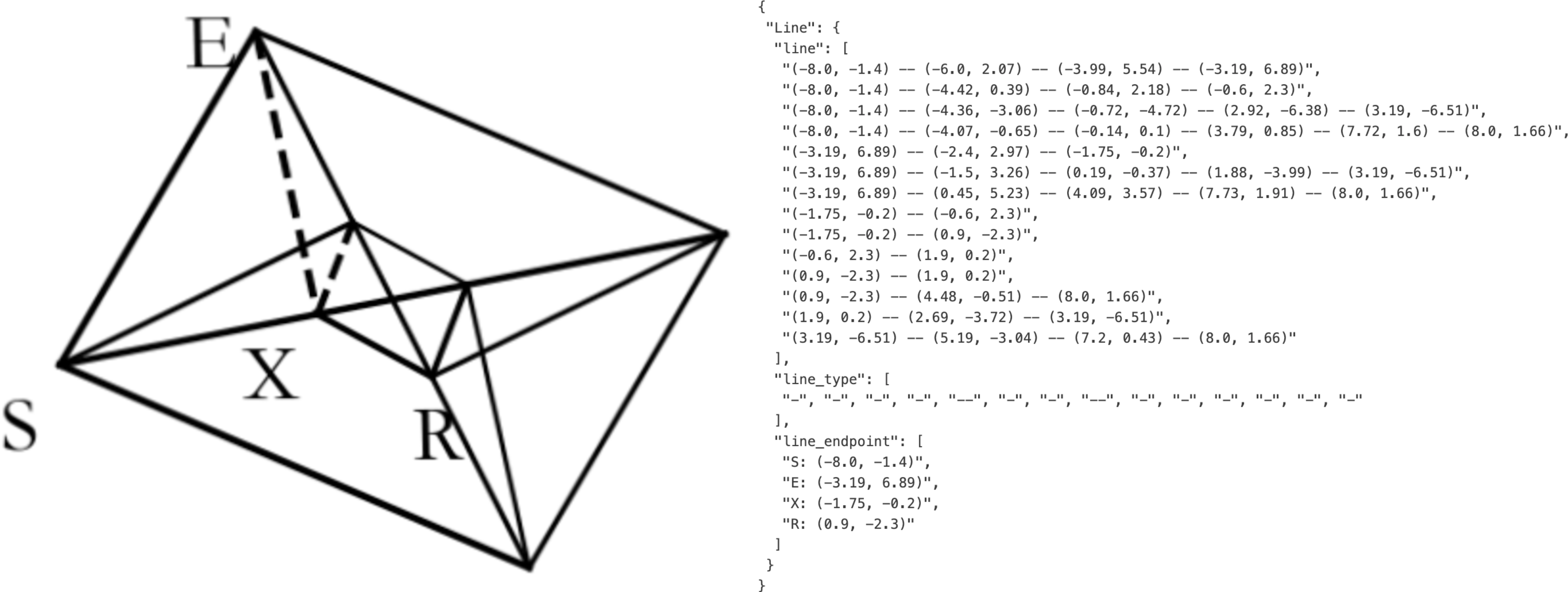

How does DeepSeek-OCR work?

Imagine a two-part team: a “camera brain” that breaks the page image into meaningful chunks, and a “reading brain” that turns those chunks back into clean text.

- The camera brain is called DeepEncoder. It has two parts:

  - A “window attention” part (like scanning the page through many small windows to find details). This uses SAM.

  - A “global attention” part (like stepping back to see the whole page and connect the dots). This uses CLIP.

  - Between those two parts, there’s a “shrinker” (a 16× compressor) that reduces how many image tokens need to be handled globally. Think of it as shrinking a big photo while keeping the important details clear enough to read.

- The reading brain is an LLM (DeepSeek-3B-MoE). “MoE” means “Mixture of Experts”: the model contains many small specialists, and only a few of them are activated for each token, so it reads with less computation than a dense model of the same total size.
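To make the shrinker concrete, here is a rough token count for a single page. The 16× factor comes from the description above; the 16-pixel patch size and the 1024×1024 input are illustrative assumptions, not values stated in this summary.

```python
def vision_tokens(side_px: int, patch_px: int = 16, compression: int = 16) -> int:
    """Estimate how many vision tokens remain after the 16x compressor.

    side_px:     side length of a square input image (assumed value).
    patch_px:    assumed patch size used to cut the image into tokens.
    compression: the 16x token reduction mentioned in the text.
    """
    if side_px % patch_px != 0:
        raise ValueError("side_px must be a multiple of patch_px")
    patches = (side_px // patch_px) ** 2  # tokens seen by the window-attention part
    return patches // compression         # tokens passed on to the global-attention part


print(vision_tokens(1024))  # 4096 patches shrink to 256 tokens
```

Under these assumptions, the expensive global attention only ever sees a sixteenth of the patches, which is why the encoder can accept large pages without a matching jump in memory use.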

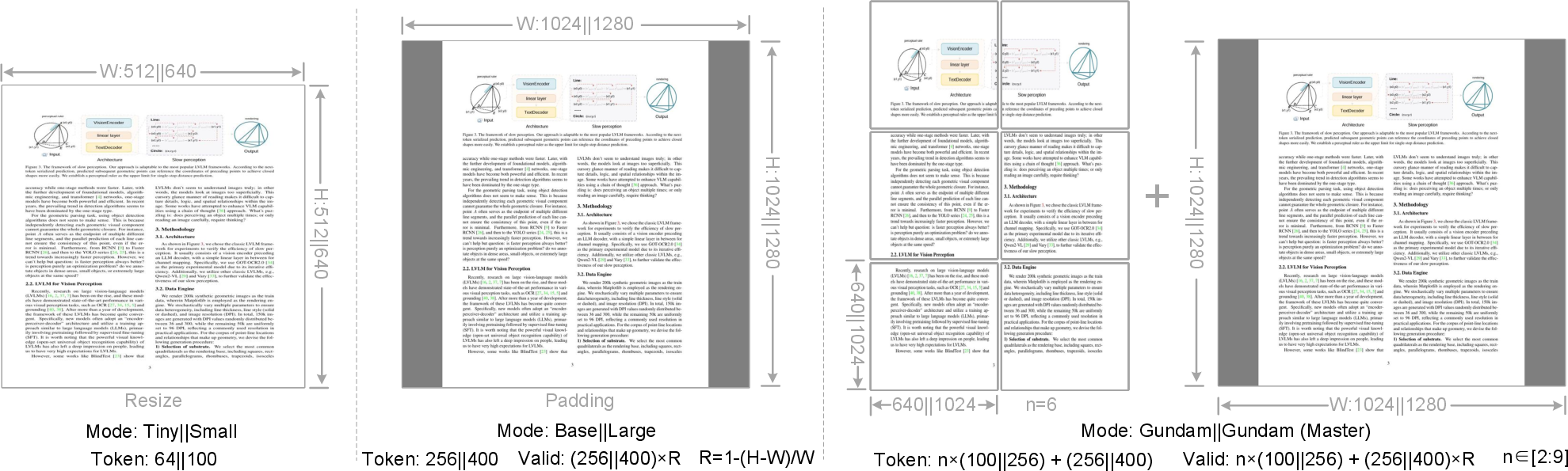

To handle different kinds of pages, they trained the system with multiple resolution modes:

- Tiny and Small for fewer tokens (quick scans),

- Base and Large for more detail,

- Gundam/Gundam-M for very big or dense pages (cutting the page into tiles plus a global view, like reading both zoomed-in pieces and the full page).
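The modes differ mainly in how many vision tokens they spend per page. The sketch below estimates that budget; every resolution in it, the 16-pixel patch size, and the tile count are assumptions chosen for illustration and are not stated in this summary. Only the 16× compression factor comes from the text.

```python
# Assumed square input sizes per mode (hypothetical values for illustration).
ASSUMED_MODE_SIDE_PX = {"Tiny": 512, "Small": 640, "Base": 1024, "Large": 1280}


def tokens_for(side_px: int, patch_px: int = 16, compression: int = 16) -> int:
    """Tokens left for one square image after patching and 16x compression."""
    return (side_px // patch_px) ** 2 // compression


def tiled_tokens(n_tiles: int, tile_px: int = 640, global_px: int = 1024) -> int:
    """Budget for a tiled mode: n zoomed-in tiles plus one global view."""
    return n_tiles * tokens_for(tile_px) + tokens_for(global_px)


for name, side in ASSUMED_MODE_SIDE_PX.items():
    print(name, tokens_for(side))   # Tiny 64, Small 100, Base 256, Large 400

print("tiled, 4 tiles:", tiled_tokens(4))  # 4 * 100 + 256 = 656
```

The tiled budget grows linearly with the number of tiles, so a dense page costs more tokens than a simple one, while the single global view keeps the overall layout visible.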

For training, they used several kinds of data:

- OCR 1.0: regular documents and street/scene text.

- OCR 2.0: tricky things like charts, chemical formulas, and geometry drawings.

- General vision: tasks like captions and object detection to keep some broad image understanding.

- Text-only: to keep the language skills strong.

They trained the encoder first, then the full system, using lots of pages and smart prompts (instructions) for different tasks.

What did they find, and why does it matter?

A few key numbers summarize the results:

- Compression power:

  - About 97% accuracy when the compression ratio is below 10× (meaning the number of text tokens is less than 10 times the number of vision tokens).

  - Around 60% accuracy even at 20× compression (much tighter packing).

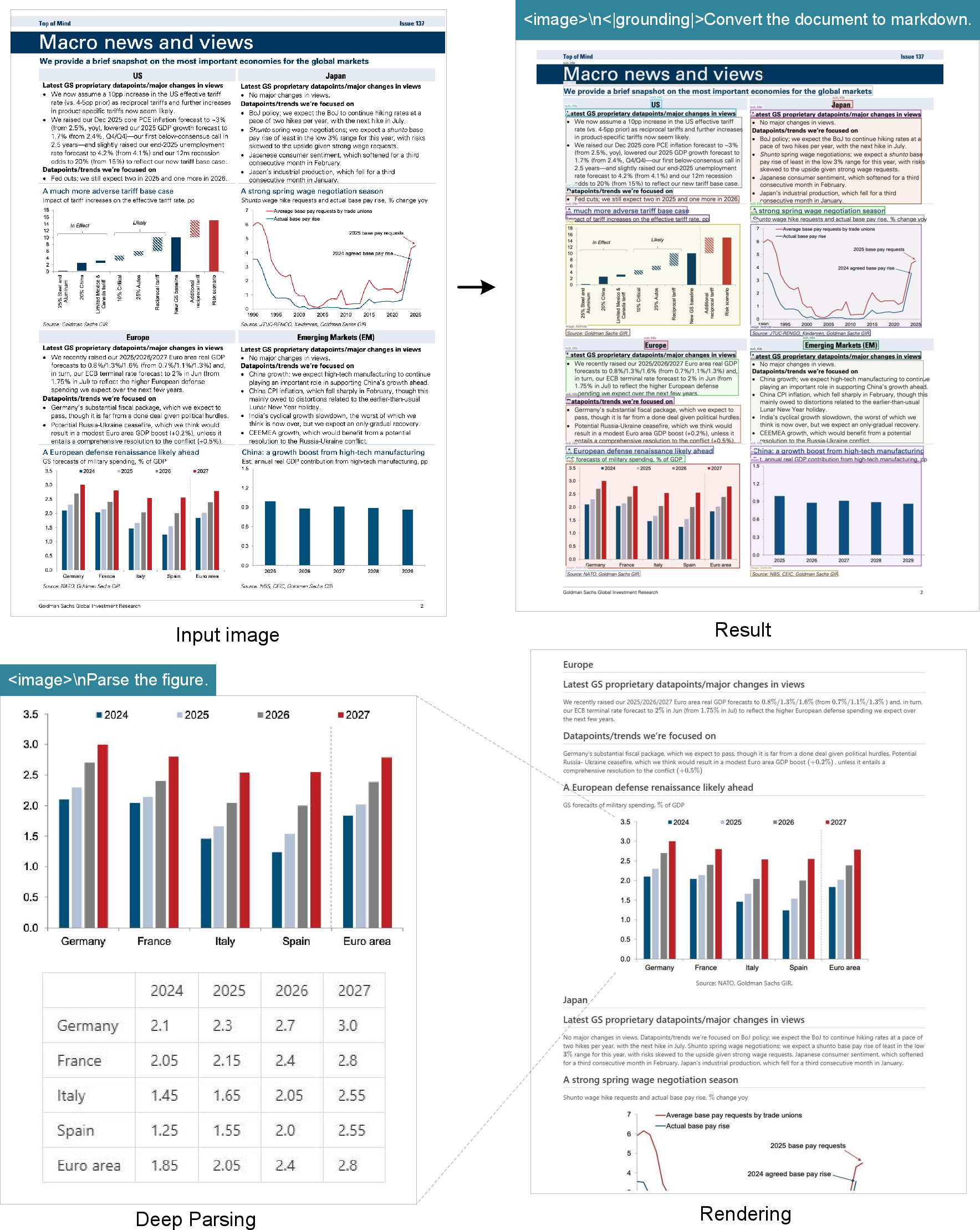

- Real-world performance:

  - On OmniDocBench, an evaluation of document parsing:

    - DeepSeek-OCR beat GOT-OCR2.0 (256 tokens per page) using only 100 vision tokens.

    - It also outperformed MinerU2.0 while using fewer than 800 vision tokens (MinerU2.0 used more than 6,000 per page on average).

- Versatility:

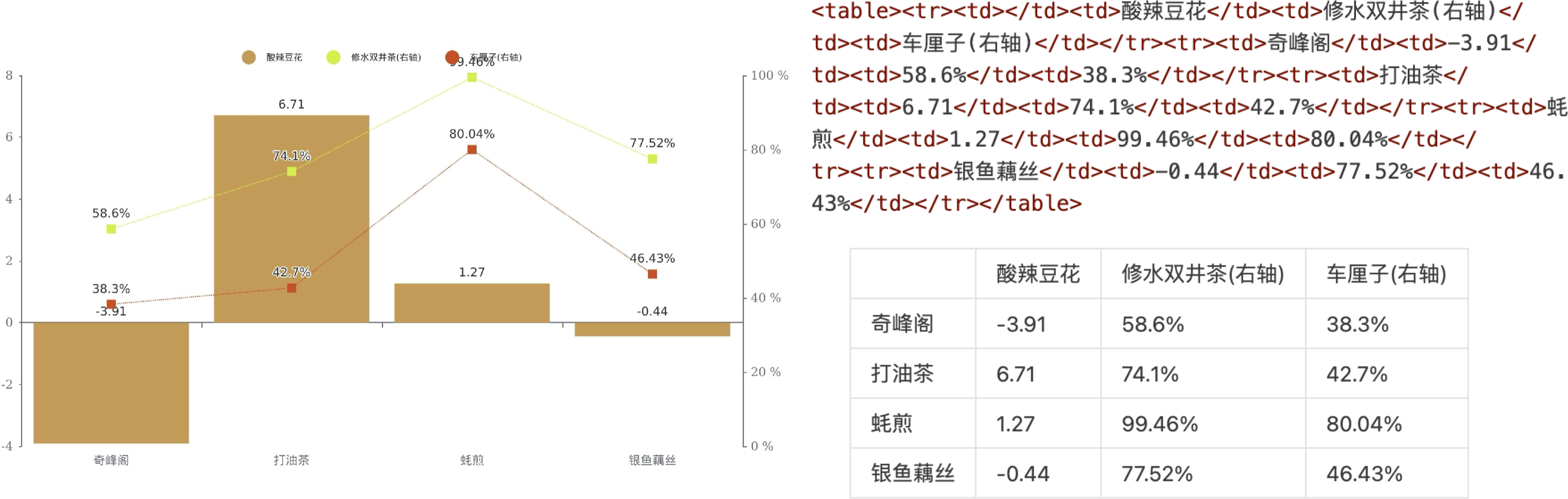

  - It can handle not just plain text, but also charts, chemical formulas, simple geometry, and natural images.

  - It supports nearly 100 languages for PDF OCR.

- Speed and scale:

  - It can generate training data at very large scale (200k+ pages per day on a single A100-40G GPU; tens of millions per day on a cluster), which is valuable for building better AI models.
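The compression figures above all rest on one ratio: text tokens divided by vision tokens. A minimal sketch of that arithmetic follows; the function names and the example token counts are hypothetical, and the accuracy bands simply restate the two reported points rather than predicting anything in between.

```python
def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Text tokens represented per vision token."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


def reported_band(ratio: float) -> str:
    """Map a ratio onto the accuracy figures quoted in the text."""
    if ratio < 10:
        return "about 97% (reported for ratios below 10x)"
    if ratio <= 20:
        return "between about 97% and about 60% (only the endpoints are reported)"
    return "beyond the reported range"


# Hypothetical page: 900 text tokens read back from 100 vision tokens.
ratio = compression_ratio(900, 100)
print(ratio, "->", reported_band(ratio))
```

For example, a page of 2,000 text tokens squeezed into the same 100 vision tokens has a ratio of 20, which is the point where the reported accuracy drops to about 60%.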

Why it matters: If AI can compress and read long text using images, then big LLMs can remember more context (like conversation history or long documents) while using fewer resources. That means faster, cheaper, and more capable AI systems.

What could this change in the future?

The paper suggests a new way to handle long-term memory in AI:

- Optical context compression: Turn earlier parts of a long conversation or document into images and read them with fewer tokens. Keep recent parts in high detail, and compress older parts more. This mimics “forgetting” like humans do: older memories become fuzzier and take less space, but important recent ones stay sharp.

- Better long-context AI: With smart compression, AI could handle much longer histories without running out of memory.

- Practical tools: DeepSeek-OCR is already useful—for creating training data, doing large-scale OCR, and parsing complex documents with charts and formulas—while using fewer resources.
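The "forgetting" idea in the first bullet above can be sketched as a schedule that compresses a turn harder the older it gets. Everything here is hypothetical: the paper only proposes the direction, and the doubling schedule, the parameter names, and the 20× cap (borrowed from the highest ratio quoted earlier) are assumptions made for illustration.

```python
import math


def ratio_for_age(age_turns: int, keep_recent: int = 2,
                  step: int = 4, max_ratio: float = 20.0) -> float:
    """Hypothetical schedule: recent turns stay uncompressed, then the
    compression ratio doubles every `step` turns up to `max_ratio`."""
    if age_turns < keep_recent:
        return 1.0
    doublings = 1 + (age_turns - keep_recent) // step
    return min(2.0 ** doublings, max_ratio)


def context_budget(turn_lengths: list[int]) -> int:
    """Total tokens needed for a history given oldest-first turn lengths."""
    newest = len(turn_lengths) - 1
    total = 0
    for index, length in enumerate(turn_lengths):
        age = newest - index  # 0 for the most recent turn
        total += math.ceil(length / ratio_for_age(age))
    return total


history = [1000] * 12             # twelve turns of 1,000 text tokens each
print(sum(history), "->", context_budget(history))
```

Older turns cost fewer tokens but would be read back less accurately, which is the trade-off the bullet describes as memories becoming fuzzier over time.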

In short, DeepSeek-OCR shows that “a picture is worth a thousand words” can be true for AI too. By packing text into images and reading it back efficiently, we can build faster, smarter systems that remember more without slowing down.
