- The paper introduces a test-time self-improvement mechanism that leverages uncertainty-based sample selection, synthetic data generation, and lightweight parameter updates to enhance LLM agent performance.

- The approach achieves an average accuracy gain of +5.48% and demonstrates 68× higher sample efficiency compared to standard supervised fine-tuning.

- Its modular design enables the integration of alternative uncertainty metrics and data synthesis methods, paving the way for future self-adapting, lifelong learning systems.

Test-Time Self-Improvement for LLM Agents: Framework, Empirical Analysis, and Implications

The paper "Self-Improving LLM Agents at Test-Time" (2510.07841) introduces a modular framework that lets LLM agents adapt and improve their performance during inference, without relying on large-scale offline retraining. The proposed Test-Time Self-Improvement (TT-SI) algorithm combines uncertainty estimation, targeted data synthesis, and lightweight parameter updates to achieve substantial gains on agentic tasks, with strong empirical results and efficiency advantages over conventional fine-tuning.

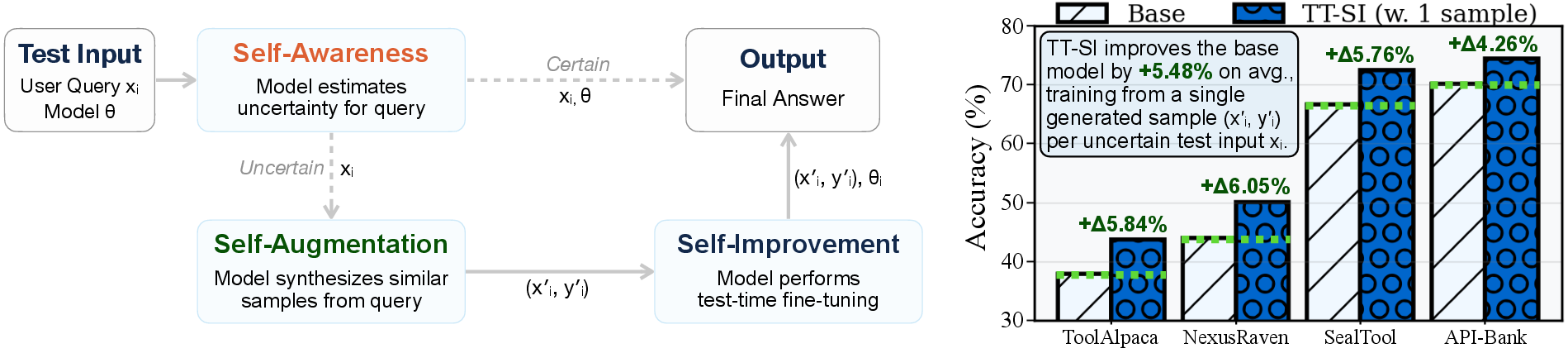

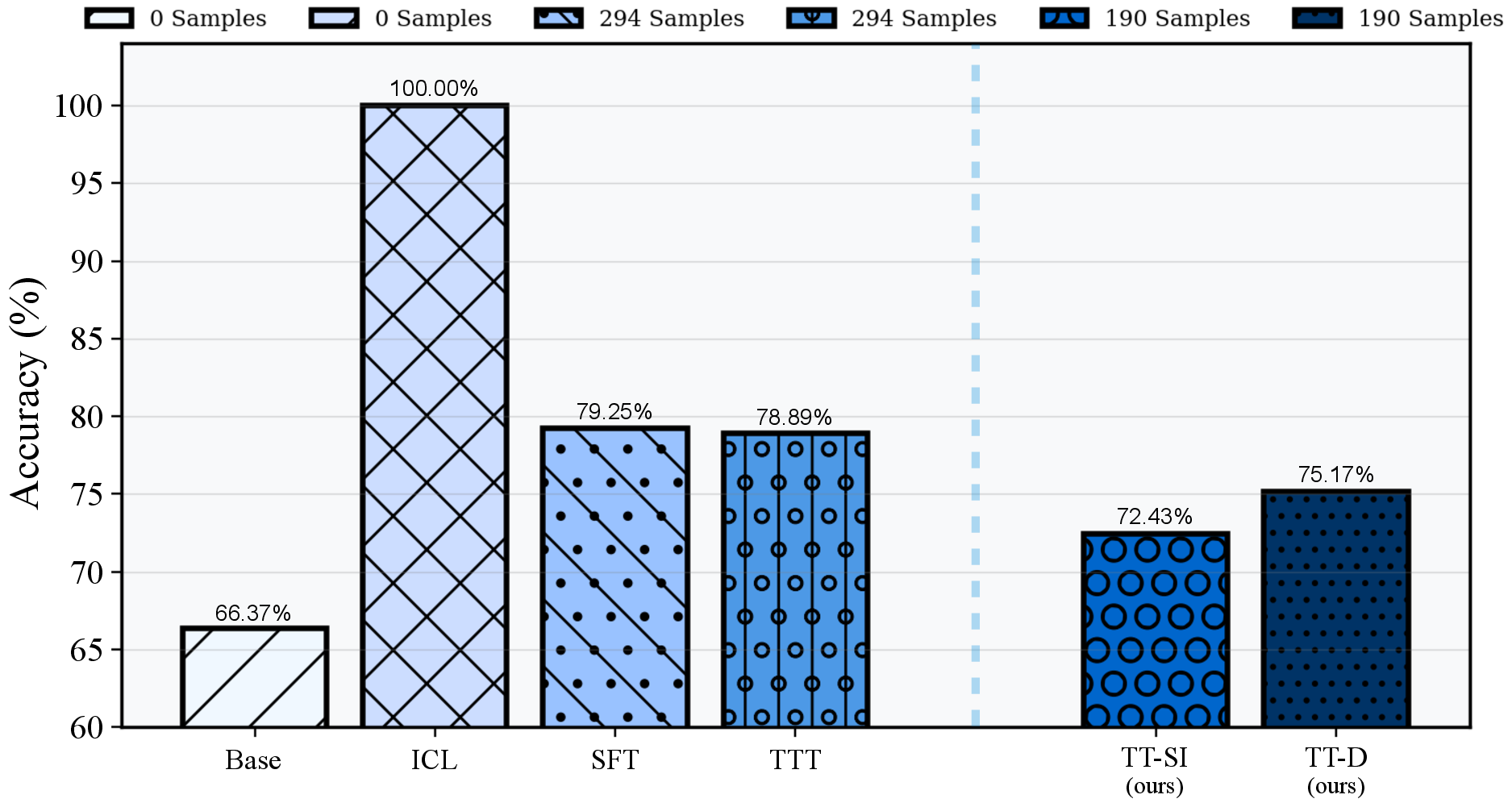

Figure 1: Overview of the TT-SI framework, illustrating the three-stage process and average delta-accuracy gains across four agentic benchmarks.

Traditional LLM agent fine-tuning relies on large, diverse datasets and expensive training cycles, yet suffers from several limitations: distributional shift between train and test, high annotation and compute costs, redundancy in training samples, and catastrophic forgetting. The TT-SI paradigm is motivated by transductive and local learning principles, as well as human self-regulated learning, where adaptation is focused on challenging, informative instances rather than exhaustive coverage.

TT-SI reframes agentic adaptation as a test-time process, where the model:

- Identifies uncertain test samples via a margin-based confidence estimator (Self-Awareness).

- Synthesizes training instances similar to each uncertain sample (Self-Augmentation), using either the model itself or, in the TT-D variant, a stronger teacher model.

- Performs lightweight parameter updates on the synthesized data (Self-Improvement) using parameter-efficient fine-tuning (LoRA), then restores the original parameters after inference.

This approach enables on-the-fly, instance-specific adaptation, targeting the model's weaknesses and surfacing latent knowledge.

Algorithmic Framework

The TT-SI algorithm is formalized as follows:

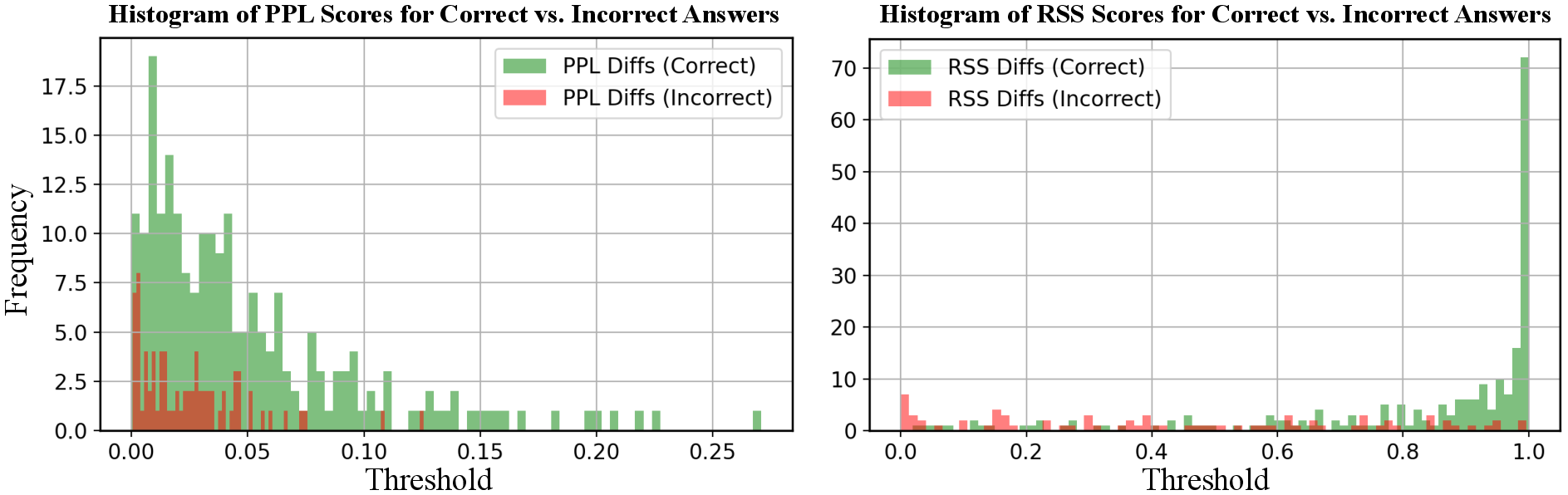

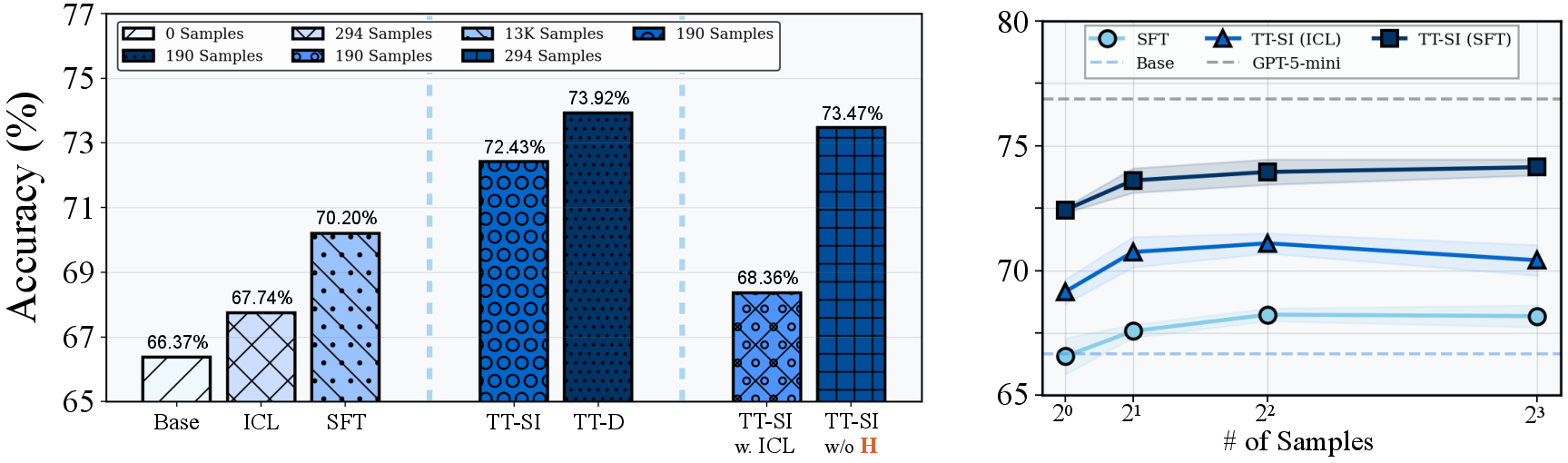

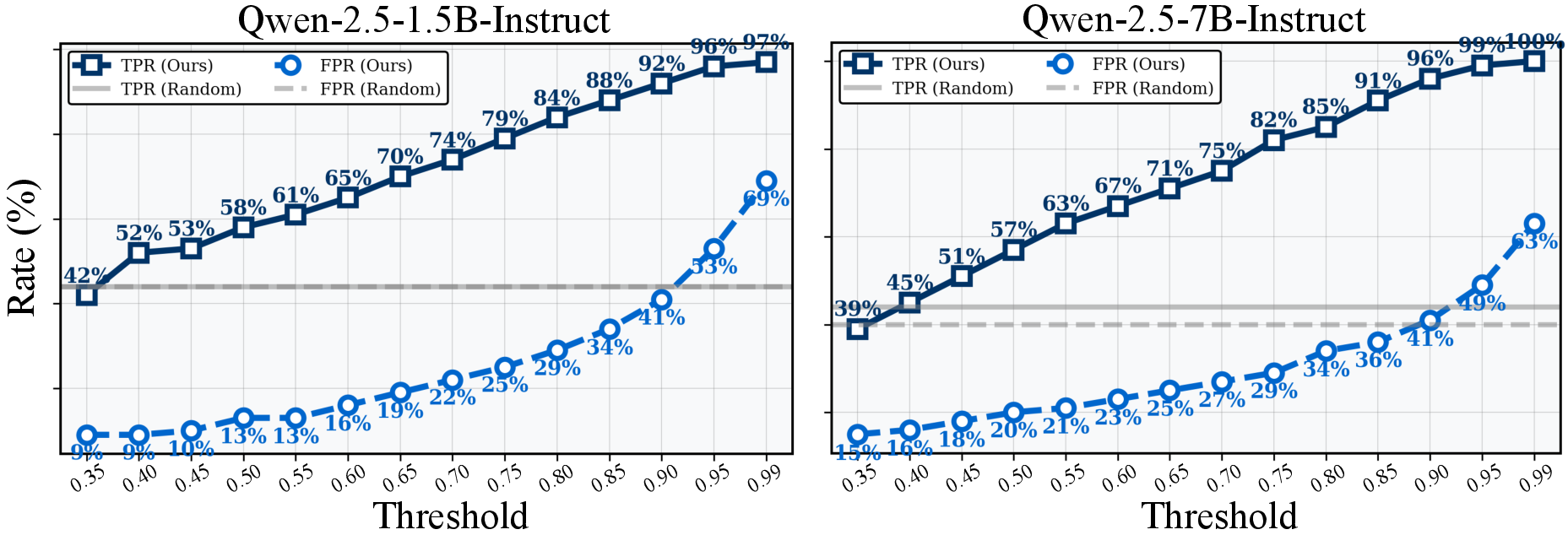

- Uncertainty Estimation (Self-Awareness): For each test input $x_i$, compute the negative log-likelihood of each candidate action, normalize these scores into a probability distribution via Relative Softmax Scoring (RSS), and select $x_i$ for adaptation when the margin between the two highest probabilities, $u(x_i) = p^{(1)} - p^{(2)}$, falls below a threshold $\tau$.

- Data Synthesis (Self-Augmentation): For each uncertain $x_i$, prompt an LLM to generate $K$ synthetic input-output pairs that stay semantically close to the original query.

- Test-Time Fine-Tuning (Self-Improvement): Temporarily update the model parameters via LoRA on the synthesized data, run inference on $x_i$, and then restore the original weights.
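The uncertainty step can be sketched in a few lines of Python. This is a minimal sketch, assuming RSS is a softmax over the negated per-candidate NLL scores; the paper's exact normalization may differ. The function names (`relative_softmax_scores`, `margin_uncertainty`, `is_uncertain`) and the example NLL values are hypothetical, and obtaining NLLs from a real model is left out.

```python
import math
from typing import Sequence


def relative_softmax_scores(nlls: Sequence[float]) -> list[float]:
    """Map per-candidate negative log-likelihoods to a probability distribution.

    Assumption: RSS is modeled as a softmax over negated NLLs, so a lower NLL
    (a more likely candidate action) receives a higher probability.
    """
    logits = [-v for v in nlls]
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def margin_uncertainty(nlls: Sequence[float]) -> float:
    """u(x) = p(1) - p(2): gap between the two most probable candidates."""
    if len(nlls) < 2:
        raise ValueError("margin needs at least two candidate actions")
    probs = sorted(relative_softmax_scores(nlls), reverse=True)
    return probs[0] - probs[1]


def is_uncertain(nlls: Sequence[float], tau: float) -> bool:
    """Flag a test input for test-time adaptation when its margin is below tau."""
    return margin_uncertainty(nlls) < tau


if __name__ == "__main__":
    confident = [0.2, 3.0, 4.5]  # hypothetical: one candidate clearly dominates
    ambiguous = [1.0, 1.1, 4.0]  # hypothetical: top two candidates nearly tied
    for name, nlls in [("confident", confident), ("ambiguous", ambiguous)]:
        print(name, round(margin_uncertainty(nlls), 3), is_uncertain(nlls, tau=0.5))
```

A small margin means the model can barely separate its best action from the runner-up, which is exactly the kind of input TT-SI spends its adaptation budget on.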

Pseudocode for the full procedure is provided in the paper, and the modular design allows for substitution of uncertainty metrics, data generators, and update rules.
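Because the three stages are pluggable, the control flow can be sketched independently of any particular model. The sketch below is a toy illustration, not the paper's pseudocode: `ToyAgent`, its topic-lookup "weights", and the `toy_*` helpers are hypothetical stand-ins for an LLM, the RSS margin, LLM-based data synthesis, and LoRA updates. What it does show is the structure: only inputs whose confidence falls below τ are adapted on, and the weights are snapshotted before and restored after each adaptation.

```python
import copy
from dataclasses import dataclass, field
from typing import Callable

Example = tuple[str, str]  # (synthetic input, target output)


@dataclass
class ToyAgent:
    """Hypothetical stand-in for an LLM agent.

    Inputs look like "topic:question"; the 'weights' map a topic to an answer,
    so training on any example from a topic generalizes to that whole topic.
    """
    weights: dict[str, str] = field(default_factory=dict)

    def predict(self, x: str) -> str:
        return self.weights.get(x.split(":", 1)[0], "UNKNOWN")


def toy_uncertainty(agent: ToyAgent, x: str) -> float:
    # Stand-in for the RSS margin: 1.0 if the topic is known, else 0.0.
    return 1.0 if x.split(":", 1)[0] in agent.weights else 0.0


def toy_synthesize(x: str, k: int) -> list[Example]:
    # Stand-in for prompting an LLM (self or teacher) for k examples close to x.
    topic = x.split(":", 1)[0]
    return [(f"{topic}:paraphrase {j}", f"{topic}-answer") for j in range(k)]


def toy_update(agent: ToyAgent, data: list[Example]) -> None:
    # Stand-in for a few LoRA gradient steps on the synthetic data.
    for inp, out in data:
        agent.weights[inp.split(":", 1)[0]] = out


def tt_si(
    agent: ToyAgent,
    test_inputs: list[str],
    uncertainty: Callable[[ToyAgent, str], float],
    synthesize: Callable[[str, int], list[Example]],
    update: Callable[[ToyAgent, list[Example]], None],
    tau: float,
    k: int = 5,
) -> list[str]:
    predictions = []
    for x in test_inputs:
        if uncertainty(agent, x) >= tau:
            predictions.append(agent.predict(x))  # confident: no adaptation
            continue
        snapshot = copy.deepcopy(agent.weights)  # save the base weights
        try:
            update(agent, synthesize(x, k))  # temporary, instance-specific update
            predictions.append(agent.predict(x))
        finally:
            agent.weights = snapshot  # reset so adaptation never leaks across inputs
    return predictions


if __name__ == "__main__":
    agent = ToyAgent(weights={"weather": "weather-answer"})
    preds = tt_si(agent, ["weather:today?", "stocks:AAPL price?"],
                  toy_uncertainty, toy_synthesize, toy_update, tau=0.5, k=3)
    print(preds)          # ['weather-answer', 'stocks-answer']
    print(agent.weights)  # {'weather': 'weather-answer'}: base weights restored
```

The `try`/`finally` guarantees the reset even if an update or prediction fails. Replacing `update` with a function that places the synthetic pairs in the prompt as demonstrations, instead of changing weights, would roughly correspond to the training-free ICL variant.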

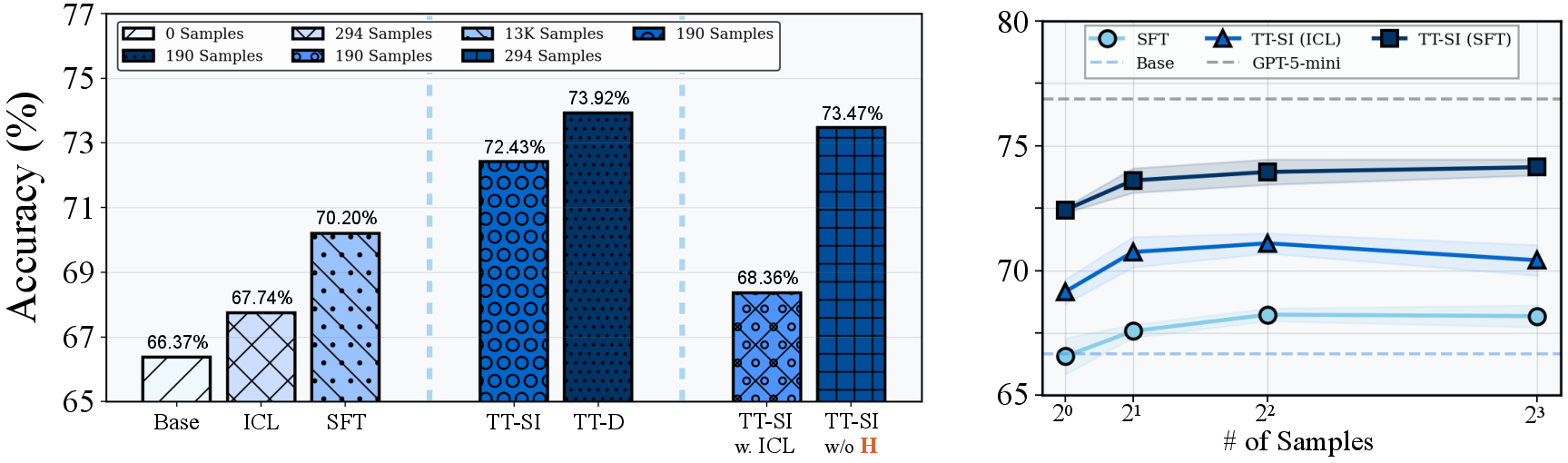

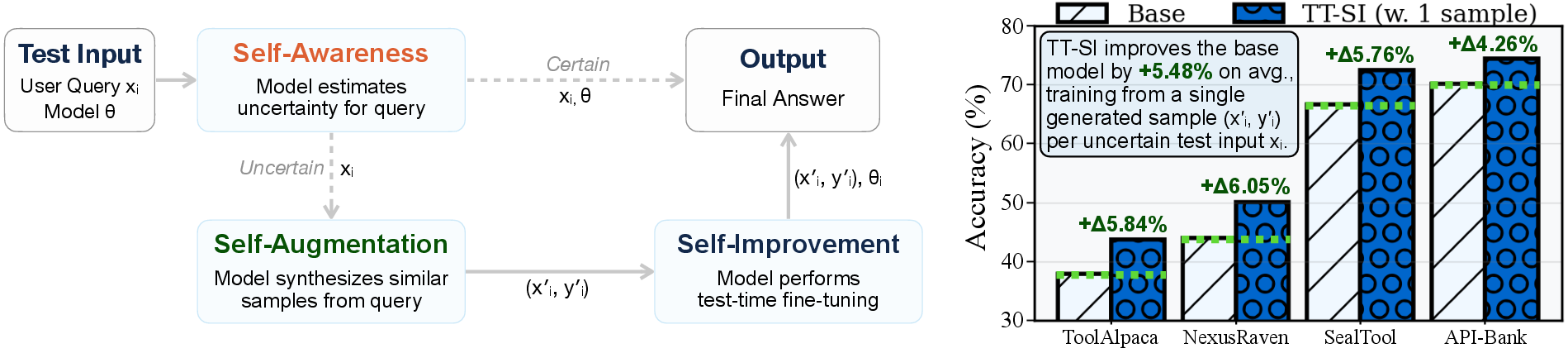

Figure 2: TT-SI accuracy and scaling behavior on SealTool, including ablations and sample efficiency analysis.

Empirical Results

TT-SI is evaluated on four agentic benchmarks: NexusRaven, SealTool, API-Bank, and ToolAlpaca, using Qwen2.5-1.5B-Instruct and Qwen2.5-7B-Instruct. Key findings include:

- Absolute accuracy gains: TT-SI improves baseline prompting by +5.48% on average, with consistent gains across all benchmarks.

- Sample efficiency: On SealTool, TT-SI surpasses the accuracy of supervised fine-tuning (SFT) while using 68× fewer training samples (190 vs. roughly 13k).

- Test-time distillation (TT-D): Using a stronger teacher for data synthesis yields further improvements, especially in context-heavy scenarios.

- ICL variant: When training is infeasible, TT-SI with in-context learning (ICL) offers a training-free alternative, outperforming standard ICL baselines.
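As a quick check of the 68× figure, assuming "13k" stands for roughly 13,000 SFT training samples:

$$
\frac{N_{\text{SFT}}}{N_{\text{TT-SI}}} \approx \frac{13{,}000}{190} \approx 68.4
$$

which rounds to the reported 68×.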

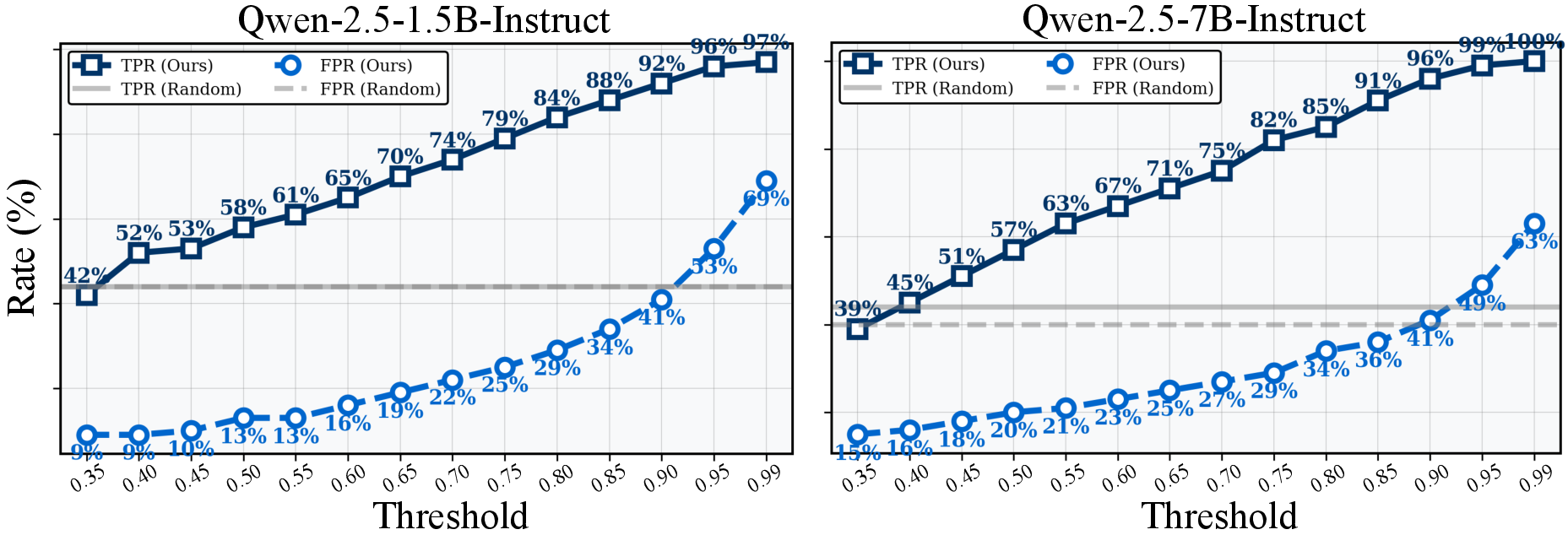

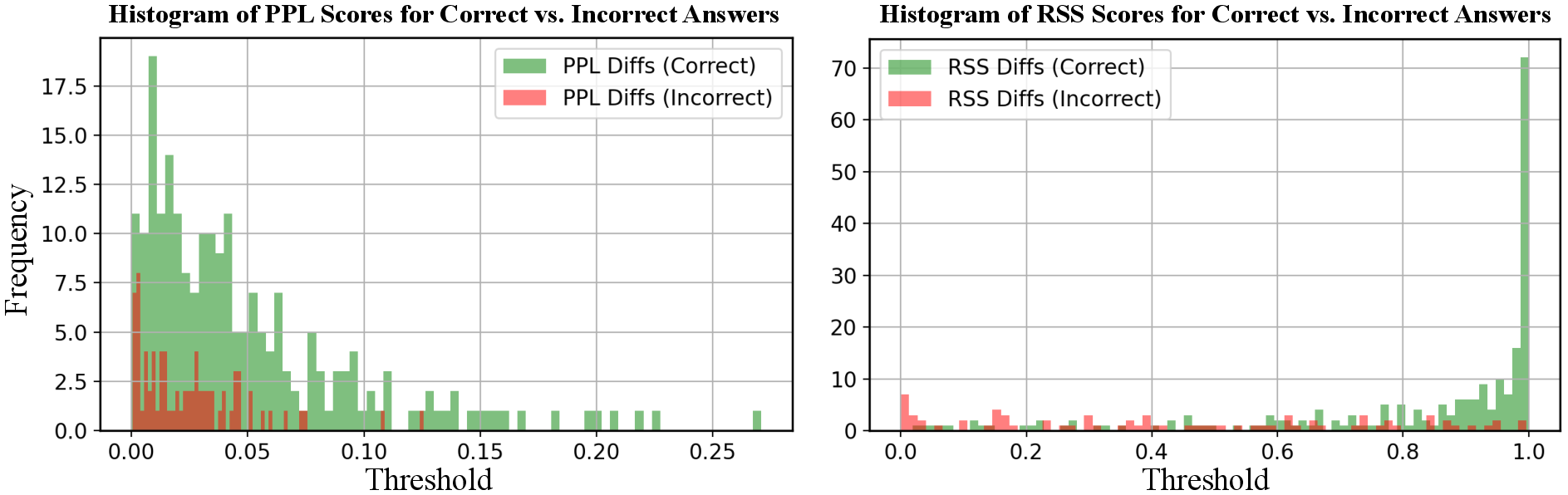

- Uncertainty filtering: Ablations show that focusing adaptation on uncertain samples yields near-optimal accuracy with reduced computational cost, and the choice of τ controls the trade-off between coverage and efficiency.

Figure 3: RSS-based uncertainty estimation yields clearer separation between correct and incorrect predictions compared to perplexity-based baselines.

Figure 4: Trade-off between true positive rate and false positive rate as the uncertainty threshold τ varies.

Ablation and Analysis
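The τ trade-off in Figure 4 can be computed on any set of scored predictions. A minimal sketch, assuming a "positive" is an input the base model answers incorrectly, so TPR measures how many errors get flagged for adaptation and FPR how many already-correct inputs are adapted on needlessly. The helper `flag_rates` and the margins and labels are hypothetical, not data from the paper.

```python
from typing import Sequence


def flag_rates(margins: Sequence[float], correct: Sequence[bool], tau: float) -> tuple[float, float]:
    """Return (TPR, FPR) of the rule 'flag x as uncertain when u(x) < tau'.

    Assumption: a positive is a wrong base-model prediction, so
    TPR = share of wrong predictions that get flagged, and
    FPR = share of correct predictions that get flagged (wasted adaptation).
    """
    flagged_wrong = sum(1 for m, c in zip(margins, correct) if m < tau and not c)
    flagged_right = sum(1 for m, c in zip(margins, correct) if m < tau and c)
    n_wrong = sum(1 for c in correct if not c)
    n_right = len(correct) - n_wrong
    tpr = flagged_wrong / n_wrong if n_wrong else 0.0
    fpr = flagged_right / n_right if n_right else 0.0
    return tpr, fpr


if __name__ == "__main__":
    # Hypothetical margins u(x) and whether the base model answered correctly.
    margins = [0.05, 0.10, 0.20, 0.35, 0.60, 0.80, 0.90, 0.95]
    correct = [False, False, True, False, True, True, True, True]
    for tau in (0.1, 0.3, 0.5, 1.0):
        tpr, fpr = flag_rates(margins, correct, tau)
        flagged = sum(m < tau for m in margins)
        print(f"tau={tau:.1f}  TPR={tpr:.2f}  FPR={fpr:.2f}  flagged={flagged}")
```

Raising τ flags more inputs, which raises TPR but also FPR and the number of costly adaptation runs, mirroring the coverage/efficiency trade-off described for uncertainty filtering.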

Implementation and Resource Considerations

TT-SI is implemented using HuggingFace Transformers for uncertainty estimation, vLLM for data generation and inference, and LLaMA-Factory for LoRA-based fine-tuning. The average latency is 7.3 s per uncertain sample (the samples that go through synthesis and fine-tuning), and the overall wall-clock time remains lower than that of SFT. The framework is compatible with public agentic benchmarks and can be extended to other domains.

Theoretical and Practical Implications

TT-SI demonstrates that LLM agents can self-improve during inference by leveraging uncertainty-guided adaptation and self-generated data, surfacing latent knowledge without external supervision. This challenges the necessity of large-scale retraining and opens new directions for efficient, lifelong agent learning. The modular design allows for future integration of improved uncertainty estimators, adaptive data generation, and co-evolutionary training setups.

Limitations include sensitivity to the uncertainty threshold τ and the inherent knowledge boundary of the base model. TT-SI cannot recover information absent from the pretrained weights, suggesting the need for retrieval or external augmentation in such cases.

Conclusion

The TT-SI framework provides a principled, efficient approach for test-time adaptation of LLM agents, achieving strong empirical gains with minimal data and compute. By integrating self-awareness, targeted self-augmentation, and lightweight self-improvement, TT-SI advances the paradigm of self-improving agents and lays the groundwork for future research in self-evolving, lifelong learning systems. The results highlight both the practical utility and theoretical significance of uncertainty-driven, modular test-time learning for agentic NLP tasks.