Overview of Agent-R: Training LLM Agents to Reflect via Iterative Self-Training

The paper presents Agent-R, a framework that improves LLM agents in interactive environments through iterative self-training and reflection. It targets a core weakness of language agents: recovering from their own errors and adapting their decisions during complex, multi-turn interactions.

LLM agents have become central to complex tasks that require autonomous decision-making and error recovery. However, most existing methods rely on behavior cloning from expert demonstrations. Because agents trained this way see mostly correct behavior, they are poorly prepared for real-world settings where mistakes occur mid-task and carry over into later steps. What is needed is a mechanism that lets agents identify and correct errors as they happen, rather than relying only on post hoc feedback.

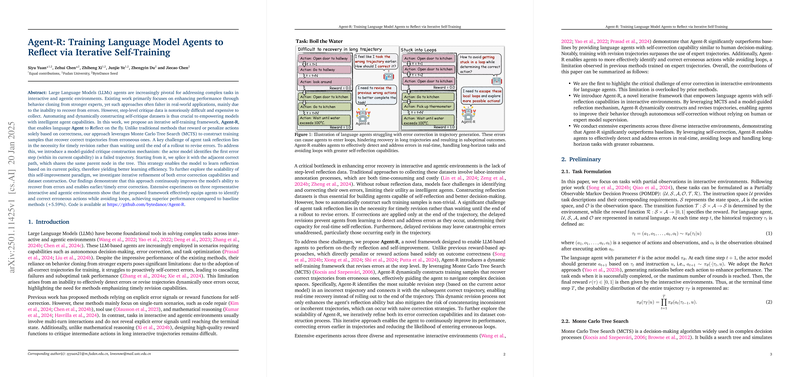

Agent-R trains agents through a self-reflective mechanism: the agent assesses its own actions and adjusts its behavior based on what went wrong. At the core of the approach is Monte Carlo Tree Search (MCTS), which is used to construct corrective trajectories by splicing an erroneous path together with a correct one. The correction is placed where the agent goes wrong, rather than at the end of a failed episode, where correction often proves ineffective, so the agent learns to change course immediately.
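The MCTS component can be pictured with a minimal, generic UCT search. This is a sketch under stated assumptions, not the paper's implementation: the task is a hypothetical three-step toy with actions `a`/`b`/`c` and a reward equal to the fraction of steps matching a hidden target, whereas in Agent-R the nodes would be agent actions in a real environment. The names `ACTIONS`, `TARGET`, `reward`, and `split_good_bad`, and the success threshold of 1.0, are all illustrative assumptions.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

ACTIONS = ("a", "b", "c")   # hypothetical toy action space
TARGET = ("a", "b", "c")    # hypothetical hidden "correct" action sequence
DEPTH = len(TARGET)


def reward(traj: Tuple[str, ...]) -> float:
    # Toy terminal reward: fraction of steps that match TARGET (stand-in for an env score).
    return sum(x == y for x, y in zip(traj, TARGET)) / DEPTH


@dataclass
class Node:
    prefix: Tuple[str, ...]
    parent: Optional["Node"] = None
    children: Dict[str, "Node"] = field(default_factory=dict)
    visits: int = 0
    value: float = 0.0

    def is_terminal(self) -> bool:
        return len(self.prefix) == DEPTH

    def uct_child(self, c: float = 1.4) -> "Node":
        # UCT: mean value plus an exploration bonus that shrinks as a child is visited more.
        return max(
            self.children.values(),
            key=lambda ch: ch.value / ch.visits
            + c * math.sqrt(math.log(self.visits) / ch.visits),
        )


def mcts(n_iter: int = 200, seed: int = 0):
    rng = random.Random(seed)
    root = Node(prefix=())
    finished: Dict[Tuple[str, ...], float] = {}  # every full trajectory seen -> reward
    for _ in range(n_iter):
        node = root
        # 1. Selection: descend through fully expanded, non-terminal nodes.
        while not node.is_terminal() and len(node.children) == len(ACTIONS):
            node = node.uct_child()
        # 2. Expansion: add one untried action as a new child.
        if not node.is_terminal():
            action = rng.choice([a for a in ACTIONS if a not in node.children])
            child = Node(prefix=node.prefix + (action,), parent=node)
            node.children[action] = child
            node = child
        # 3. Simulation: finish the trajectory with random actions.
        traj = node.prefix
        while len(traj) < DEPTH:
            traj += (rng.choice(ACTIONS),)
        r = reward(traj)
        finished[traj] = r
        # 4. Backpropagation: update visit counts and value sums up to the root.
        while node is not None:
            node.visits += 1
            node.value += r
            node = node.parent
    return root, finished


def split_good_bad(finished, threshold: float = 1.0):
    # Partition explored trajectories into successes and failures by reward.
    good = [t for t, r in finished.items() if r >= threshold]
    bad = [t for t, r in finished.items() if r < threshold]
    return good, bad


root, finished = mcts()
good, bad = split_good_bad(finished)
```

The search yields a pool of successful and failed trajectories that share prefixes in the tree, which is the raw material the splicing step needs.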

The framework has two phases: model-guided generation of reflection trajectories, and iterative self-training on the trajectories constructed this way. In the first phase, MCTS explores possible decision paths, producing both successful and failed trajectories. An actor model then locates the first erroneous step in a failed trajectory, that is, the transition point where the path turns from effective to ineffective. The failed trajectory up to that point is spliced with a correct continuation to form a correction trajectory.
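The splicing step can be illustrated with a small sketch. It assumes trajectories are lists of action strings that share a prefix before diverging, and that the first error is reported by a judge function standing in for the actor model. The exact splice used here (failed prefix through the first error, a revision thought, then the correct trajectory from the branch point), the `REVISION_THOUGHT` text, and every function name are hypothetical.

```python
from typing import Callable, List, Sequence

# Hypothetical wording for the revision step inserted at the splice point.
REVISION_THOUGHT = "Thought: my previous action was a mistake; I should revise my plan."


def common_prefix_len(a: Sequence[str], b: Sequence[str]) -> int:
    """Number of leading steps two trajectories share (their branching point)."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def first_error_step(
    bad: Sequence[str],
    is_step_ok: Callable[[Sequence[str], str], bool],
    start: int,
) -> int:
    """Stand-in for the actor model: index of the first step judged wrong, at or after `start`."""
    for i in range(start, len(bad)):
        if not is_step_ok(bad[:i], bad[i]):
            return i
    return len(bad) - 1  # assumption: if nothing is flagged, treat the final step as the error


def splice_revision(
    bad: Sequence[str],
    good: Sequence[str],
    is_step_ok: Callable[[Sequence[str], str], bool],
) -> List[str]:
    """Bad prefix through its first error + revision thought + good continuation from the branch point."""
    k = common_prefix_len(bad, good)
    if k >= len(bad) or k >= len(good):
        raise ValueError("trajectories must diverge before either one ends")
    e = first_error_step(bad, is_step_ok, start=k)
    return list(bad[: e + 1]) + [REVISION_THOUGHT] + list(good[k:])


# Usage with a hypothetical household task and a hypothetical rule-based judge.
good = ["go to kitchen", "take cup", "fill cup", "finish"]
bad = ["go to kitchen", "open drawer", "take plate", "give up"]
BAD_ACTIONS = {"take plate", "give up"}
revision = splice_revision(bad, good, lambda prefix, action: action not in BAD_ACTIONS)
print(revision)
```

Here the trajectories branch after one step, but the judge flags the error only at `take plate`, so the harmless detour and the mistake are both kept before the revision. The resulting example shows recovery mid-episode rather than only the clean path.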

In the second phase, the agent is trained iteratively on the constructed trajectories. Each round helps it handle more complex error patterns and produce more robust corrections, and the improved model then feeds into the next round, forming a feedback loop between evaluation and learning. The steady improvement across rounds suggests that real-time correction grounded in self-assessment is an effective training signal.
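The iterative loop can be sketched with a deliberately tiny stand-in: a tabular policy instead of an LLM, an oracle `first_error` instead of the actor model, and count updates instead of fine-tuning. It drops the revision-thought text entirely, since a lookup table cannot represent it, and shows only the loop structure: collect with the current model, correct from the first error, train, repeat. All names and parameters are hypothetical.

```python
import random
from collections import Counter
from typing import List, Tuple

ACTIONS = ("a", "b", "c")   # hypothetical toy action space
TARGET = ("a", "b", "c")    # hypothetical correct action at each step


def sample_traj(policy: List[Counter], rng: random.Random) -> Tuple[str, ...]:
    # One rollout from the current tabular policy (stand-in for running the LLM agent).
    return tuple(
        rng.choices(ACTIONS, weights=[policy[t][a] for a in ACTIONS])[0]
        for t in range(len(TARGET))
    )


def first_error(traj):
    # Oracle stand-in for the actor model: first step that deviates from TARGET, or None.
    return next((t for t, a in enumerate(traj) if a != TARGET[t]), None)


def correct_prob(policy, t: int) -> float:
    return policy[t][TARGET[t]] / sum(policy[t].values())


def self_train(n_rounds: int = 3, n_samples: int = 20, seed: int = 0):
    rng = random.Random(seed)
    policy = [Counter({a: 1 for a in ACTIONS}) for _ in TARGET]  # uniform initial model
    history = []
    for _ in range(n_rounds):
        # 1. Collect with the CURRENT model, so each round surfaces that model's own errors.
        trajs = [sample_traj(policy, rng) for _ in range(n_samples)]
        # 2. Build supervision: successes are used whole; failures contribute only the
        #    corrected steps from their first error onward.
        pairs = []
        for traj in trajs:
            e = first_error(traj)
            start = 0 if e is None else e
            pairs.extend((t, TARGET[t]) for t in range(start, len(TARGET)))
        # 3. "Fine-tune": add counts for the supervised (step, action) pairs.
        for t, a in pairs:
            policy[t][a] += 1
        # Record the probability of the correct action at each step after this round.
        history.append([correct_prob(policy, t) for t in range(len(TARGET))])
    return policy, history


policy, history = self_train()
for rnd, probs in enumerate(history, 1):
    print(rnd, [round(p, 3) for p in probs])
```

Because every update adds weight only to correct actions, the correct-action probability at each step can only rise from round to round in this toy; with a real LLM there is no such guarantee.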

Empirically, Agent-R outperforms baseline methods across multiple representative interactive environments, improving performance by 5.59% over the baselines. Agents trained with it correct errors earlier and more precisely, fail less often, and are less prone to getting stuck repeating actions. These results suggest that reflective self-training can make language agents more reliable and efficient, and broaden the range of tasks they can handle.

Beyond its immediate results, the work points toward agents in which learning from mistakes and correcting them is built into normal operation. This aligns with a broader trend in AI toward systems capable of adaptation and self-critique, and may pave the way for more sophisticated autonomous systems.

In conclusion, Agent-R is a significant step toward giving LLM agents reflective capabilities, making them more adaptable and effective on complex, dynamic tasks. As AI continues to evolve, frameworks of this kind are likely to play an important role in shaping how agents interact with their environments, advancing AI research and enabling more efficient task execution.