- The paper presents ELMUR, a novel transformer architecture that integrates external memory tracks at every layer to manage long-term dependencies in partially observable RL tasks.

- It employs bidirectional cross-attention through mem2tok and tok2mem blocks and an LRU-based update mechanism to balance new information with memory stability.

- The approach outperforms baseline models on benchmarks such as T-Maze and POPGym, achieving a perfect success rate on T-Maze and retaining information far beyond the attention window.

ELMUR: External Layer Memory with Update/Rewrite for Long-Horizon RL

Introduction

The paper "ELMUR: External Layer Memory with Update/Rewrite for Long-Horizon RL" presents a transformer architecture designed for long-horizon reinforcement learning (RL) under partial observability. The authors integrate structured external memory into each transformer layer, so that tokens and memory interact persistently and the model can capture long-term dependencies. The approach targets a weakness of existing models, which struggle to retain information over long periods because of limited context windows and poor efficiency at scale.

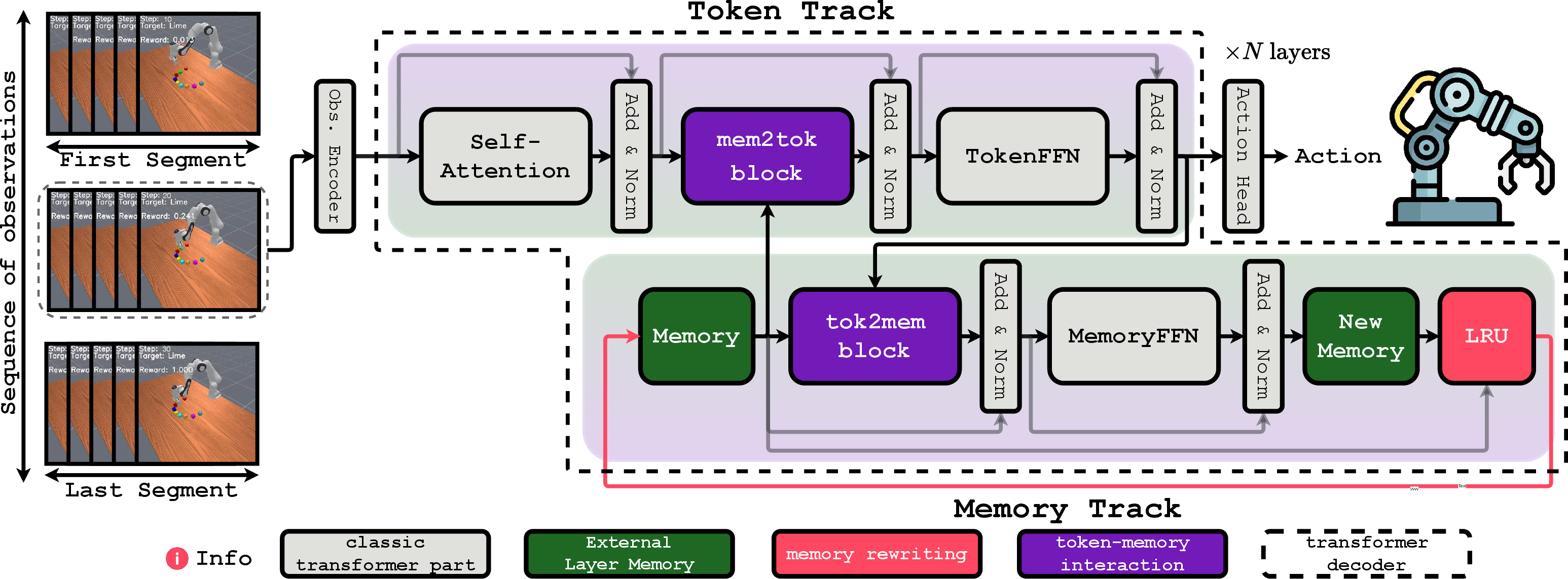

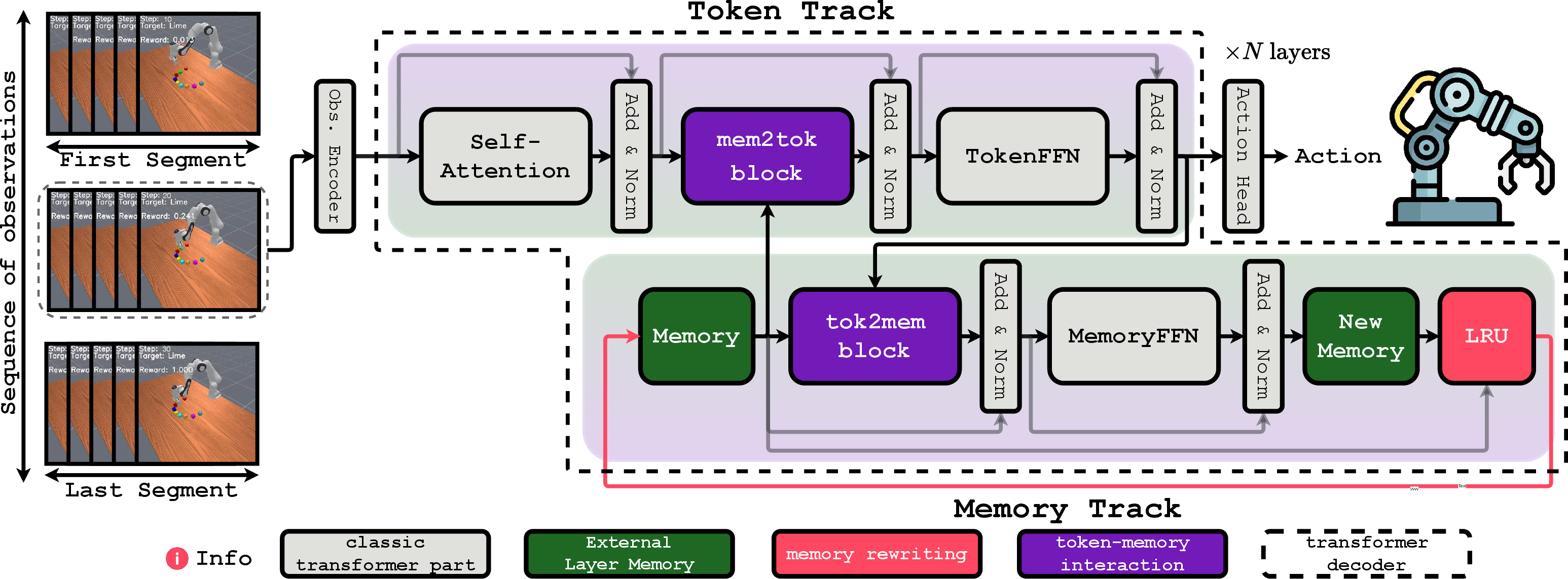

Figure 1: ELMUR overview. Each transformer layer is augmented with an external memory track that runs in parallel with the token track, enabling token-memory interaction and long-horizon recall.

ELMUR Architecture

ELMUR augments each transformer layer with an external memory track that holds structured memory embeddings persisting across segments. Tokens and memory interact through bidirectional cross-attention: the mem2tok block lets tokens read from memory, and the tok2mem block writes token information back into memory. An LRU-based update rule then manages the memory, either replacing slot contents or blending new content into them as a convex combination, which balances stability against adaptability.
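The read/write pattern can be sketched in Python. This is a minimal illustration under simplifying assumptions, not the paper's implementation: a single attention head, no learned query/key/value projections, no normalization, and tokens and memory sharing one embedding size. The function names `cross_attend`, `mem2tok`, and `tok2mem` are hypothetical stand-ins that mirror the block names above.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a list of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attend(queries, keys_values):
    # Single-head scaled dot-product cross-attention with no learned
    # projections (an assumption): keys_values serve as both keys and values.
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys_values]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, keys_values))
                    for j in range(d)])
    return out

def mem2tok(tokens, memory):
    # Read (hypothetical): tokens query memory; the result is added residually.
    read = cross_attend(tokens, memory)
    return [[t + r for t, r in zip(tok, rd)] for tok, rd in zip(tokens, read)]

def tok2mem(memory, tokens):
    # Write (hypothetical): memory slots query the tokens, producing candidate
    # updates that a separate update rule later merges into memory.
    return cross_attend(memory, tokens)

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # 3 tokens, dim 2
memory = [[0.5, 0.5], [0.0, 0.0]]               # 2 memory slots
new_tokens = mem2tok(tokens, memory)            # tokens read from memory
candidates = tok2mem(memory, tokens)            # memory reads from the original tokens
```

Because the all-zero slot scores every token equally, its candidate update is simply the mean of the tokens; learned projections in a real model would make these reads and writes content-dependent.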

Memory Management

The LRU block rewrites memory selectively, targeting the least recently used slots. This lets the model keep integrating new information while memory capacity stays bounded. Because the memory persists across segments, information written early can still be read many segments later, which enables recall far beyond the standard attention window.
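A slot-selection policy of this kind can be sketched as follows. The policy here is an assumption for illustration, not the paper's exact rule: while empty slots remain, a write replaces one outright; once all slots are full, the least recently used slot is updated by convex blending with a hypothetical coefficient `lam`. Recency is tracked on writes only, which is also an assumption.

```python
class LRUMemory:
    """Hypothetical fixed-capacity memory with LRU-style slot updates."""

    def __init__(self, num_slots, dim, lam=0.5):
        assert 0.0 < lam <= 1.0
        self.slots = [[0.0] * dim for _ in range(num_slots)]
        self.filled = [False] * num_slots
        self.last_used = [-1] * num_slots  # step of each slot's latest write
        self.lam = lam
        self.step = 0

    def write(self, update):
        # Prefer an empty slot; otherwise choose the least recently used one.
        empty = [i for i, f in enumerate(self.filled) if not f]
        if empty:
            i = empty[0]
            self.slots[i] = list(update)  # replacement
            self.filled[i] = True
        else:
            i = min(range(len(self.slots)), key=lambda j: self.last_used[j])
            lam = self.lam
            # Convex blend: m <- (1 - lam) * m + lam * u
            self.slots[i] = [(1 - lam) * m + lam * u
                             for m, u in zip(self.slots[i], update)]
        self.last_used[i] = self.step
        self.step += 1
        return i

mem = LRUMemory(num_slots=2, dim=2, lam=0.5)
first = mem.write([1.0, 0.0])   # fills empty slot 0
second = mem.write([0.0, 1.0])  # fills empty slot 1
third = mem.write([0.0, 0.0])   # memory full: blends into slot 0, the LRU slot
```

A small `lam` keeps old contents stable, while `lam = 1.0` turns every write into a full replacement; that knob is one way to picture the stability/adaptability trade-off described above.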

Experimental Results

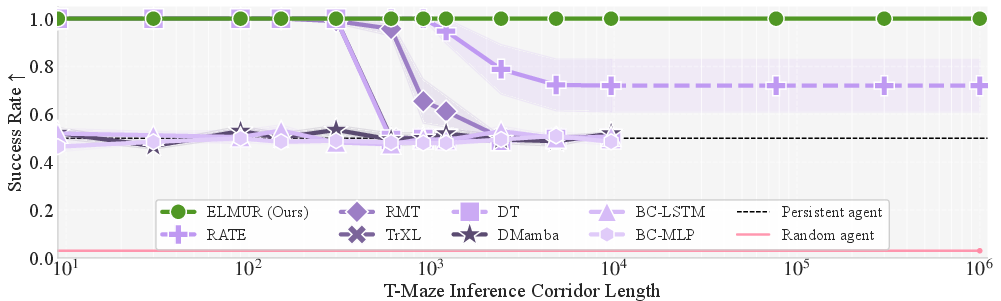

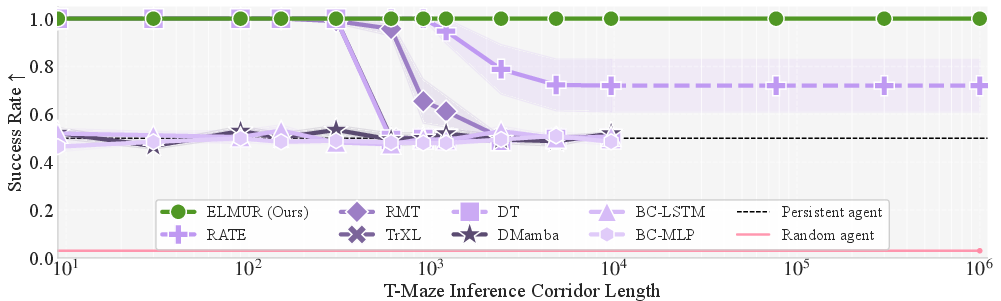

Figure 2: Success rate on the T-Maze task as a function of inference corridor length, demonstrating ELMUR's capability to maintain perfect success rates across long horizons.

Retention Ability

ELMUR's handling of long-term dependencies is demonstrated on the T-Maze task, where it achieves a 100% success rate on corridors of up to one million steps, extending the effective range of token-memory interaction up to 100,000 times beyond the attention window. This retention capability is crucial for tasks that require recalling information far beyond typical context limits.
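As a back-of-the-envelope check, the two figures quoted above together imply an attention window of about ten steps. This is an inference from those numbers rather than a window size stated here, and it assumes the corridor is processed in window-sized segments:

$$
\frac{10^{6}\ \text{steps}}{10^{5}} = 10\ \text{steps per window},
\qquad
\frac{10^{6}\ \text{steps}}{10\ \text{steps per window}} = 10^{5}\ \text{segments}.
$$

Under that reading, the cue shown at the start of the longest corridor has to survive in memory across roughly $10^{5}$ segment boundaries before the agent reaches the junction.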

Comparison with Baselines

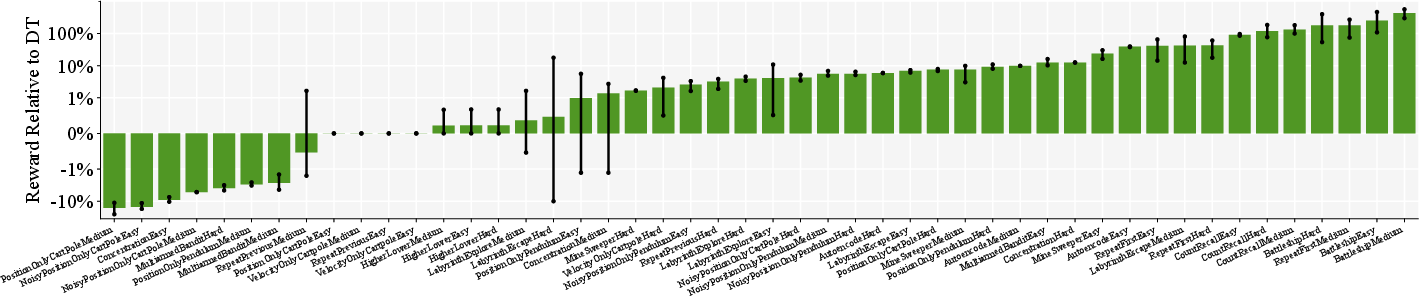

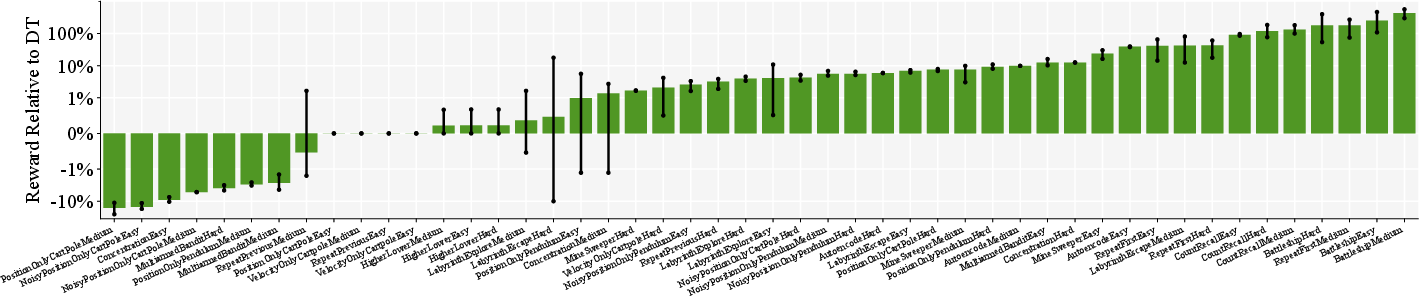

ELMUR consistently outperforms strong baselines across benchmarks including the MIKASA-Robo suite and the POPGym tasks. The architectural changes yield measurable gains in environments with sparse rewards and partial observability.

Figure 3: ELMUR compared to DT (Decision Transformer) on all 48 POPGym tasks, illustrating consistent performance improvements, especially on memory-intensive puzzles.

Theoretical Analysis

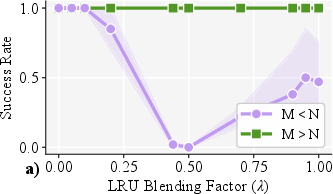

The authors provide a theoretical framework for memory dynamics, establishing formal bounds on forgetting and on retention horizons. Proposition 1 shows that the LRU update leads to exponential forgetting, which makes explicit how the update trades memory stability against the preservation of task-relevant information.
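One way to see where an exponential form comes from, assuming a convex-blend update $m \leftarrow (1-\lambda)\,m + \lambda\,u$ (the symbol $\lambda$ and this exact form are assumptions for illustration; Proposition 1's precise statement and constants are not reproduced here): content written into a slot keeps weight $(1-\lambda)^k$ after $k$ further blends, so it drops below a threshold $\varepsilon$ once $k \ge \ln\varepsilon / \ln(1-\lambda)$. The helpers `residual_weight` and `retention_horizon` are hypothetical names.

```python
import math

def residual_weight(lam, k):
    # Weight of content that has since undergone k convex blends
    # m <- (1 - lam) * m + lam * u; it shrinks geometrically in k.
    return (1.0 - lam) ** k

def retention_horizon(lam, eps):
    # Smallest k with (1 - lam) ** k <= eps, i.e. k >= ln(eps) / ln(1 - lam).
    assert 0.0 < lam < 1.0 and 0.0 < eps < 1.0
    return math.ceil(math.log(eps) / math.log(1.0 - lam))

# With lam = 0.5, old content falls below 1% of its weight after 7 blends;
# with a gentler lam = 0.1 it takes 44.
```

Smaller blending coefficients stretch the horizon roughly in proportion to $1/\lambda$ (since $\ln(1-\lambda) \approx -\lambda$ for small $\lambda$), at the cost of absorbing new information more slowly.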

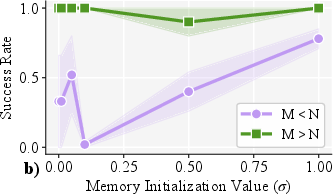

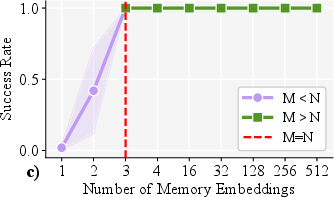

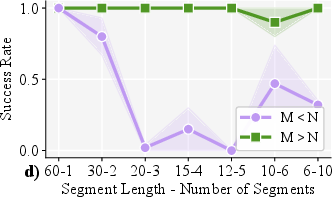

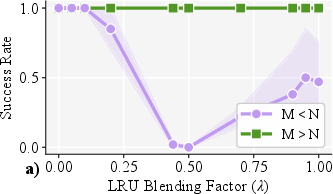

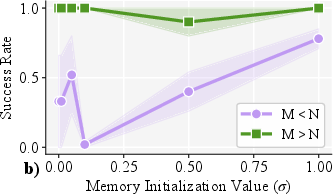

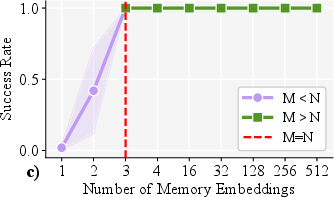

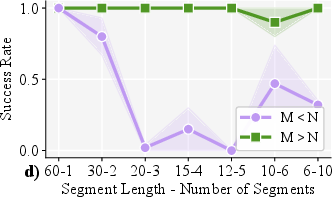

Figure 4: Ablations of ELMUR's memory hyperparameters on RememberColor3-v0, showing sensitivity to memory capacity and various initialization parameters.

Implications and Future Work

The proposed architecture advances the state of the art in RL under partial observability and offers a scalable, efficient approach to decision-making in complex environments that require long-horizon reasoning. Potential applications include robotics, autonomous systems, and strategic planning. Future research could explore adaptive memory mechanisms and deploy ELMUR in real-world robotic settings to further validate its efficacy.

Conclusion

ELMUR introduces a compelling method for integrating structured external memory into transformer layers, effectively addressing long-horizon dependencies in partially observable tasks. Backed by strong empirical results and theoretical guarantees, ELMUR is a promising architecture for advancing RL methods and handling complex decision-making problems.