Associative Recurrent Memory Transformer

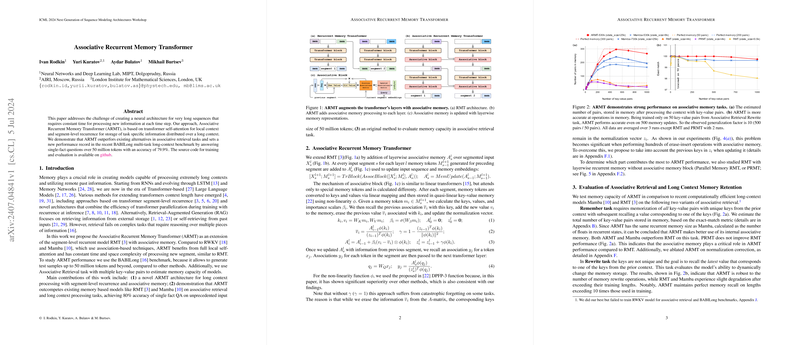

The paper "Associative Recurrent Memory Transformer" by Ivan Rodkin, Yuri Kuratov, Aydar Bulatov, and Mikhail Burtsev introduces a neural architecture designed to process very long sequences efficiently. The proposed model, the Associative Recurrent Memory Transformer (ARMT), combines transformer self-attention and segment-level recurrence with an associative memory component. The architecture aims to handle extremely long contexts with constant time and space complexity for each new segment.

Key Contributions

The main contributions of this work are threefold:

- Novel Architecture: The introduction of ARMT, which incorporates segment-level recurrence and associative memory to improve long-context processing.

- Performance Benchmarking: Demonstration that ARMT outperforms competing models on associative retrieval tasks and on the BABILong multi-task long-context benchmark, setting a new record of 79.9% accuracy on single-fact questions over 50 million tokens.

- Memory Evaluation Method: Development of a new method for evaluating memory capacity in associative retrieval tasks.

Detailed Architecture

The ARMT architecture extends the Recurrent Memory Transformer (RMT) by adding an associative memory mechanism at every transformer layer. This extension is critical for increasing memory capacity and improving the model's ability to process long sequences.
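The segment-level recurrence that ARMT inherits from RMT can be made concrete with a minimal Python sketch of the outer loop. A long sequence is cut into fixed-length segments, and a fixed-size memory state is carried from one segment to the next. The names `split_into_segments`, `process_recurrently`, and `toy_step` are hypothetical. `toy_step` is only a placeholder for a real transformer pass over the memory tokens plus the segment; it is not the model's computation.

```python
from typing import Callable, List, Sequence

Memory = List[float]


def split_into_segments(tokens: Sequence[int], segment_len: int) -> List[List[int]]:
    """Split a token sequence into consecutive segments; the last one may be shorter."""
    if segment_len <= 0:
        raise ValueError("segment_len must be positive")
    return [list(tokens[i:i + segment_len]) for i in range(0, len(tokens), segment_len)]


def process_recurrently(
    tokens: Sequence[int],
    segment_len: int,
    num_mem: int,
    step: Callable[[List[int], Memory], Memory],
) -> Memory:
    """Apply `step` to every segment in order, carrying a fixed-size memory forward."""
    memory: Memory = [0.0] * num_mem  # initial memory state
    for segment in split_into_segments(tokens, segment_len):
        memory = step(segment, memory)
        # The carried state must not grow with the number of segments seen so far.
        if len(memory) != num_mem:
            raise ValueError("step must return a memory of the same size")
    return memory


def toy_step(segment: List[int], memory: Memory) -> Memory:
    """Hypothetical stand-in for one transformer pass over [memory tokens; segment]."""
    # Decay the old memory and mix in a crude summary (the mean) of the current segment.
    summary = sum(segment) / len(segment)
    return [0.5 * m + 0.5 * summary for m in memory]


final_memory = process_recurrently(list(range(10)), segment_len=4, num_mem=2, step=toy_step)
```

Each call to `step` sees only one segment and a memory of constant size, so the work per segment does not depend on how many tokens came before. That is the property behind the constant per-segment cost described above.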

The mechanism involves:

- Layerwise Associative Memory ($A_s^l$): For each segment $s$ and layer $l$, memory tokens from the preceding segment are added to the associative memory, which is then used to update the input sequence and memory embeddings.

- Memory State Updates: After each segment is processed, its memory tokens are converted into key-value pairs and written into a fixed-size, quasi-linear key-value memory matrix, with a non-linear feature map applied to the keys.

- Recall Mechanism: The associative memory generates associations for each token in the segment, which are used in subsequent layers to maintain context over long sequences.
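The write and recall steps can be illustrated with a small matrix-valued associative memory. This is a sketch under stated assumptions, not ARMT's implementation:

- Keys and values are passed in directly, not projected from memory tokens by learned weights.
- The feature map (ReLU followed by L2 normalization) is an assumed stand-in, and ARMT's exact non-linearity and normalization may differ.
- The update is a delta rule in the style of fast-weight memories.

The class and method names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def feature_map(x: Vector) -> Vector:
    """Assumed feature map: ReLU, then L2 normalization (zero vectors are returned unchanged)."""
    relu = [max(v, 0.0) for v in x]
    norm = math.sqrt(sum(v * v for v in relu))
    return [v / norm for v in relu] if norm > 0 else relu


class AssociativeMemory:
    """Fixed-size key-value memory matrix updated with a delta rule (a sketch)."""

    def __init__(self, key_dim: int, value_dim: int, beta: float = 1.0) -> None:
        # A is value_dim x key_dim; its shape never changes, however many pairs are written.
        self.A: List[Vector] = [[0.0] * key_dim for _ in range(value_dim)]
        self.beta = beta  # write strength; 1.0 fully replaces the old association

    def _apply(self, phi: Vector) -> Vector:
        """Matrix-vector product A @ phi."""
        return [sum(a * p for a, p in zip(row, phi)) for row in self.A]

    def write(self, key: Vector, value: Vector) -> None:
        """Move what the memory returns for `key` toward `value` (delta rule)."""
        phi = feature_map(key)
        current = self._apply(phi)  # value currently associated with this key
        for i, row in enumerate(self.A):
            delta = self.beta * (value[i] - current[i])
            for j, p in enumerate(phi):
                row[j] += delta * p  # rank-1 update: delta * phi^T

    def read(self, query: Vector) -> Vector:
        """Recall: retrieve the association for a query vector."""
        return self._apply(feature_map(query))


mem = AssociativeMemory(key_dim=3, value_dim=2)
mem.write([1.0, 0.0, 0.0], [1.0, 2.0])
mem.write([0.0, 1.0, 0.0], [3.0, 4.0])
mem.write([1.0, 0.0, 0.0], [5.0, 6.0])  # same key again: overwrite, not accumulate
```

After these writes, `mem.read([1.0, 0.0, 0.0])` returns `[5.0, 6.0]` and `mem.read([0.0, 1.0, 0.0])` returns `[3.0, 4.0]`. The delta rule replaces the stale value instead of summing with it, which is the behavior the Rewrite task below probes. Because the matrix has a fixed shape, capacity is bounded: with non-orthogonal keys, stored associations start to interfere.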

Performance and Evaluation

Associative Retrieval Tasks

The memory capacity of ARMT was evaluated against models like Mamba and RMT using two associative retrieval tasks:

- Remember Task: Required the model to memorize and recall key-value pairs from prior context. ARMT outperformed other models by making efficient use of its internal associative memory, demonstrating superior capacity.

- Rewrite Task: Focused on recalling the latest value for non-unique keys, testing the model's ability to handle dynamic memory storage. ARMT remained accurate even after many memory updates, whereas RMT and Mamba showed slight degradation.
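The two tasks can be sketched as a data generator. The paper's exact input format (key and value lengths, tokenization, how the query is presented) is not described here. The integer keys and values, the function names, and the `key_space`/`value_space` parameters below are therefore all hypothetical. Only one rule is taken from the task descriptions: the target is the value paired with the query key, and for Rewrite it is the most recent such value.

```python
import random
from typing import List, Tuple

Pair = Tuple[int, int]


def expected_answer(pairs: List[Pair], query: int) -> int:
    """Most recent value written for `query` (the only one when keys are unique)."""
    for key, value in reversed(pairs):
        if key == query:
            return value
    raise KeyError(query)


def make_remember_example(num_pairs: int, key_space: int, value_space: int,
                          rng: random.Random) -> Tuple[List[Pair], int, int]:
    """Remember-style example: every key appears once; recall the value of one of them."""
    keys = rng.sample(range(key_space), num_pairs)  # unique keys; requires num_pairs <= key_space
    pairs = [(k, rng.randrange(value_space)) for k in keys]
    query = rng.choice(keys)
    return pairs, query, expected_answer(pairs, query)


def make_rewrite_example(num_pairs: int, key_space: int, value_space: int,
                         rng: random.Random) -> Tuple[List[Pair], int, int]:
    """Rewrite-style example: keys are drawn with replacement, so later pairs overwrite earlier ones."""
    pairs = [(rng.randrange(key_space), rng.randrange(value_space)) for _ in range(num_pairs)]
    query = rng.choice([k for k, _ in pairs])
    return pairs, query, expected_answer(pairs, query)
```

A sequence model would see the pairs followed by the query and be scored on predicting the expected answer. Increasing `num_pairs` is one simple way to stress how many associations fit in memory. It is not presented as the paper's capacity-evaluation method.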

BABILong Benchmark

ARMT was tested on the BABILong benchmark, which includes tasks requiring reasoning over extremely long sequences of up to 50 million tokens. ARMT generalized to these lengths with unprecedented accuracy on several tasks:

- Achieved 79.9% accuracy on QA1 single supporting fact with inputs up to 50 million tokens.

- Performed strongly on multi-hop reasoning tasks with contexts of up to 10 million tokens.

Implications and Future Work

The implications of this research are significant for tasks involving long-range dependencies and large-scale sequence processing. Because ARMT processes each new segment at constant time cost, it opens up new possibilities for natural language processing applications, including large-scale document analysis, historical data processing, and advanced language modeling.

Future research could explore:

- Scaling ARMT to larger models to evaluate its potential in high-resource settings.

- Optimizing the training procedures to further improve performance on language modeling tasks.

- Integrating ARMT with other advanced architectures to enhance its applicability and efficiency.

Conclusion

In conclusion, the Associative Recurrent Memory Transformer represents a significant advance in long-context neural processing. By combining segment-level recurrence with associative memory, ARMT sets a new standard on associative retrieval tasks and in the processing of very long sequences. Its architecture and strong performance on the BABILong benchmark underscore its potential for complex, long-range tasks and for future research in artificial intelligence.