- The paper demonstrates how neuroscience principles inspire the integration of multi-timescale memory into Transformer models.

- It categorizes memory augmentation based on functional objectives, memory types, and integration techniques, leading to improved context handling.

- Scalable operations like associative retrieval and surprise-gated updates enable enhanced continual learning and stable inference.

Transformers excel at sequence modeling but struggle to capture long-range dependencies, because the cost of self-attention grows quadratically with sequence length. This limits their capacity for continual learning and adaptation to new contexts, in stark contrast to human cognitive systems. The paper systematically reviews how neuroscience principles, such as multi-timescale memory and selective attention, inspire extensions to Transformer architectures that turn them into dynamic, memory-augmented systems capable of more sophisticated tasks.
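To make the quadratic cost concrete, the following minimal sketch counts query-key score entries for dense self-attention and for a causal sliding window. The window scheme and the function names are illustrative assumptions, not something the paper specifies.

```python
def full_attention_pairs(n: int) -> int:
    """Score entries in dense self-attention: every token scores every token."""
    return n * n


def sliding_window_pairs(n: int, window: int) -> int:
    """Score entries when each token attends to itself and to at most
    `window` preceding tokens (a hypothetical sparse pattern)."""
    return sum(min(i, window) + 1 for i in range(n))


for n in (1_000, 10_000):
    # Dense cost grows with n squared; windowed cost grows roughly with n * window.
    print(n, full_attention_pairs(n), sliding_window_pairs(n, window=256))
```

Growing the sequence tenfold multiplies the dense count by one hundred, while the windowed count grows roughly tenfold.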

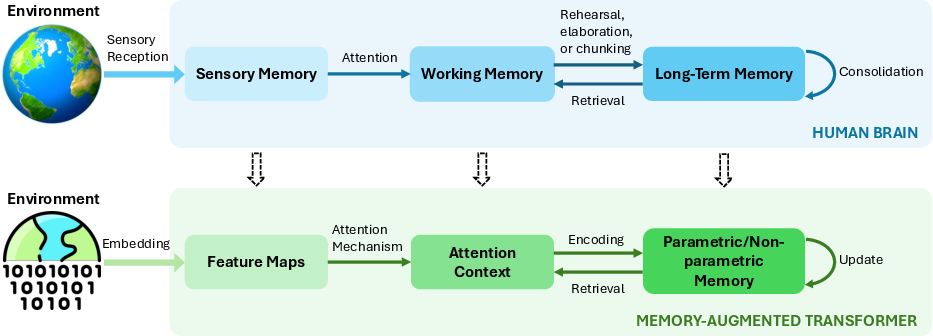

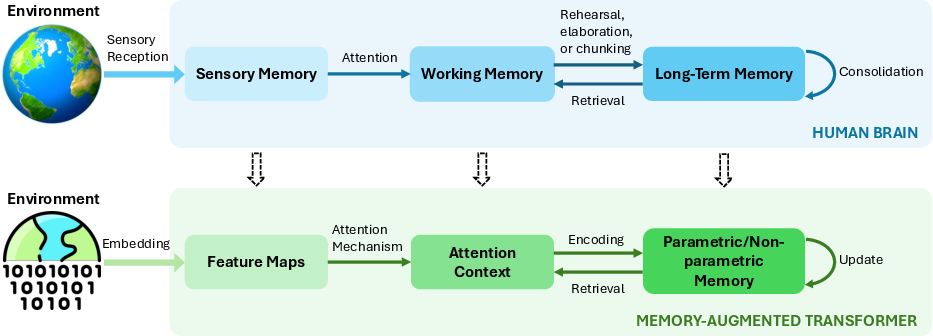

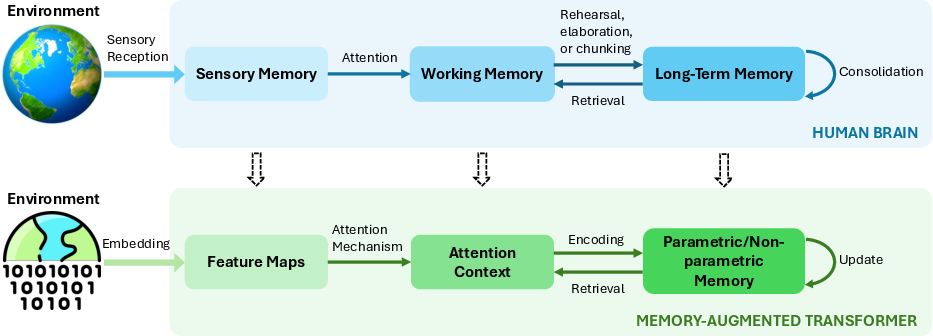

Figure 1: Parallels between the memory systems in the human brain and memory-augmented Transformers.

Biological Memory Systems and their Inspiration

Human memory comprises sensory, working, and long-term subsystems, each optimized for different temporal and processing requirements. Sensory memory acts as a transient buffer for immediate perceptual input, working memory serves as a limited-capacity workspace for active processing, and long-term memory consolidates information for prolonged retention. These systems communicate through intricate cortical and subcortical loops to encode, consolidate, and retrieve information—a blueprint increasingly mirrored in memory-augmented Transformers.

Transformers have begun to implement similar hierarchical structures, wherein embeddings and attention mechanisms allow for efficient storage and access to contextually relevant information across various scales. This alignment fosters models that can mimic human-like cognitive flexibility and adaptability, providing vital insights into constructing more efficient learning systems.

Taxonomy and Functional Objectives

The paper categorizes memory-augmented Transformers along three dimensions: functional objectives, memory types, and integration techniques. Each dimension addresses specific AI challenges:

- Functional Objectives:

  - Context Extension: Techniques such as token pruning and sparse attention extend the context length.

  - Reasoning Enhancement: Models like MemReasoner use external memories for iterative inference, crucial for tasks like question answering.

  - Knowledge Integration: Systems like EMAT integrate structured knowledge via fast retrieval mechanisms, improving tasks requiring extensive domain knowledge.

- Memory Types:

  - Parameter-Encoded: Directly stores knowledge within model weights, inspired by biological synaptic consolidation.

  - State-Based: Utilizes persistent activations for context preservation over processing steps.

  - Explicit Storage: Employs external modules for scalable, persistent information storage and retrieval, analogous to hippocampal indexing.

- Integration Techniques:

  - Attention-Based Fusion: Combines live inputs with memory content through cross-attention.

  - Gated Control: Mimics neuromodulatory gating, selectively writing and maintaining information.

  - Associative Memory: Enables content-addressable recall, crucial for relational and pattern completion tasks.
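Two of the integration techniques listed above, attention-based fusion and gated control, can be sketched in a few lines. This is a toy illustration in plain Python, not the mechanism of any specific model in the paper: the function names, the two-slot memory, and the fixed scalar gate are all assumptions (a real model would learn the gate and use projected queries, keys, and values).

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention_read(query, mem_keys, mem_values):
    """Content-addressable read: weight each memory value by the scaled
    dot-product similarity between the query and that slot's key."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in mem_keys])
    dim = len(mem_values[0])
    read = [sum(w * v[d] for w, v in zip(weights, mem_values)) for d in range(dim)]
    return read, weights


def gated_fuse(hidden, read, gate_logit):
    """Blend the live hidden state with the memory read-out.
    The scalar sigmoid gate is a stand-in for a learned gate."""
    g = 1.0 / (1.0 + math.exp(-gate_logit))
    return [g * r + (1.0 - g) * h for h, r in zip(hidden, read)]


# Hypothetical two-slot memory with orthogonal keys.
mem_keys = [[1.0, 0.0], [0.0, 1.0]]
mem_values = [[10.0, 0.0], [0.0, 10.0]]
hidden = [4.0, 0.0]  # live input, aligned with the first key

read, weights = cross_attention_read(hidden, mem_keys, mem_values)
fused = gated_fuse(hidden, read, gate_logit=0.0)  # gate of 0.5: equal blend
print(weights, fused)
```

Because the query aligns with the first key, most of the attention weight lands on the first slot. The gate then decides how much of that read-out replaces the live state.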

Core Memory Operations

Core operations in memory-augmented Transformers include reading, writing, forgetting, and capacity optimization:

- Reading: Advanced systems implement constant-time associative retrieval, scaling recall for long-context tasks.

- Writing: Decouples write triggers from immediate computation, allowing for surprise-gated updates that preserve model stability.

- Forgetting: Intelligent decay mechanisms selectively prune irrelevant information, mitigating memory saturation risks.

- Capacity Optimization: Techniques like hierarchical buffering and compression maintain scalable inference across lengthy sequences.

These operations, influenced by biological analogs, progressively refine memory dynamics to support continual learning and robust context management.
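The four operations can be sketched together as a small slot memory. Everything here is an illustrative assumption rather than the paper's method: the `SlotMemory` class, its thresholds, the use of cosine similarity as a familiarity signal, and the refresh-on-read rule. Reads in this sketch are a linear scan, not the constant-time retrieval described above.

```python
import math


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


class SlotMemory:
    """Hypothetical key-value memory with surprise-gated writes,
    multiplicative decay, and weakest-slot eviction."""

    def __init__(self, capacity=4, surprise_threshold=0.3, decay=0.9, floor=0.05):
        self.capacity = capacity
        self.surprise_threshold = surprise_threshold
        self.decay = decay
        self.floor = floor
        self.slots = []  # each slot is [key, value, strength]

    def read(self, query):
        """Return (value, similarity) of the best-matching slot."""
        if not self.slots:
            return None, 0.0
        best = max(self.slots, key=lambda s: cosine(query, s[0]))
        best[2] = 1.0  # assumption: a successful read refreshes the slot
        return best[1], cosine(query, best[0])

    def write(self, key, value):
        """Store the pair only if the key is sufficiently novel."""
        familiarity = max((cosine(key, s[0]) for s in self.slots), default=0.0)
        surprise = 1.0 - familiarity
        if surprise <= self.surprise_threshold:
            return False  # too similar to existing content: skip the write
        if len(self.slots) >= self.capacity:
            # Capacity management: evict the slot with the lowest strength.
            self.slots.remove(min(self.slots, key=lambda s: s[2]))
        self.slots.append([key, value, 1.0])
        return True

    def forget(self):
        """Decay every slot and prune those that fall below the floor."""
        for s in self.slots:
            s[2] *= self.decay
        self.slots = [s for s in self.slots if s[2] >= self.floor]


mem = SlotMemory(capacity=2)
wrote_a = mem.write([1.0, 0.0], "A")       # novel key
wrote_dup = mem.write([0.99, 0.05], "A2")  # near-duplicate of A
wrote_b = mem.write([0.0, 1.0], "B")       # novel key
mem.forget()                               # both slots decay
value, sim = mem.read([1.0, 0.0])          # refreshes slot A
wrote_c = mem.write([-1.0, 0.0], "C")      # memory full: weakest slot is evicted
print(wrote_a, wrote_dup, wrote_b, wrote_c, [s[1] for s in mem.slots])
```

The design choice worth noting is that the write decision depends on how surprising the key is relative to stored content, not on whether the current step needs the value, which is one reading of decoupling write triggers from immediate computation.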

Challenges and Future Directions

Despite advancements, memory-augmented Transformers face scalability and interference challenges. Retrieval efficiency often degrades as memory size increases, highlighting the need for more efficient indexing mechanisms. Additionally, interference from concurrently accessed memory entries can jeopardize performance, necessitating sophisticated coordination strategies.
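One generic way to keep retrieval cost from growing with memory size is to hash keys into buckets and compare a query only against its own bucket. The sketch below uses sign-based hashing against fixed hyperplanes, a simplified form of locality-sensitive hashing. It is offered as an illustration of the indexing idea, not as a technique attributed to the paper, and `BucketIndex` and the hand-picked hyperplanes are hypothetical.

```python
def sign_hash(vec, planes):
    """Bucket id with one bit per hyperplane: 1 if the vector lies on the
    non-negative side of that hyperplane, otherwise 0."""
    return tuple(
        1 if sum(v * p for v, p in zip(vec, plane)) >= 0 else 0
        for plane in planes
    )


class BucketIndex:
    """Hypothetical approximate index: a lookup inspects a single bucket."""

    def __init__(self, planes):
        self.planes = planes
        self.buckets = {}

    def add(self, key, value):
        self.buckets.setdefault(sign_hash(key, self.planes), []).append((key, value))

    def candidates(self, query):
        return self.buckets.get(sign_hash(query, self.planes), [])


# Hand-picked hyperplanes for a 2-D toy example; practical schemes usually sample them randomly.
planes = [[1.0, 0.0], [0.0, 1.0]]
index = BucketIndex(planes)
entries = {"ne": [1.0, 1.0], "nw": [-1.0, 1.0], "sw": [-1.0, -1.0], "se": [1.0, -1.0]}
for name, key in entries.items():
    index.add(key, name)

hits = index.candidates([0.9, 0.8])
print(len(entries), len(hits), [value for _, value in hits])
```

The trade-off is that the lookup is approximate: a stored key that is close to the query but falls on the other side of a hyperplane lands in a different bucket and is missed.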

Future developments aim to foster AI systems with greater cognitive flexibility and lifelong learning capabilities, leveraging test-time adaptation and multimodal memory architectures. Ethical considerations are equally important: the paper calls for system designs that prioritize transparency and give users agency over how memory is used.

Conclusion

Memory-augmented Transformers mark a pivotal step toward integrating cognitive neuroscience principles into AI. By emulating the efficiency and adaptability of human memory, these models not only enhance computational capabilities but also pave the way for more intelligent and context-aware AI systems. Continued research will be vital to bridge the gap between current technological constraints and the ultimate promise of human-like cognition.