- The paper introduces a taxonomy of reasoning topologies (direct, linear chain, and branch-structured) and uses it to organize work on forecasting, anomaly detection, and causal inference.

- It highlights methodologies that integrate LLMs for multimodal fusion, synthetic data generation, and agentic control to improve decision-making processes.

- The survey identifies evaluation challenges and future directions, emphasizing standardized benchmarks and adaptive memory for managing long-term dependencies.

A Survey of Reasoning and Agentic Systems in Time Series with LLMs

Overview of the Paper

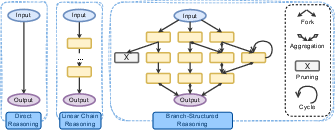

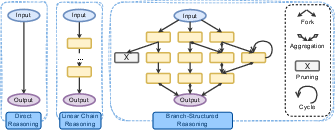

The paper "A Survey of Reasoning and Agentic Systems in Time Series with LLMs" surveys time series reasoning, focusing on how LLMs can improve analysis, forecasting, and decision-making. It classifies approaches into three reasoning topologies (direct reasoning, linear chain reasoning, and branch-structured reasoning) and applies them across four objectives: traditional time series analysis, explanation and understanding, causal inference and decision making, and time series generation.

Reasoning Topologies in Time Series

Direct Reasoning: The simplest form of reasoning maps time series inputs to outputs in a single step. It is efficient, but it exposes no intermediate steps to inspect and handles complex tasks poorly. Direct reasoning is commonly applied to straightforward tasks such as forecasting and anomaly detection.
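
As a minimal sketch of the single-step mapping described above, the following Python treats the model as any callable from a prompt string to a reply string. The names `Model`, `direct_forecast`, and `last_value_stub` are hypothetical and not taken from the paper; the stub stands in for a real LLM call.

```python
from typing import Callable, Sequence

# Assumption: a "model" is any callable from a prompt string to a reply string.
Model = Callable[[str], str]


def direct_forecast(series: Sequence[float], model: Model) -> float:
    """One prompt in, one answer out; no intermediate steps are exposed."""
    prompt = "Series: " + ", ".join(f"{x:g}" for x in series) + "\nNext value:"
    return float(model(prompt))


def last_value_stub(prompt: str) -> str:
    """Toy stand-in for an LLM: repeats the last number listed in the prompt."""
    numbers = prompt.splitlines()[0].split(": ", 1)[1]
    return numbers.split(", ")[-1]


prediction = direct_forecast([1.0, 2.0, 3.5], last_value_stub)
```

The caller receives only the final number, which is what makes this topology cheap but hard to inspect.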

Linear Chain Reasoning: This involves a sequence of steps in which each step depends on the previous one, producing intermediate stages that can be inspected or revised. It offers more transparency and modularity than direct reasoning and is useful for tasks such as structured forecasting and anomaly detection with one-step verification.
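
A linear chain can be illustrated with three dependent steps whose intermediate results are kept in a trace. This is only a sketch: the steps here are simple arithmetic rather than LLM calls, and the function name `linear_chain_forecast` and the clamping rule used for verification are assumptions made for illustration.

```python
from statistics import mean


def linear_chain_forecast(series):
    """Trend -> forecast -> verification, each step using the previous result."""
    trace = []  # inspectable intermediate results, one entry per step

    # Step 1: summarize the trend as the mean first difference.
    diffs = [b - a for a, b in zip(series, series[1:])]
    slope = mean(diffs)
    trace.append(("trend", slope))

    # Step 2: extrapolate one step ahead using the trend from step 1.
    forecast = series[-1] + slope
    trace.append(("forecast", forecast))

    # Step 3: one-step verification, clamping the forecast to the observed
    # range widened by one slope on each side.
    low, high = min(series) - abs(slope), max(series) + abs(slope)
    verified = min(max(forecast, low), high)
    trace.append(("verified", verified))

    return verified, trace


value, steps = linear_chain_forecast([1.0, 2.0, 3.0, 4.0])
```

Because every stage is recorded in `steps`, a reviewer or a later component can check or replace any single stage without rerunning the others.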

Branch-Structured Reasoning: The most complex topology, it allows exploration and parallel hypothesis testing within a single execution. It supports iterative critiques, feedback loops, and fusion of multiple candidates, which improves self-correction and adaptability in agentic control and complex decision-making.

Figure 1: Three types of reasoning topologies: direct reasoning, linear chain reasoning, and branch-structured reasoning. Yellow boxes represent intermediate reasoning steps. Branch-structured reasoning additionally supports four structures: fork, aggregation, pruning, and cycle.
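
The fork, pruning, and aggregation structures named in the caption can be sketched with three toy forecasters competing on a held-out point. The candidate set, the scoring rule, and the name `branch_forecast` are illustrative assumptions, not the paper's method, and the cycle structure is omitted for brevity.

```python
from statistics import mean


def branch_forecast(series, keep=2):
    """Fork candidates, prune by backtest error, aggregate the survivors.

    Assumes the series has at least three points.
    """
    history, target = series[:-1], series[-1]  # hold out the last point

    # Fork: several candidate hypotheses about how the series evolves.
    candidates = {
        "last_value": lambda h: h[-1],
        "mean": mean,
        "drift": lambda h: h[-1] + (h[-1] - h[0]) / (len(h) - 1),
    }

    # Score every branch on the held-out point.
    errors = {name: abs(f(history) - target) for name, f in candidates.items()}

    # Prune: keep only the `keep` branches with the smallest error.
    survivors = sorted(errors, key=errors.get)[:keep]

    # Aggregate: average the survivors' forecasts on the full series.
    forecast = mean(candidates[name](series) for name in survivors)
    return forecast, survivors


forecast, survivors = branch_forecast([1.0, 2.0, 3.0, 4.0, 5.0])
```

In an LLM setting each branch would be a separate reasoning path and the scoring step might be a critique prompt; the control flow stays the same.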

Key Areas of Application

Traditional Time Series Analysis: This includes forecasting, classification, and anomaly detection. Techniques range from zero-shot learning using prompt-engineered models to agent-driven frameworks that integrate multimodal information.

Explanation and Understanding: This area focuses on producing interpretable insights rather than numerical predictions, using methods such as temporal question answering and structure discovery, which can operate within a single reasoning path or use multi-agent branching.

Causal Inference and Decision Making: This area uses LLMs for policy recommendations, drawing on their capacity to model interventions over time and provide structured decision-making support. Branch-structured reasoning is often used here to maintain alternatives and adapt strategies iteratively.

Time Series Generation: This refers to the generation of synthetic time series data under specific conditions, often utilizing branch-structured reasoning to explore and validate multiple generative paths before selecting a coherent output.
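
The idea of exploring several generative paths and validating them before selection can be sketched as follows. Bounded random walks stand in for a generative model here, and the constraint (stay within a bound) and the selection rule (largest range) are assumptions chosen only to make the example concrete.

```python
import random


def generate_candidate(n, step, rng):
    """One generative path: a random walk starting at zero."""
    values = [0.0]
    for _ in range(n - 1):
        values.append(values[-1] + rng.uniform(-step, step))
    return values


def is_valid(values, bound):
    """Condition to satisfy: the series never leaves [-bound, bound]."""
    return all(abs(v) <= bound for v in values)


def generate_with_validation(n=50, step=1.0, bound=5.0, branches=20, seed=0):
    """Explore several paths, discard invalid ones, select one survivor."""
    rng = random.Random(seed)
    candidates = [generate_candidate(n, step, rng) for _ in range(branches)]
    valid = [c for c in candidates if is_valid(c, bound)]
    if not valid:
        return None  # no branch met the condition
    # Selection rule (an assumption): prefer the most varied valid series.
    return max(valid, key=lambda c: max(c) - min(c))


synthetic = generate_with_validation()
```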

Challenges and Future Directions

Evaluation and Benchmarking: Current benchmarks often fail to capture the full application scope due to narrow and simplified datasets. Future efforts should include standardized stress tests and benchmarks that explicitly test reasoning abilities across various domains.

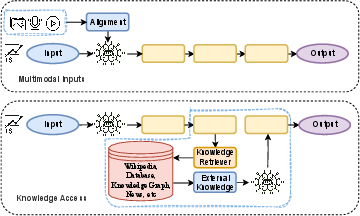

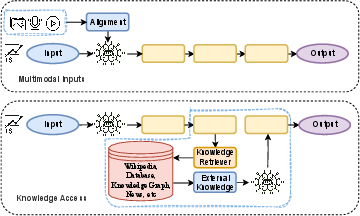

Multimodal Fusion and Alignment: Improving time synchronization and semantic alignment across data types such as numeric sequences and textual descriptions is crucial for enhancing the robustness of fused models.

Figure 2: Information sources: multimodal inputs and external knowledge access.
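
Time synchronization between numeric sequences and textual descriptions can be illustrated with a simple "as-of" alignment: each timestamped text note is attached to the latest numeric sample at or before it. This is a sketch under assumed data shapes (a sorted list of sample times and a list of `(time, text)` pairs); the function name `align_events` is hypothetical.

```python
from bisect import bisect_right


def align_events(sample_times, events):
    """Map sample index -> list of texts whose timestamps fall at or after
    that sample and before the next one. `sample_times` must be sorted."""
    aligned = {}
    for t, text in events:
        i = bisect_right(sample_times, t) - 1  # latest sample at or before t
        if i >= 0:  # events before the first sample are dropped
            aligned.setdefault(i, []).append(text)
    return aligned


sample_times = [0, 10, 20, 30]
events = [(12, "pump restarted"), (30, "alarm"), (-5, "early note")]
aligned = align_events(sample_times, events)
```

Semantic alignment, the other half of the challenge, is not covered by this sketch.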

Long-Term Context and Efficiency: Handling long-term dependencies efficiently remains a challenge. Strategies like adaptive memory and stateful management are promising directions for enabling more scalable applications.
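
One simple form of stateful memory keeps recent observations verbatim and compresses older ones into a running summary, so the context passed to a model stays bounded. The class below is a fixed-policy stand-in for the adaptive schemes mentioned above, not an implementation of any surveyed method; `RollingMemory` and its fields are hypothetical names.

```python
from collections import deque


class RollingMemory:
    """Keep the last `window` values raw; fold older values into a mean."""

    def __init__(self, window):
        self.recent = deque(maxlen=window)  # window must be at least 1
        self.old_count = 0
        self.old_mean = 0.0

    def add(self, x):
        if len(self.recent) == self.recent.maxlen:
            # The oldest raw value is about to be evicted: update the
            # running mean incrementally before it is lost.
            evicted = self.recent[0]
            self.old_count += 1
            self.old_mean += (evicted - self.old_mean) / self.old_count
        self.recent.append(x)

    def context(self):
        """Bounded-size state that could be serialized into a prompt."""
        return {
            "old_count": self.old_count,
            "old_mean": self.old_mean,
            "recent": list(self.recent),
        }


memory = RollingMemory(window=3)
for value in [1.0, 2.0, 3.0, 4.0, 5.0]:
    memory.add(value)
```

The state size is constant regardless of how long the stream runs, which is the property that matters for long horizons.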

Agentic Control and Tool Use: The integration of tool usage within reasoning processes extends capabilities but requires effective coordination and control strategies to ensure robustness and actionable insights.
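
The coordination concern can be made concrete with a small controller that runs a plan of tool calls under a step budget and records failures instead of crashing. In a real agent the plan would be chosen by the LLM; here it is a fixed list, and the tool registry `TOOLS` and function `run_plan` are hypothetical names used only for this sketch.

```python
from statistics import mean, pstdev

# Assumed tool registry: name -> function over a list of floats.
TOOLS = {
    "mean": mean,
    "zscore_last": lambda xs: (xs[-1] - mean(xs)) / pstdev(xs),
}


def run_plan(series, plan, max_steps=5):
    """Execute tool calls in order, with a step budget and error capture."""
    observations = []
    for step, tool_name in enumerate(plan):
        if step >= max_steps:
            observations.append(("stopped", "step budget exhausted"))
            break
        tool = TOOLS.get(tool_name)
        if tool is None:
            observations.append((tool_name, "unknown tool"))
            continue
        try:
            observations.append((tool_name, tool(series)))
        except (ZeroDivisionError, ValueError, IndexError) as exc:
            # Record the failure so the controller can react to it.
            observations.append((tool_name, f"error: {exc}"))
    return observations


log = run_plan([2.0, 2.0, 2.0], ["mean", "forecast", "zscore_last"])
```

Here the constant series makes `zscore_last` divide by zero and `forecast` is not registered, so the log shows one success, one unknown tool, and one captured error.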

Conclusion

The surveyed work organizes the reasoning landscape with a taxonomy that spans reasoning topologies and primary objectives, with attribute tags including tools, multimodal inputs, and agentic systems. Future research should emphasize benchmarks that connect reasoning quality to utility, along with streaming evaluations that handle long horizons effectively, so that the field can advance from accuracy-focused tasks to broader, reliable applications in dynamic environments.