- The paper introduces a functional taxonomy for implicit reasoning, categorizing techniques such as latent optimization, signal-guided control, and layer-recurrent execution.

- It argues that implicit reasoning reduces computational overhead by forgoing explicit, stepwise outputs, trading interpretability for efficiency.

- The survey outlines evaluation metrics and identifies key challenges, guiding future research towards more efficient, robust, and cognitively aligned AI systems.

Implicit Reasoning in LLMs: A Comprehensive Survey

Introduction

The survey "Implicit Reasoning in LLMs: A Comprehensive Survey" (2509.02350) provides a systematic and mechanism-level analysis of implicit reasoning in LLMs, distinguishing it from explicit reasoning paradigms such as Chain-of-Thought (CoT) prompting. The authors introduce a functional taxonomy based on execution paradigms—latent optimization, signal-guided control, and layer-recurrent execution—shifting the focus from representational forms to computational strategies. The work synthesizes evidence for implicit reasoning, reviews evaluation protocols, and identifies open challenges, aiming to unify fragmented research efforts and guide future developments in efficient, robust, and cognitively aligned reasoning systems.

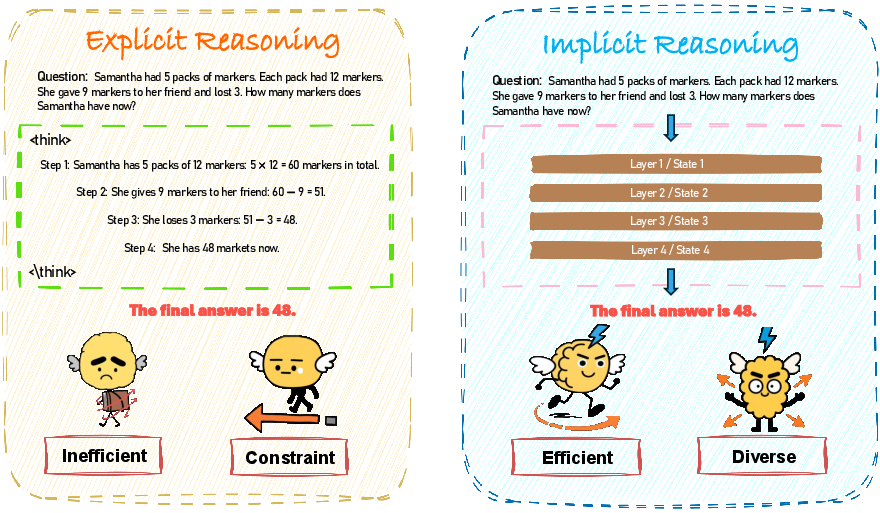

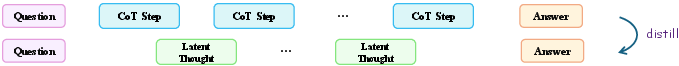

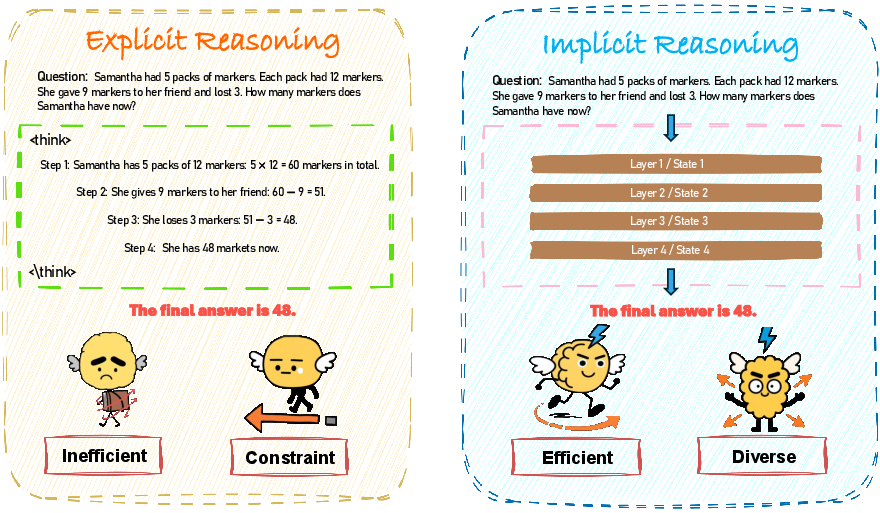

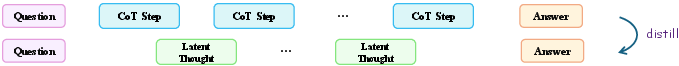

Figure 1: Comparison between explicit and implicit reasoning in LLMs. Explicit reasoning emits stepwise natural language explanations, while implicit reasoning operates entirely within hidden representations, supporting faster and more flexible computation.

Explicit reasoning in LLMs involves the generation of intermediate textual steps, enhancing interpretability and enabling step-level supervision. However, this approach incurs significant computational overhead due to verbose outputs and increased latency, especially in multi-step tasks. In contrast, implicit reasoning internalizes the reasoning process, leveraging latent states, hidden activations, or recurrent layer dynamics to arrive at answers without emitting intermediate steps. This paradigm offers improved efficiency, reduced resource consumption, and the potential for richer, parallel exploration of reasoning trajectories, but at the cost of reduced transparency and interpretability.

The survey formalizes both paradigms as two-stage inference processes, differing only in the visibility of the reasoning trace. Explicit reasoning is characterized by stepwise textual outputs, while implicit reasoning operates entirely within the model's latent space, with only the final answer exposed.
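
Written out in notation assumed here (the survey's own symbols may differ), with input $x$, reasoning trace $r$, and answer $a$, the two paradigms share the same answer stage and differ only in what the intermediate stage emits:

$$
\begin{aligned}
\text{Explicit:}\quad & r = (t_1, \dots, t_m) \sim p_\theta(r \mid x), && a \sim p_\theta(a \mid x, r) \\
\text{Implicit:}\quad & z = (z_1, \dots, z_k) = f_\theta(x), && a \sim p_\theta(a \mid x, z)
\end{aligned}
$$

Here the $t_i$ are decoded tokens visible to the user, while the $z_i$ are hidden vectors (latent tokens, looped-layer states, or internal activations) that are never decoded. The efficiency argument follows directly: explicit reasoning pays for $m$ decoding steps before the answer, whereas implicit reasoning replaces them with $k$ latent steps, where often $k < m$ or the steps run inside a single forward pass.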

Taxonomy of Implicit Reasoning Paradigms

The authors propose a taxonomy comprising three execution-centric paradigms:

- Latent Optimization: Direct manipulation and optimization of internal representations, subdivided into token-level, trajectory-level, and internal-state-level approaches.

- Signal-Guided Control: Steering internal computation via specialized control signals (e.g., thinking tokens, pause tokens, planning tokens), enabling lightweight and architecture-compatible modulation of reasoning.

- Layer-Recurrent Execution: Introducing recurrence into transformer architectures, allowing iterative refinement of hidden states through shared weights and dynamic depth control.

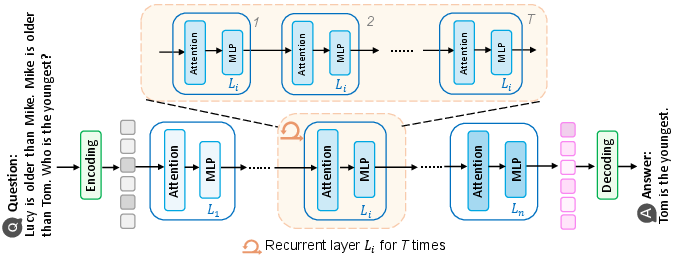

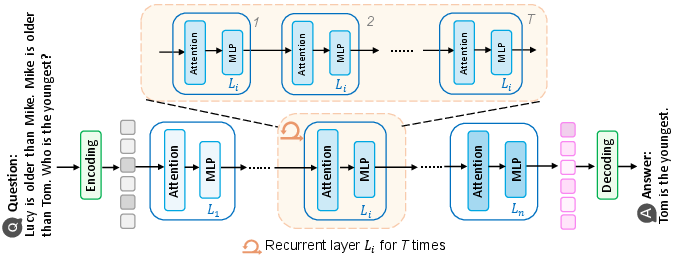

Figure 2: Layer-recurrent execution enables multi-step reasoning by reusing parameters across recurrent layers, refining hidden states through depth-wise computation.

Latent Optimization

- Token-Level: Methods such as CoCoMix and Latent Token insert semantic or non-interpretable latent tokens to guide reasoning, often leveraging sparse autoencoders or vector quantization for concept abstraction.
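
As a rough illustration of the vector-quantization route, the sketch below snaps a continuous hidden state to its nearest entry in a small codebook, and that entry's index serves as a discrete latent token. The codebook, the vectors, and the function names are hypothetical; real systems learn the codebook jointly with the model (typically with a straight-through gradient), which this toy omits.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(hidden: Vector, codebook: List[Vector]) -> Tuple[int, Vector]:
    """Map a continuous hidden state to its nearest codebook entry.

    The returned index plays the role of a discrete latent token id; the
    vector is what would be fed back into the model in its place.
    """
    best_id = min(range(len(codebook)), key=lambda i: squared_distance(hidden, codebook[i]))
    return best_id, codebook[best_id]


# Hypothetical 2-D codebook with three latent "concepts"
codebook = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
hidden_states = [[0.9, 0.2], [0.1, 0.8], [-0.7, -1.2]]
latent_ids = [quantize(h, codebook)[0] for h in hidden_states]
print(latent_ids)  # [0, 1, 2]
```

The resulting ids need not correspond to any vocabulary word, which is what makes such tokens non-interpretable by default.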

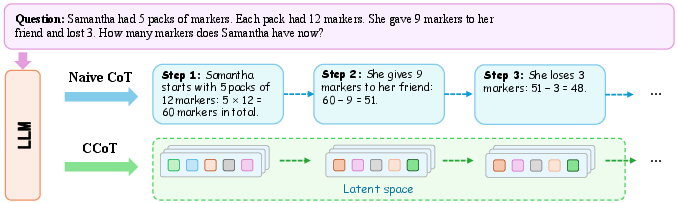

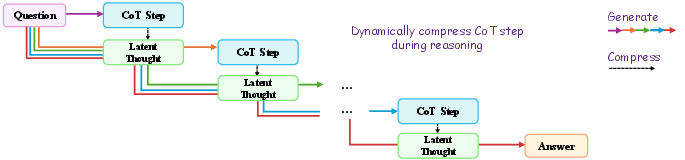

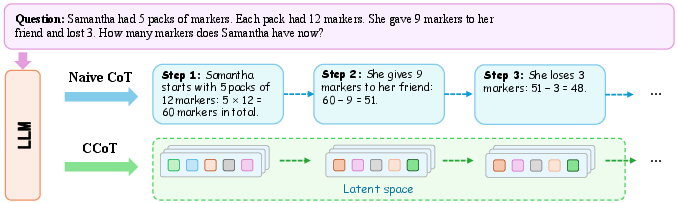

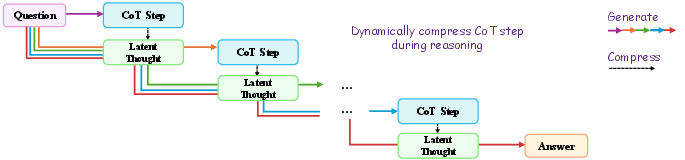

- Trajectory-Level: Approaches like CCoT, HCoT, and Coconut compress explicit reasoning chains into continuous latent trajectories, anchored semantically to explicit supervision. Adaptive mechanisms (LightThinker, CoT-Valve, CoLaR) dynamically adjust reasoning length and speed, while progressive refinement (ICoT-SI, PonderingLM, BoLT) internalizes reasoning steps via curriculum or iterative feedback. Exploratory diversification (LaTRO, Soft Thinking, SoftCoT++) enables parallel exploration of multiple reasoning paths in latent space.

Figure 3: CCoT compresses chain-of-thought traces into short sequences of continuous embeddings, reducing decoding cost while preserving essential reasoning semantics.
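
The exploratory methods above keep several reasoning paths alive by feeding the model something richer than a single sampled token. A minimal sketch of one such idea, the probability-weighted "concept token" associated with Soft Thinking, assuming a toy three-token vocabulary (the names `softmax` and `concept_token` are illustrative, not taken from any released code):

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over next-token logits."""
    m = max(logits)
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def concept_token(logits: List[float], embedding_table: List[List[float]],
                  temperature: float = 1.0) -> List[float]:
    """Probability-weighted mixture of token embeddings.

    Hard decoding would pick one token id and look up its embedding; here the
    next input is the expected embedding under the model's distribution, so
    several candidate continuations remain represented in a single vector.
    """
    probs = softmax(logits, temperature)
    dim = len(embedding_table[0])
    return [sum(p * emb[d] for p, emb in zip(probs, embedding_table)) for d in range(dim)]


# Hypothetical 3-token vocabulary with 2-D embeddings; tokens 0 and 1 tie.
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
logits = [2.0, 2.0, -50.0]
print(concept_token(logits, embeddings))  # [0.5, 0.5]
```

With tied logits the mixture sits halfway between the two embeddings, whereas greedy decoding would commit to one of them and discard the other path.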

Internal-State-Level

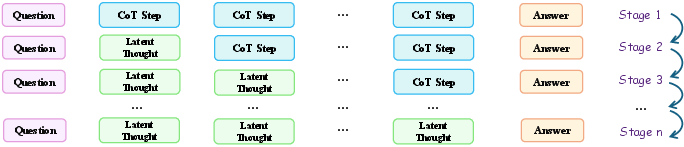

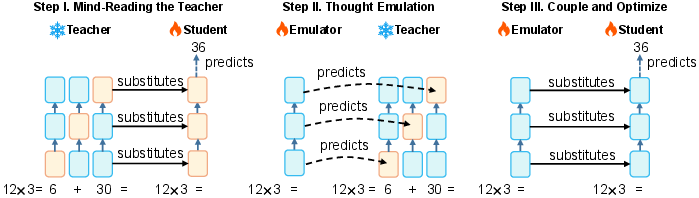

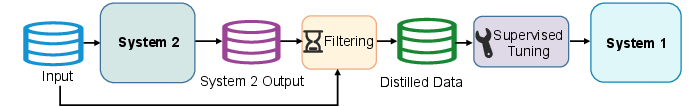

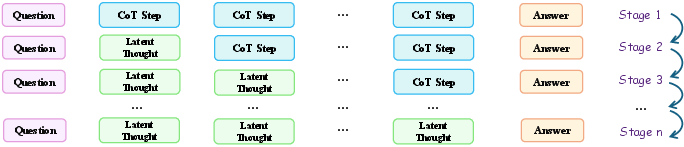

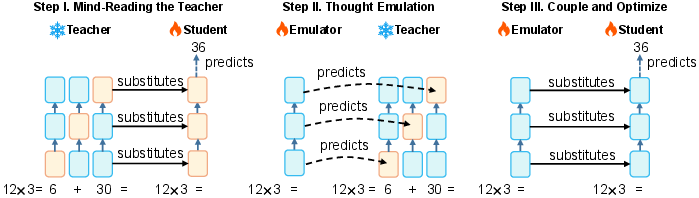

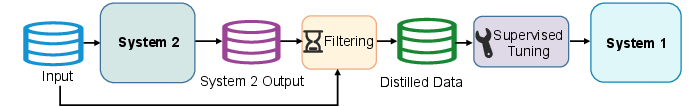

- Methods such as ICoT-KD and System2 Distillation distill explicit reasoning traces into compact internal representations, enabling vertical reasoning in hidden state space. Latent Thought Models (LTMs) and System-1.5 Reasoning introduce posterior inference and dynamic shortcuts, supporting scalable and budget-controllable reasoning. Hybrid approaches (HRPO) combine discrete and continuous latent reasoning via reinforcement learning.

Figure 4: Distilling the hidden states of explicit reasoning enables implicit reasoning by transferring structured reasoning into latent embeddings.
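
A minimal sketch of the distillation objective behind Figure 4, assuming a simple mean-squared-error match between chosen student layers and the teacher's per-step hidden states. The layer-to-step pairing and all names are hypothetical; published methods such as ICoT-KD differ in how teacher states are selected and how the student is trained, so treat this as an illustration of the idea rather than any paper's loss.

```python
from typing import List, Sequence, Tuple


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def hidden_state_distillation_loss(
    student_states: List[Sequence[float]],
    teacher_states: List[Sequence[float]],
    layer_pairs: List[Tuple[int, int]],
) -> float:
    """Average MSE between selected student layers and teacher reasoning states.

    student_states[i] is the student's hidden state at layer i (at the answer
    position); teacher_states[j] summarizes the teacher's j-th explicit CoT
    step. Each (i, j) pair asks student layer i to reproduce teacher step j,
    so successive steps are spread across depth instead of across tokens.
    """
    losses = [mse(student_states[i], teacher_states[j]) for i, j in layer_pairs]
    return sum(losses) / len(losses)


# Hypothetical states: 3 student layers, 2 teacher CoT steps.
student = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
teacher = [[1.0, 1.0], [2.0, 2.0]]
print(hidden_state_distillation_loss(student, teacher, [(1, 0), (2, 1)]))  # 0.0
print(hidden_state_distillation_loss(student, teacher, [(0, 0), (1, 1)]))  # 1.0
```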

Signal-Guided Control

- Single-Type Signal: Insertion of control tokens (thinking, pause, filler, planning) or dynamic latent control (LatentSeek, DIT) modulates reasoning depth and allocation of computational resources.

- Multi-Type Signal: Multiple control signals (e.g., memory and reasoning tokens in Memory Reasoning, short/think tokens in Thinkless) enable fine-grained, adaptive selection of reasoning strategies.
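
To make the signal-guided idea concrete, here is a sketch of how pause-token training data might be laid out: extra `<pause>` positions buy the model more computation, and the loss mask ignores whatever it predicts there. The token strings, the helper names, and the threshold rule in `choose_mode` are all assumptions; in Thinkless the choice between the short and think modes is learned via reinforcement learning, not hand-set.

```python
from typing import List, Tuple

PAUSE = "<pause>"  # hypothetical control-token string


def build_pause_example(prompt_tokens: List[str], answer_tokens: List[str],
                        num_pauses: int) -> Tuple[List[str], List[bool]]:
    """Insert pause tokens between prompt and answer.

    loss_mask[i] is True only at answer positions: the model receives extra
    forward passes at the pause positions but is never supervised on what it
    would emit there.
    """
    tokens = list(prompt_tokens) + [PAUSE] * num_pauses + list(answer_tokens)
    loss_mask = [False] * (len(prompt_tokens) + num_pauses) + [True] * len(answer_tokens)
    return tokens, loss_mask


def choose_mode(difficulty: float, threshold: float = 0.5) -> str:
    """Toy stand-in for a learned multi-signal policy: pick a control token."""
    return "<think>" if difficulty > threshold else "<short>"


tokens, mask = build_pause_example(["2", "+", "3", "="], ["5"], num_pauses=3)
print(tokens)  # ['2', '+', '3', '=', '<pause>', '<pause>', '<pause>', '5']
print(mask)    # [False, False, False, False, False, False, False, True]
print(choose_mode(0.9), choose_mode(0.1))  # <think> <short>
```

For simplicity the mask is aligned with input positions; a real causal-LM pipeline would shift targets by one.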

Layer-Recurrent Execution

- Architectures such as ITT, looped Transformer, CoTFormer, Huginn, and RELAY implement recurrent computation, simulating multi-step reasoning by iteratively refining token representations with shared weights and dynamic depth adaptation. These models achieve parameter efficiency and generalization in long-context or multi-hop tasks.
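
The shared-weight loop with dynamic depth can be sketched with a toy contraction standing in for a transformer block. Both `shared_block` and the halting rule (stop when the state changes by less than `tol`) are illustrative assumptions rather than the mechanism of any particular architecture listed above; re-injecting the input on every iteration mirrors the design of some recurrent-depth models.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def shared_block(h: Vector, x: Vector) -> Vector:
    """Toy stand-in for one weight-shared block; the input x is re-injected each loop."""
    return [math.tanh(0.5 * hi + xi) for hi, xi in zip(h, x)]


def recurrent_depth(x: Vector, block: Callable[[Vector, Vector], Vector] = shared_block,
                    max_loops: int = 50, tol: float = 1e-6) -> Tuple[Vector, int]:
    """Apply the same block repeatedly, halting once the hidden state stops changing.

    Returns the final state and the number of loops used, so effective depth is
    chosen per input at inference time instead of being fixed by the layer count.
    """
    h = [0.0] * len(x)
    for step in range(1, max_loops + 1):
        new_h = block(h, x)
        delta = max(abs(a - b) for a, b in zip(new_h, h))
        h = new_h
        if delta < tol:
            return h, step
    return h, max_loops


state, loops = recurrent_depth([0.1, -0.2])
print(loops)  # number of loops actually used before convergence
```

Because the same parameters are reused, adding loops increases compute without adding weights, which is the parameter-efficiency argument made above.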

Mechanistic and Behavioral Evidence

The survey synthesizes evidence for implicit reasoning from three perspectives:

- Layer-wise Structural Evidence: Analysis of intermediate activations and shortcut learning reveals that reasoning can be completed internally, with distinct subtasks scheduled across layers and superposition states encoding multiple reasoning traces.

- Behavioral Signatures: Training dynamics (e.g., grokking), step-skipping, and reasoning leaps indicate that LLMs can internalize computations and flexibly adjust reasoning granularity without explicit outputs.

- Representation-Based Analysis: Probing, intervention, and reverse-engineering techniques demonstrate that reasoning trees, latent trajectories, and symbolic inference circuits are encoded in hidden states, supporting parallel and depth-bounded reasoning.
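
Probing, the first of the representation-based tools, asks whether a simple classifier can read a property off frozen hidden states. A minimal logistic-regression probe on hypothetical two-dimensional activations (the data, labels, and function names are invented for illustration):

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_linear_probe(states: List[Sequence[float]], labels: List[int],
                       lr: float = 0.5, epochs: int = 200) -> Tuple[List[float], float]:
    """Fit a logistic-regression probe with plain SGD on frozen hidden states."""
    w = [0.0] * len(states[0])
    b = 0.0
    for _ in range(epochs):
        for h, y in zip(states, labels):
            p = sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)
            grad = p - y  # derivative of the log-loss with respect to the logit
            w = [wi - lr * grad * hi for wi, hi in zip(w, h)]
            b -= lr * grad
    return w, b


def probe_accuracy(w: List[float], b: float, states: List[Sequence[float]],
                   labels: List[int]) -> float:
    """Fraction of states whose predicted label matches the true label."""
    preds = [1 if sum(wi * hi for wi, hi in zip(w, h)) + b > 0 else 0 for h in states]
    return sum(int(p == y) for p, y in zip(preds, labels)) / len(labels)


# Hypothetical layer activations; label 1 = intermediate fact already computed.
states = [[2.0, 0.3], [1.5, -0.4], [1.8, 0.9], [-2.0, 0.5], [-1.6, -0.7], [-1.9, 0.1]]
labels = [1, 1, 1, 0, 0, 0]
w, b = train_linear_probe(states, labels)
print(probe_accuracy(w, b, states, labels))  # 1.0
```

High probe accuracy shows only that the information is linearly decodable at that layer; it does not show that the model uses it, which is why probing is usually paired with intervention experiments.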

Evaluation Protocols and Benchmarks

Implicit reasoning methods are evaluated primarily on final-answer correctness (accuracy, pass@k, exact match), resource efficiency (latency, output length, computational usage, ACU), language modeling capability (perplexity), and internal probing accuracy. The survey categorizes over 70 benchmarks into general knowledge, mathematical reasoning, language modeling, multi-hop QA, and multimodal reasoning, highlighting the need for standardized evaluation suites tailored to implicit reasoning.
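
For reference, two of the correctness metrics named above are commonly computed as follows: pass@k with the unbiased estimator introduced alongside the HumanEval benchmark (Chen et al., 2021), and exact match after light normalization. The normalization in `exact_match` is a simplified assumption; benchmark-specific scripts often also strip punctuation and articles.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: chance that at least one of k draws (without replacement)
    from n sampled answers, c of them correct, is correct."""
    if n - c < k:
        return 1.0  # every size-k subset must contain a correct answer
    return 1.0 - comb(n - c, k) / comb(n, k)


def exact_match(prediction: str, reference: str) -> bool:
    """Simplified exact match: compare after trimming whitespace and lowercasing."""
    return prediction.strip().lower() == reference.strip().lower()


print(pass_at_k(n=10, c=5, k=1))  # 0.5
print(exact_match(" 42 ", "42"))   # True
```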

Challenges and Future Directions

The authors identify six key limitations:

- Limited Interpretability and Latent Opacity: The opacity of latent computation hinders mechanistic understanding and error diagnosis.

- Limited Control and Reliability: Absence of built-in supervision or uncertainty estimates reduces robustness in high-stakes applications.

- Performance Gap Compared to Explicit Reasoning: Implicit methods often underperform explicit CoT strategies, especially on complex tasks.

- Lack of Standardized Evaluation: Inconsistent benchmarking practices impede fair comparison and reproducibility.

- Architecture and Generalization Constraints: Many methods rely on architecture-specific components and are evaluated on small-scale models.

- Dependence on Explicit Supervision: Most approaches require explicit reasoning traces for training, limiting scalability and independence.

The survey advocates for the development of causal intervention analysis, state-trajectory visualization, confidence-aware execution, hybrid supervision strategies, architecture-agnostic designs, and unsupervised discovery of latent reasoning structures.
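
Confidence-aware execution could take many forms; one hypothetical version gates the cheap implicit path on the entropy of its answer distribution and falls back to explicit CoT when that entropy is high. The survey does not prescribe this mechanism, and the threshold, stub functions, and names below are illustrative assumptions:

```python
import math
from typing import Callable, List, Tuple


def entropy(probs: List[float]) -> float:
    """Shannon entropy (nats) of a discrete distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def confidence_aware_answer(
    implicit_fn: Callable[[str], Tuple[str, List[float]]],
    explicit_fn: Callable[[str], str],
    question: str,
    max_entropy: float = 0.5,
) -> Tuple[str, str]:
    """Answer via the cheap implicit path; fall back to explicit CoT when uncertain."""
    answer, probs = implicit_fn(question)
    if entropy(probs) <= max_entropy:
        return answer, "implicit"
    return explicit_fn(question), "explicit"


# Hypothetical stubs standing in for real model calls.
confident = lambda q: ("7", [0.95, 0.05])
unsure = lambda q: ("7", [0.25, 0.25, 0.25, 0.25])
explicit = lambda q: "8"

print(confidence_aware_answer(confident, explicit, "3 + 4 = ?"))  # ('7', 'implicit')
print(confidence_aware_answer(unsure, explicit, "3 + 5 = ?"))     # ('8', 'explicit')
```

The design keeps the efficiency of implicit reasoning on easy inputs while paying for a visible, checkable trace only when the latent computation looks unreliable.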

Conclusion

This survey establishes a coherent framework for implicit reasoning in LLMs, organizing diverse methods into execution-centric paradigms and synthesizing mechanistic evidence and evaluation practices. While implicit reasoning offers efficiency and cognitive alignment, substantial challenges remain in interpretability, reliability, and performance. The work provides a foundation for future research toward efficient, robust, and cognitively grounded reasoning systems, emphasizing the need for unified benchmarks, mechanistic analysis, and scalable, architecture-agnostic approaches.