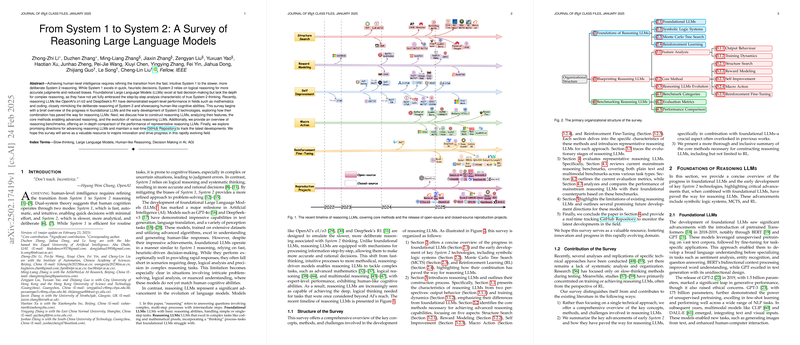

The paper provides a comprehensive survey of reasoning LLMs. It begins by highlighting the limitations of foundational LLMs, which, despite their advancements, still operate primarily on System 1-like reasoning, characterized by fast, intuitive decision-making that often falls short in complex, multi-step problem-solving. The survey then explores reasoning LLMs, designed to emulate the more deliberate System 2 thinking, offering a detailed analysis of their features, construction, and evaluation.

The survey is structured as follows:

- The evolution of early System 2 technologies, including symbolic logic systems, Monte Carlo Tree Search (MCTS), and Reinforcement Learning (RL), and how, combined with foundational LLMs, these technologies have enabled the development of reasoning LLMs.

- The characteristics of reasoning LLMs, examining both output behavior and training dynamics to highlight their differences from foundational LLMs.

- The core methods necessary for achieving advanced reasoning capabilities, focusing on Structure Search, Reward Modeling, Self Improvement, Macro Action, and Reinforcement Fine-Tuning.

- The evolutionary stages of reasoning LLMs.

- An evaluation of representative reasoning LLMs, providing an in-depth comparison of their performance using current benchmarks.

- The limitations of existing reasoning LLMs and several promising directions for their future development.

Analysis of the Features of Reasoning LLMs

The survey analyzes the features of reasoning LLMs from two perspectives:

- Output Behavior Perspective: Reasoning LLMs exhibit a strong tendency for exploratory behavior in their output structures, especially when compared to models that rely on conventional Chain-of-Thought (CoT) reasoning approaches. They also incorporate verification and check structures, using macro-level actions for long-term strategic planning and micro-level actions for meticulous verification. Reasoning LLMs often generate outputs exceeding 2000 tokens to tackle complex problems, which can sometimes lead to overthinking.

- Training Dynamic Perspective: The development of effective reasoning LLMs does not require extensive datasets or dense reward signals. Moreover, training LLMs for slow thinking results in relatively uniform gradient norms across different layers, and larger models are better suited to reasoning LLM training because of their greater capacity for complex reasoning.

Core Methods Enabling Reasoning LLMs

The paper identifies five core methods that drive the advanced reasoning capabilities of reasoning LLMs:

- Structure Search: It addresses the limitations of foundational LLMs in intricate reasoning tasks by employing MCTS to systematically explore and evaluate reasoning paths. MCTS constructs a reasoning tree, where each node represents a reasoning state, and actions expand the tree by considering potential next steps. The integration of MCTS into LLMs depends on defining actions and rewards to guide reasoning path exploration and assess quality. Actions can be categorized into reasoning steps as nodes, token-level decisions, task-specific structures, and self-correction and exploration. Reward design can be categorized into outcome-based rewards, stepwise evaluations, self-evaluation mechanisms, domain-specific criteria, and iterative preference learning.

- Reward Modeling: It contrasts outcome supervision, which focuses on the correctness of the final answer, with process supervision, which provides step-by-step labels for the solution trajectory, evaluating the quality of each reasoning step. Process Reward Models (PRMs) offer fine-grained, step-wise supervision, allowing for the identification of specific errors within a solution path and closely mirroring human reasoning behavior.

- Self Improvement: It enhances LLM performance through self-supervision, gradually improving capabilities in tasks such as translation, mathematical reasoning, and multimodal perception. Training-based self improvement in LLMs can be categorized by exploration and improvement strategies. The exploration phase focuses on collecting data for subsequent training, while improvement strategies are diverse, ranging from combining filtering with Supervised Fine-Tuning (SFT) to introducing new reward calculation methods that enhance RL training for policy models.

- Macro Action: It enhances the emulation of human-like System 2 cognitive processes through sophisticated thought architectures. These structured reasoning systems go beyond traditional token-level autoregressive generation by introducing hierarchical cognitive phases, such as strategic planning, introspective verification, and iterative refinement. Macro actions enhance reasoning diversity and generalization in data synthesis and training frameworks.

- Reinforcement Fine-Tuning: It optimizes the model's reasoning process by using a reward mechanism to guide the model's evolution, thereby enhancing its reasoning capabilities and accuracy. The technique aims to improve model performance in a specific domain with minimal high-quality training data. DeepSeek-R1 demonstrates a simplified training pipeline, enhanced scalability, and emergent properties.
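
To make the contrast drawn under Reward Modeling concrete, the following is a minimal sketch, not the survey's implementation. The `Solution` class, the function names, and `toy_step_scorer` are hypothetical; the scorer is a rule-based arithmetic check standing in for a learned Process Reward Model. Outcome supervision assigns one label to the whole solution, while process supervision assigns one score per step and can therefore point at the first faulty step.

```python
import operator
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Solution:
    steps: List[str]   # intermediate reasoning steps, e.g. "2 + 3 = 5"
    final_answer: str  # answer extracted from the last step


def outcome_reward(solution: Solution, reference_answer: str) -> float:
    """Outcome supervision: a single label based only on the final answer."""
    return 1.0 if solution.final_answer.strip() == reference_answer.strip() else 0.0


def process_rewards(
    solution: Solution,
    step_scorer: Callable[[List[str], str], float],
) -> List[float]:
    """Process supervision: one score per step, given the steps before it."""
    return [
        step_scorer(solution.steps[:i], step)
        for i, step in enumerate(solution.steps)
    ]


def first_error_step(scores: List[float], threshold: float = 0.5) -> Optional[int]:
    """Index of the first step scored below the threshold, or None."""
    for i, score in enumerate(scores):
        if score < threshold:
            return i
    return None


# Hypothetical stand-in for a learned PRM: it only checks "a op b = c" arithmetic.
_OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def toy_step_scorer(previous_steps: List[str], step: str) -> float:
    try:
        lhs, rhs = step.split("=")
        a, op, b = lhs.split()
        return 1.0 if _OPS[op](float(a), float(b)) == float(rhs) else 0.0
    except (ValueError, KeyError):
        return 0.0  # unparseable steps are treated as incorrect


# A solution to (2 + 3) * 4 - 1 with a slip in the second step.
attempt = Solution(
    steps=["2 + 3 = 5", "5 * 4 = 25", "25 - 1 = 24"],
    final_answer="24",
)
outcome = outcome_reward(attempt, reference_answer="19")
step_scores = process_rewards(attempt, toy_step_scorer)
error_index = first_error_step(step_scores)
```

In this toy case the outcome reward only reports that the answer is wrong, whereas the per-step scores single out the second step (index 1). The third step is locally valid arithmetic even though it builds on an earlier error, which is the kind of fine-grained signal the bullet on PRMs describes.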

Benchmarking Reasoning LLMs

The survey categorizes reasoning benchmarks by task type, broadly divided into math, code, scientific, agent, medical, and multimodal reasoning. Depending on task types, technical proposals, and reasoning paradigms, various evaluation metrics have been introduced for reasoning LLMs. Mathematical reasoning typically uses Pass@k and Cons@k, code tasks use Elo and Percentile, and scientific tasks use Exact Match and Accuracy.
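The two mathematical-reasoning metrics can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the survey's own definition: `pass_at_k` uses the widely used unbiased estimator 1 - C(n-c, k) / C(n, k) for n samples of which c are correct, and `cons_at_k` assumes that Cons@k means the majority-vote answer over k samples is checked against the reference. Both function names are hypothetical.

```python
from collections import Counter
from math import comb
from typing import Sequence


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimated probability that at least one of k samples is correct,
    given n generated samples of which c are correct."""
    if not (0 < k <= n) or not (0 <= c <= n):
        raise ValueError("require 0 < k <= n and 0 <= c <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def cons_at_k(sampled_answers: Sequence[str], reference: str) -> bool:
    """Majority vote over the sampled answers, compared with the reference.
    Ties are broken in favor of the answer that appeared first."""
    if not sampled_answers:
        raise ValueError("need at least one sampled answer")
    majority_answer, _ = Counter(sampled_answers).most_common(1)[0]
    return majority_answer == reference
```

For example, with 10 samples of which 3 are correct, `pass_at_k(10, 3, 1)` is 0.3, matching the raw fraction of correct samples. Benchmark-level scores are the mean of these per-problem values.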

A comparison of reasoning LLMs with their corresponding foundational LLMs shows that reasoning LLMs, such as DeepSeek-R1 and OpenAI-o1/o3, achieve high scores on multiple plain-text benchmarks, showcasing their robust text-based reasoning abilities. Reasoning LLMs also excel in multimodal tasks.

Challenges and Future Directions

The survey identifies several challenges that persist, limiting the generalizability and practical applicability of reasoning LLMs, and highlights potential research directions to address them:

- Efficient Reasoning LLMs: Integrating external reasoning tools to enable early stopping and verification mechanisms, and exploring strategies to implement slow-thinking reasoning capabilities in smaller-scale LLMs (SLMs).

- Collaborative Slow & Fast-thinking Systems: Developing adaptive switching mechanisms, joint training frameworks, and co-evolution strategies to harmonize the efficiency of fast-thinking systems with the precision of reasoning LLMs.

- Reasoning LLMs For Science: Refining model formulation and hypothesis testing in fields such as medicine, mathematics, physics, engineering, and computational biology.

- Deep Integration of Neural and Symbolic Systems: Combining reasoning LLMs with symbolic engines to balance adaptability and interpretability.

- Multilingual Reasoning LLMs: Overcoming the challenges posed by data scarcity and cultural biases in low-resource languages.

- Multimodal Reasoning LLMs: Developing hierarchical reasoning LLMs that enable fine-grained cross-modal understanding and generation, tailored to the unique characteristics of modalities such as audio, video, and 3D data.

- Safe Reasoning LLMs: Developing methods for controlling and guiding the actions of superintelligent models capable of continuous self-evolution, thereby balancing AI power with ethical decision-making.