On the Generalization of SFT: A Reinforcement Learning Perspective with Reward Rectification (2508.05629v1)

Abstract: We present a simple yet theoretically motivated improvement to Supervised Fine-Tuning (SFT) for Large Language Models (LLMs), addressing its limited generalization compared to reinforcement learning (RL). Through mathematical analysis, we reveal that standard SFT gradients implicitly encode a problematic reward structure that may severely restrict the generalization capabilities of the model. To rectify this, we propose Dynamic Fine-Tuning (DFT), which stabilizes gradient updates for each token by dynamically rescaling the objective function with the probability of that token. Remarkably, this single-line code change significantly outperforms standard SFT across multiple challenging benchmarks and base models, demonstrating greatly improved generalization. Additionally, our approach shows competitive results in offline RL settings, offering an effective yet simpler alternative. This work bridges theoretical insight and practical solutions, substantially advancing SFT performance. The code will be available at https://github.com/yongliang-wu/DFT.

Summary

- The paper shows that the SFT gradient is equivalent to a policy gradient update with an implicit reward weighted by the inverse of the model's probability of the expert response, which causes high gradient variance.

- It introduces Dynamic Fine-Tuning (DFT) which rescales token-level loss to stabilize optimization and significantly improve generalization, especially on math reasoning benchmarks.

- Empirical results show that DFT consistently outperforms standard SFT and, in the offline setting, RL-based methods. On key mathematical benchmarks, its accuracy gains over the base model are several times larger than those of SFT.

Dynamic Fine-Tuning: Rectifying SFT for Improved Generalization via an RL Perspective

Introduction

This paper presents a rigorous theoretical and empirical analysis of Supervised Fine-Tuning (SFT) for LLMs, identifying its generalization limitations through the lens of reinforcement learning (RL). The authors show that the SFT gradient is mathematically equivalent to a policy gradient update with an implicit, ill-posed reward structure: the reward is inversely proportional to the model's probability of generating the expert response. This leads to high-variance, unstable optimization and poor generalization, especially when the model assigns low probability to expert tokens. To address this, the paper introduces Dynamic Fine-Tuning (DFT), a simple yet principled modification that rescales the SFT objective by the token probability, thereby stabilizing updates and improving generalization. Extensive experiments on mathematical reasoning benchmarks show that DFT consistently and substantially outperforms standard SFT. In offline settings, it even surpasses state-of-the-art RL-based fine-tuning methods.

Theoretical Analysis: SFT as Policy Gradient with Implicit Reward

The core theoretical contribution is a formal equivalence between the SFT gradient and a policy gradient update in RL. By rewriting the SFT gradient as an on-policy expectation with importance sampling, the authors show that SFT is a special case of policy gradient with the sparse reward $r(x,y)=\mathbf{1}[y=y^\star]$ and the importance weight $w(y\mid x)=1/\pi_\theta(y\mid x)$. This structure is problematic. When $\pi_\theta(y^\star\mid x)$ is small, the weight becomes large, and so does the gradient magnitude. The gradient variance is therefore unbounded, and the model tends to overfit rare expert trajectories. The optimization landscape is thus dominated by high-variance updates, undermining generalization.
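The equivalence described above can be written out in one derivation. This is a minimal sketch. It assumes a single expert response $y^\star$ per prompt $x$ drawn from a dataset $\mathcal{D}$, and it relies only on the identity $\mathbb{E}_{y\sim\pi_\theta}[f(y)] = \sum_y \pi_\theta(y\mid x)\,f(y)$. The last line is added for comparison: it shows the same expectation after multiplying the loss by the stop-gradient probability $\mathrm{sg}(\pi_\theta(y^\star\mid x))$, the sequence-level form of the rescaling. The label $\mathcal{L}_{\mathrm{DFT}}^{\mathrm{seq}}$ is illustrative notation.

```latex
\begin{aligned}
\nabla_\theta \mathcal{L}_{\mathrm{SFT}}(\theta)
  &= \mathbb{E}_{(x,y^\star)\sim\mathcal{D}}\big[-\nabla_\theta \log \pi_\theta(y^\star \mid x)\big] \\
  &= -\,\mathbb{E}_{(x,y^\star)\sim\mathcal{D}}\,\mathbb{E}_{y\sim\pi_\theta(\cdot\mid x)}
     \Big[\frac{\mathbf{1}[y=y^\star]}{\pi_\theta(y\mid x)}\,\nabla_\theta \log \pi_\theta(y \mid x)\Big], \\[4pt]
\nabla_\theta \mathcal{L}_{\mathrm{DFT}}^{\mathrm{seq}}(\theta)
  &= \mathbb{E}_{(x,y^\star)\sim\mathcal{D}}\big[-\,\mathrm{sg}\big(\pi_\theta(y^\star\mid x)\big)\,\nabla_\theta \log \pi_\theta(y^\star \mid x)\big] \\
  &= -\,\mathbb{E}_{(x,y^\star)\sim\mathcal{D}}\,\mathbb{E}_{y\sim\pi_\theta(\cdot\mid x)}
     \big[\mathbf{1}[y=y^\star]\,\nabla_\theta \log \pi_\theta(y \mid x)\big].
\end{aligned}
```

In both cases the sum over $y$ collapses onto $y^\star$, so each pair of lines agrees. In the SFT form, the factor $1/\pi_\theta(y\mid x)$ grows without bound as $\pi_\theta(y^\star\mid x)\to 0$. The rescaled form removes that factor and leaves a plain on-policy gradient with reward $\mathbf{1}[y=y^\star]$.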

Dynamic Fine-Tuning: Reward Rectification

To neutralize the ill-posed reward structure, DFT reweights the SFT loss by the token probability. The token-level loss becomes $-\,\mathrm{sg}\big(\pi_\theta(y_t^\star \mid y_{<t}^\star, x)\big)\log \pi_\theta(y_t^\star \mid y_{<t}^\star, x)$, where $\mathrm{sg}(\cdot)$ is the stop-gradient operator. At the sequence level, multiplying by $\pi_\theta(y^\star\mid x)$ cancels the inverse-probability weight, yielding a stable, uniformly weighted update; DFT applies the same correction per token. The stop-gradient ensures that no gradient flows through the probability factor, so the factor acts purely as a weight on the standard log-likelihood gradient. The result is a simple, one-line change to the standard SFT objective that fundamentally alters the optimization dynamics.
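To see what the stop-gradient weight does to a single update, here is a minimal sketch over a toy next-token softmax with a four-token vocabulary. The helper names (`softmax`, `sft_token_grad`, `dft_token_grad`, `grad_norm`) are hypothetical. The gradients with respect to the logits are written out by hand rather than computed by an autograd framework. In such a framework, the stop-gradient would typically be expressed with something like PyTorch's `Tensor.detach()`.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sft_token_grad(logits, target):
    """Gradient of the SFT token loss -log p[target] w.r.t. the logits.

    For a softmax output this is p - onehot(target).
    """
    p = softmax(logits)
    return [p_i - (1.0 if i == target else 0.0) for i, p_i in enumerate(p)]


def dft_token_grad(logits, target):
    """Gradient of the DFT token loss -sg(p[target]) * log p[target].

    The weight sg(p[target]) is held constant (stop-gradient), so the
    result is just the SFT gradient scaled by p[target].
    """
    weight = softmax(logits)[target]  # no gradient flows through this factor
    return [weight * g for g in sft_token_grad(logits, target)]


def grad_norm(grad):
    """Euclidean norm of a gradient vector."""
    return math.sqrt(sum(g * g for g in grad))


if __name__ == "__main__":
    logits = [4.0, 1.0, 0.0, -1.0]  # toy 4-token vocabulary
    probs = softmax(logits)
    for target in (0, 3):  # a likely and an unlikely expert token
        print(
            f"p={probs[target]:.4f}  "
            f"|SFT grad|={grad_norm(sft_token_grad(logits, target)):.4f}  "
            f"|DFT grad|={grad_norm(dft_token_grad(logits, target)):.4f}"
        )
```

Running it shows that the SFT gradient is much larger for the low-probability expert token than for the high-probability one. The DFT gradient is scaled by the token's own probability, so the rare token no longer dominates the update.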

Empirical Results: Mathematical Reasoning Benchmarks

DFT is evaluated on multiple mathematical reasoning benchmarks (Math500, Minerva Math, Olympiad Bench, AIME 2024, AMC 2023) using several base models (Qwen2.5-Math-1.5B/7B, LLaMA-3.2-3B, LLaMA-3.1-8B, DeepSeekMath-7B). Across all models and benchmarks, DFT yields substantial improvements over both the base models and standard SFT. For example, on Qwen2.5-Math-1.5B, DFT achieves an average accuracy gain of +15.66 points over the base model. This is several times larger than the +2.09 points gained by SFT. DFT also remains robust on challenging benchmarks where SFT degrades performance, which the authors attribute to overfitting.

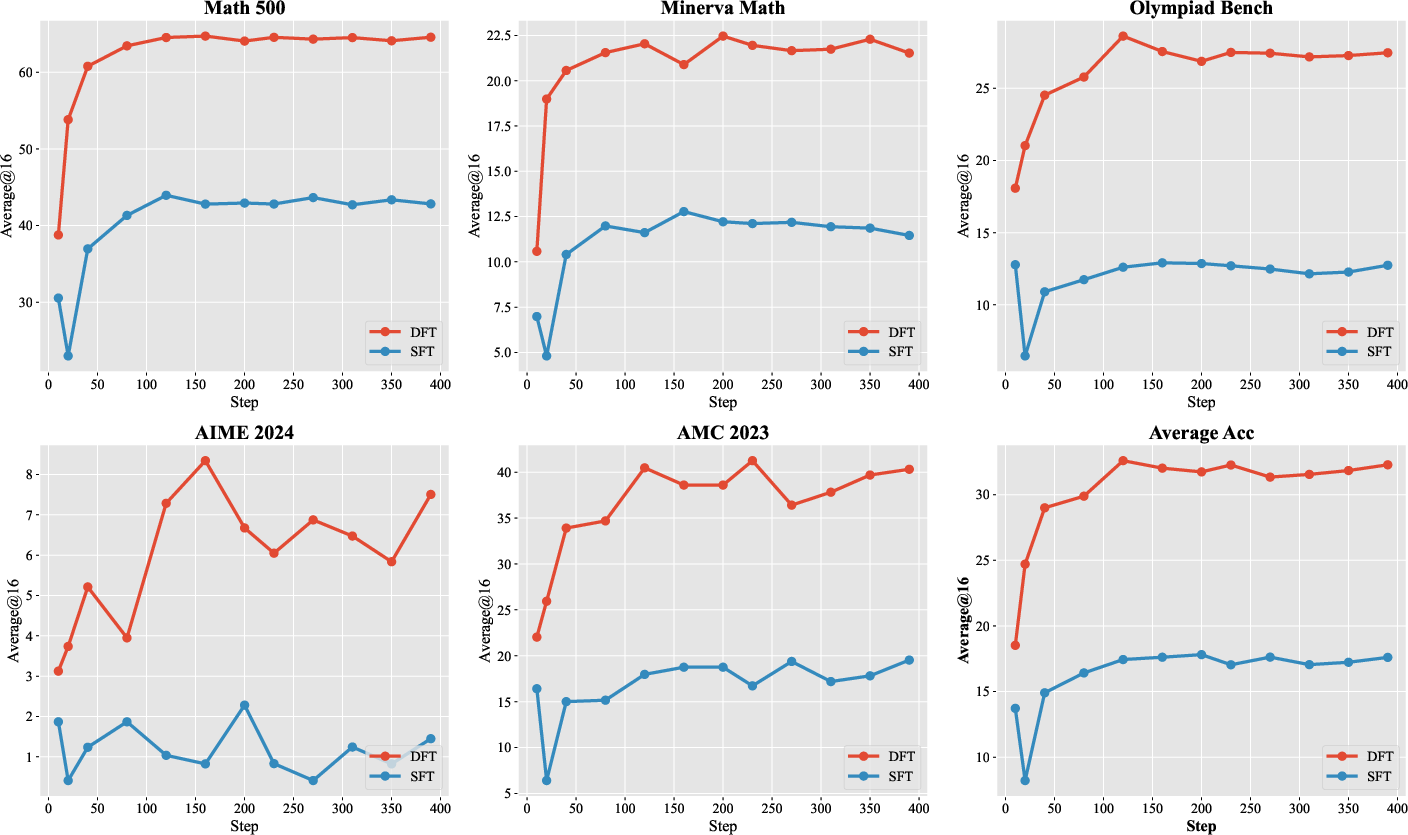

Figure 1: Accuracy progression for Qwen2.5-Math-1.5B across mathematical benchmarks, illustrating faster convergence and higher accuracy for DFT relative to SFT.

DFT exhibits faster convergence and higher sample efficiency, reaching peak performance within the first 120 training steps and outperforming SFT even early in training. This suggests that DFT provides more informative gradient updates and avoids optimization plateaus.

Comparison with RL and Concurrent Methods

The paper benchmarks DFT against offline RL methods (DPO, RFT) and online RL algorithms (PPO, GRPO), as well as the concurrent Importance-Weighted SFT (iw-SFT). In the offline RL setting on Qwen2.5-Math-1.5B, DFT achieves the highest average score. It outperforms the offline method RFT by +11.46 points and even the online method GRPO by +3.43 points. DFT also surpasses iw-SFT in most settings, with higher average accuracy and greater robustness across datasets. Unlike iw-SFT, which requires a reference model for importance weights, DFT derives its weighting directly from the model's own token probabilities, resulting in lower computational overhead.

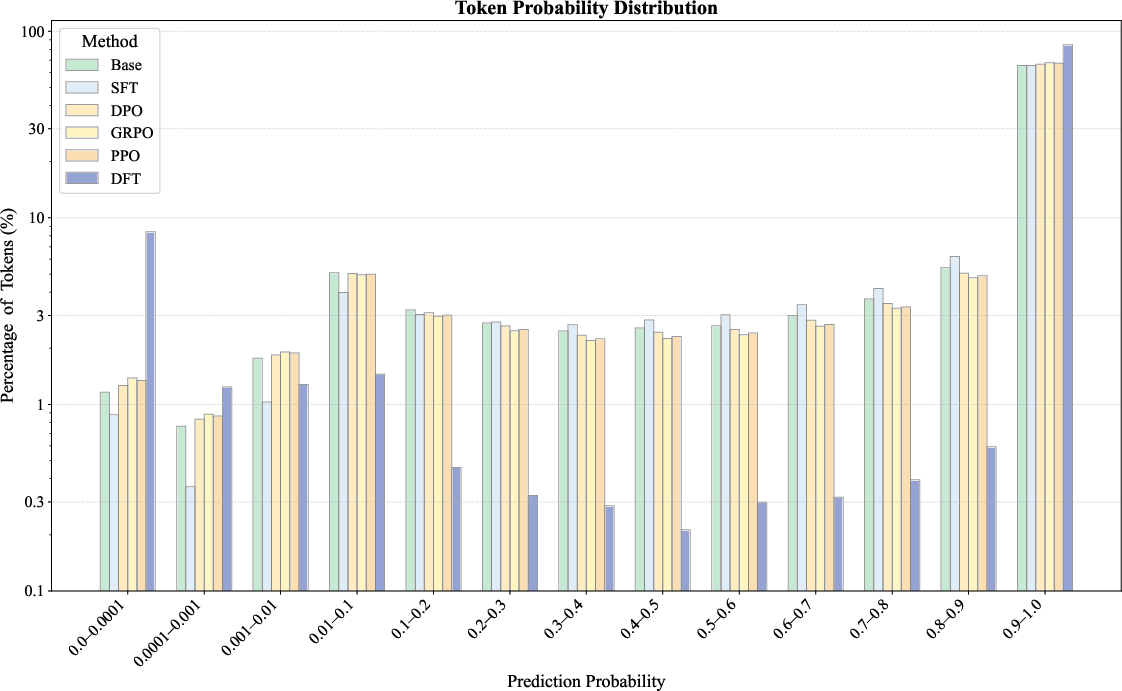

Token Probability Distribution Analysis

The authors analyze the token probability distributions before and after fine-tuning with DFT, SFT, and RL methods. SFT uniformly increases token probabilities, tightly fitting the training data. In contrast, DFT produces a bimodal distribution, boosting probabilities for some tokens while suppressing others, particularly grammatical or connective words. This suggests that robust generalization may require deprioritizing tokens with low semantic content.

Figure 2: Token probability distributions on the training set before training and after fine-tuning with DFT, SFT, and various RL methods including DPO, PPO, and GRPO. A logarithmic scale is used on the y-axis to improve visualization clarity.
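The distribution comparison in Figure 2 comes down to binning the model's probabilities for the expert tokens. The sketch below assumes those per-token probabilities have already been collected as a list of floats. The helper names `probability_histogram` and `log_counts` and the choice of ten equal-width bins are illustrative. They are not the authors' analysis code.

```python
import math


def probability_histogram(token_probs, num_bins=10):
    """Count token probabilities in equal-width bins over [0, 1]."""
    counts = [0] * num_bins
    for p in token_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
        idx = min(int(p * num_bins), num_bins - 1)  # p == 1.0 goes in the last bin
        counts[idx] += 1
    return counts


def log_counts(counts):
    """log10(count + 1), mimicking a log-scaled y-axis while keeping empty bins at 0."""
    return [math.log10(c + 1) for c in counts]


if __name__ == "__main__":
    # Hypothetical probabilities: many tokens near 1, a cluster near 0.
    probs = [0.98, 0.99, 0.97, 0.95, 0.02, 0.05, 0.01, 0.55]
    hist = probability_histogram(probs)
    print(hist)
    print(log_counts(hist))
```

A bimodal distribution in the sense described in the text would pile mass into the lowest and highest bins. The log transform keeps the sparsely populated middle bins visible.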

Hyperparameter Ablation

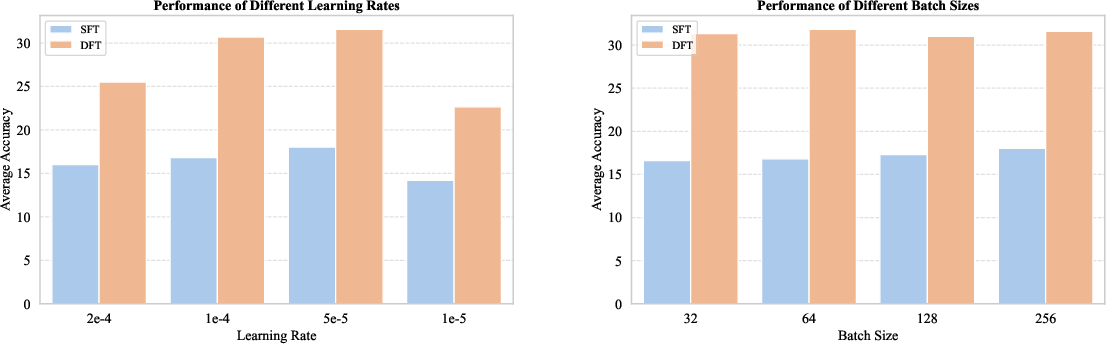

Ablation studies on learning rate and batch size confirm that DFT consistently outperforms SFT across all configurations. Both methods are sensitive to learning rate, with intermediate values yielding the best results, but batch size has minimal impact on final accuracy.

Figure 3: Ablation paper of training hyper-parameters, learning rates and batch size, for DFT and SFT on Qwen2.5-Math-1.5B model.

Implementation and Practical Considerations

DFT is straightforward to implement: multiply the token-level cross-entropy loss by the model's probability for that token, with a stop-gradient on the probability so that it acts only as a weight. This modification adds negligible computation and requires no additional sampling, reward models, or reference policies. DFT outperforms SFT at every tested learning rate and batch size, though both methods remain sensitive to the learning rate. Its gains also hold across the model and dataset sizes evaluated. The method is particularly advantageous in settings where only positive expert demonstrations are available and RL is impractical due to resource constraints or a lack of reward signals.
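The one-line change can be sketched as a masked, token-averaged loss over a batch. This sketch makes several assumptions that are not the authors' released code:

- Per-token log-probabilities of the expert tokens are already available.
- Padding and prompt positions are excluded by a 0/1 mask.
- The function name `masked_mean_loss` is hypothetical.
- The loss is averaged over tokens.

Plain Python floats carry no gradients, so the stop-gradient appears only as a comment. In PyTorch, one way to express the weight is `log_probs.exp().detach()`, assuming `log_probs` is a tensor.

```python
import math


def masked_mean_loss(log_probs, mask, use_dft=False):
    """Token-averaged SFT or DFT loss over a batch of expert responses.

    log_probs: list of sequences; each entry is log pi(y_t* | y_<t*, x).
    mask: same shape; 1 marks a response token to train on, 0 marks
          padding or prompt positions (an assumed convention).
    """
    total, count = 0.0, 0
    for seq_log_probs, seq_mask in zip(log_probs, mask):
        for lp, m in zip(seq_log_probs, seq_mask):
            if not m:
                continue
            token_loss = -lp  # standard SFT: negative log-likelihood
            if use_dft:
                # DFT: weight by the token probability. With autograd this
                # weight must be detached, e.g. log_probs.exp().detach() in PyTorch.
                token_loss *= math.exp(lp)
            total += token_loss
            count += 1
    return total / count if count else 0.0


if __name__ == "__main__":
    # Hypothetical log-probabilities for two padded sequences.
    batch_log_probs = [[math.log(0.5), math.log(0.25), 0.0], [math.log(0.8), 0.0, 0.0]]
    batch_mask = [[1, 1, 0], [1, 0, 0]]
    print("SFT loss:", masked_mean_loss(batch_log_probs, batch_mask))
    print("DFT loss:", masked_mean_loss(batch_log_probs, batch_mask, use_dft=True))
```

The two branches differ only in the multiplicative weight. Neither involves sampling, a reward model, or a reference policy, which is the basis of the low-overhead argument made in the text.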

Implications and Future Directions

Theoretically, this work clarifies the connection between SFT and RL, pinpointing the source of SFT's generalization gap and providing a principled solution. Practically, DFT offers a scalable, efficient alternative to RL-based fine-tuning, with strong empirical performance on mathematical reasoning tasks. The findings suggest that dynamic reweighting can mitigate overfitting and improve generalization in LLMs, potentially extending to other domains such as code generation, commonsense QA, and multimodal tasks. Future work should explore DFT's applicability to larger models and diverse datasets, as well as its integration with hybrid SFT-RL pipelines.

Conclusion

This paper provides a rigorous theoretical foundation for understanding the limitations of SFT in LLMs and introduces Dynamic Fine-Tuning as a simple, effective remedy. By rectifying the implicit reward structure of SFT, DFT stabilizes optimization and substantially improves generalization. On challenging mathematical reasoning tasks, it outperforms standard SFT and, in the offline setting, RL-based methods. The approach is easy to implement, computationally efficient, and robust across models and datasets, making it a practical solution for LLM alignment in resource-constrained or feedback-limited scenarios. These insights may inform the development of more scalable and generalizable LLM training methods.

