How Far Are AI Scientists from Changing the World? (2507.23276v1)

Abstract: The emergence of LLMs is propelling automated scientific discovery to the next level, with LLM-based AI Scientist systems now taking the lead in scientific research. Several influential works have already appeared in the field of AI Scientist systems, with AI-generated research papers having been accepted at the ICLR 2025 workshop, suggesting that a human-level AI Scientist capable of uncovering phenomena previously unknown to humans may soon become a reality. In this survey, we focus on the central question: How far are AI scientists from changing the world and reshaping the scientific research paradigm? To answer this question, we provide a prospect-driven review that comprehensively analyzes the current achievements of AI Scientist systems, identifying key bottlenecks and the critical components required for the emergence of a scientific agent capable of producing ground-breaking discoveries that solve grand challenges. We hope this survey will contribute to a clearer understanding of the limitations of current AI Scientist systems, showing where we are, what is missing, and what the ultimate goals for scientific AI should be.

Summary

- The paper presents a structured four-level framework (knowledge acquisition, idea generation, verification and falsification, and evolution) that categorizes AI Scientist capabilities.

- It reports that AI Scientist papers with substantive implementation details receive a higher average number of citations, highlighting the value of executable research.

- The findings underscore key limitations in hypothesis generation and ethical oversight, urging advancements in dynamic planning and autonomous learning.

AI Scientists: Gauging Proximity to Real-World Impact

The paper "How Far Are AI Scientists from Changing the World?" (2507.23276) presents a comprehensive survey of AI Scientist systems, assessing their capabilities and limitations in automating scientific discovery. The authors propose a framework categorizing AI Scientist development into four levels: knowledge acquisition, idea generation, verification and falsification, and evolution, providing a roadmap for future research in this domain.

Capability Levels of AI Scientists

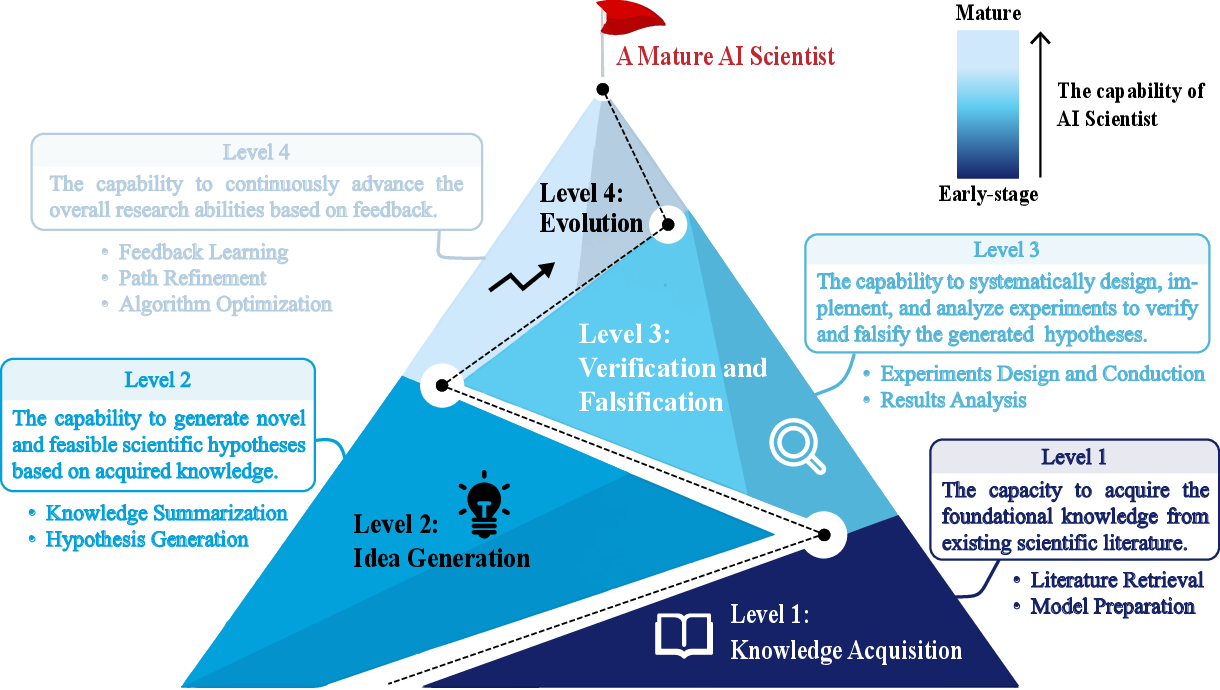

The paper introduces a structured, four-level framework to evaluate the developmental stages of AI Scientists (Figure 1).

Figure 1: The capability level of an AI Scientist, illustrating the progression from foundational knowledge acquisition (Level 1), through idea generation (Level 2), rigorous hypothesis verification and falsification (Level 3), to continuous evolution (Level 4).

These levels are defined as follows:

- Knowledge Acquisition: This foundational level involves the ability to autonomously retrieve, review, and comprehend scientific knowledge from existing research, establishing a knowledge base for subsequent research.

- Idea Generation: This level entails the ability to generate innovative and feasible hypotheses, distinguishing AI Scientist systems from mere automated scientific tools.

- Verification and Falsification: This involves systematically designing, implementing, and analyzing experiments to test and potentially disprove AI-generated scientific hypotheses, transforming the AI Scientist into an autonomous scientific intelligence.

- Evolution: This advanced level requires the continuous advancement of research abilities based on internal and external feedback, essential for a mature scientific agent.

The authors critically analyze existing research through this framework, identifying key bottlenecks and missing components necessary for groundbreaking discoveries produced by autonomous scientific intelligence.
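As a rough illustration of how the four levels could be encoded when tagging systems against this framework, the sketch below represents them as an ordered enumeration. The function `highest_level_reached` and the rule that levels are strictly cumulative are assumptions made for this example; the survey describes a progression but does not prescribe a scoring procedure.

```python
from enum import IntEnum


class CapabilityLevel(IntEnum):
    """The four levels named in the survey, ordered from lowest to highest."""
    KNOWLEDGE_ACQUISITION = 1
    IDEA_GENERATION = 2
    VERIFICATION_AND_FALSIFICATION = 3
    EVOLUTION = 4


def highest_level_reached(demonstrated: set[CapabilityLevel]) -> int:
    """Return the highest level L such that levels 1..L are all demonstrated.

    Treating the levels as strictly cumulative is an assumption of this
    sketch, not a rule stated by the survey. Returns 0 if Level 1 is missing.
    """
    reached = 0
    for level in CapabilityLevel:  # iterates in definition order: 1, 2, 3, 4
        if level not in demonstrated:
            break
        reached = int(level)
    return reached


# Hypothetical system that retrieves literature and proposes ideas
# but runs no experiments.
example = {CapabilityLevel.KNOWLEDGE_ACQUISITION, CapabilityLevel.IDEA_GENERATION}
print(highest_level_reached(example))  # 2
```

Under this cumulative reading, a system that generates ideas without any knowledge acquisition component would score 0, which may or may not match how one wants to classify such systems.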

Knowledge Acquisition: From Pre-LLM to the LLM Era

The survey divides knowledge acquisition methods into the pre-LLM and LLM eras. In the pre-LLM era, smaller pre-trained language models (PLMs), such as SciBERT [Beltagy2019SciBERT] and domain-specific models like ClinicalBERT, PubMedBERT, and MatSciBERT, were fine-tuned to learn scientific representations and extract structured insights. These models demonstrated capabilities in structured text mining and knowledge extraction but were limited in scalability and contextual understanding.

The LLM era has seen the development of specialized toolkits and systems that leverage LLMs for literature search and curation, such as PaperWeaver [lee2024paperweaver] and DORA AI Scientist [naumov2025dora]. These systems often incorporate retrieval-augmented generation (RAG) to enhance contextual relevance and automation. Idea-oriented approaches focus on extracting key concepts and insights from research papers, while experiment-oriented approaches retrieve experimental results and summarize them into comprehensive reports.
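To make the retrieval step of RAG concrete, here is a minimal sketch that ranks text snippets against a query by bag-of-words cosine similarity and places the top matches into a prompt. It is not the method used by PaperWeaver or DORA; production systems typically rely on dense embeddings and a vector index. The corpus snippets, document ids, and the names `retrieve` and `build_prompt` are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k snippets most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), doc_id)
              for doc_id, text in corpus.items()]
    # Highest score first; ties are broken by id for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]


def build_prompt(query: str, corpus: dict[str, str], k: int = 2) -> str:
    """Prepend the retrieved snippets to the question as context."""
    context = "\n".join(f"[{d}] {corpus[d]}" for d in retrieve(query, corpus, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Placeholder snippets, not taken from any real paper.
corpus = {
    "doc-a": "fine-tuning language models on scientific text for entity extraction",
    "doc-b": "retrieval augmented generation for literature search and curation",
    "doc-c": "catalyst screening experiments with measured yields",
}
query = "literature search with retrieval"
print(retrieve(query, corpus, k=1))  # ['doc-b']
print(build_prompt(query, corpus, k=1))
```

The prompt returned by `build_prompt` would then be passed to an LLM, and that generation step is deliberately left out of the sketch.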

Idea Generation: The Core of AI-Driven Research

The paper highlights idea generation as the capability that distinguishes AI Scientist systems from automated tools. It discusses various methods for enabling LLMs to generate innovative scientific hypotheses, including specialized prompting strategies [li2024chain, hu2024nova, lu2024ai, yamada2025ai, jansen2025codescientist], post-training methods [qi2023large, wang-etal-2024-scimon, weng2025cycleresearcher], and multi-agent collaboration [liu2024aigs, yang2024large, yang2025moosechem, gottweis2025towards].

Verification and Falsification: Completing the Research Cycle

The ability to design, implement, and analyze experiments to verify and falsify AI-generated hypotheses is crucial for transforming AI Scientists from idea generators into autonomous scientific intelligence. The paper notes that, as the number of publications in the AI Scientist domain continues to grow, studies that include substantive implementation details achieve a significantly higher average number of citations than those that do not (Figure 2).

(Figure 2)

Figure 2: An analysis of publications in the field of AI Scientist systems on arXiv. The upper panel displays the average number of citations to date, grouped by whether a paper contains implementation details. The lower panel shows the growth in the total number of these papers under the same grouping.

This suggests that the community places a high value on executable verification. Evaluations of state-of-the-art LLMs on benchmarks such as MLE-Bench [chan2024mle] and PaperBench [starace2025paperbench] reveal significant difficulty in translating conceptual understanding into verifiably correct, working code.

Evolution: Continuous Advancement for Scientific Discovery

Evolution, the capability to continuously advance overall research abilities based on internal and external feedback, is essential for an elementary AI Scientist to mature into a full scientific agent. The paper discusses key technologies required for evolution, including strategies for dynamic planning of research directions and methods for autonomous learning. Dynamic planning remains relatively underdeveloped, while autonomous learning strategies include self-reflection methods and externally guided methods. The absence of robust collaboration mechanisms among AI Scientist systems further slows their evolution.
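A self-reflection method can be pictured as a loop that alternates critique and revision until the critique finds nothing left to fix or a round budget runs out. The sketch below is a generic illustration under that assumption, not an algorithm taken from the survey. In a real system `critique` and `revise` would be LLM calls, and passing in a critique from an outside reviewer would give the externally guided variant. The toy functions here are hypothetical stand-ins.

```python
from typing import Callable


def refine_with_reflection(
    draft: str,
    critique: Callable[[str], list[str]],
    revise: Callable[[str, list[str]], str],
    max_rounds: int = 3,
) -> tuple[str, int]:
    """Alternate critique and revision until no issues remain or the budget ends.

    Returns the final draft and the number of revision rounds performed.
    """
    rounds = 0
    while rounds < max_rounds:
        issues = critique(draft)
        if not issues:
            break
        draft = revise(draft, issues)
        rounds += 1
    return draft, rounds


# Toy stand-ins for what would be LLM calls in a real system.
def toy_critique(draft: str) -> list[str]:
    issues = []
    if "baseline" not in draft:
        issues.append("no baseline comparison")
    if "seed" not in draft:
        issues.append("no random seed reported")
    return issues


def toy_revise(draft: str, issues: list[str]) -> str:
    # Address only the first issue per round so the loop is visible.
    fixes = {
        "no baseline comparison": " Compare against a baseline.",
        "no random seed reported": " Report the random seed.",
    }
    return draft + fixes[issues[0]]


final, rounds = refine_with_reflection("Train the model.", toy_critique, toy_revise)
print(rounds)  # 2
print(final)   # Train the model. Compare against a baseline. Report the random seed.
```

The `max_rounds` cap matters in practice because a critique function that always finds something to object to would otherwise never terminate.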

Review System: Assessing the Quality of AI-Generated Research

The paper also discusses AI reviewer systems, which automate detailed, structured reviews with the aim of making the review process more efficient and equitable. It classifies these systems by their underlying paradigms and the complexity of the tasks they address, ranging from classification and scoring systems to generation-based and multi-agent systems. An evaluation of AI-generated papers produced by various AI Scientist systems reveals persistent deficiencies in scientific rigor, with "Experimental Weakness" appearing in 100% of the papers.

Limitations and Ethical Challenges

The inherent limitations of LLMs, such as hallucinations, high costs and inefficiencies in knowledge updating, and catastrophic forgetting, pose significant barriers. Additionally, current AI Scientist systems exhibit inadequate scientific research abilities in key aspects such as generating high-quality hypotheses and employing correct validation methods. The paper also addresses ethical challenges, including the potential for misuse, entry into dangerous research domains, weakening of scientific training, and introduction of security vulnerabilities and biases. To mitigate these risks, a comprehensive system of generation management, ethical oversight, and quality evaluation is necessary.

Future Directions

The paper outlines potential directions to bridge the existing gaps in AI Scientist systems, including addressing fundamental model limitations, managing long-term research endeavors comprehensively, and developing robust communication protocols. Future pathways of AI Scientists can be envisioned along two interdependent paradigms: personalized AI Scientist systems tailored to individual human researchers and AI Scientist systems serving broader human society, accelerating global solutions.

Conclusion

The paper provides a valuable survey of AI Scientist systems, offering a structured framework for evaluating their capabilities and highlighting key areas for future development. By addressing the identified limitations and ethical challenges, the field can progress towards truly autonomous scientific intelligence.
