- The paper introduces semantic-aware permutation to efficiently identify and process critical tokens in Diffusion Transformers for video generation.

- It integrates a centroid-based estimation and customized attention kernel to optimize computational budgets and balance speed with quality.

- Results show up to 2.30x speedup on HunyuanVideo and PSNR improvements up to 30, underscoring practical performance gains.

Sparse VideoGen2: Accelerating Video Generation through Sparse Attention and Semantic-Aware Permutation

Introduction

Sparse VideoGen2 (SVG2) is a novel approach designed to enhance the efficiency of video generation in Diffusion Transformers (DiTs) by leveraging sparse attention mechanisms. DiTs are powerful in generating high-quality videos but suffer from significant latency due to the quadratic complexity of attention mechanisms. SVG2 addresses this bottleneck by focusing on the efficient computation of critical tokens through sparse attention, thus achieving a balance between computational efficiency and generation quality.

Technical Approach

SVG2 introduces a semantic-aware permutation method to optimize token clustering, focusing on semantic rather than positional similarity to improve the accuracy of critical token identification. This approach involves clustering tokens based on their semantic activations, allowing for more representative aggregated activations and better approximations of attention scores, which are crucial for identifying critical tokens accurately. The proposed semantic-aware permutation also reorganizes critical tokens into contiguous blocks, minimizing computation waste and enhancing GPU efficiency.

To further refine the model, SVG2 integrates a centroid-based estimation system that uses the cluster centroids to predict attention scores, assisting in the dynamic allocation of the computational budget. Additionally, SVG2’s implementation involves a customized attention kernel that supports variable block sizes, crucial for handling the diverse cluster sizes resulting from semantic-aware clustering.

Numerical Results

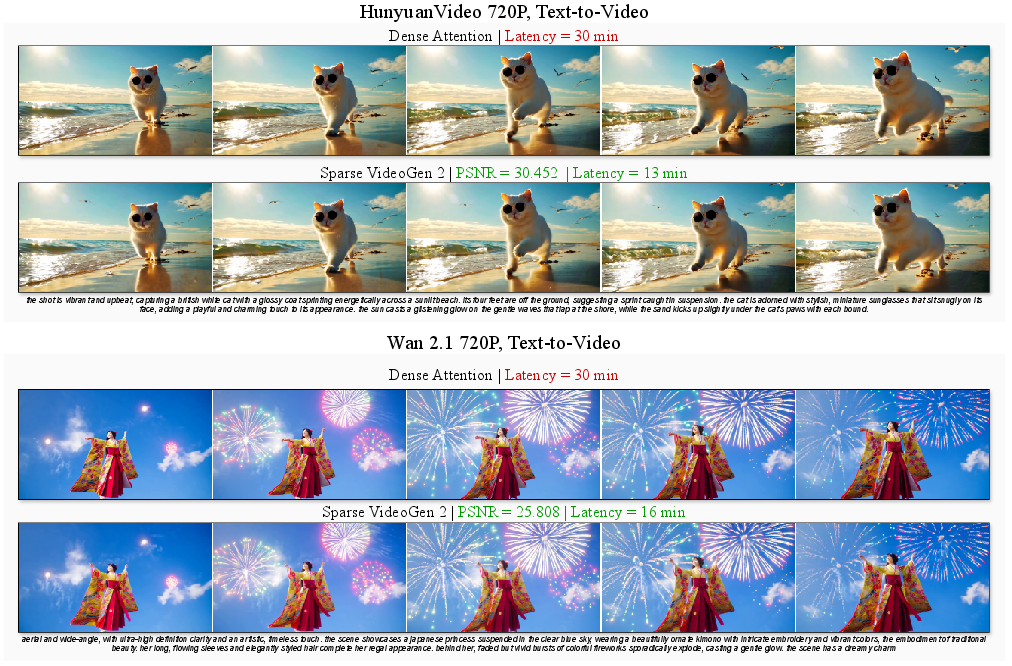

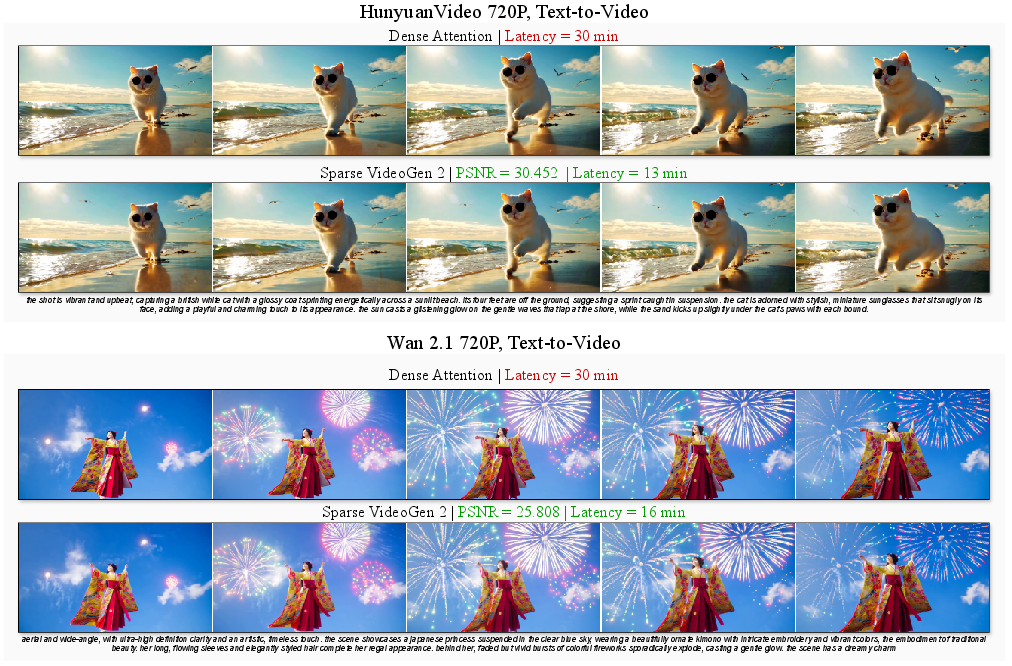

SVG2 demonstrates significant improvements in both speed and quality metrics. On a single H100 GPU, SVG2 achieves up to a 2.30 times speedup for HunyuanVideo and a 1.89 times speedup for Wan 2.1, with impressive PSNR values of up to 30 and 26, respectively. These results underscore SVG2's ability to maintain high video quality while significantly reducing latency (Figure 1).

Figure 1: SVG2 accelerates video generation while maintaining high quality. On a single H100, for HunyuanVideo and Wan 2.1, SVG2 achieves up to 2.30 and 1.89 end-to-end speedup, with a PSNR up to 30 and 26.

SVG2 builds on prior research by incorporating semantic clustering techniques, which differ from common position-based clustering methods that often lead to suboptimal predictions of attention scores and increased computation waste. SVG2's dynamic sparsity approach aligns with recent advances in adaptive attention mechanisms, focusing on runtime selection and clustering of critical tokens based on real-time data semantics.

Implications and Future Work

The introduction of semantic-aware permutation and centroid-based selection techniques in SVG2 provides a substantial leap toward efficient video generation using Diffusion Transformers. The practical implications are profound, suggesting that future frameworks could incorporate similar clustering methods to further exploit natural data sparsity. As video complexity and resolution demands continue to escalate, SVG2 offers a promising avenue toward sustainable computational demand without compromising on quality.

Potential future developments include exploring the extension of semantic-aware clustering to other domains of attention mechanisms beyond video generation or applying these principles to improve multi-modal generative models.

Conclusion

SVG2 marks a significant advancement in the field of video generation, providing an efficient and effective method for reducing latency without sacrificing output quality. By leveraging semantic-aware permutations and a robust computational framework, SVG2 paves the way for more sophisticated and scalable generative models in AI. Future research could further examine the adaptability of these methods to a broader range of generative tasks and attention-based architectures.