- The paper introduces a multi-agent framework, AgentDroid, that integrates multimodal analysis to detect fraudulent Android apps.

- It employs specialized agents using static analysis and prompt-engineered communication to achieve 91.70% accuracy.

- The approach reduces false positives compared to traditional methods, and the tool and dataset are released as open source to support further research.

AgentDroid: A Multi-Agent Framework for Detecting Fraudulent Android Applications

AgentDroid is a multi-agent framework that detects fraudulent Android applications by combining multimodal analysis with specialized agents. It targets the limitations of traditional detection systems by integrating multiple modalities and having the agents analyze them collaboratively.

Motivation and Background

As the number of Android applications has grown, so has the number of fraudulent apps, which pose cybersecurity threats such as data breaches and service disruptions. Existing detection methods based on static analysis and feature extraction suffer from high false-positive rates and handle multimodal data only to a limited extent. AgentDroid addresses these problems with a collaborative system of agents built on GPT-4o models, which is reported to improve detection accuracy and reduce false alarms.

Framework Architecture

AgentDroid operates through several specialized agents, each responsible for one aspect of an application. The following sections outline the architecture and its components:

Multi-Agent System

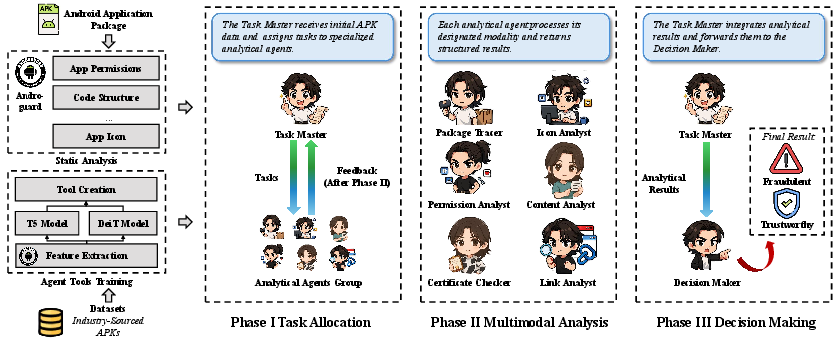

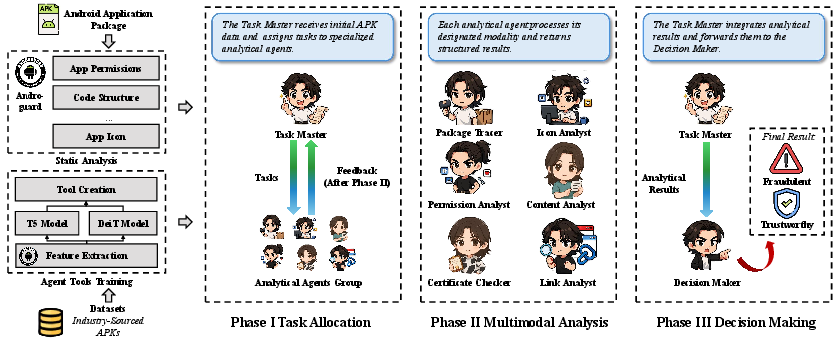

Figure 1: An Overview of AgentDroid.

The multi-agent system in AgentDroid includes roles such as the Task Master, Icon Analyst, and Permission Analyst, among others, each focusing on a distinct modality or task. The Task Master orchestrates these agents and aggregates their results into a single detection outcome. Dividing the work this way lets each agent specialize, which improves both accuracy and interpretability.
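The orchestration pattern described here can be illustrated with a minimal sketch. It is not AgentDroid's implementation: the agent functions below are plain-Python stand-ins for LLM-backed agents, and the `AgentReport` type, the feature keys, and the list of "risky" permissions are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class AgentReport:
    agent: str        # name of the agent that produced the report
    suspicious: bool  # the agent's own judgement
    rationale: str    # short human-readable explanation


# Hypothetical stand-ins: a real agent would query a model instead of
# applying a hard-coded rule.
def icon_analyst(features: dict) -> AgentReport:
    mimics = bool(features.get("icon_mimics_known_brand", False))
    reason = "icon resembles a known brand" if mimics else "icon looks original"
    return AgentReport("Icon Analyst", mimics, reason)


def permission_analyst(features: dict) -> AgentReport:
    # Illustrative watch list only; not taken from the paper.
    risky = {"android.permission.SEND_SMS", "android.permission.READ_SMS"}
    hits = sorted(risky & set(features.get("permissions", [])))
    reason = f"risky permissions: {hits}" if hits else "no risky permissions"
    return AgentReport("Permission Analyst", bool(hits), reason)


class TaskMaster:
    """Runs every registered agent and collects the reports in order."""

    def __init__(self, agents: List[Callable[[dict], AgentReport]]):
        self.agents = agents

    def run(self, features: dict) -> List[AgentReport]:
        return [agent(features) for agent in self.agents]
```

Because each agent returns a report with its own rationale, the aggregated list stays inspectable, which is one way the interpretability benefit mentioned above could be realized.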

Static Analysis and Task Allocation

AgentDroid begins by performing static analysis with tools such as Androguard to extract multimodal features (e.g., metadata, icons, permissions) from APK files. The Task Master then assigns tasks to the corresponding agents based on this initial data, so each agent analyzes only the features relevant to it and unnecessary computation is avoided.
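A rough sketch of the allocation step, assuming the static-analysis stage has already produced a dictionary of features. The feature keys and the key-to-agent mapping in `ROUTING` are hypothetical; the source does not specify which agent receives which feature.

```python
from typing import Dict

# Hypothetical mapping from extracted feature keys to the agent handling them.
ROUTING: Dict[str, str] = {
    "icon": "Icon Analyst",
    "permissions": "Permission Analyst",
    "package_name": "Package Tracer",
    "urls": "Link Analyst",
}


def allocate_tasks(features: Dict[str, object]) -> Dict[str, object]:
    """Return {agent_name: payload} for each feature that is present and non-empty."""
    tasks: Dict[str, object] = {}
    for key, agent in ROUTING.items():
        payload = features.get(key)
        if payload:  # skip missing or empty features so no agent runs needlessly
            tasks[agent] = payload
    return tasks
```

Skipping empty features is what keeps the overhead down in this sketch: an APK with no extracted URLs never invokes the Link Analyst.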

Dynamic Collaboration Among Agents

Each agent operates within a defined task scope and produces insights that together inform the Decision Maker's final verdict. Collaboration is coordinated through LangGraph and prompt-engineered communication, which lets agents adapt their focus dynamically based on intermediate results. For instance, when the Package Tracer detects suspicious patterns, the Link Analyst is triggered to scrutinize the app further.
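The conditional hand-off from the Package Tracer to the Link Analyst can be sketched as a small state graph. This is plain Python rather than LangGraph, and the node logic (a token watch list, a check for `http://` links) is a toy assumption chosen only to make the routing visible.

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, object]


def package_tracer(state: State) -> State:
    # Toy heuristic (assumption): flag package names containing a watch-listed token.
    name = str(state.get("package_name", ""))
    flagged = any(token in name for token in ("loan", "casino"))
    return {**state, "package_suspicious": flagged}


def link_analyst(state: State) -> State:
    # Toy heuristic (assumption): report links that do not use HTTPS.
    urls: List[str] = list(state.get("urls", []))
    return {**state, "insecure_links": [u for u in urls if u.startswith("http://")]}


def after_package_tracer(state: State) -> Optional[str]:
    # Conditional edge: escalate to the Link Analyst only on a positive detection.
    return "link_analyst" if state.get("package_suspicious") else None


NODES: Dict[str, Callable[[State], State]] = {
    "package_tracer": package_tracer,
    "link_analyst": link_analyst,
}
EDGES: Dict[str, Callable[[State], Optional[str]]] = {
    "package_tracer": after_package_tracer,
    "link_analyst": lambda state: None,  # terminal node
}


def run(state: State, start: str = "package_tracer") -> State:
    """Walk the graph from `start`, recording which nodes were visited."""
    visited: List[str] = []
    node: Optional[str] = start
    while node is not None:
        visited.append(node)
        state = NODES[node](state)
        node = EDGES[node](state)
    return {**state, "visited": visited}
```

For a package name that trips the watch list, `run` visits both nodes; for a clean one it stops after the Package Tracer.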

Decision-Making Process

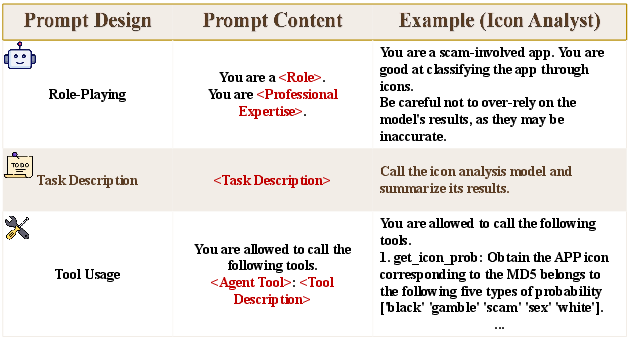

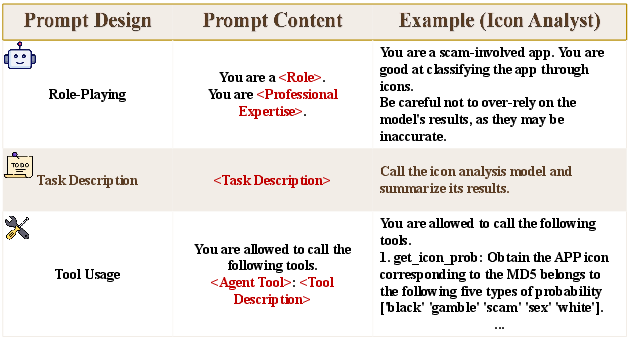

Figure 2: Prompt template used by AgentDroid. The complete prompt template is available in the authors' repository.

In the final phase, the results from all agents are integrated to classify the application as legitimate or fraudulent. The Decision Maker evaluates the coherence and strength of the evidence from each agent, so its verdict can adapt to the evidence available for a given app in a way that purely statistical models cannot.
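One way to picture this integration step is to collect the agents' findings into a single prompt and parse a one-word verdict from the reply. The wording below is invented for illustration and is not the prompt template from Figure 2; the function names are likewise hypothetical, and the model call itself is left out.

```python
from typing import Dict


def build_decision_prompt(reports: Dict[str, str]) -> str:
    """Assemble agent findings into one prompt (hypothetical wording)."""
    lines = ["You are the Decision Maker. Agent findings:"]
    for agent, finding in sorted(reports.items()):
        lines.append(f"- {agent}: {finding}")
    lines.append("Answer with exactly one word: FRAUDULENT or LEGITIMATE.")
    return "\n".join(lines)


def parse_verdict(reply: str) -> str:
    """Map a model reply to 'fraudulent' or 'legitimate', rejecting anything else."""
    text = reply.strip().upper()
    if text.startswith("FRAUDULENT"):
        return "fraudulent"
    if text.startswith("LEGITIMATE"):
        return "legitimate"
    raise ValueError(f"unrecognized verdict: {reply!r}")
```

Raising on an unrecognized reply, rather than defaulting to one class, avoids silently biasing the classifier when the model's output is malformed.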

Experimental Evaluation

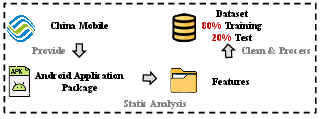

AgentDroid was evaluated on a dataset of 660 APKs, where it reached an accuracy of 91.70% and an F1 score of 91.68%. These results exceed those of traditional methods and recent LLM-based models in the reported comparison.
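For readers unfamiliar with the two metrics, the sketch below computes accuracy and the binary F1 score from confusion-matrix counts. The counts in the usage note are toy values, not the paper's data, and the source does not say whether its F1 is binary, macro, or weighted, so the binary definition here is an assumption.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for the positive (fraudulent) class."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

With toy counts such as `binary_metrics(tp=45, fp=5, fn=5, tn=45)`, precision and recall are both 0.9, so accuracy and F1 both come out at about 0.9. The two metrics diverge once false positives and false negatives are unbalanced.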

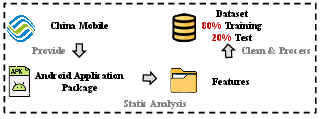

Figure 3: The workflow of curating the dataset.

Comparative Analysis

AgentDroid outperforms the baseline models, including ResNet-18 and traditional tools such as Drebin, on all reported benchmarks. This is attributed to its integrated multimodal analysis and to the distinct contributions of the specialized agents.

Conclusion

AgentDroid applies a multi-agent architecture to the automated detection of fraudulent Android applications. The tool and dataset are open source, which supports further research and development in this area. Future work will focus on incorporating dynamic analysis to cover runtime behavior and on evaluating broader datasets to improve generalizability and robustness.