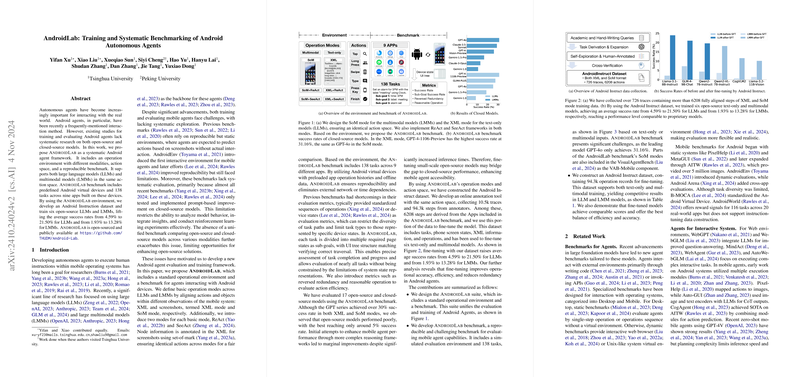

AndroidLab provides a systematic framework for the development, training, and evaluation of autonomous agents designed to interact with Android environments (Xu et al., 31 Oct 2024). It addresses the lack of standardized tooling and benchmarks for comparing open-source and closed-source models, particularly large language models (LLMs) and large multimodal models (LMMs), operating within the Android ecosystem. The framework comprises an operational environment, a defined action space, a reproducible benchmark suite, and a specialized dataset for instruction tuning.

AndroidLab Environment

The core of AndroidLab is its operational environment, designed to facilitate agent interaction with Android Virtual Devices (AVDs). This environment provides agents with multimodal observational data and accepts actions within a standardized space.

Modalities and Observation Space

The environment exposes several modalities so that the agent can perceive the device state comprehensively. These include:

- Screen Pixels: Raw visual information from the device screen.

- View Hierarchy: Structural information of the UI elements currently displayed, typically represented as an XML-like structure. This provides crucial context about interactable elements, their properties (e.g., resource ID, text content, bounding box), and their relationships.

- Task Description: Natural language instructions specifying the goal the agent needs to achieve.

This multimodal input stream allows agents, particularly LMMs, to leverage both visual and structural information for decision-making.
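As a rough illustration of how the structural modality can be consumed, the sketch below parses a view hierarchy and lists the clickable elements with their tap centers. It assumes an XML layout in the style of a `uiautomator dump` (attributes `text`, `resource-id`, `clickable`, `bounds`). The sample XML, the `UiElement` class, and the `interactable_elements` helper are hypothetical and are not taken from AndroidLab.

```python
import re
import xml.etree.ElementTree as ET
from dataclasses import dataclass

# Hypothetical sample in the style of a `uiautomator dump`; not AndroidLab output.
SAMPLE_XML = """<hierarchy rotation="0">
  <node class="android.widget.FrameLayout" text="" resource-id="" clickable="false" bounds="[0,0][1080,1920]">
    <node class="android.widget.EditText" text="" resource-id="com.example:id/subject" clickable="true" bounds="[40,200][1040,320]" />
    <node class="android.widget.Button" text="Send" resource-id="com.example:id/send" clickable="true" bounds="[800,1700][1040,1820]" />
  </node>
</hierarchy>"""

# Bounds are encoded as "[left,top][right,bottom]".
BOUNDS_RE = re.compile(r"\[(\d+),(\d+)\]\[(\d+),(\d+)\]")


@dataclass
class UiElement:
    cls: str
    text: str
    resource_id: str
    bounds: tuple  # (left, top, right, bottom) in pixels

    @property
    def center(self):
        left, top, right, bottom = self.bounds
        return ((left + right) // 2, (top + bottom) // 2)


def interactable_elements(xml_text):
    """Return clickable nodes in document order, skipping nodes without valid bounds."""
    root = ET.fromstring(xml_text)
    found = []
    for node in root.iter("node"):
        if node.get("clickable") != "true":
            continue
        match = BOUNDS_RE.fullmatch(node.get("bounds", ""))
        if match is None:
            continue
        found.append(UiElement(
            cls=node.get("class", ""),
            text=node.get("text", ""),
            resource_id=node.get("resource-id", ""),
            bounds=tuple(int(g) for g in match.groups()),
        ))
    return found


elements = interactable_elements(SAMPLE_XML)
for el in elements:
    print(el.resource_id, el.center)
```

A text-only LLM could receive a compact rendering of this list in its prompt, while an LMM could receive the same list alongside the screenshot.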

Action Space

A key contribution is the unified action space designed to be compatible with both LLMs and LMMs. This space abstracts common user interactions on Android devices. Supported actions include tapping a UI element (identified by text content, resource ID, or coordinates derived from the view hierarchy or visual grounding), entering text into fields, swiping, and system-level actions such as pressing the back or home button. Representing actions in a structured format (e.g., JSON or function calls) allows different model architectures to generate executable commands within the same framework. The design aims for reproducibility and simplifies adapting diverse models to the Android interaction task.
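To make the idea of a structured action format concrete, here is a minimal sketch that translates a JSON action into an `adb shell input` argument list. The JSON schema (`type`, `x`, `y`, `text`, `key`) and the `action_to_adb` helper are assumptions made for illustration. AndroidLab's actual action format may differ. The `input tap`, `input text`, `input swipe`, and `input keyevent` subcommands are standard Android shell tools.

```python
import json

# Hypothetical mapping from schema key names to Android key codes.
KEYCODES = {"back": "KEYCODE_BACK", "home": "KEYCODE_HOME"}


def action_to_adb(action_json, serial="emulator-5554"):
    """Translate one JSON action into an adb argument list (built, not executed)."""
    action = json.loads(action_json)
    base = ["adb", "-s", serial, "shell", "input"]
    kind = action.get("type")
    if kind == "tap":
        return base + ["tap", str(int(action["x"])), str(int(action["y"]))]
    if kind == "type":
        # `input text` expects spaces to be written as %s.
        return base + ["text", action["text"].replace(" ", "%s")]
    if kind == "swipe":
        coords = [action[k] for k in ("x1", "y1", "x2", "y2")]
        return base + ["swipe"] + [str(int(c)) for c in coords]
    if kind == "press":
        return base + ["keyevent", KEYCODES[action["key"]]]
    raise ValueError(f"unsupported action type: {kind!r}")


print(action_to_adb('{"type": "tap", "x": 920, "y": 1760}'))
print(action_to_adb('{"type": "press", "key": "back"}'))
```

Because the model only emits JSON, the same executor can serve a text-only LLM and an LMM. Passing the resulting list to `subprocess.run` would execute it against a running device.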

Android Virtual Devices (AVDs)

AndroidLab utilizes pre-configured AVDs as the execution backend. This ensures a controlled and reproducible environment. The framework includes setup scripts and configurations for these AVDs, which host the applications used in the benchmark tasks. Using AVDs allows for parallel execution and isolation, facilitating large-scale experimentation and benchmarking.
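One way parallel, isolated instances could be planned is sketched below. The AVD name `androidlab_avd` and the `emulator_commands` helper are hypothetical, and AndroidLab's own setup scripts may work differently. The flags used (`-avd`, `-port`, `-read-only`, `-no-window`, `-no-snapshot-save`) are standard Android Emulator options, and the sketch steps ports by two to follow the emulator's default numbering, in which each instance appears to adb as `emulator-<port>`.

```python
def emulator_commands(avd_name, n_instances, first_port=5554):
    """Plan launch commands for several headless instances of one AVD.

    Returns a list of (adb_serial, argv) pairs; nothing is launched here.
    """
    plans = []
    for i in range(n_instances):
        port = first_port + 2 * i  # even console ports: 5554, 5556, ...
        argv = [
            "emulator",
            "-avd", avd_name,
            "-port", str(port),
            "-read-only",          # lets several instances share one AVD
            "-no-window",          # headless
            "-no-snapshot-save",   # discard state changes on exit
        ]
        plans.append((f"emulator-{port}", argv))
    return plans


plans = emulator_commands("androidlab_avd", 3)
for serial, argv in plans:
    print(serial, " ".join(argv))
```

Discarding state on exit means every run starts from the same image, which is what makes results comparable across agents.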

AndroidLab Benchmark

The framework includes a reproducible benchmark consisting of 138 tasks distributed across nine common Android applications. These applications are pre-installed on the provided AVD configurations.

Task Design

The tasks are designed to cover a range of typical user interactions and complexities, from simple navigation and information retrieval to more complex multi-step operations involving data entry and manipulation. Examples include tasks like "Send an email with subject X and body Y," "Set a reminder for time T," or "Find directions from location A to location B." Each task is defined by a natural language instruction.

Evaluation Metrics

The primary evaluation metric is the Success Rate (SR), which measures the percentage of tasks completed successfully by the agent. Task completion is typically determined by checking if the final state of the application or device matches the state expected upon successful execution of the given instruction. The benchmark infrastructure provides mechanisms for automated evaluation based on predefined success criteria for each task.
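The metric itself is simple arithmetic. The following sketch shows one way a final-state check and the Success Rate could be computed. The state dictionaries, task names, and helper names are invented for illustration, and the benchmark's real success criteria are task-specific.

```python
def state_matches(final_state, expected):
    """True if every expected key has the expected value in the final state."""
    return all(final_state.get(key) == value for key, value in expected.items())


def success_rate(outcomes):
    """Percentage of tasks whose outcome is True."""
    if not outcomes:
        raise ValueError("no tasks were evaluated")
    return 100.0 * sum(outcomes.values()) / len(outcomes)


# Hypothetical expected and observed final states for four tasks.
expected = {
    "set_alarm": {"alarm_time": "07:30", "alarm_enabled": True},
    "send_email": {"last_sent_subject": "X"},
    "add_contact": {"contact_name": "Alice"},
    "open_settings": {"foreground_app": "settings"},
}
observed = {
    "set_alarm": {"alarm_time": "07:30", "alarm_enabled": True, "volume": 5},
    "send_email": {"last_sent_subject": "Y"},
    "add_contact": {},
    "open_settings": {"foreground_app": "launcher"},
}

outcomes = {task: state_matches(observed[task], expected[task]) for task in expected}
sr = success_rate(outcomes)
print(f"SR = {sr:.2f}%")
```

Here only `set_alarm` satisfies its criteria, so one of four tasks succeeds.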

Agent Training and Dataset

To address the performance gap of existing models on Android interaction tasks, AndroidLab supports agent training through a custom dataset.

Android Instruction Dataset

An "Android Instruction" dataset was curated using the AndroidLab environment. This dataset comprises trajectories of interactions, where each step includes the multimodal observation (screen, view hierarchy), the natural language instruction, and the corresponding ground-truth action taken to progress towards the task goal. This dataset is specifically designed for instruction-tuning LLMs and LMMs to improve their ability to map instructions and device states to appropriate actions within the Android environment.
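A trajectory step of this kind could be turned into a supervised training example roughly as follows. The field names, the prompt template, and the `to_sft_example` helper are assumptions for illustration and do not reflect the dataset's actual schema.

```python
import json
from dataclasses import dataclass


@dataclass
class Step:
    """One step of a recorded trajectory (hypothetical schema)."""
    instruction: str      # natural language task description
    view_hierarchy: str   # textual rendering of the UI structure
    screenshot_path: str  # image used only by multimodal models
    action: dict          # ground-truth action for this step


def to_sft_example(step):
    """Build a prompt/completion pair; the action is serialized as the target."""
    prompt = (
        f"Task: {step.instruction}\n"
        f"UI:\n{step.view_hierarchy}\n"
        "Next action:"
    )
    return {
        "prompt": prompt,
        "image": step.screenshot_path,
        "completion": json.dumps(step.action, sort_keys=True),
    }


step = Step(
    instruction="Send an email with subject X and body Y",
    view_hierarchy="[0] EditText id=subject\n[1] Button text=Send",
    screenshot_path="step_003.png",
    action={"type": "tap", "x": 920, "y": 1760},
)
example = to_sft_example(step)
print(example["prompt"])
print(example["completion"])
```

A text-only model would ignore the `image` field, while a multimodal model would receive the screenshot alongside the prompt.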

Training Methodology

The paper demonstrates the effectiveness of this dataset by fine-tuning several open-source models. Six models (both LLMs and LMMs) were trained using the Android Instruction dataset. The training objective is typically to maximize the likelihood of predicting the correct action given the instruction and the current state observation. Standard supervised fine-tuning techniques are employed.
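Under the description above, the objective can be written as a standard negative log-likelihood. The notation is an assumption introduced here: $\mathcal{D}$ is the set of trajectories, $I$ the instruction, $o_t$ the observation at step $t$, $a_t$ the ground-truth action, and $\theta$ the model parameters. Whether earlier steps are also included in the conditioning context is not specified in this summary.

```latex
\mathcal{L}(\theta) = - \sum_{(I,\, o_{1:T},\, a_{1:T}) \in \mathcal{D}} \; \sum_{t=1}^{T} \log p_\theta\!\left(a_t \mid I,\, o_t\right)
```

Minimizing $\mathcal{L}(\theta)$ is equivalent to maximizing the likelihood of the recorded actions. For autoregressive models, $\log p_\theta(a_t \mid I, o_t)$ decomposes into a sum over the tokens of the serialized action.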

Experimental Results and Findings

Systematic benchmarking was performed using the AndroidLab framework, evaluating both pre-trained and fine-tuned models.

Baseline Performance

Initial evaluations revealed low success rates for pre-trained, off-the-shelf models on the benchmark tasks. Average success rates were reported as 4.59% for the evaluated LLMs and 1.93% for the LMMs. This highlights the challenge of applying general-purpose models directly to complex, goal-oriented interaction tasks within the Android GUI paradigm without specific adaptation.

Post-Training Performance Improvement

Significant performance improvements were observed after fine-tuning the open-source models on the Android Instruction dataset.

- The average success rate for the fine-tuned LLMs increased from 4.59% to 21.50%.

- The average success rate for the fine-tuned LMMs increased from 1.93% to 13.28%.

These results indicate that fine-tuning on the curated dataset within the AndroidLab framework substantially improves agent capabilities in Android interaction. The LMMs started from a lower baseline and remain below the LLMs in absolute terms, but their larger relative gain suggests untapped potential. Further optimization or architectural adaptation may be needed for them to combine visual cues with structural information effectively. The framework also supports evaluating closed-source models (e.g., via APIs), allowing for broader comparisons, although the paper primarily focused on improvements achieved through fine-tuning open-source models.
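The absolute and relative gains implied by the reported averages can be checked with a few lines of arithmetic. The figures are those quoted above, and the `gains` helper is introduced only for this illustration.

```python
# Reported average success rates in percent: (before, after) fine-tuning.
REPORTED = {"LLM": (4.59, 21.50), "LMM": (1.93, 13.28)}


def gains(before, after):
    """Absolute gain in percentage points and relative improvement factor."""
    return {
        "absolute_points": round(after - before, 2),
        "relative_factor": round(after / before, 2),
    }


summary = {family: gains(*rates) for family, rates in REPORTED.items()}
for family, result in summary.items():
    print(family, result)
```

The LLMs gain 16.91 points (about 4.68 times the baseline), while the LMMs gain 11.35 points (about 6.88 times the baseline).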

Conclusion

AndroidLab provides an open-source, systematic framework and benchmark for Android autonomous agents (Xu et al., 31 Oct 2024). Its standardized environment, unified action space, diverse task suite, and curated training dataset facilitate reproducible research and development in this area. The improvement in success rates after fine-tuning underscores the importance of specialized training data and methodologies for enabling effective agent interaction with mobile GUIs. The framework serves as a platform for future research on Android agents, supporting the evaluation and comparison of a wide range of AI models.