- The paper presents CROSS-GAiT, a cross-attention multimodal fusion approach that dynamically adapts gait parameters for quadrupedal robots in complex terrains.

- It efficiently integrates visual and proprioceptive sensor data using a vision transformer and dilated causal convolutions to adjust gait in real time.

- Empirical results on the Ghost Robotics Vision 60 platform demonstrate notable improvements in success rate, energy efficiency, and navigation speed.

CROSS-GAiT: An Analytical Overview for Advanced Gait Adaptation in Quadrupedal Robotics

Introduction

The paper "CROSS-GAiT: Cross-Attention-Based Multimodal Representation Fusion for Parametric Gait Adaptation in Complex Terrains" introduces CROSS-GAiT, a method for improving quadrupedal robot locomotion. It uses cross-attention-based multimodal fusion to integrate visual and proprioceptive sensory inputs and dynamically adapt gait parameters such as step height and hip splay.

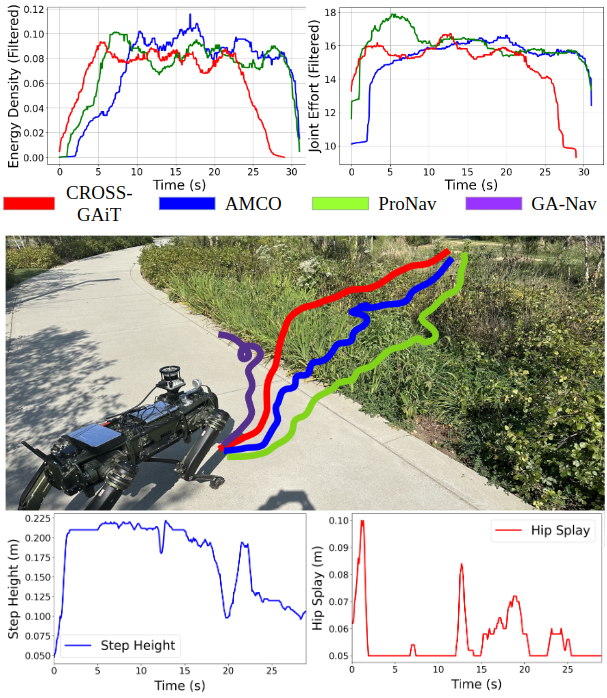

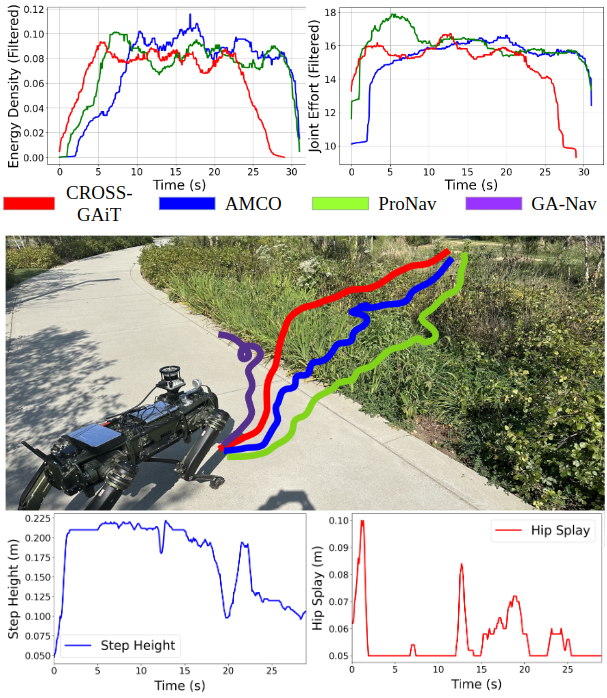

Figure 1: Comparison of CROSS-GAiT with state-of-the-art methods showing its superior adaptability in complex terrains.

Algorithm and Model Architecture

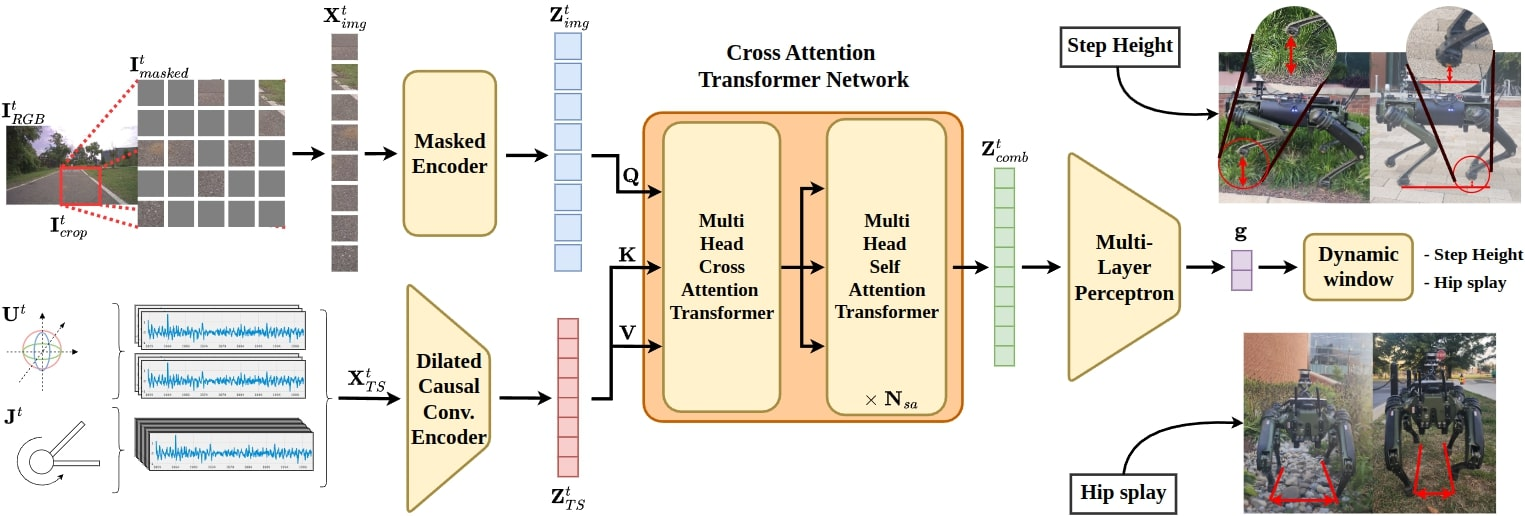

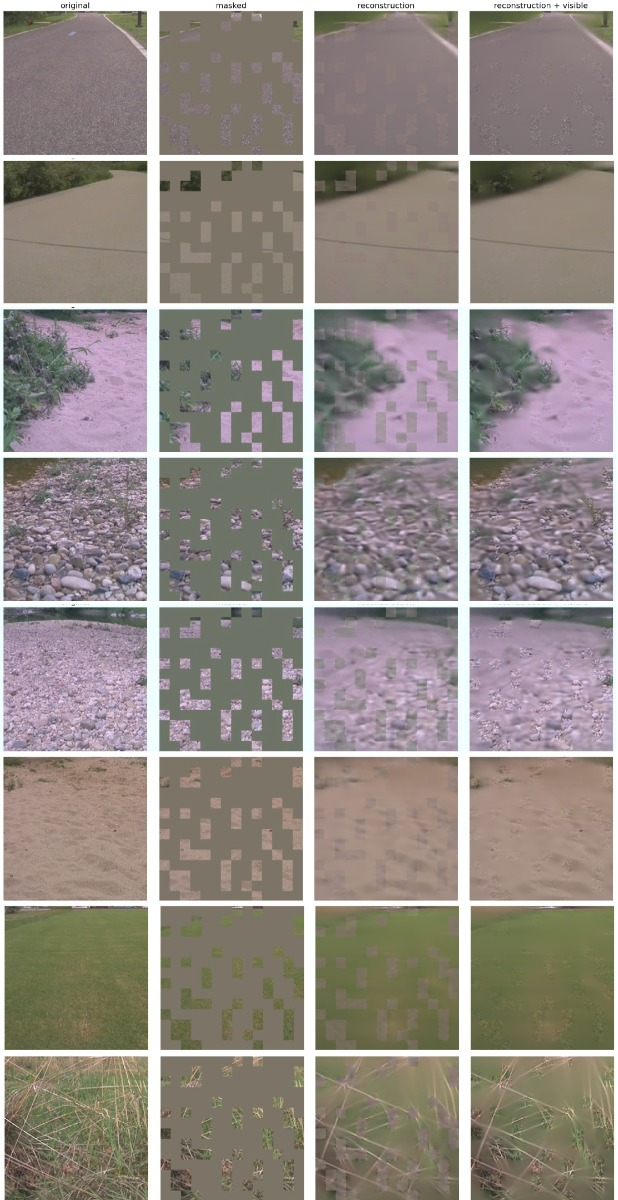

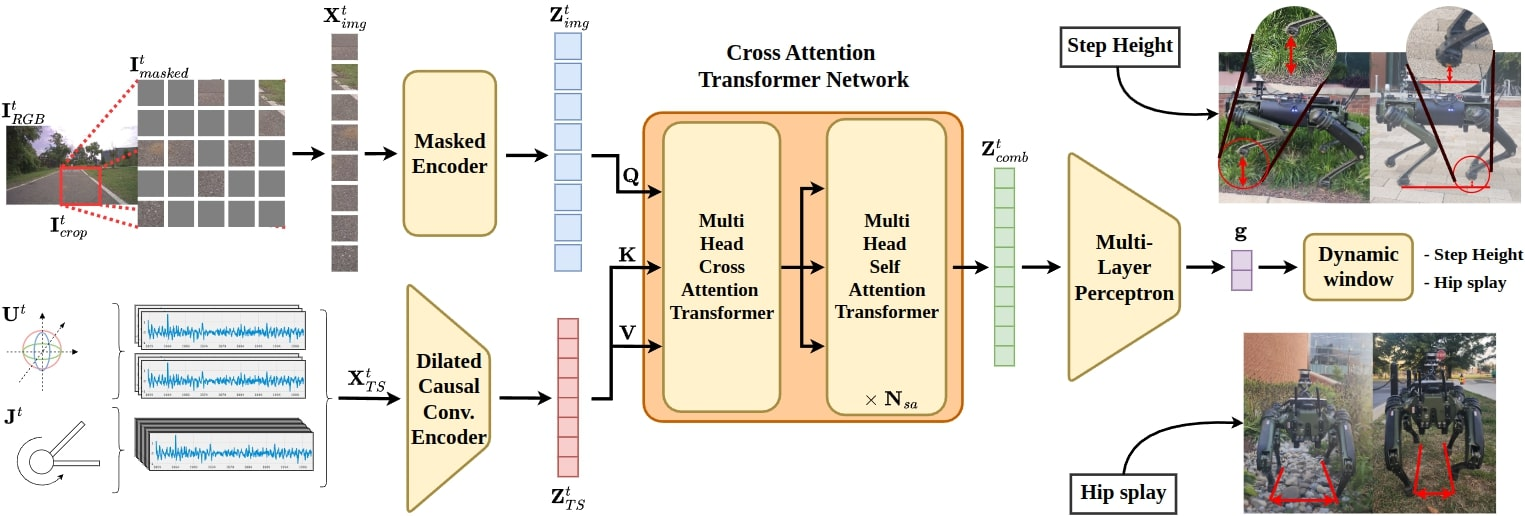

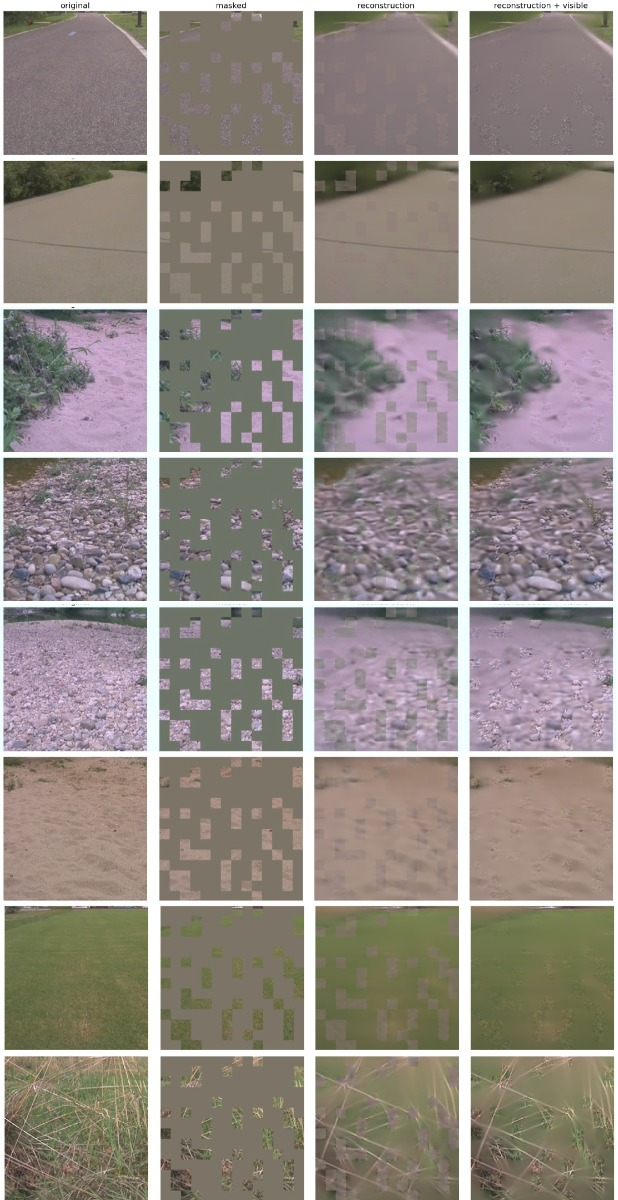

CROSS-GAiT's architecture combines vision-based and proprioceptive sensing. A masked autoencoder (MAE) built on a Vision Transformer (ViT) encodes visual input into terrain features (Figure 2). In parallel, a dilated causal convolutional encoder processes time-series sensor inputs, such as IMU readings and joint effort, into temporal terrain representations.

Figure 3: Architecture using a masked autoencoder for image data and dilated causal convolutions for IMU and joint effort data, followed by fusion of the two.
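To make the proprioceptive branch concrete, the following is a minimal pure-Python sketch of a dilated causal convolution, the building block named above. It is not the paper's encoder: the function names, the kernel size of 2, the dilation schedule (1, 2, 4), and the ReLU between layers are all assumptions chosen for illustration.

```python
from typing import List, Sequence


def dilated_causal_conv1d(
    x: Sequence[float], kernel: Sequence[float], dilation: int = 1
) -> List[float]:
    """Compute y[t] = sum_k kernel[k] * x[t - k * dilation].

    Indices before the start of the signal are treated as zero, so y[t]
    depends only on x[0..t] (causality).
    """
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Number of past-and-present samples one output of the stack can see."""
    return 1 + (kernel_size - 1) * sum(dilations)


def encode(
    x: Sequence[float],
    kernels: Sequence[Sequence[float]],
    dilations: Sequence[int],
) -> List[float]:
    """Stack dilated causal layers with a ReLU after each (illustrative)."""
    h = list(x)
    for kernel, d in zip(kernels, dilations):
        h = [max(0.0, v) for v in dilated_causal_conv1d(h, kernel, d)]
    return h


# Hypothetical single-channel signal standing in for one IMU axis.
signal = [1.0] + [0.0] * 9
features = encode(signal, kernels=[[1.0, 1.0]] * 3, dilations=[1, 2, 4])
```

Doubling the dilation at each layer lets three small kernels cover eight time steps, which is why this family of encoders suits long sensor histories at low cost.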

A cross-attention transformer network then fuses the two modalities into a unified latent representation that drives gait parameter adjustments. This lets the robot maintain stability and energy efficiency as terrain conditions change.
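The fusion step can be illustrated with single-head scaled dot-product cross-attention. This is a sketch under stated assumptions, not the paper's network: it omits the learned query, key, and value projections, and it assumes proprioceptive tokens act as queries while visual tokens supply keys and values (the summary does not specify the direction). All names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Each query token attends over all key tokens from the other modality.

    Returns one fused vector per query: a weighted average of the value
    vectors, with weights softmax(q . k / sqrt(d)).
    """
    d = len(keys[0])
    fused = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys
        ]
        weights = softmax(scores)
        fused.append(
            [
                sum(w * v[j] for w, v in zip(weights, values))
                for j in range(len(values[0]))
            ]
        )
    return fused


# Hypothetical tokens: one proprioceptive query, two visual key/value tokens.
proprio_tokens = [[10.0, 0.0]]
visual_keys = [[10.0, 0.0], [0.0, 10.0]]
visual_values = [[1.0, 2.0], [3.0, 4.0]]
fused_tokens = cross_attention(proprio_tokens, visual_keys, visual_values)
```

Because the weights are recomputed from the inputs on every call, the mixing between modalities changes with the terrain, unlike a fixed linear map applied to concatenated features.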

Fusion and Gait Adaptation Mechanism

CROSS-GAiT fuses visual and proprioceptive data with a cross-attention mechanism, in contrast to static transformations such as MLPs, which often lack adaptability. Because attention weights are recomputed for each input, features from the two modalities are aligned dynamically, which supports a fuller understanding of the terrain. The system can therefore adapt its gait continuously and in real time rather than relying on a preset collection of discrete gaits.

A multi-layer perceptron regressor maps the fused latent representation to gait parameters. Training includes a contrastive loss that helps the model learn discriminative features, which are important for terrain classification. Because the regressor's output is continuous rather than a choice among fixed gaits, the approach is presented as more robust in real-world scenarios than traditional discrete gait transition systems.
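The two ideas in this paragraph can be sketched as follows. Everything here is an assumption made for illustration: the one-hidden-layer regressor, the sigmoid that squashes outputs into hypothetical parameter bounds, and the triplet-margin loss, which is used as a simple stand-in because the exact contrastive formulation is not given in this overview.

```python
import math
from typing import List, Sequence, Tuple


def linear(x: Sequence[float], weights, bias) -> List[float]:
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def sigmoid(r: float) -> float:
    """Overflow-safe logistic function."""
    if r >= 0:
        return 1.0 / (1.0 + math.exp(-r))
    e = math.exp(r)
    return e / (1.0 + e)


def gait_regressor(
    latent: Sequence[float], w1, b1, w2, b2, bounds: Sequence[Tuple[float, float]]
) -> List[float]:
    """Map a fused latent vector to continuous gait parameters within bounds."""
    hidden = [max(0.0, h) for h in linear(latent, w1, b1)]  # ReLU
    raw = linear(hidden, w2, b2)
    return [lo + (hi - lo) * sigmoid(r) for r, (lo, hi) in zip(raw, bounds)]


def triplet_contrastive_loss(
    anchor: Sequence[float],
    positive: Sequence[float],
    negative: Sequence[float],
    margin: float = 1.0,
) -> float:
    """Pull same-terrain embeddings together, push different ones apart."""

    def dist(a, b):
        return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

    return max(0.0, dist(anchor, positive) - dist(anchor, negative) + margin)


# Hypothetical bounds for (step height, hip splay); not values from the paper.
BOUNDS = [(0.0, 0.2), (-0.1, 0.1)]
zero_w1, zero_b1 = [[0.0, 0.0]] * 3, [0.0] * 3
zero_w2, zero_b2 = [[0.0] * 3] * 2, [0.0, 0.0]
params = gait_regressor([0.4, -0.7], zero_w1, zero_b1, zero_w2, zero_b2, BOUNDS)
```

With untrained (all-zero) weights the regressor returns the midpoint of each range; training would move the outputs smoothly within the bounds, which is what allows continuous adaptation instead of switching between fixed gaits.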

Figure 2: Image reconstruction outputs illustrating the MAE's capacity to capture detailed terrain features.

Empirical Evaluation and Results

CROSS-GAiT was evaluated on the Ghost Robotics Vision 60 platform across four complex terrain scenarios that combine hard surfaces, vegetation, sand, and rocks. The reported results show improvements in success rate, energy efficiency, and navigation speed (Table 1).

Practical Implications and Future Directions

CROSS-GAiT's multimodal fusion has direct implications for robotic navigation in unstructured environments. By favoring real-time adaptation over static configurations, it addresses the needs of mobile robots that must navigate challenging terrain efficiently.

Future work could extend the architecture to a broader range of terrain types, potentially by adding sensing modalities such as thermal imaging or LiDAR. Reinforcement learning could also be explored as a way to refine parameter adjustments automatically as environmental conditions change, without pre-labeled terrain data.

Conclusion

CROSS-GAiT advances adaptive navigation for quadrupedal robots in complex terrains. Its cross-attention-based multimodal fusion improves adaptability and energy efficiency relative to the methods it is compared against. Approaches of this kind may support increasingly autonomous systems that perform robustly in diverse and dynamic environments.