- The paper presents a novel E(3)-equivariant GNN framework that predicts phonons at lower computational cost than traditional DFT-based approaches.

- It leverages symmetry-aware models and Hessian-derived dynamical matrices to achieve high accuracy in phonon band predictions.

- The integration within the Phonax framework and successful application to diverse materials underscore its potential for high-throughput screening and vibrational analysis.

Phonon Predictions with E(3)-Equivariant Graph Neural Networks

Phonons, the quantized vibrational modes of atomic structures, are central to understanding material properties, including mechanical, thermal, transport, and superconducting behavior. Conventional phonon prediction relies on computationally expensive methods such as density functional theory (DFT) and density functional perturbation theory (DFPT). This paper introduces E(3)-equivariant graph neural networks as efficient tools for phonon prediction; because the models are symmetry-aware, their predictions automatically respect crystal symmetries.

Equivariant Neural Network Architecture

The proposed model uses E(3)-equivariant graph neural networks that parameterize atomic interactions while preserving 3D Euclidean symmetries. Building these symmetries into the model makes it data-efficient when predicting energies and atomic forces for 3D atomistic systems.
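To make the symmetry property concrete, the following is a minimal JAX sketch, not the paper's network. It assumes a hypothetical `toy_energy` that depends only on interatomic distances, and checks that the energy is invariant and the forces are equivariant under a rotation plus translation. The function name, geometry, and rotation angle are all illustrative assumptions.

```python
import jax
import jax.numpy as jnp

jax.config.update("jax_enable_x64", True)

def toy_energy(positions):
    """Hypothetical pair energy that depends only on interatomic distances."""
    n = positions.shape[0]
    i, j = jnp.triu_indices(n, k=1)            # unique atom pairs, no self-pairs
    dist = jnp.linalg.norm(positions[i] - positions[j], axis=-1)
    return jnp.sum((dist - 1.0) ** 2)

def forces(positions):
    # Forces are the negative gradient of the energy.
    return -jax.grad(toy_energy)(positions)

positions = jnp.array([[0.0, 0.0, 0.0], [1.1, 0.0, 0.0], [0.2, 0.9, 0.3]])

# Rigid motion: rotation about z by 0.7 rad followed by a translation.
c, s = jnp.cos(0.7), jnp.sin(0.7)
rotation = jnp.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
shift = jnp.array([0.5, -1.0, 2.0])
moved = positions @ rotation.T + shift

# Energy is unchanged; forces rotate with the structure.
energy_invariant = jnp.allclose(toy_energy(positions), toy_energy(moved))
forces_equivariant = jnp.allclose(forces(moved), forces(positions) @ rotation.T)
```

An equivariant network guarantees the same two properties by construction for its learned energy, so it does not need to learn them from rotated copies of the training data.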

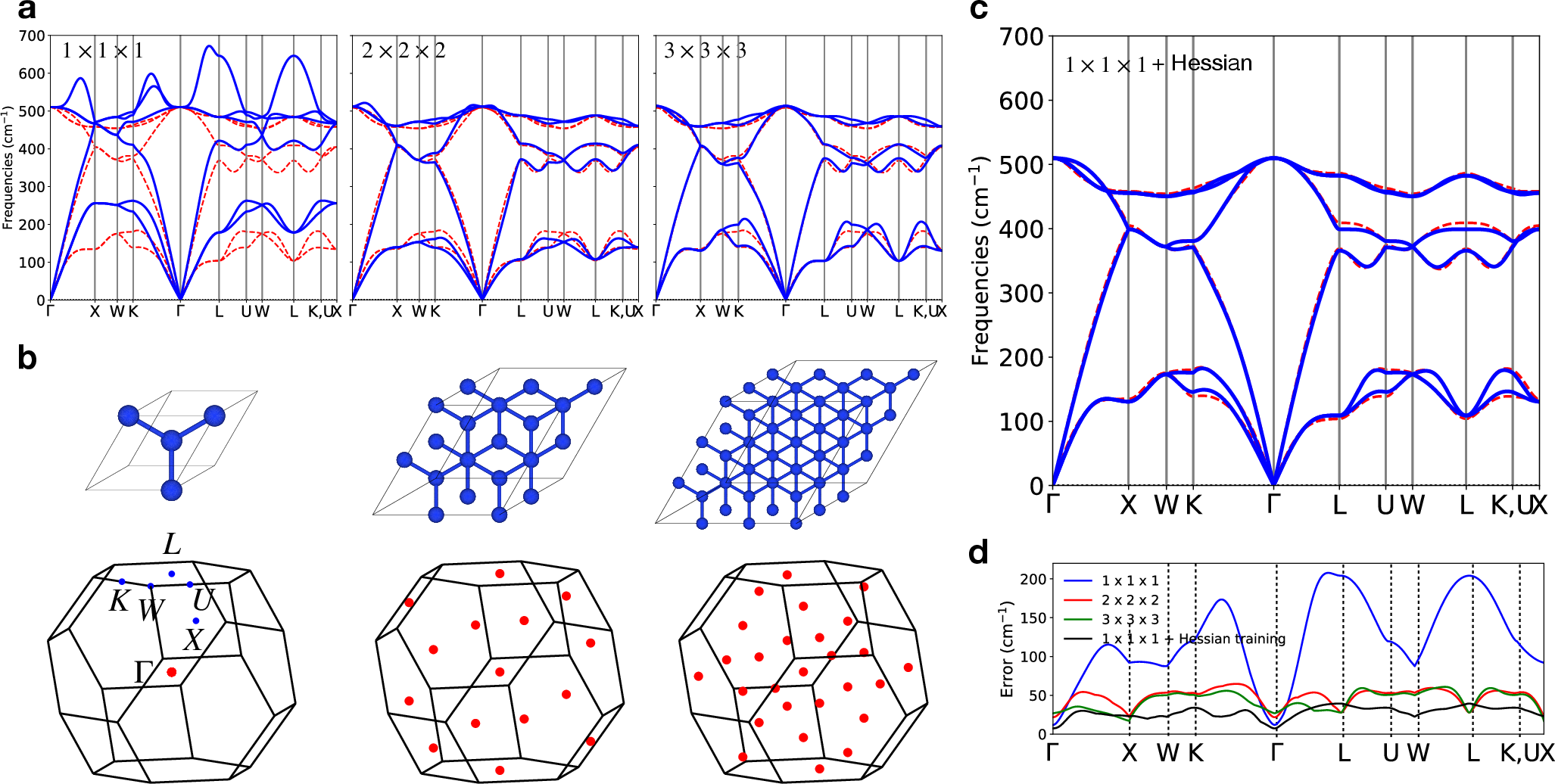

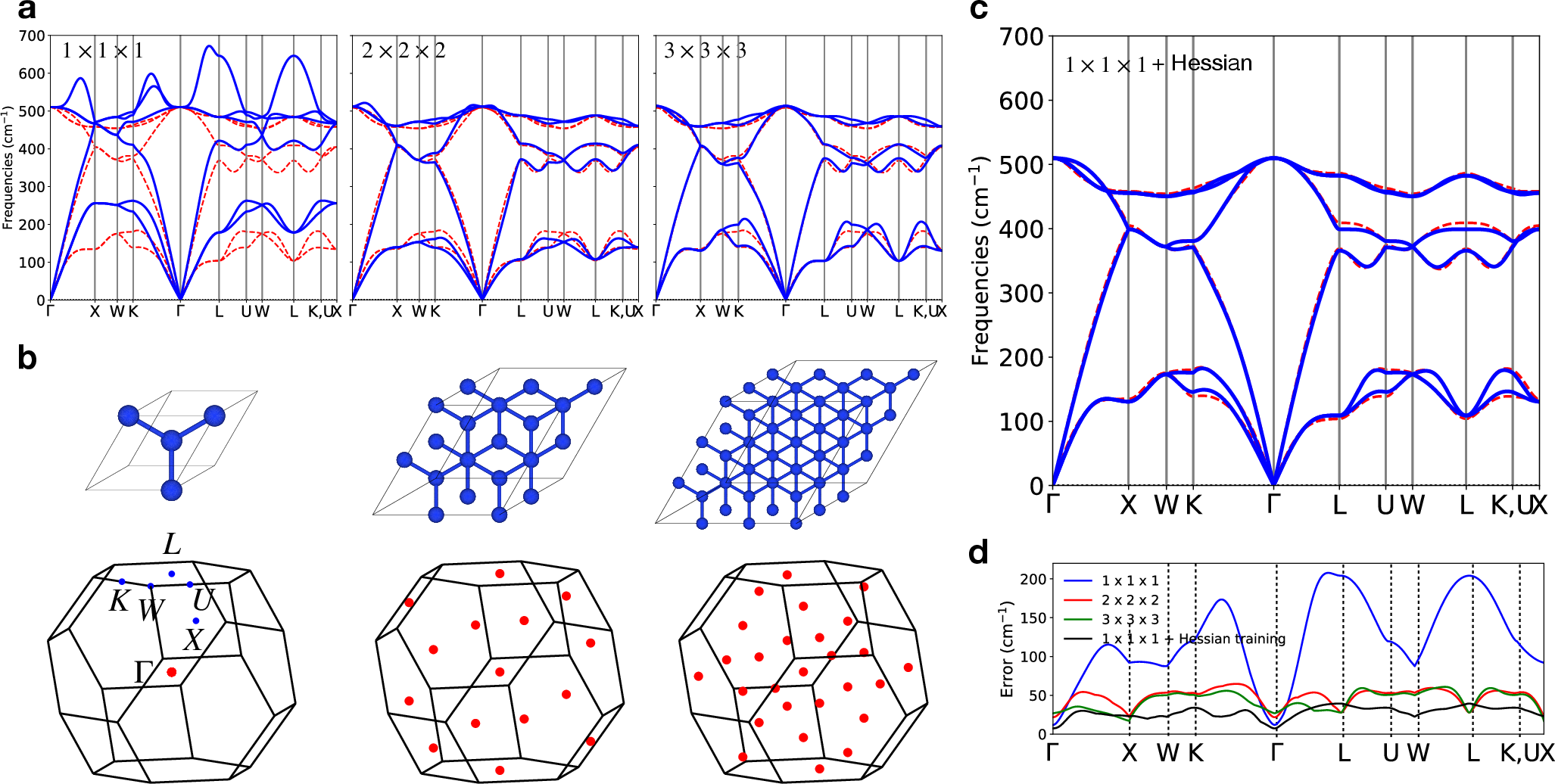

Figure 1: Architecture comparison showcasing the efficiency of equivariant models on phonon band predictions.

These models are trained on energy and force data, and the dynamical matrix is obtained from the second derivatives (the Hessian) of the predicted energy with respect to atomic positions. The accuracy of phonon dispersion predictions improves significantly when the training data come from larger supercells, as benchmarks on silicon crystals illustrate.
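The step from energy to vibrational frequencies can be sketched in JAX as follows. This is an illustration under stated assumptions, not the paper's model: the harmonic two-atom `energy`, the constants `SPRING_K` and `BOND_LENGTH`, and the masses are hypothetical stand-ins for a trained network and a real structure.

```python
import jax
import jax.numpy as jnp

jax.config.update("jax_enable_x64", True)

SPRING_K = 2.0       # hypothetical force constant
BOND_LENGTH = 1.0    # hypothetical equilibrium distance
masses = jnp.array([1.0, 3.0])

def energy(positions):
    """Toy stand-in for a learned energy model: one harmonic bond."""
    d = jnp.linalg.norm(positions[0] - positions[1])
    return 0.5 * SPRING_K * (d - BOND_LENGTH) ** 2

# Equilibrium geometry of the toy molecule.
positions = jnp.array([[0.0, 0.0, 0.0], [BOND_LENGTH, 0.0, 0.0]])
n_atoms = positions.shape[0]

# jax.hessian returns shape (n_atoms, 3, n_atoms, 3); flatten to (3N, 3N).
hessian = jax.hessian(energy)(positions).reshape(3 * n_atoms, 3 * n_atoms)

# Mass-weight the Hessian: D_ij = H_ij / sqrt(m_i * m_j).
inv_sqrt_m = 1.0 / jnp.sqrt(jnp.repeat(masses, 3))
dynamical_matrix = hessian * inv_sqrt_m[:, None] * inv_sqrt_m[None, :]

# Eigenvalues are squared frequencies; clamp round-off negatives to zero.
eigenvalues = jnp.linalg.eigvalsh(dynamical_matrix)
frequencies = jnp.sqrt(jnp.maximum(eigenvalues, 0.0))
```

For this toy system, five modes are zero (translations and rotations) and the remaining stretch mode has frequency sqrt(k/mu), where mu is the reduced mass.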

Implementation in Phonax Framework

The Phonax framework, built on JAX, uses automatic differentiation to compute Hessian matrices. It efficiently predicts the dynamical properties of periodic crystals and molecules using graph neural networks. An extended graph construction generalizes periodic boundary conditions so that the required derivatives can be evaluated for both phonons and molecular vibrational modes.
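One way to picture the extended-graph idea is a 1D monatomic chain in which the central cell and its periodic images are treated as independent coordinates, so that the Hessian row of the central atom yields the real-space force constants. The sketch below is a simplified illustration and does not reproduce Phonax's actual API; `extended_energy`, the toy parameters, and the nearest-neighbor spring model are assumptions.

```python
import jax
import jax.numpy as jnp

jax.config.update("jax_enable_x64", True)

SPRING_K, MASS, LATTICE_A = 1.5, 2.0, 1.0     # hypothetical toy parameters
cell_offsets = jnp.array([-1.0, 0.0, 1.0])    # central cell plus two periodic images
center = 1                                    # index of the central cell

def extended_energy(x):
    """Energy of the bonds touching the central atom; x holds one coordinate per cell."""
    bond_lengths = x[1:] - x[:-1]
    return 0.5 * SPRING_K * jnp.sum((bond_lengths - LATTICE_A) ** 2)

x_eq = cell_offsets * LATTICE_A
# Only the central row is physically meaningful: image atoms lack their own neighbors here.
hessian = jax.hessian(extended_energy)(x_eq)

def phonon_frequency(q):
    # D(q) = sum_R H(0, R) exp(i q R) / m, summed over the cells in the extended graph.
    phases = jnp.exp(1j * q * cell_offsets * LATTICE_A)
    d_q = jnp.sum(hessian[center] * phases) / MASS
    return jnp.sqrt(jnp.maximum(d_q.real, 0.0))

q_points = jnp.linspace(-jnp.pi / LATTICE_A, jnp.pi / LATTICE_A, 21)
omega = jax.vmap(phonon_frequency)(q_points)

# Textbook dispersion of the nearest-neighbor chain, for comparison.
omega_exact = 2.0 * jnp.sqrt(SPRING_K / MASS) * jnp.abs(jnp.sin(0.5 * q_points * LATTICE_A))
```

In a real crystal the same Fourier sum runs over 3D lattice vectors and all atoms in the unit cell, with the range of images set by the model's interaction cutoff.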

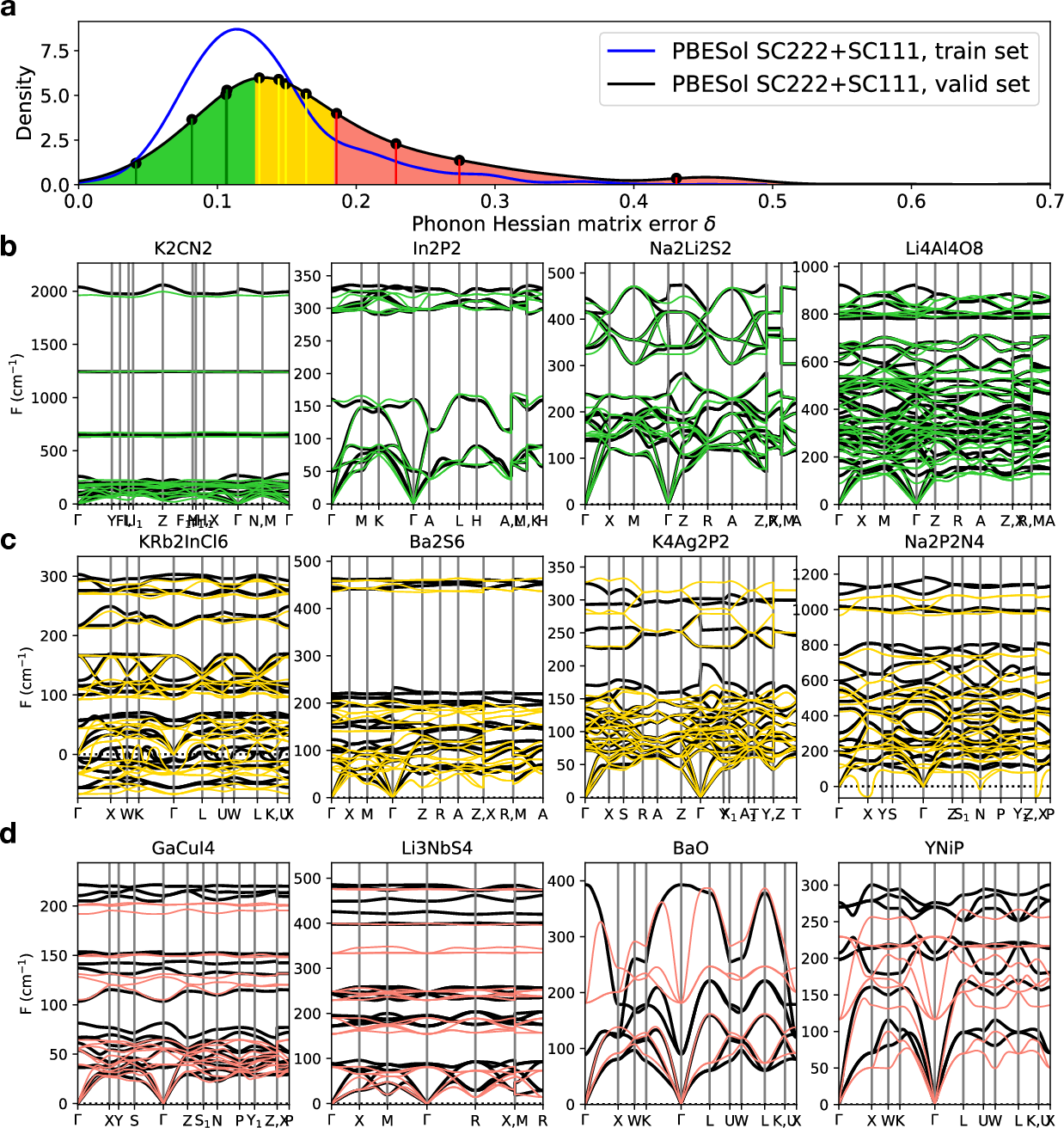

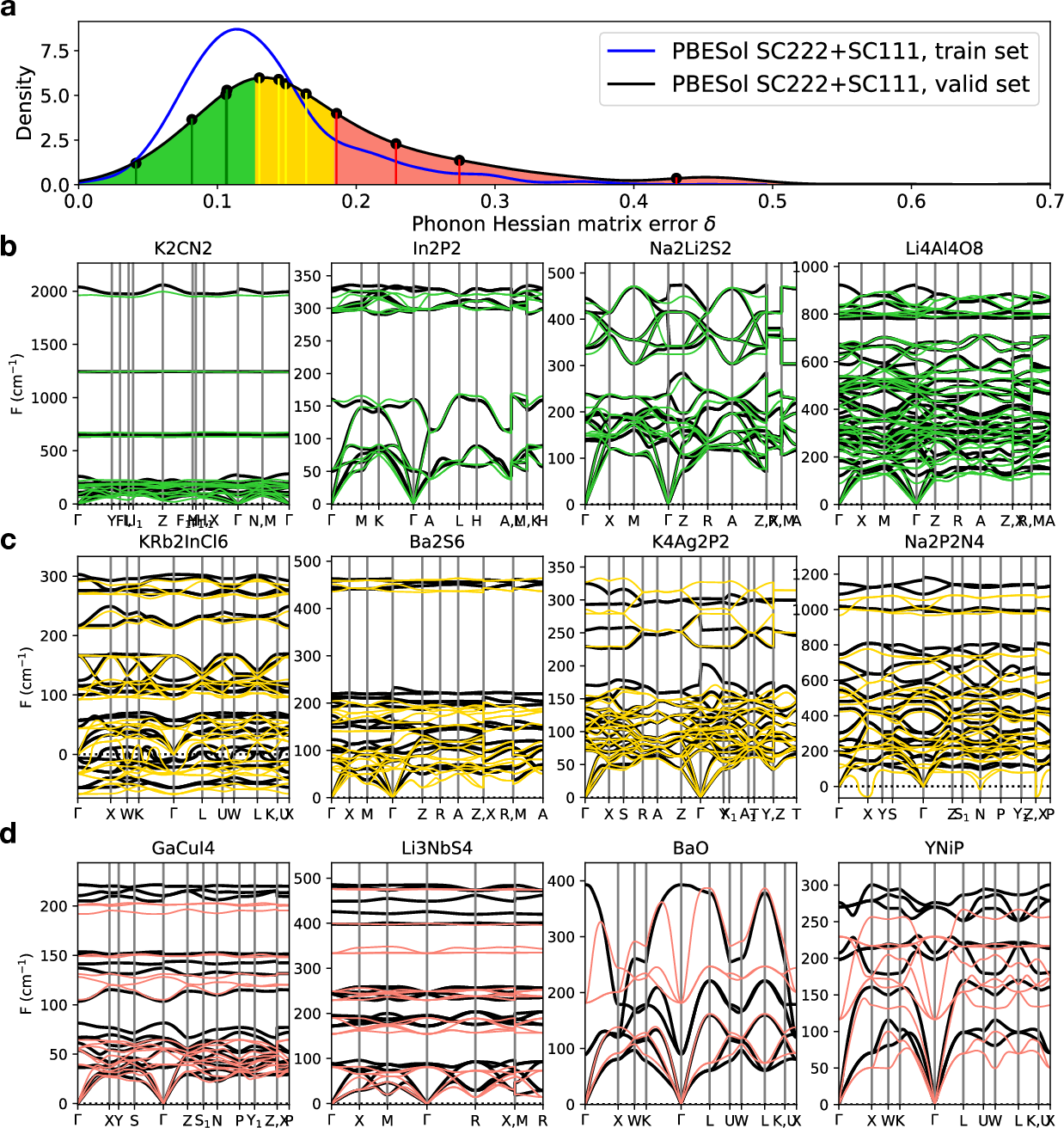

Figure 2: Error analysis demonstrating precise phonon predictions for multiple crystal structures.

Predictions are validated against existing phonon datasets, demonstrating the method's effectiveness across many crystal structures. The rigorous treatment of symmetry improves prediction quality, as evidenced by reduced errors in the predicted dynamical matrices.

General Phonon Predictions

Beyond single-compound validation, the models are applied to a diverse range of crystal structures, which enables accelerated high-throughput screening of material properties. Training uses diverse datasets that include non-equilibrium structures, which makes the models more robust on realistic material configurations.

Energy models trained on universal interatomic potential data enable broad predictions across different structural configurations. Accuracy, however, depends critically on consistency between the DFT settings used for the training data and those used in existing phonon databases.

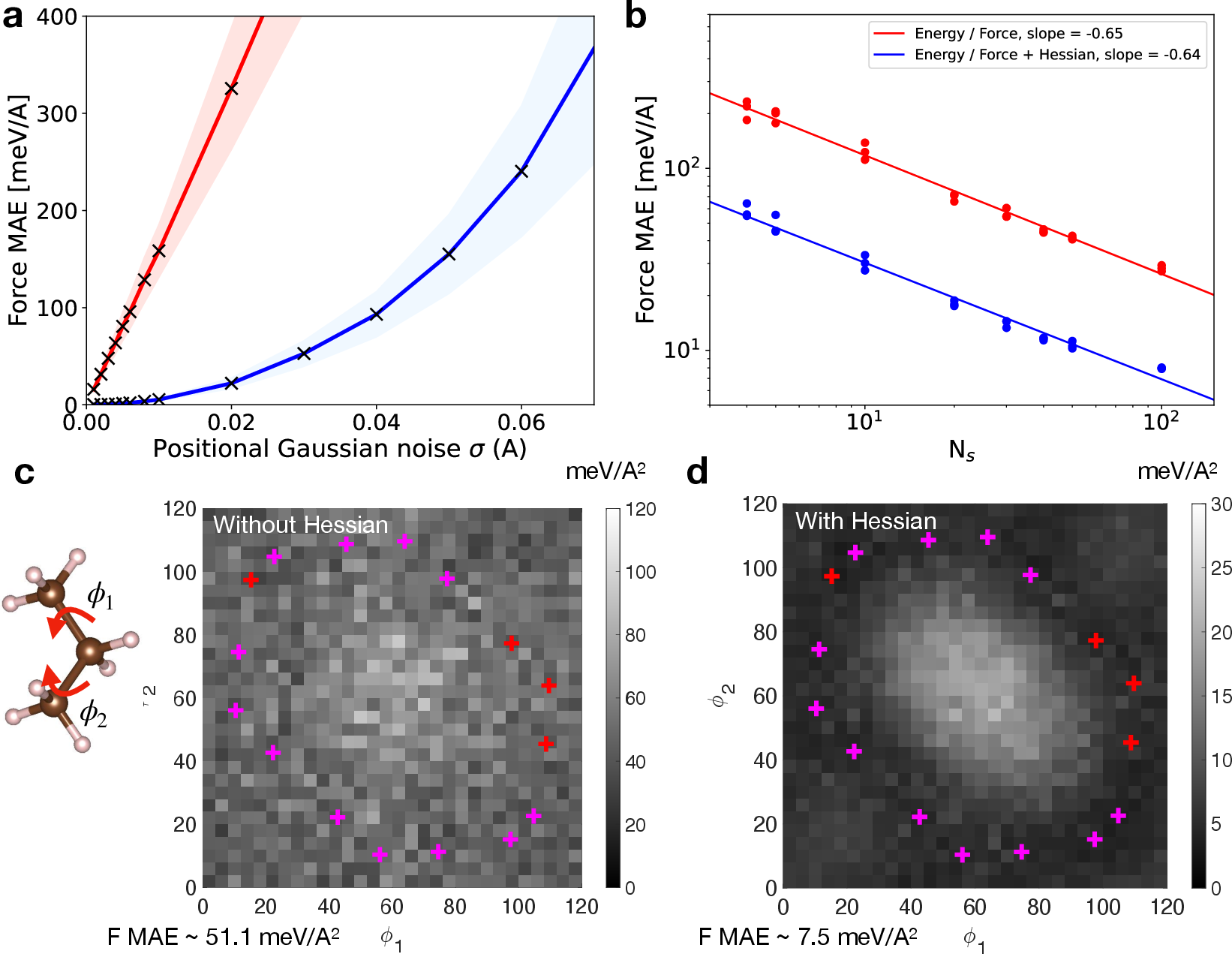

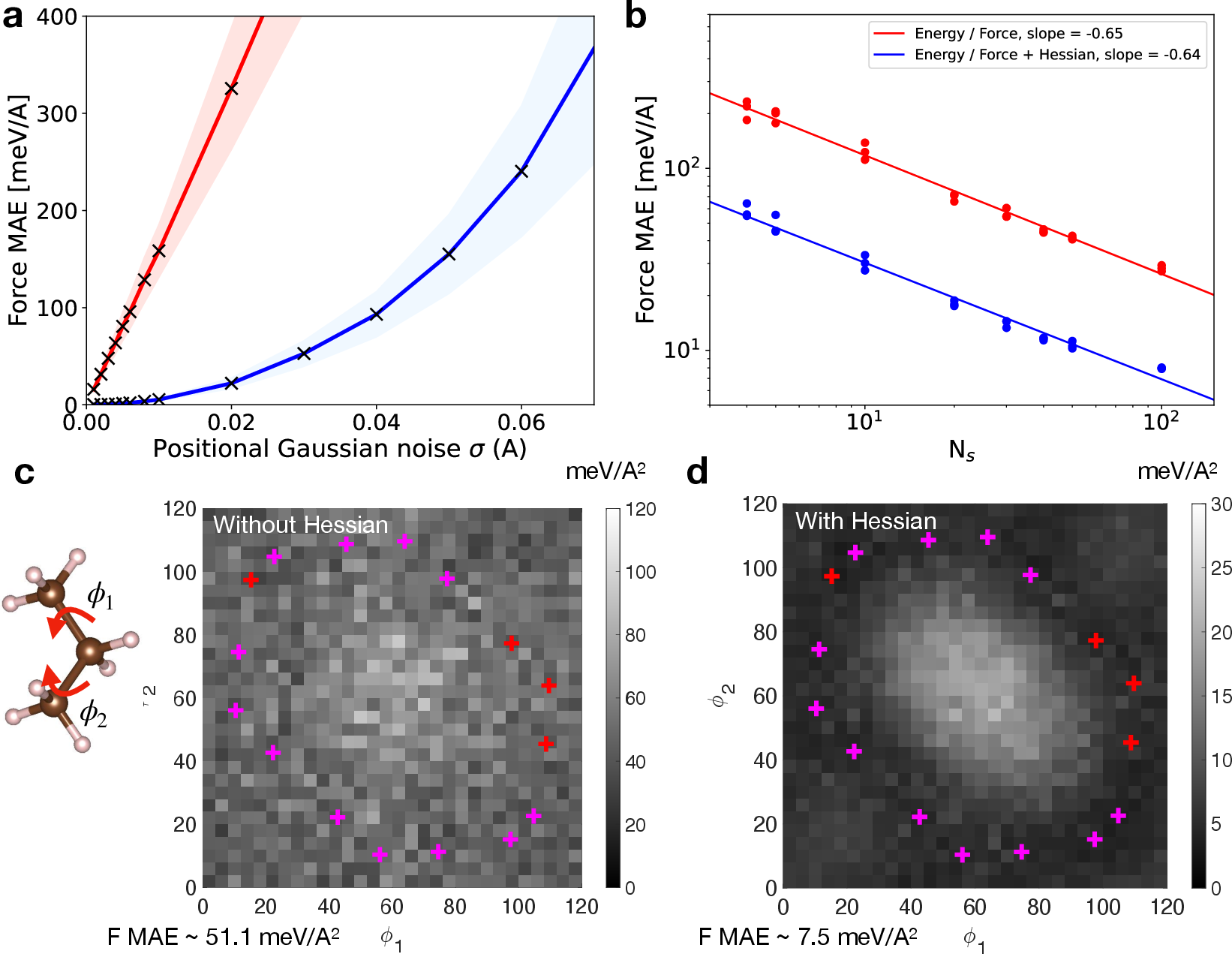

Figure 3: Evaluation of molecular configurations with augmented Hessian training data showcasing force prediction improvements.

Molecular Applications and Data Augmentation

Molecular vibrational mode predictions extend the method's utility to chemical and pharmaceutical applications, and training with Hessian data visibly improves model performance. As demonstrated, Hessian-based predictions also make it possible to use experimental spectra for model fine-tuning.
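Training with Hessian data can be pictured as adding a second-derivative term to the usual energy and force loss. The sketch below shows one possible form of such a loss; the paper's actual loss and weights are not given in this summary, so `loss_fn`, the weights `w_energy`, `w_force`, `w_hessian`, and the two-parameter `model_energy` are hypothetical.

```python
import jax
import jax.numpy as jnp

jax.config.update("jax_enable_x64", True)

def model_energy(params, positions):
    """Hypothetical two-parameter energy model standing in for a neural network."""
    d = jnp.linalg.norm(positions[0] - positions[1])
    return 0.5 * params["k"] * (d - params["r0"]) ** 2

def labels(params, positions):
    # Energy, forces (negative gradient), and Hessian from one energy function.
    energy = lambda pos: model_energy(params, pos)
    return energy(positions), -jax.grad(energy)(positions), jax.hessian(energy)(positions)

def loss_fn(params, positions, targets, w_energy=1.0, w_force=1.0, w_hessian=1.0):
    e, f, h = labels(params, positions)
    e_ref, f_ref, h_ref = targets
    return (w_energy * (e - e_ref) ** 2
            + w_force * jnp.mean((f - f_ref) ** 2)
            + w_hessian * jnp.mean((h - h_ref) ** 2))

# Non-equilibrium toy geometry; reference labels come from "true" parameters.
positions = jnp.array([[0.0, 0.0, 0.0], [1.2, 0.1, 0.0]])
true_params = {"k": 2.0, "r0": 1.0}
targets = labels(true_params, positions)

guess = {"k": 1.5, "r0": 1.1}
loss_at_truth = loss_fn(true_params, positions, targets)
loss_at_guess = loss_fn(guess, positions, targets)

# Gradients flow through the Hessian term, so it contributes to parameter updates.
param_grads = jax.grad(loss_fn)(guess, positions, targets)
```

Because the Hessian term is itself differentiable, the same optimizer step that fits energies and forces also fits curvature.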

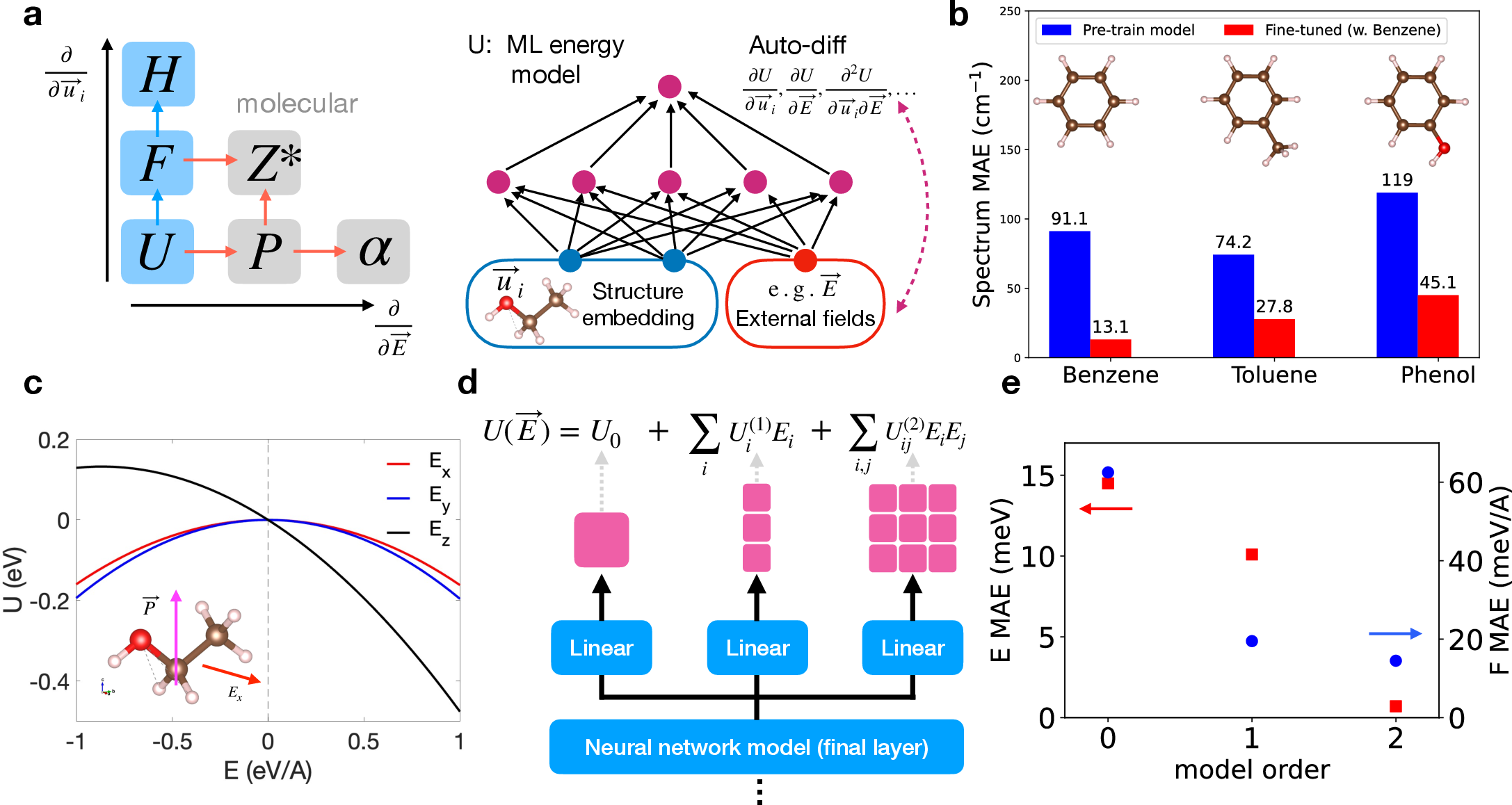

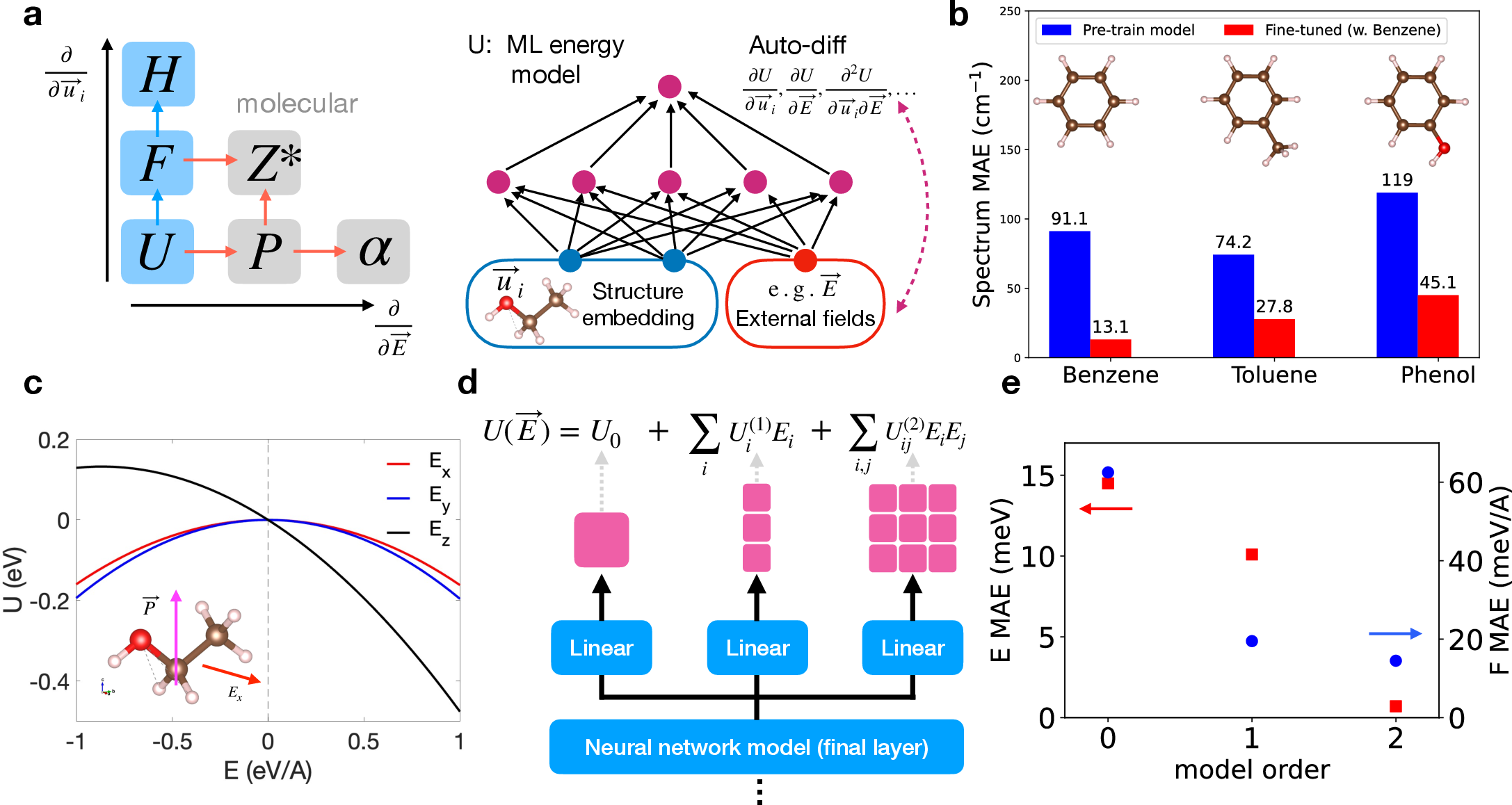

Figure 4: Generalized energy models incorporating external fields, showcasing their impact on spectra predictions.

Generalizing energy models by incorporating external fields further extends the framework to derive relevant physical quantities like polarization, Born effective charges, and polarizabilities. This connects diverse experimental observables to machine learning models directly through derivative-based formulations.
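The derivative-based formulation can be sketched in JAX with a toy energy that depends on both atomic positions and a uniform electric field. Everything here is an illustrative assumption rather than the paper's model: the point `charges`, the bare polarizability `alpha_0`, and the functional form of `energy`. Normalization by cell volume, which applies to crystals, is omitted.

```python
import jax
import jax.numpy as jnp

jax.config.update("jax_enable_x64", True)

charges = jnp.array([1.0, -1.0])     # hypothetical point charges
alpha_0 = 0.3 * jnp.eye(3)           # hypothetical bare polarizability tensor

def energy(positions, field):
    """Toy field-dependent energy: harmonic bond, dipole coupling, quadratic field term."""
    d = jnp.linalg.norm(positions[0] - positions[1])
    bonded = 0.5 * 2.0 * (d - 1.0) ** 2
    dipole = jnp.sum(charges[:, None] * positions, axis=0)
    return bonded - dipole @ field - 0.5 * field @ alpha_0 @ field

def polarization_fn(positions, field):
    # Polarization (here a dipole moment) is minus the field derivative of the energy.
    return -jax.grad(energy, argnums=1)(positions, field)

positions = jnp.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
field = jnp.zeros(3)

polarization = polarization_fn(positions, field)                           # shape (3,)
# Born effective charges: derivative of polarization with respect to atomic positions.
born_charges = jax.jacfwd(polarization_fn, argnums=0)(positions, field)    # shape (3, n_atoms, 3)
# Polarizability: derivative of polarization with respect to the field.
polarizability = jax.jacfwd(polarization_fn, argnums=1)(positions, field)  # shape (3, 3)
```

In this toy case the Born effective charge of each atom reduces to its point charge times the identity, and the polarizability recovers `alpha_0`; a learned energy model would yield structure-dependent tensors through the same derivatives.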

Conclusion

The research introduces an equivariant neural network approach to phonon prediction and demonstrates significant improvements in efficiency and accuracy. The Phonax framework bridges theoretical models and experimentally comparable predictions, with applications ranging from high-throughput materials screening to molecular vibrational analysis. Future directions include exploring interactions with other excitation types and strengthening model generalization across varied domains. The scalability and adaptability of the approach hold promise for advancing materials science with AI.