- The paper presents a framework that co-invents symbols and skills from raw demonstrations to enable efficient real-time planning in long-horizon tasks.

- The methodology segments demonstrations into motion phases and learns predicates and operators using Gaussian distributions to capture spatial relationships.

- Experimental results in both simulation and real-world tests show improved task success rates and robust recovery with passive impedance control.

SymSkill: Symbol and Skill Co-Invention for Data-Efficient and Real-Time Long-Horizon Manipulation

Introduction

The paper "SymSkill: Symbol and Skill Co-Invention for Data-Efficient and Real-Time Long-Horizon Manipulation" addresses robotic manipulation tasks that require multi-step planning and real-time recovery during execution. Traditional methods such as imitation learning (IL) and task-and-motion planning (TAMP) are limited in compositional generalization and real-time adaptability. The proposed SymSkill framework combines the strengths of IL and TAMP: it learns data-efficiently from unlabeled, unsegmented demonstrations and supports real-time task execution together with compositional generalization.

Methodology

SymSkill introduces a unified framework that uses unsupervised learning to learn predicates, operators, and skills simultaneously from raw demonstration data. It does so by segmenting each demonstration into premotion and motion segments and expressing the robot's trajectory in reference frames attached to key objects of interest.

1. Demo Segmentation and Reference-Frame Selection:

Each demonstration is divided into segments based on changes in object motion, with specific objects designated for reference frames during skill learning. This segmentation into gripper-only and gripper-object segments allows focused learning on meaningful interactions.
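The segmentation step can be illustrated with a minimal sketch. It assumes one tracked object, a hypothetical speed threshold, and that a moving object marks a gripper-object segment; the function name, threshold value, and labeling rule are illustrative assumptions rather than the paper's actual procedure.

```python
import numpy as np

def segment_by_object_motion(object_positions, dt, speed_threshold=0.01):
    """Split a demonstration wherever the object switches between static and moving.

    object_positions: (T, 3) array of one object's positions over time.
    dt: time between samples in seconds.
    speed_threshold: hypothetical speed (m/s) above which the object counts as moving.
    Returns a list of (start, end, label) tuples with `end` exclusive.
    """
    positions = np.asarray(object_positions, dtype=float)
    if len(positions) < 2:
        raise ValueError("need at least two samples to estimate motion")

    # Finite-difference speed; the first value is repeated so lengths match.
    speeds = np.linalg.norm(np.diff(positions, axis=0), axis=1) / dt
    speeds = np.concatenate([speeds[:1], speeds])
    moving = speeds > speed_threshold

    def label(is_moving):
        # Assumption: the object only moves while the gripper is interacting with it.
        return "gripper-object" if is_moving else "gripper-only"

    segments = []
    start = 0
    for t in range(1, len(moving)):
        if moving[t] != moving[t - 1]:
            segments.append((start, t, label(moving[start])))
            start = t
    segments.append((start, len(moving), label(moving[start])))
    return segments
```

In practice, noisy tracking data would likely need smoothing or a minimum segment length before thresholding; the sketch omits both for brevity.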

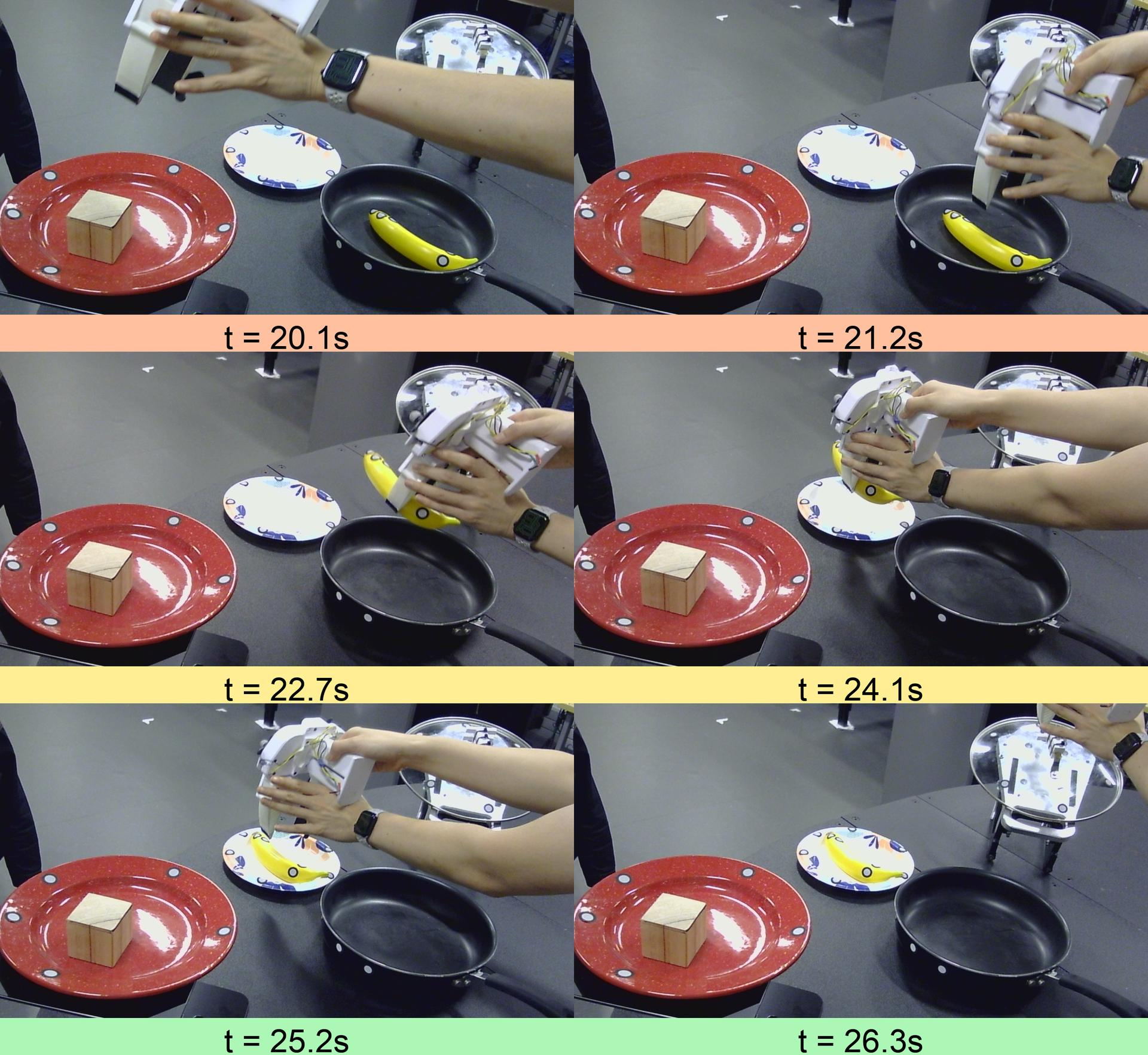

Figure 1: The VLM prompting procedure used in the real-world learning-from-play experiment. First, the initial image is used to obtain text descriptions of all objects in view. Next, four equally spaced images from each motion segment are passed to Gemini, together with the enumeration object that defines the required output, using the structured output feature. The returned text is then mapped back to the corresponding object name.

2. Relative Pose Predicate Learning:

The framework uses Gaussian distributions to learn relative pose predicates, capturing meaningful spatial relationships between objects and the robot's end effector. These predicates serve as essential symbolic abstractions for planning and execution.
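One way to picture a Gaussian relative-pose predicate is as a Gaussian fitted to relative positions observed at the end of demonstration segments, with the predicate holding when a new relative position lies within a Mahalanobis distance threshold. The sketch below makes several simplifying assumptions that are not taken from the paper: it ignores orientation, uses a hypothetical threshold of 3, and the class and function names are invented for illustration.

```python
import numpy as np

def relative_position(R_ref, p_ref, p):
    """Express world-frame point p in a reference frame with rotation R_ref and origin p_ref."""
    return R_ref.T @ (np.asarray(p, dtype=float) - np.asarray(p_ref, dtype=float))

class GaussianRelativePredicate:
    """Predicate that is true when a relative position is close to the demonstrated ones."""

    def __init__(self, samples, threshold=3.0, reg=1e-6):
        samples = np.asarray(samples, dtype=float)
        self.mean = samples.mean(axis=0)
        # Small diagonal regularizer keeps the covariance invertible.
        cov = np.cov(samples, rowvar=False) + reg * np.eye(samples.shape[1])
        self.cov_inv = np.linalg.inv(cov)
        self.threshold = threshold  # hypothetical Mahalanobis cutoff

    def mahalanobis(self, x):
        d = np.asarray(x, dtype=float) - self.mean
        return float(np.sqrt(d @ self.cov_inv @ d))

    def holds(self, x):
        return self.mahalanobis(x) <= self.threshold
```

A full pose predicate would also need a distribution over orientation, for example on a tangent-space representation of the rotation; that part is left out here.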

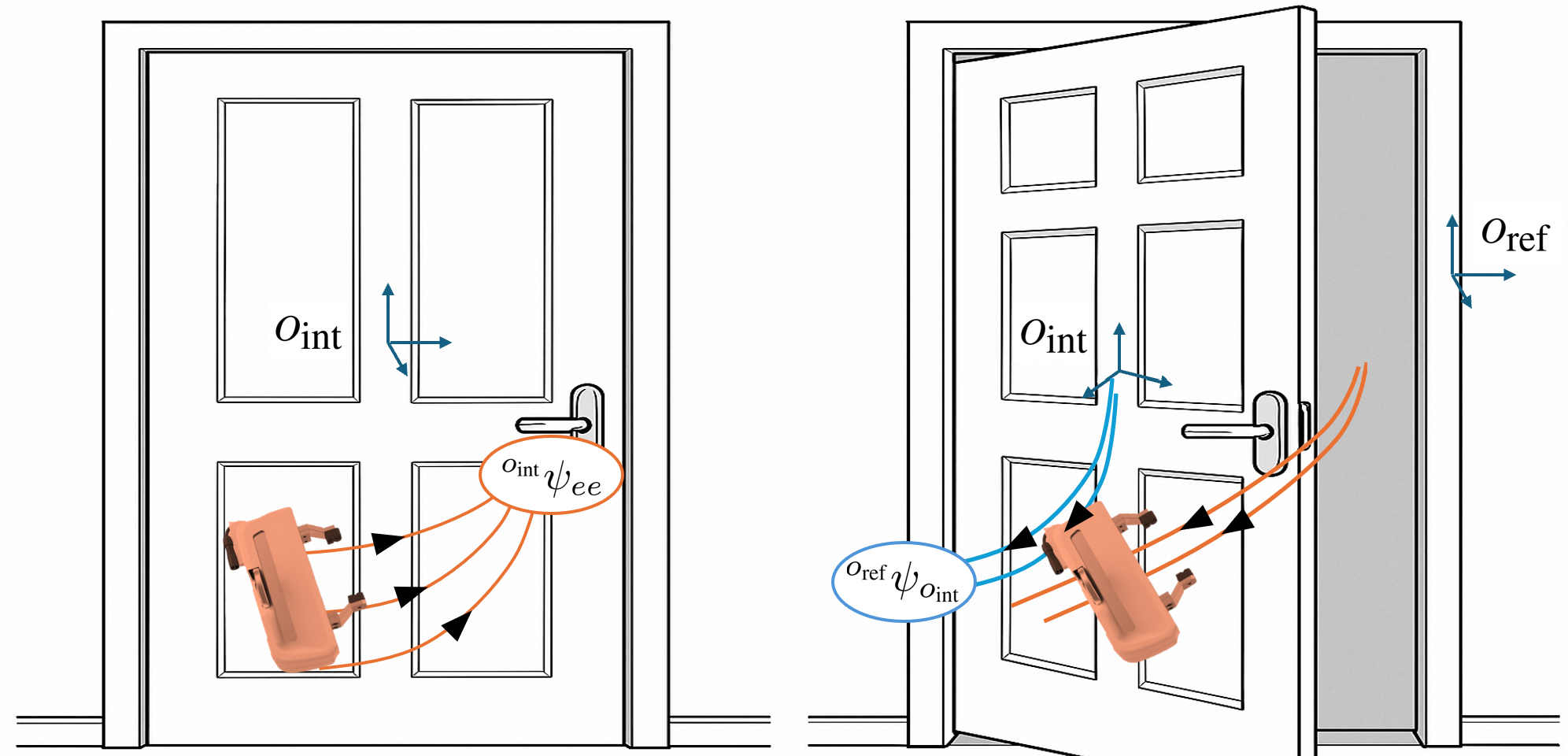

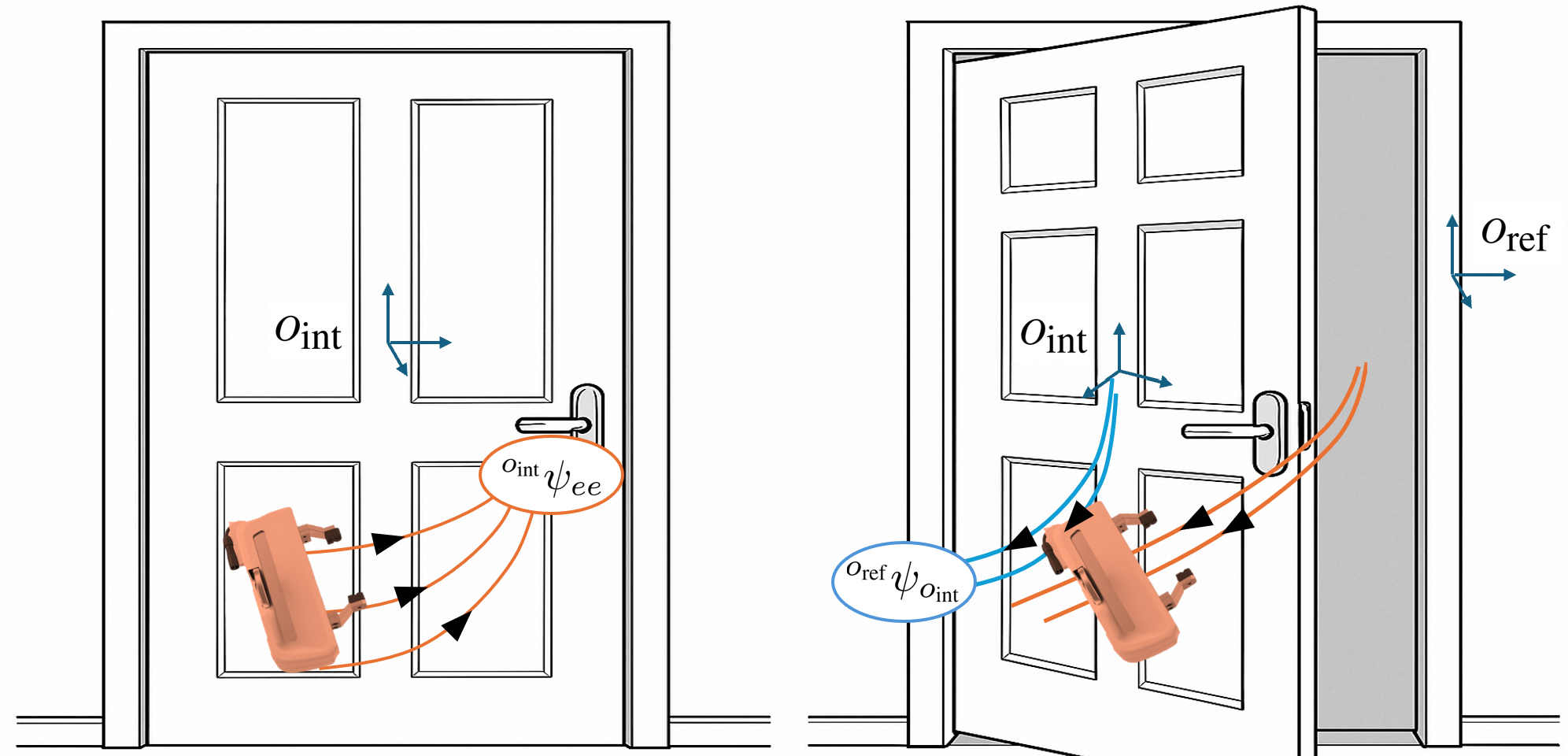

Figure 2: Illustration of the SymSkill predicate and skill co-invention process on a DoorOpen task.

3. Operator Learning and Skill Integration:

Operators are built by associating learned predicates with symbolic transitions derived from demonstration trajectories. Skills, represented as SE(3) dynamical system policies, are trained for operators to ensure stable and efficient execution.
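To make the idea of an operator concrete, the sketch below represents one as a set of preconditions plus add and delete effects over predicate names, and derives it from before/after symbolic states of demonstration segments. The intersection-based rule for preconditions is a common heuristic assumed here for illustration; the paper's learning procedure may differ, and all names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Operator:
    name: str
    preconditions: frozenset
    add_effects: frozenset
    delete_effects: frozenset

    def applicable(self, state):
        # The operator can run when every precondition is true in the state.
        return self.preconditions <= state

    def apply(self, state):
        # Symbolic successor state: remove deleted predicates, then add new ones.
        return (state - self.delete_effects) | self.add_effects


def learn_operator(name, transitions):
    """Build an operator from (before, after) sets of true predicate names.

    Effects are the symbolic difference of each transition; preconditions are
    the predicates that were true before every transition (an assumed heuristic).
    """
    transitions = [(frozenset(b), frozenset(a)) for b, a in transitions]
    first_before, first_after = transitions[0]
    add_effects = first_after - first_before
    delete_effects = first_before - first_after
    preconditions = first_before
    for before, after in transitions[1:]:
        if after - before != add_effects or before - after != delete_effects:
            raise ValueError("transitions with different effects belong to different operators")
        preconditions = preconditions & before
    return Operator(name, preconditions, add_effects, delete_effects)
```

In this picture each operator would be paired with one learned skill, so that executing the skill is expected to produce the operator's symbolic effects.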

4. Real-Time Execution and Monitoring:

SymSkill enables real-time execution by monitoring state transitions and re-planning in response to deviations or failures. It uses a passive impedance controller to ensure continuous and safe task execution.
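The monitor-and-re-plan loop can be sketched as follows. This is an illustrative simplification, not the paper's implementation: it uses a plain breadth-first symbolic planner, re-plans from the observed state after every skill, and leaves the low-level controller behind a hypothetical `run_skill` callback. The `observe` callback and the tuple layout of operators are likewise assumptions.

```python
from collections import deque

# Each operator is a tuple: (name, preconditions, add_effects, delete_effects),
# where the last three entries are frozensets of predicate names.

def plan(state, goal, operators):
    """Breadth-first search over symbolic states; returns operator names or None."""
    start = frozenset(state)
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        current, path = queue.popleft()
        if goal <= current:
            return path
        for name, pre, add, delete in operators:
            if pre <= current:
                successor = (current - delete) | add
                if successor not in visited:
                    visited.add(successor)
                    queue.append((successor, path + [name]))
    return None


def execute_with_monitoring(observe, run_skill, goal, operators, max_steps=20):
    """Run one skill at a time and re-plan from the observed state after each.

    observe(): returns the set of predicates currently true (hypothetical callback).
    run_skill(name): executes the skill paired with an operator (hypothetical callback).
    Returns (success, list of executed skill names).
    """
    executed = []
    for _ in range(max_steps):
        state = frozenset(observe())
        if goal <= state:
            return True, executed
        steps = plan(state, goal, operators)
        if not steps:
            return False, executed
        run_skill(steps[0])
        executed.append(steps[0])
    return False, executed
```

Because the plan is recomputed from whatever state is actually observed, a disturbance that undoes an earlier step simply leads to that step being scheduled again, which is the recovery behavior described above in its simplest form.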

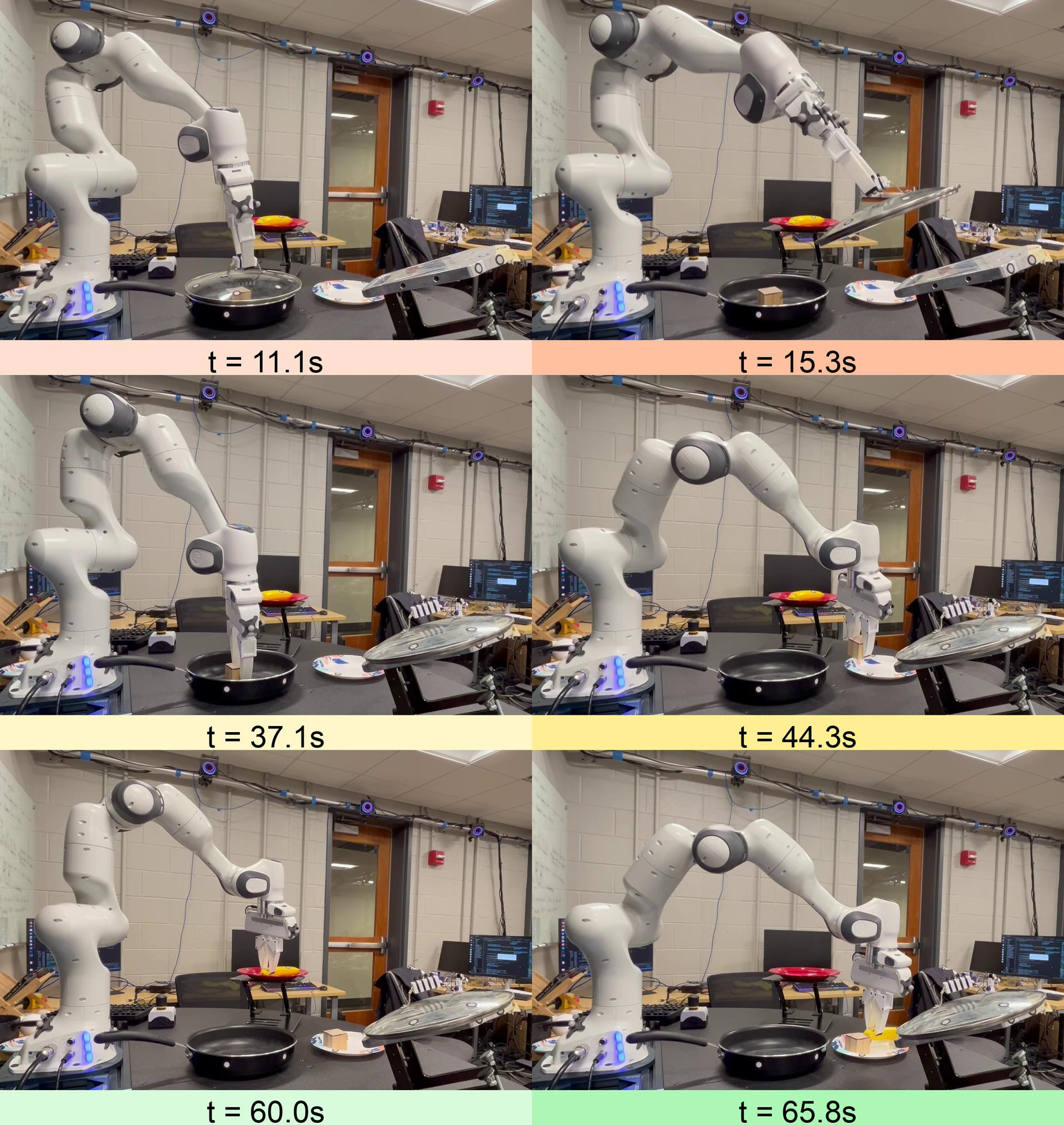

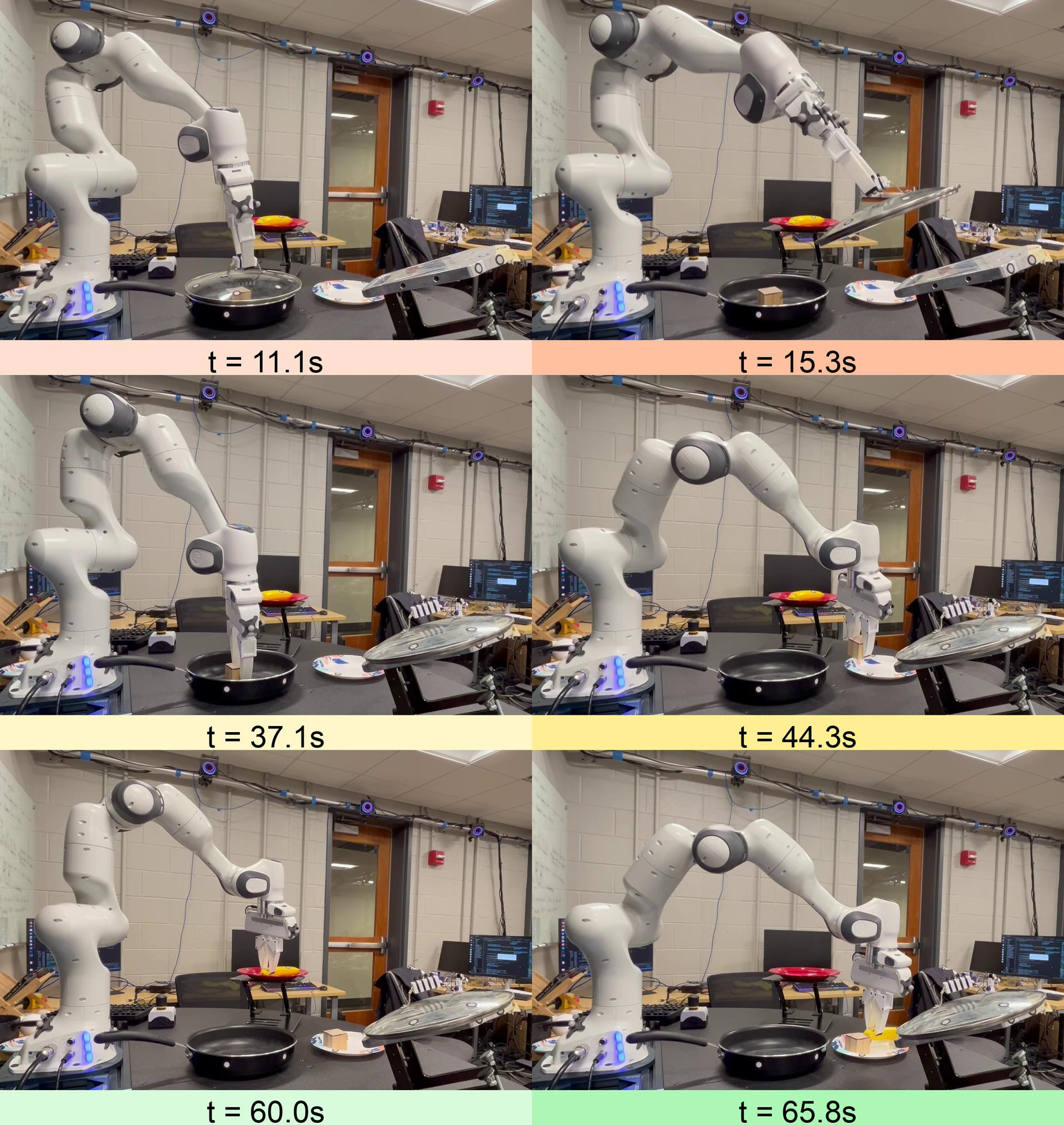

Figure 3: Real-world execution of SymSkill.

Experimental Results

Simulated Environment:

In the RoboCasa simulation, SymSkill achieved a high success rate across various single-step manipulation tasks. By learning skills and composing them in real time, the framework outperformed baselines such as diffusion policies, especially in data-limited scenarios.

Real-World Application:

SymSkill showed effective learning from real-world play data captured using motion capture systems and webcams. Learned operators and skills were used to execute complex tasks such as object sorting and storage, validating the framework's robustness and adaptability in dynamic environments.

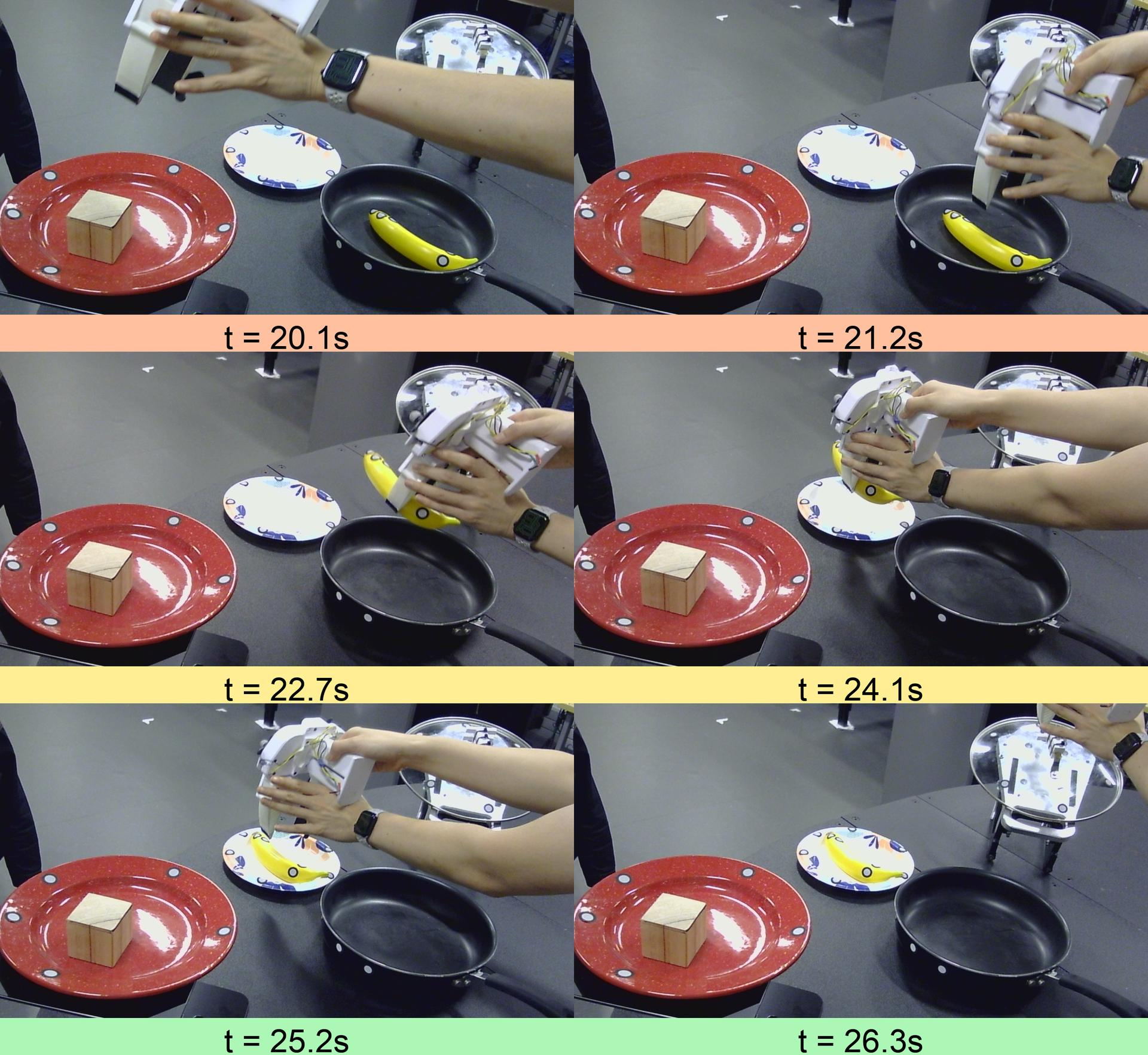

Figure 4: Real-world data collection pipeline. We use a motion capture system to record object interactions in the workspace.

Conclusion

The paper highlights SymSkill's contributions to advancing robotic manipulation through efficient symbol and skill co-invention. Its sample-efficient learning and real-time planning capabilities make it a compelling solution for long-horizon tasks in dynamic environments. Future work could explore extending the framework to mobile manipulation and egocentric video input, broadening the scope of applications.

SymSkill provides a foundation for real-world robotic systems that require adaptability, compositionality, and efficiency, and it shows how IL and TAMP methodologies can be integrated.