- The paper introduces the IterResearch paradigm, reformulating deep research tasks as a Markov Decision Process with periodic synthesis and workspace reconstruction.

- It leverages a multi-agent data synthesis engine, WebFrontier, and employs rejection sampling alongside reinforcement learning to optimize structured reasoning trajectories.

- Empirical results demonstrate that WebResearcher outperforms state-of-the-art agents on multiple benchmarks, validating the effectiveness of iterative synthesis for long-horizon reasoning.

WebResearcher: Iterative Deep-Research Agents for Unbounded Long-Horizon Reasoning

Introduction and Motivation

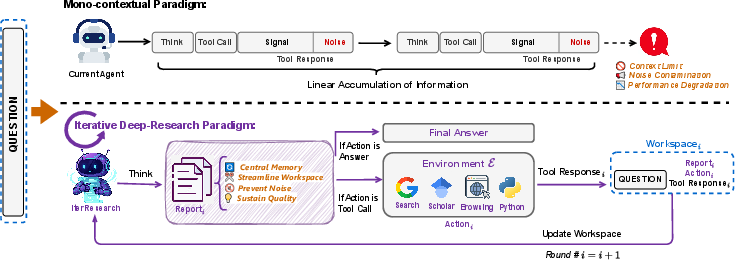

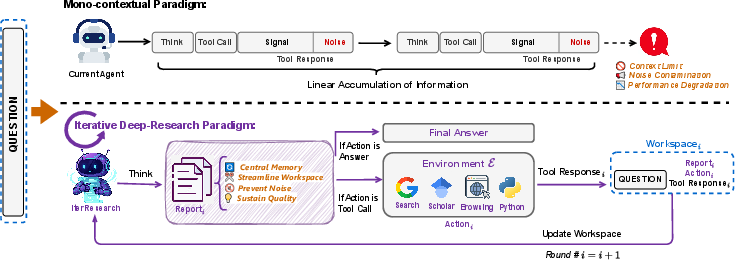

The WebResearcher framework addresses fundamental limitations of current deep-research agents, particularly those that rely on a mono-contextual paradigm for long-horizon tasks. Such systems accumulate all retrieved information and intermediate reasoning steps in a single, ever-expanding context window. This causes two problems. The first is cognitive workspace suffocation: the growing context leaves less and less room for reasoning. The second is irreversible noise contamination: irrelevant content or early errors, once appended, stay in the context for the rest of the task. Together these effects constrain reasoning depth and quality as research complexity increases. WebResearcher introduces an iterative paradigm, IterResearch, that reformulates deep research as a Markov Decision Process (MDP) with periodic consolidation and workspace reconstruction. This lets agents maintain a focused cognitive workspace and sustain high-quality reasoning on arbitrarily complex research tasks.

IterResearch: Markovian Iterative Synthesis

The core innovation of WebResearcher is the IterResearch paradigm, which decomposes research into discrete rounds. In each round the agent produces three structured components: Think (internal reasoning), Report (the evolving synthesis), and Action (a tool invocation or the final answer). The agent's state at round i is compact: it contains only the research question, the report from the previous round, and the most recent tool response. This design enforces the Markov property, so each round's reasoning is conditioned only on essential, synthesized information rather than on the entire interaction history.
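The round structure can be written down as a state transition. The notation here is assumed for illustration rather than taken from the paper: $q$ is the research question, $\mathcal{R}_i$ the report after round $i$, $\mathcal{O}_i$ the tool response to the action $a_i$, and $\pi_\theta$ the agent's policy.

$$
\begin{aligned}
s_i &= \left(q,\ \mathcal{R}_{i-1},\ \mathcal{O}_{i-1}\right), \qquad \mathcal{R}_0 = \mathcal{O}_0 = \varnothing,\\
\left(\mathrm{Think}_i,\ \mathcal{R}_i,\ a_i\right) &\sim \pi_\theta(\cdot \mid s_i),\\
\mathcal{O}_i &= \mathrm{Tool}(a_i) \quad \text{if } a_i \text{ is a tool call},\\
s_{i+1} &= \left(q,\ \mathcal{R}_i,\ \mathcal{O}_i\right).
\end{aligned}
$$

Because $\mathrm{Think}_i$ and all earlier observations are dropped when $s_{i+1}$ is built, the next state depends only on $s_i$ and the round's output, which is the Markov property described above.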

Figure 1: IterResearch paradigm enables periodic workspace reconstruction, preventing context bloat and noise propagation compared to mono-contextual accumulation.

Periodic synthesis and workspace reconstruction are central to IterResearch. Instead of appending raw data, the agent actively integrates new findings with existing knowledge, resolving conflicts and updating conclusions. This maintains a coherent, high-density summary and filters out noise, enabling error recovery and monotonic information gain. The constant-size workspace ensures full reasoning capacity regardless of research depth, in contrast to the diminishing returns of mono-contextual systems.
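A minimal sketch of the round loop, assuming a hypothetical `policy` callable in place of the LLM and a hypothetical `tool` callable in place of real search or browsing tools. It shows the key mechanism: each round's workspace is rebuilt from only the question, the latest report, and the latest tool response, and the new report overwrites the old one instead of being appended. The toy policy caps its report at 200 characters to mimic synthesis; `RoundOutput`, `iter_research`, and `make_toy_policy` are illustrative names, not the paper's code.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class RoundOutput:
    think: str                 # internal reasoning; dropped when the round ends
    report: str                # new synthesis that replaces the previous report
    tool_call: Optional[str]   # None means this round's action is the final answer
    answer: Optional[str] = None


# Hypothetical interfaces: (question, report, last_obs) -> output, and a tool executor.
Policy = Callable[[str, str, str], RoundOutput]
Tool = Callable[[str], str]


def iter_research(question: str, policy: Policy, tool: Tool,
                  max_rounds: int = 100) -> Tuple[Optional[str], List[int], int]:
    """Run rounds until a final answer; return (answer, workspace sizes, total tool chars)."""
    report, last_obs = "", ""
    workspace_sizes: List[int] = []
    # Tool output a mono-contextual agent would have appended (a lower bound on its growth).
    total_tool_chars = 0
    for _ in range(max_rounds):
        # Workspace reconstruction: only question, last report, last observation survive.
        workspace_sizes.append(len(question) + len(report) + len(last_obs))
        out = policy(question, report, last_obs)
        report = out.report  # overwrite, not append
        if out.tool_call is None:
            return out.answer, workspace_sizes, total_tool_chars
        last_obs = tool(out.tool_call)
        total_tool_chars += len(last_obs)
    return None, workspace_sizes, total_tool_chars


def make_toy_policy(rounds_before_answer: int, report_cap: int = 200) -> Policy:
    """Toy stand-in for an LLM: folds a slice of each observation into a capped report."""
    state = {"round": 0}

    def policy(question: str, report: str, last_obs: str) -> RoundOutput:
        state["round"] += 1
        new_report = (report + last_obs[:50])[-report_cap:]
        if state["round"] > rounds_before_answer:
            return RoundOutput("enough evidence", new_report, None, answer="done")
        return RoundOutput("need more evidence", new_report, f"search #{state['round']}")

    return policy


answer, sizes, total = iter_research(
    "What is X?", make_toy_policy(30), tool=lambda call: "x" * 1000)
print(answer, max(sizes), total)  # done 1210 30000
```

After 30 tool calls the workspace never exceeds 1,210 characters, while the raw tool output that a mono-contextual agent would have accumulated reaches 30,000 characters and keeps growing with every round.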

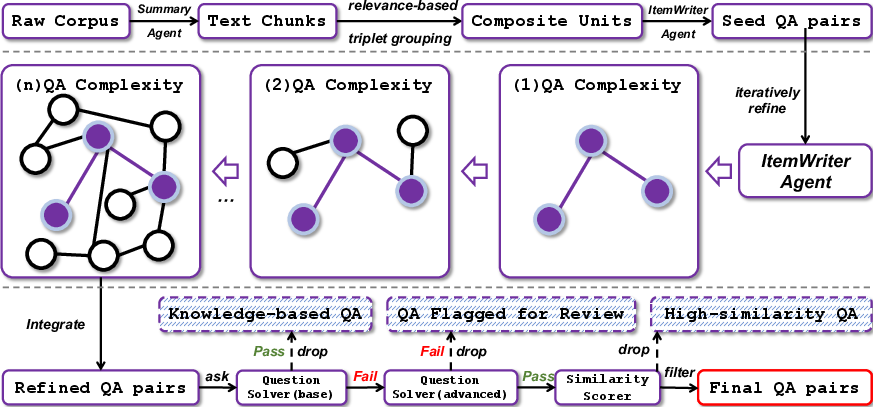

Scalable Data Synthesis: WebFrontier Engine

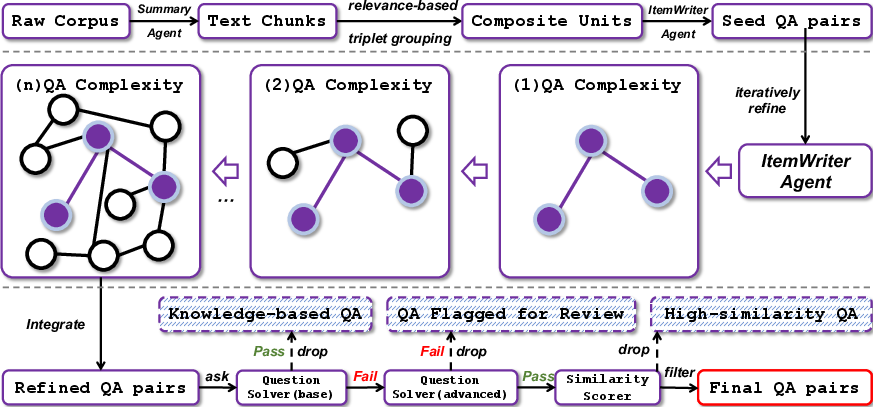

Training deep-research agents requires large-scale, high-quality datasets that probe complex reasoning and tool-use capabilities. WebResearcher introduces WebFrontier, a scalable data synthesis engine employing a multi-agent system in a three-stage workflow: seed data generation, iterative complexity escalation, and rigorous quality control.

Figure 2: Multi-agent data synthesis workflow systematically escalates task complexity and ensures factual correctness.

Seed tasks are generated from a curated corpus and designed to require multi-source synthesis. Tool-augmented agents iteratively evolve these tasks, expanding knowledge scope, abstracting concepts, cross-validating facts, and introducing computational challenges. Quality control agents filter out trivial or intractable tasks, ensuring that the final dataset is both challenging and verifiable. This process efficiently maps the "capability gap" between baseline and tool-augmented models, providing rich training signals for agentic intelligence.
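The escalation-and-filtering logic can be sketched as follows, assuming hypothetical callables in place of WebFrontier's LLM agents: `evolve` makes a task harder, `baseline` reports whether a model without tools solves it, and `tool_agent` reports whether a tool-augmented agent solves it. A task enters the dataset only when it sits in the capability gap: too hard for the baseline (not trivial) but solvable with tools (not intractable). The integer `level` and the toy thresholds are illustrative assumptions; the paper's actual quality-control criteria may be richer.

```python
from dataclasses import dataclass, replace
from typing import Callable, List


@dataclass(frozen=True)
class Task:
    question: str
    answer: str
    level: int = 0  # number of escalation steps applied so far


# Hypothetical components; in WebFrontier these roles are played by LLM agents.
Evolver = Callable[[Task], Task]  # tool-augmented agent that escalates a task
Solver = Callable[[Task], bool]   # True if the solver produces the reference answer


def synthesize(seeds: List[Task], evolve: Evolver, baseline: Solver,
               tool_agent: Solver, rounds: int = 3) -> List[Task]:
    """Escalate seed tasks and keep those inside the baseline/tool-agent capability gap."""
    kept: List[Task] = []
    frontier = list(seeds)
    for _ in range(rounds):
        # Every task keeps evolving; the filter only decides what enters the dataset.
        frontier = [evolve(t) for t in frontier]
        for task in frontier:
            trivial = baseline(task)         # solvable without tools: too easy
            tractable = tool_agent(task)     # solvable with tools: verifiable target
            if not trivial and tractable:
                kept.append(task)
    return kept


seeds = [Task("Seed question A", "a"), Task("Seed question B", "b")]
kept = synthesize(
    seeds,
    evolve=lambda t: replace(t, question=t.question + " (escalated)", level=t.level + 1),
    baseline=lambda t: t.level <= 1,    # toy: the no-tool model handles shallow tasks only
    tool_agent=lambda t: t.level <= 2,  # toy: the tool-augmented agent reaches one level further
)
print([t.level for t in kept])  # [2, 2]
```

Level-1 tasks are discarded as trivial and level-3 tasks as intractable, so only the level-2 variants survive.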

Training and Optimization

WebResearcher is optimized with rejection sampling fine-tuning (RFT) and reinforcement learning (RL). RFT retains only trajectories whose reasoning and final answers are correct, which also reinforces the structured iterative format. RL is implemented with Group Sequence Policy Optimization (GSPO), which exploits the natural decomposition of trajectories into rounds for efficient batched training and advantage normalization. Because each round of a trajectory becomes its own training sample, a trajectory of T rounds yields T samples rather than one, a substantial data amplification compared with mono-contextual approaches.
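A minimal sketch of the data flow, under stated assumptions: the reward is exact match of the final answer, and every round of a trajectory inherits that trajectory's group-normalized advantage. Both are simplifications chosen here, not details confirmed by the paper. The advantage normalization (reward minus group mean, divided by group standard deviation) and the length-normalized sequence ratio follow the definitions used in GSPO; `Trajectory` and the helper names are hypothetical.

```python
import math
import statistics
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Trajectory:
    round_prompts: List[str]      # workspace shown to the model at each round
    round_completions: List[str]  # model output (Think / Report / Action) per round
    final_answer: str


def rejection_sample(group: List[Trajectory], gold: str) -> List[Trajectory]:
    """RFT filter: keep only trajectories whose final answer matches the reference."""
    return [t for t in group if t.final_answer.strip() == gold.strip()]


def group_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Normalize each trajectory's reward against its group (same question)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


def expand_to_rounds(group: List[Trajectory],
                     rewards: Sequence[float]) -> List[Tuple[str, str, float]]:
    """Assumption: every round of a trajectory inherits that trajectory's advantage."""
    samples = []
    for traj, adv in zip(group, group_advantages(rewards)):
        for prompt, completion in zip(traj.round_prompts, traj.round_completions):
            samples.append((prompt, completion, adv))
    return samples


def gspo_sequence_ratio(logp_new: Sequence[float], logp_old: Sequence[float]) -> float:
    """Length-normalized importance ratio (pi_new(y|x) / pi_old(y|x)) ** (1 / |y|)."""
    diff = sum(n - o for n, o in zip(logp_new, logp_old))
    return math.exp(diff / len(logp_new))


group = [
    Trajectory(["p"] * 4, ["c"] * 4, "Paris"),
    Trajectory(["p"] * 2, ["c"] * 2, "Lyon"),
    Trajectory(["p"] * 3, ["c"] * 3, " Paris "),
]
kept = rejection_sample(group, "Paris")          # 2 trajectories survive RFT
rewards = [float(t.final_answer.strip() == "Paris") for t in group]
samples = expand_to_rounds(group, rewards)       # 4 + 2 + 3 = 9 round-level samples
```

Three trajectories for one question turn into nine round-level samples, which is the amplification effect described above.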

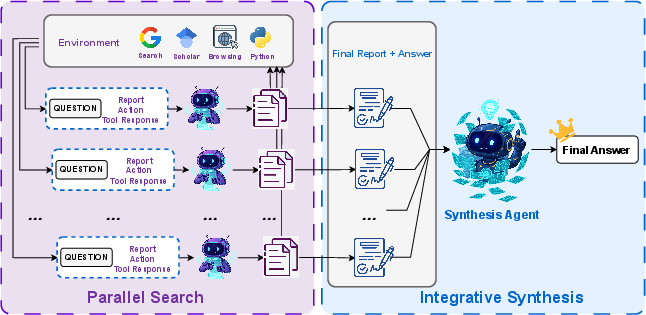

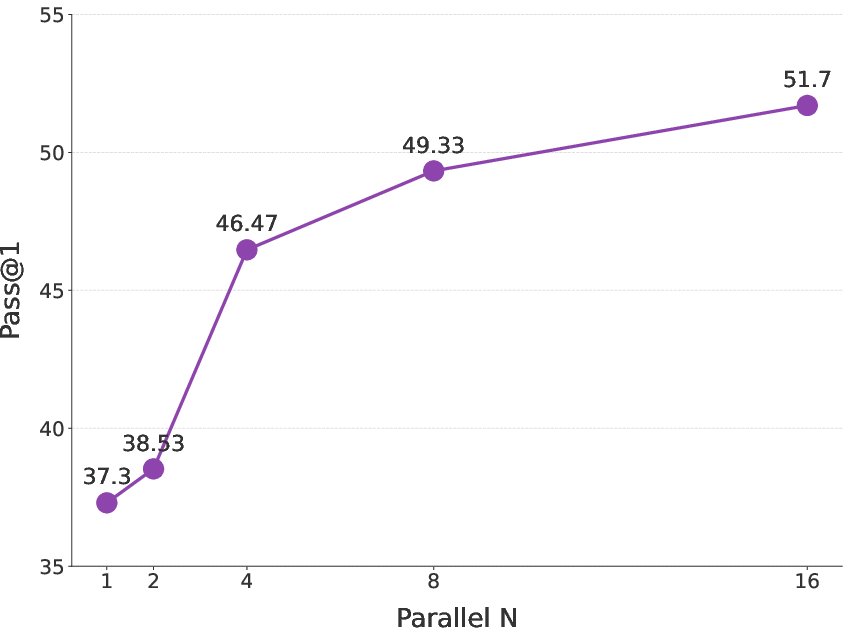

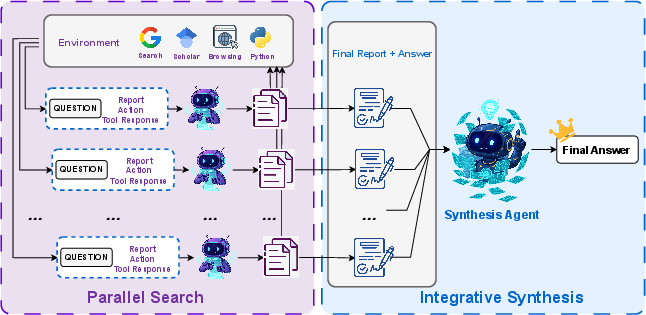

At inference, the Research-Synthesis Framework enables test-time scaling through parallel multi-agent exploration. Multiple Research Agents independently solve the target problem, each producing a final report and answer. A Synthesis Agent then integrates these findings, leveraging the diversity of reasoning paths for robust conclusions.

Figure 3: The Research-Synthesis Framework aggregates parallel research trajectories for integrative synthesis.
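The parallel-then-synthesize pattern can be sketched with a thread pool, assuming a hypothetical `agent` callable that runs one full research episode and returns its final report and answer. In the paper the Synthesis Agent is itself a model that reads the reports; `majority_synthesizer` below is a deliberately simple swapped-in stand-in (a majority vote over answers) used only so the sketch runs end to end.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

Finding = Tuple[str, str]                             # (final report, final answer)
ResearchAgent = Callable[[str, int], Finding]         # hypothetical: (question, seed) -> finding
SynthesisAgent = Callable[[str, List[Finding]], str]  # hypothetical: fuses findings


def research_synthesis(question: str, agent: ResearchAgent,
                       synthesizer: SynthesisAgent, n: int = 8) -> str:
    """Run n independent research agents in parallel, then fuse their findings."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        findings = list(pool.map(lambda seed: agent(question, seed), range(n)))
    # Only each agent's final report and answer reach the synthesizer.
    return synthesizer(question, findings)


def majority_synthesizer(question: str, findings: List[Finding]) -> str:
    """Toy stand-in for the Synthesis Agent: the most common answer wins."""
    return Counter(answer for _, answer in findings).most_common(1)[0][0]


def toy_agent(question: str, seed: int) -> Finding:
    # Toy: agents disagree depending on their seed.
    return (f"report from agent {seed}", "B" if seed % 3 == 0 else "A")


final = research_synthesis("Which option?", toy_agent, majority_synthesizer, n=8)
print(final)  # A
```

Keeping the synthesizer as a plain callable means a model-based Synthesis Agent can be dropped in without changing the orchestration code.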

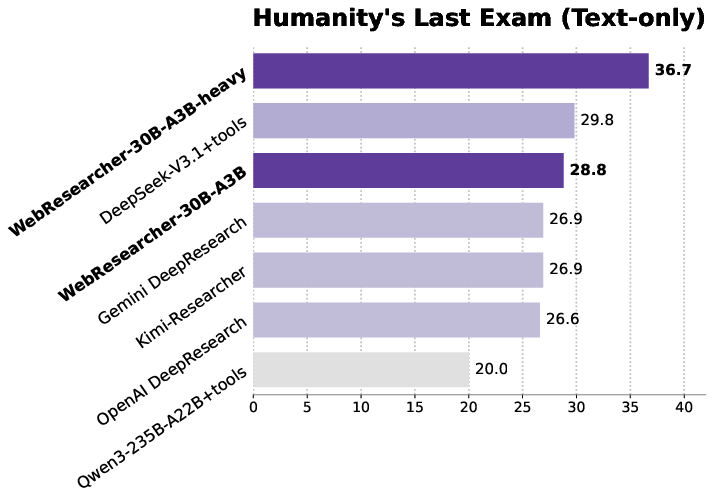

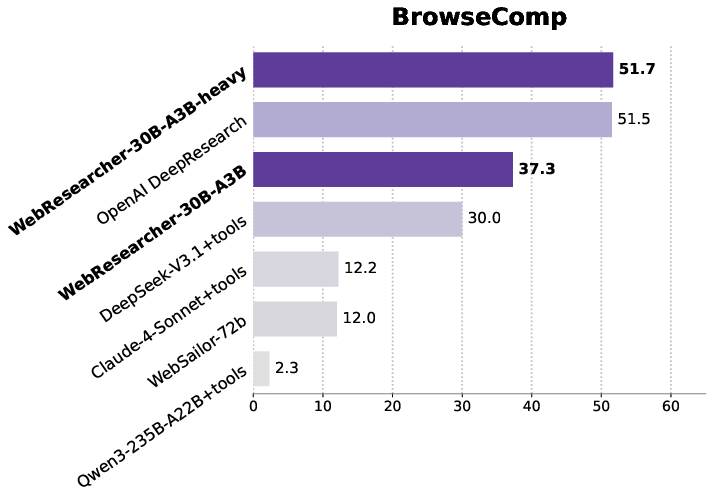

Empirical Results

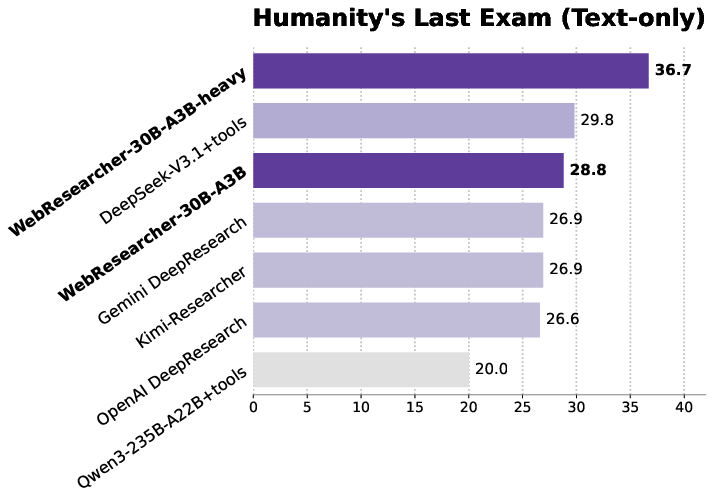

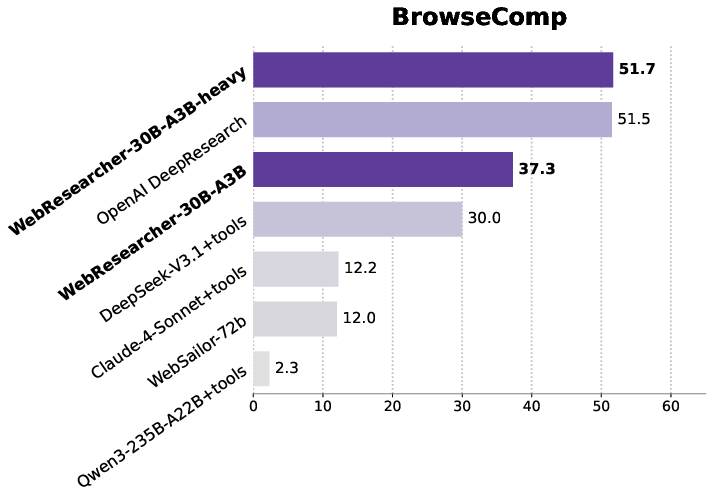

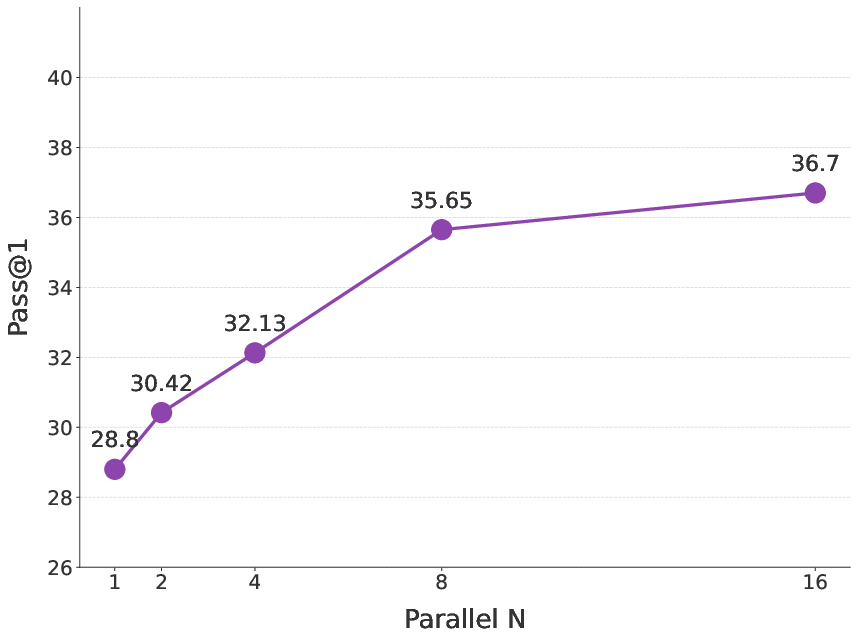

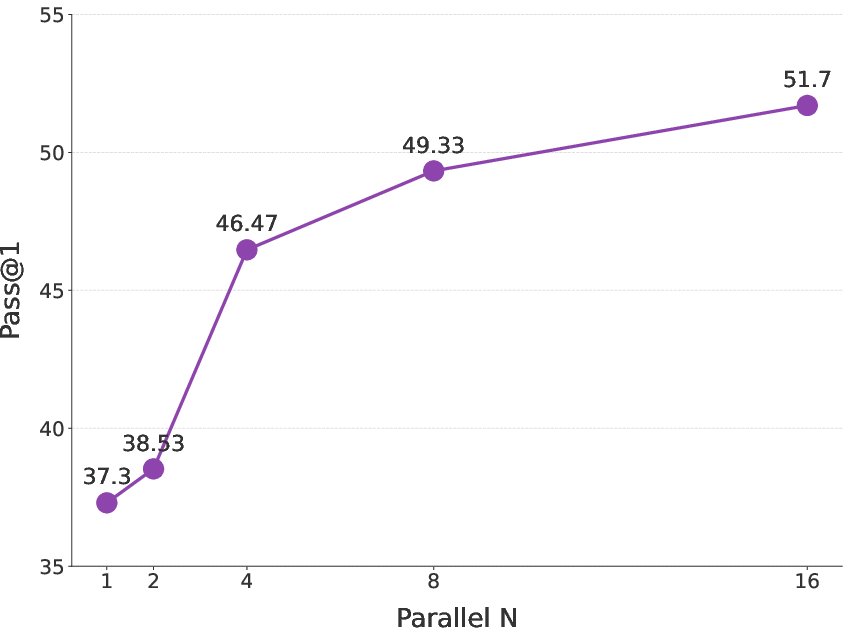

WebResearcher demonstrates state-of-the-art performance across six challenging benchmarks, namely Humanity's Last Exam (HLE), BrowseComp-en/zh, GAIA, Xbench-DeepSearch, and FRAMES. On HLE, WebResearcher-heavy achieves 36.7% accuracy, outperforming DeepSeek-V3.1 (29.8%) and OpenAI Deep Research (26.6%). On BrowseComp-en, it reaches 51.7%, matching OpenAI's proprietary system and exceeding the best open-source alternative by 21.7 percentage points.

Figure 4: WebResearcher outperforms state-of-the-art deep-research agents across multiple benchmarks.

Ablation studies confirm that the iterative paradigm itself, not just the training data, is the critical driver of performance. Mono-Agent + Iter (mono-contextual agent with iterative training data) improves over the base Mono-Agent, but WebResearcher (full iterative paradigm) achieves the highest scores, isolating the impact of periodic synthesis and workspace reconstruction.

Analysis of tool-use behavior reveals that IterResearch agents dynamically adapt their strategies to task demands. On HLE, the agent employs concise reasoning chains with focused academic search, while on BrowseComp, it sustains long, exploratory sequences with extensive web navigation and information integration. The average number of reasoning turns varies from 4.7 (HLE) to 61.4 (BrowseComp), with some tasks requiring over 200 interaction turns. This adaptivity validates the effectiveness of the iterative architecture for both targeted and exploratory research.

Test-Time Scaling and Ensemble Effects

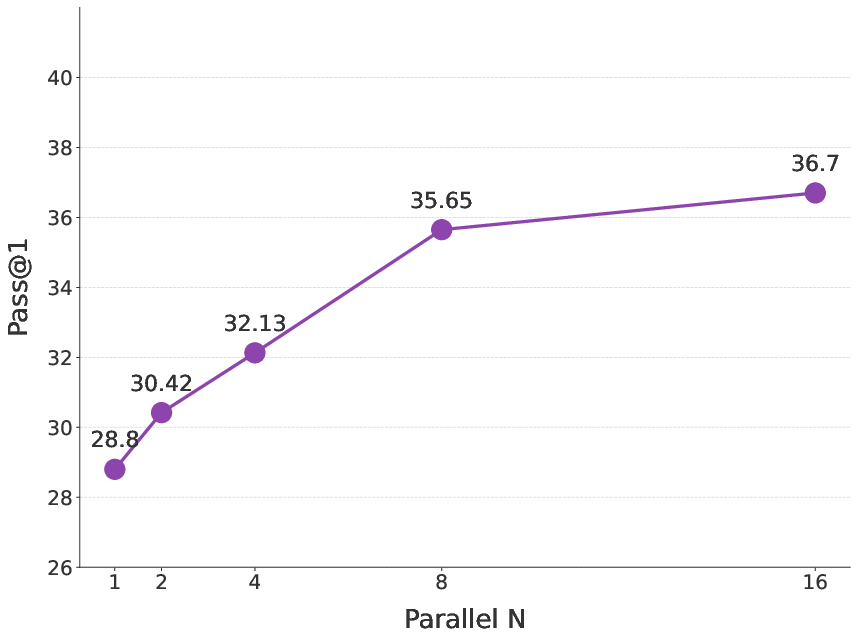

The Research-Synthesis Framework improves performance by running parallel research trajectories. Increasing the number of parallel agents (n) yields consistent improvements in pass@1 scores, with diminishing returns beyond n=8. This ensemble effect lets the Synthesis Agent fuse diverse reasoning paths into more robust answers, at the cost of additional computation.

Figure 5: Increasing the number of parallel research agents (n) improves HLE performance, with optimal trade-off at n=8.
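One way to see why returns diminish is an idealized model, assumed here and not taken from the paper: n independent agents each reach the correct answer with probability p, and the synthesizer can only pick among the agents' answers. The chance that at least one agent is right is then 1 - (1 - p)^n, a rough ceiling for such a synthesizer, and the n-th agent adds only p(1 - p)^(n - 1). Real agents share a model and tools, so their errors are correlated and gains flatten even sooner. The value p = 0.3 is hypothetical.

```python
def coverage_at_n(p: float, n: int) -> float:
    """Probability that at least one of n independent agents (each right w.p. p) is correct."""
    return 1.0 - (1.0 - p) ** n


def marginal_gain(p: float, n: int) -> float:
    """Extra coverage from adding the n-th agent; equals p * (1 - p) ** (n - 1)."""
    return coverage_at_n(p, n) - coverage_at_n(p, n - 1)


p = 0.3  # hypothetical single-agent accuracy, not a number from the paper
for n in (1, 2, 4, 8, 16):
    print(n, round(coverage_at_n(p, n), 3), round(marginal_gain(p, n), 4))
```

Each additional agent only helps on the shrinking fraction of questions where every earlier agent failed, which is why the curve bends early.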

Implications and Future Directions

WebResearcher establishes a new paradigm for long-horizon agentic reasoning, demonstrating that periodic synthesis and Markovian state reconstruction are essential for sustained cognitive performance. The framework's modularity enables integration with diverse toolsets and scaling via parallelism. The data synthesis engine provides a blueprint for generating complex, verifiable training corpora, addressing a major bottleneck in agentic AI development.

Theoretical implications include the formalization of deep research as an MDP, enabling principled analysis and optimization. Practically, WebResearcher can be extended to domains requiring multi-hop reasoning, cross-domain synthesis, and autonomous knowledge construction. Future work may explore hierarchical agent architectures, adaptive synthesis strategies, and continual learning in dynamic environments.

Conclusion

WebResearcher introduces an iterative deep-research paradigm that overcomes the limitations of mono-contextual accumulation, enabling unbounded reasoning capability in long-horizon agents. Through Markovian synthesis, scalable data generation, and parallel research-synthesis, the framework achieves superior performance on complex benchmarks and establishes a foundation for future advances in agentic intelligence. The results underscore the necessity of structured iteration and synthesis for effective autonomous research, with broad implications for the development of general-purpose AI agents.