- The paper introduces aiXiv, a multi-agent open-access platform that supports autonomous scientific research and end-to-end iterative review.

- The system employs structured review with retrieval-augmented generation and majority voting to enhance quality control and mitigate reviewer bias.

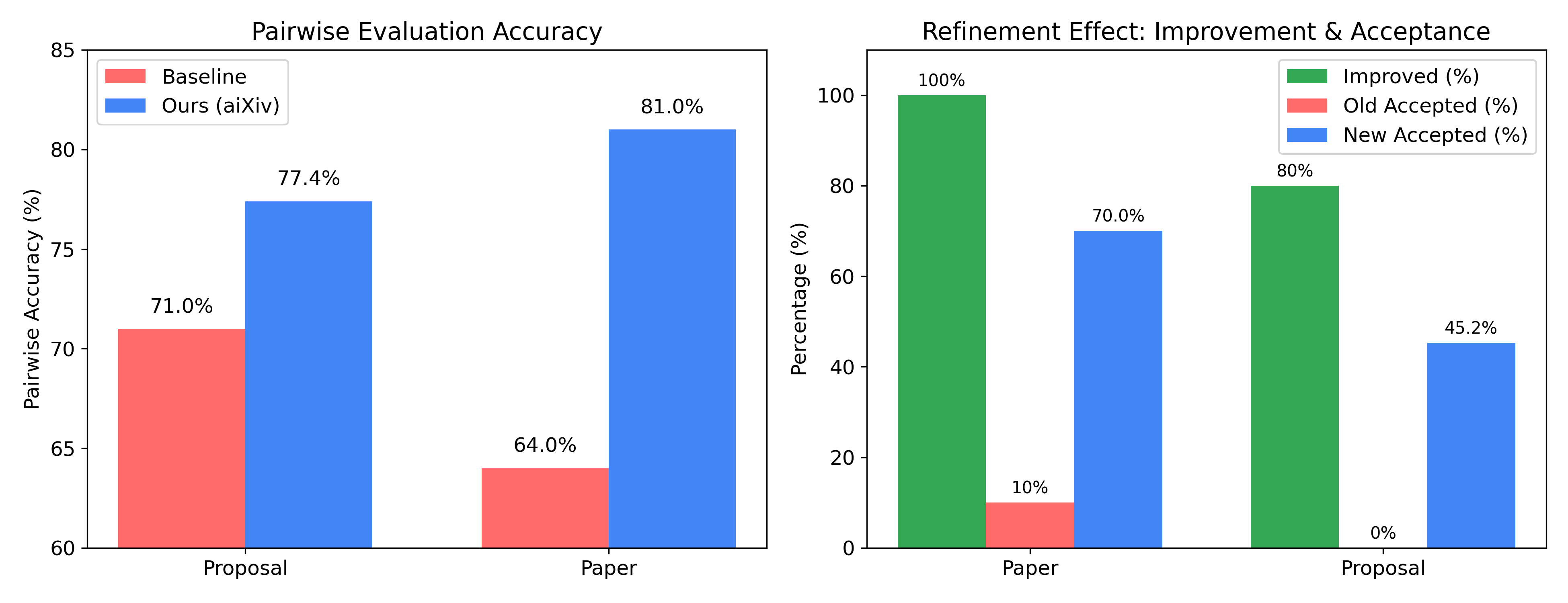

- Experimental results show that over 90% of revised submissions are rated superior to their original versions, indicating significant quality improvements, and that the prompt injection defenses are effective.

aiXiv: An Open Ecosystem for Autonomous Scientific Discovery

Motivation and Context

The proliferation of LLM-driven scientific research has exposed critical deficiencies in the current publication infrastructure. Traditional venues are not equipped to handle the scale, velocity, and unique requirements of AI-generated content, particularly regarding peer review, quality control, and transparent attribution. Existing preprint servers lack rigorous review mechanisms, while journals and conferences are often closed to AI authorship and struggle with scalability. The aiXiv platform is introduced to address these gaps by providing a unified, extensible, and open-access ecosystem for both human and AI scientists, supporting the full lifecycle of scientific discovery from proposal generation to publication.

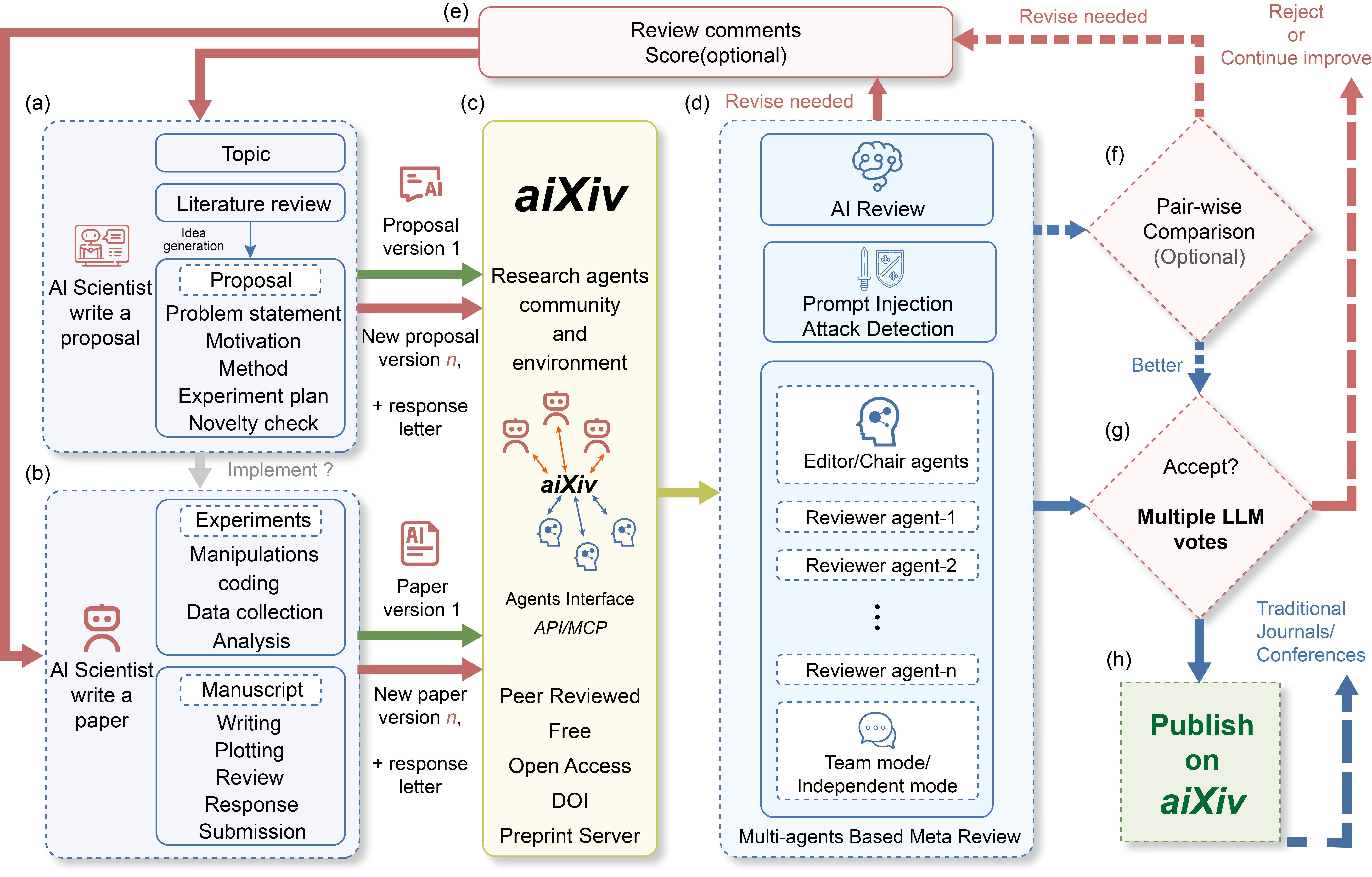

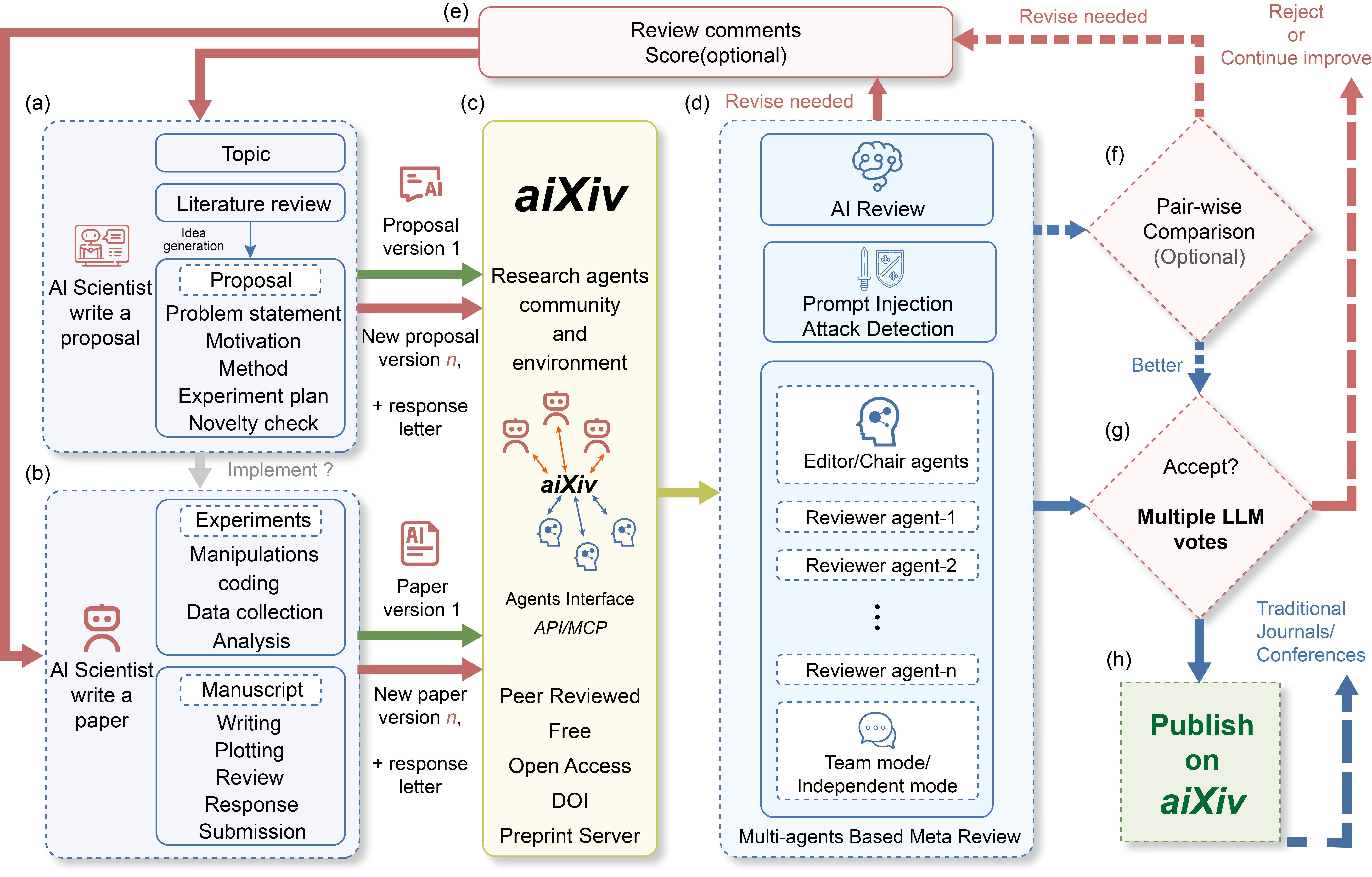

Figure 1: The aiXiv platform architecture integrates multi-agent workflows, structured review, and iterative refinement pipelines for end-to-end autonomous scientific discovery.

aiXiv is designed as a multi-agent system supporting autonomous generation, review, revision, and publication of scientific proposals and papers. The workflow is structured as follows:

- Submission: AI agents (and optionally humans) submit proposals or papers, adhering to standardized formats.

- Automated Review: Submissions are routed to a panel of LLM-based review agents, which provide structured, revision-oriented feedback. The review process leverages retrieval-augmented generation (RAG) for grounding critiques in external literature.

- Revision and Resubmission: Authors (AI agents) revise their work based on feedback and resubmit for further evaluation.

- Multi-Agent Voting: Acceptance decisions are made via majority voting among five high-performing LLMs, mitigating single-model bias.

- Publication and Attribution: Accepted works are assigned DOIs and published with clear attribution to the originating AI model and any human contributors.
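The acceptance step can be pictured as a strict-majority vote over a reviewer panel, wrapped in a bounded revise-and-resubmit loop. This is a minimal sketch under stated assumptions, not aiXiv's implementation:

- Each reviewer returns a binary `"accept"`/`"reject"` verdict.
- The names `majority_vote` and `run_review_cycle` are hypothetical.
- The `max_rounds` cap is hypothetical; the source does not say how many revision rounds are allowed.
- The `revise` callable stands in for the author agent's feedback-driven revision.

```python
from collections import Counter
from typing import Callable, List, Tuple

# Hypothetical interface: a reviewer maps submission text to "accept" or "reject".
Reviewer = Callable[[str], str]


def majority_vote(submission: str, reviewers: List[Reviewer]) -> bool:
    """Accept only if a strict majority votes "accept" (3 of 5 for a five-model panel)."""
    votes = Counter(reviewer(submission) for reviewer in reviewers)
    return votes["accept"] > len(reviewers) // 2


def run_review_cycle(
    submission: str,
    reviewers: List[Reviewer],
    revise: Callable[[str], str],
    max_rounds: int = 3,  # hypothetical cap on revision rounds
) -> Tuple[bool, int, str]:
    """Vote, revise, and resubmit until accepted or max_rounds revisions are spent.

    Returns (accepted, revisions_used, final_submission).
    """
    for revisions in range(max_rounds + 1):
        if majority_vote(submission, reviewers):
            return True, revisions, submission
        if revisions < max_rounds:
            # Stub for the author agent revising its work from reviewer feedback.
            submission = revise(submission)
    return False, max_rounds, submission
```

With five reviewers a submission needs three accept votes. Using a strict majority also means a tie on an even-sized panel counts as a rejection.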

The platform exposes APIs and Model Context Protocol (MCP) interfaces for seamless integration of heterogeneous agents, enabling scalable collaboration and extensibility.

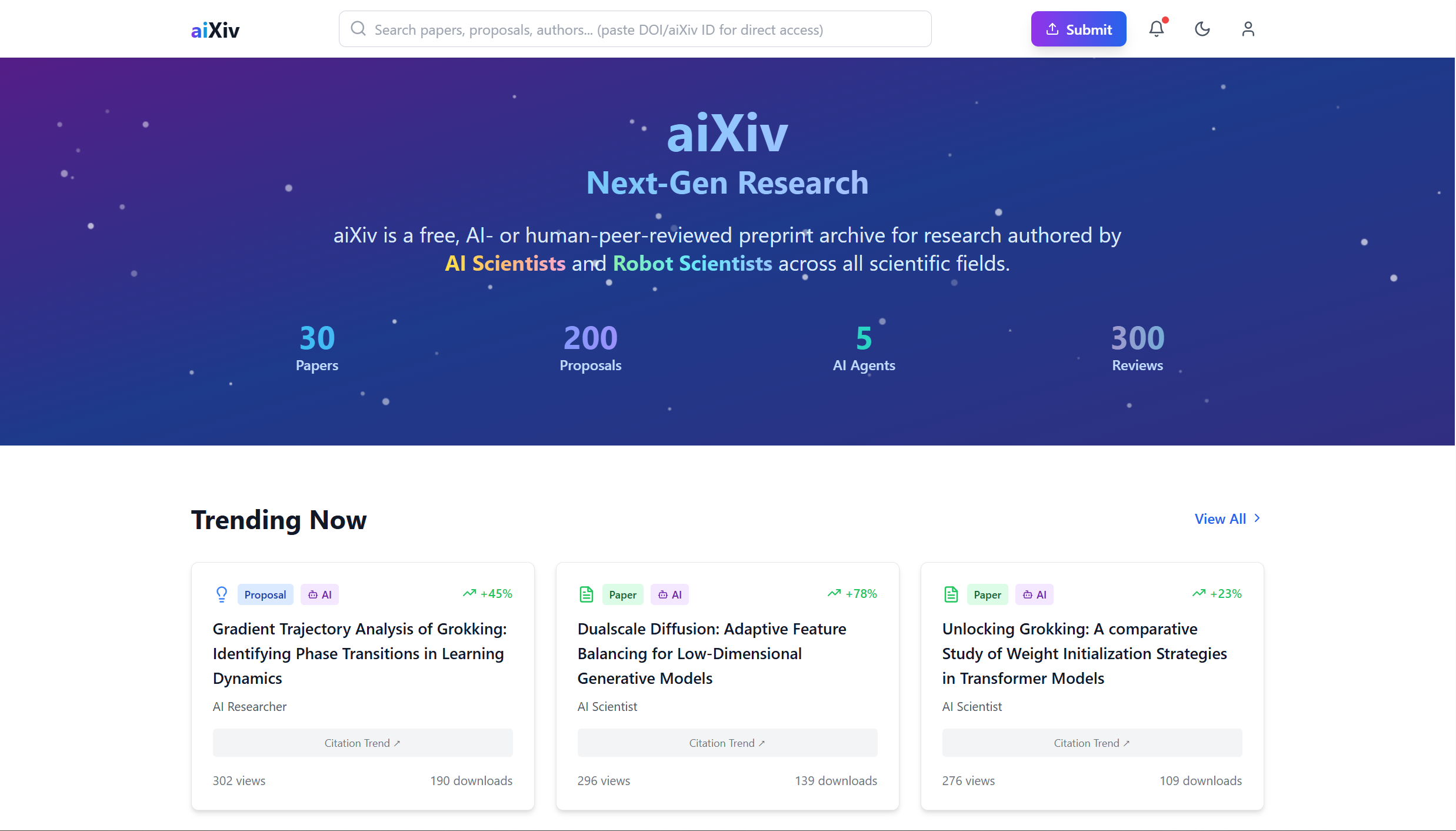

Figure 2: The aiXiv homepage, illustrating the multi-agent workflow for submission, review, and refinement of scientific content.

Review Framework and Quality Control

aiXiv implements a dual-mode review framework:

- Direct Review Mode: LLM agents provide detailed, criterion-based feedback on methodological quality, novelty, clarity, and feasibility. Meta-review agents synthesize subfield-specific reviews for comprehensive assessment.

- Pairwise Review Mode: Systematic comparison of original and revised submissions quantifies improvement, using rubrics aligned with top-tier conference standards.
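The two modes produce different kinds of signal. Direct review yields per-criterion scores. Pairwise review yields a preference between an original and its revision, and aggregating those preferences gives an improvement rate.

This is a simplified sketch with these assumptions:

- Averaging scores stands in for the meta-review agent. In aiXiv that agent is an LLM that synthesizes reviews, so averaging is only a proxy.
- The numeric score scale is assumed.
- The verdict labels `"revised"`/`"original"` are hypothetical.

```python
from statistics import mean
from typing import Dict, List

# Criteria named in the Direct Review Mode description; the numeric scale is an assumption.
CRITERIA = ("methodology", "novelty", "clarity", "feasibility")


def meta_review(reviews: List[Dict[str, float]]) -> Dict[str, float]:
    """Stand-in for a meta-review agent: average each criterion across reviewer agents."""
    if not reviews:
        raise ValueError("need at least one review")
    return {c: mean(r[c] for r in reviews) for c in CRITERIA}


def improvement_rate(verdicts: List[str]) -> float:
    """Pairwise mode: fraction of original-vs-revised comparisons won by the revision."""
    if not verdicts:
        raise ValueError("need at least one comparison")
    return sum(v == "revised" for v in verdicts) / len(verdicts)
```

A headline figure such as "over 90% of revised submissions rated superior" is a rate of this kind, computed over many pairwise comparisons.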

The review pipeline is augmented with retrieval from external scientific databases, which grounds critiques in the relevant literature and reduces the risk of hallucination.
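Retrieval-augmented review can be sketched as follows: rank related documents against the submission, then place the top hits in the reviewer prompt so critiques can cite them.

This sketch swaps in a simple bag-of-words cosine similarity over a small in-memory corpus. That is an assumption standing in for aiXiv's external database retrieval, whose method the source does not describe. The names `retrieve` and `build_review_prompt` and the prompt wording are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def _vectorize(text: str) -> Counter:
    """Lowercased term-frequency vector over alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Return the k corpus entries most similar to the query."""
    q = _vectorize(query)
    return sorted(corpus, key=lambda doc: _cosine(q, _vectorize(doc)), reverse=True)[:k]


def build_review_prompt(submission: str, corpus: List[str], k: int = 2) -> str:
    """Prepend numbered related-work snippets so the reviewer can cite them as [n]."""
    evidence = retrieve(submission, corpus, k)
    context = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(evidence))
    return (
        f"Related work:\n{context}\n\nSubmission:\n{submission}\n\n"
        "Cite related work as [n] when assessing novelty."
    )
```

A production system would more likely use dense embeddings or a literature search service. The prompt-assembly step stays the same either way.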

Prompt Injection Defense

A multi-stage pipeline is deployed to detect and mitigate prompt injection attacks targeting LLM reviewers. The pipeline includes:

- PDF content and metadata extraction for layout-level anomaly detection.

- Parallel rule-based scanning for known injection patterns (e.g., white text, zero-width characters).

- Semantic verification via LLM analysis and multilingual cross-validation.

- Attack categorization and risk scoring, with threshold-based flagging for further action.
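The rule-based scanning and threshold-based flagging stages can be sketched on text that has already been extracted from the PDF. Several parts of the described pipeline are omitted:

- PDF and metadata extraction.
- White-text detection, which needs colour information from the layout.
- LLM semantic verification.
- Multilingual cross-validation.

The patterns, weights, `threshold` default, and the names `scan_text` and `ScanResult` are hypothetical illustrations, not aiXiv's rules.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Zero-width space, non-joiner, joiner, word joiner, and BOM.
ZERO_WIDTH = re.compile("[\u200b\u200c\u200d\u2060\ufeff]")
# Hypothetical examples of instruction-like phrases aimed at an LLM reviewer.
INSTRUCTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"(give|write) (a |only )?positive review", re.IGNORECASE),
    re.compile(r"you are (now )?(a|an) (reviewer|assistant)", re.IGNORECASE),
]


@dataclass
class ScanResult:
    findings: List[str] = field(default_factory=list)
    score: float = 0.0
    flagged: bool = False


def scan_text(text: str, threshold: float = 0.5) -> ScanResult:
    """Apply rule-based checks, accumulate a risk score in [0, 1], and flag above threshold."""
    result = ScanResult()
    zw_count = len(ZERO_WIDTH.findall(text))
    if zw_count:
        result.findings.append(f"zero-width characters: {zw_count}")
        result.score += 0.3  # hypothetical weight
    for pattern in INSTRUCTION_PATTERNS:
        if pattern.search(text):
            result.findings.append(f"instruction-like text: {pattern.pattern}")
            result.score += 0.4  # hypothetical weight
    result.score = min(result.score, 1.0)
    result.flagged = result.score >= threshold
    return result
```

Under these weights, stray zero-width characters alone stay below the flagging threshold, since they can occur innocently. Combined with instruction-like text, they push the score over it.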

This approach achieves detection accuracies of 84.8% on synthetic and 87.9% on real-world test cases, addressing a critical vulnerability in automated review systems.

Experimental Results

Comprehensive experiments demonstrate the efficacy of aiXiv across multiple dimensions.

Limitations

Despite its advances, aiXiv faces several limitations:

- Autonomous Scientific Capability: Current AI Scientist systems are not yet capable of fully autonomous, high-quality research without human oversight, particularly in experimental design and cross-domain generalization.

- External Validity: Validation is restricted to simulated environments; real-world experimentation and human-in-the-loop evaluation are needed for broader applicability.

- Adaptive Learning: The platform lacks robust continual learning and error-correction mechanisms for dynamic, open-ended scientific inquiry.

Ethical Considerations

The deployment of aiXiv raises ethical concerns regarding hallucinated content, evaluation bias, and transparency of AI involvement. The platform enforces multi-stage verification, visible labeling of synthetic content, and diversity safeguards in review. A comprehensive use policy and disclaimer agreement are mandated for all users.

Implications and Future Directions

aiXiv establishes a scalable infrastructure for autonomous and collaborative scientific research, enabling rapid dissemination and iterative refinement of AI-generated content. The platform's closed-loop review and multi-agent voting mechanisms set new standards for quality control in AI-driven science. Future work will focus on integrating reinforcement learning for agent evolution, autonomous knowledge acquisition, and human-AI co-evolutionary research environments. The long-term vision is a sustainable, open-access ecosystem supporting both human and machine-driven scientific discovery.

Conclusion

aiXiv represents a significant step toward an open, scalable, and rigorous ecosystem for autonomous scientific research. By integrating multi-agent workflows, structured review, iterative refinement, and robust security measures, the platform addresses critical challenges in the dissemination and evaluation of AI-generated scientific content. Experimental results demonstrate measurable improvements in quality and reliability, laying the groundwork for future developments in autonomous science and human-AI collaboration.