- The paper presents a systematic taxonomy that decomposes Context Engineering into core components such as retrieval, processing, and management.

- It details system implementations like RAG architectures, memory systems, and tool-integrated reasoning to enhance LLM performance.

- It identifies a critical asymmetry: LLMs excel at understanding context yet struggle to generate comparably sophisticated long-form output, and it urges future research to close this gap.

Context Engineering for LLMs: A Systematic Review

This survey paper (arXiv:2507.13334) introduces Context Engineering as a formal discipline for optimizing the information payloads provided to LLMs. It presents a structured taxonomy that decomposes Context Engineering into foundational components and system implementations, offering a unified framework for researchers and engineers. The paper also identifies a research gap: LLMs are proficient at understanding complex contexts but limited in generating equally sophisticated long-form outputs.

Core Components of Context Engineering

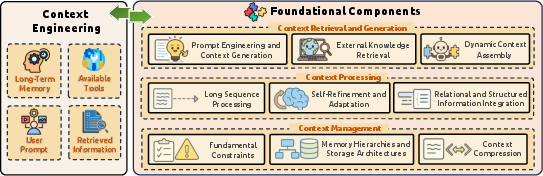

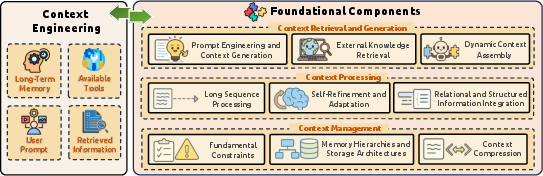

The paper categorizes Context Engineering into three foundational components:

- Context Retrieval and Generation: This component focuses on prompt-based generation and external knowledge acquisition, including techniques like Chain-of-Thought (CoT), Retrieval-Augmented Generation (RAG), and Cognitive Prompting.

- Context Processing: This addresses long sequence processing, self-refinement mechanisms, and structured information integration, incorporating methods such as FlashAttention, Self-Refine, and StructGPT.

- Context Management: This component covers memory hierarchies, compression, and optimization strategies, employing techniques like Context Compression, KV Cache Management, and Activation Refilling.

Figure 1: The Context Engineering Framework illustrates the components and implementations within the field, highlighting the relationships between Context Retrieval and Generation, Context Processing, Context Management, and various system implementations.
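To make the three-component split concrete, the following is a minimal sketch of how retrieval, processing, and management might be chained to assemble a prompt. It is not taken from the paper: the function names (`retrieve`, `process`, `manage`, `build_context`), the word-overlap scorer, and the word-count budget are all illustrative assumptions standing in for real retrievers, refinement steps, and compression strategies.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    text: str
    score: float  # toy relevance: fraction of query words found in the text


def retrieve(query: str, corpus: list[str], k: int = 3) -> list[Snippet]:
    """Context retrieval: rank documents by word overlap with the query (toy scorer)."""
    q_words = set(query.lower().split())
    scored = []
    for doc in corpus:
        overlap = len(q_words & set(doc.lower().split()))
        if overlap:
            scored.append(Snippet(doc, overlap / len(q_words)))
    return sorted(scored, key=lambda s: s.score, reverse=True)[:k]


def process(snippets: list[Snippet]) -> list[Snippet]:
    """Context processing: drop exact duplicates while preserving rank order."""
    seen, unique = set(), []
    for s in snippets:
        if s.text not in seen:
            seen.add(s.text)
            unique.append(s)
    return unique


def manage(snippets: list[Snippet], budget_words: int) -> list[Snippet]:
    """Context management: keep ranked snippets that fit a word budget (assumed proxy for tokens)."""
    kept, used = [], 0
    for s in snippets:
        n = len(s.text.split())
        if used + n <= budget_words:
            kept.append(s)
            used += n
    return kept


def build_context(query: str, corpus: list[str], budget_words: int = 20) -> str:
    """Chain the three stages and format the result as a prompt string."""
    snippets = manage(process(retrieve(query, corpus)), budget_words)
    context = "\n".join(f"- {s.text}" for s in snippets)
    return f"Context:\n{context}\n\nQuestion: {query}"
```

Calling `build_context("how does FlashAttention handle long sequence", corpus)` on a small list of strings returns a prompt whose context section holds only the deduplicated, budget-fitting matches. Each stage can be swapped independently, which is the practical point of treating the three components separately.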

System Implementations

The paper explores how the foundational components are integrated into sophisticated system implementations:

- Retrieval-Augmented Generation (RAG): This includes modular, agentic, and graph-enhanced architectures, such as FlashRAG, Self-RAG, and GraphRAG.

- Memory Systems: These systems enable persistent interactions, with examples like MemoryBank, MemLLM, and MemGPT.

- Tool-Integrated Reasoning: This involves function calling and environmental interaction, utilizing systems like Toolformer, ReAct, and ToolLLM.

- Multi-Agent Systems: These systems coordinate multiple agents through communication protocols and orchestration strategies.

Figure 2: The Retrieval-Augmented Generation Framework outlines the different architectures, including Modular RAG, Agentic RAG Systems, and Graph-Enhanced RAG approaches for integrating external context.
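As an illustration of the tool-integrated reasoning pattern listed above, the sketch below runs a ReAct-style loop in which a model alternates between tool calls and observations until it emits a final answer. It is a simplified assumption, not the implementation of ReAct, Toolformer, or ToolLLM: the `TOOLS` registry, the `Action: name[arg]` text format, and `scripted_model` (a hard-coded stand-in for an LLM) are all hypothetical.

```python
from typing import Callable

# Hypothetical tool registry; names and behaviours are illustrative only.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split("+"))),
    "upper": lambda arg: arg.upper(),
}


def scripted_model(transcript: list[str]) -> str:
    """Stand-in for an LLM: emits one tool call, then answers with the observation."""
    observations = [line for line in transcript if line.startswith("Observation: ")]
    if not observations:
        return "Action: add[2+3]"
    return "Final: " + observations[-1].removeprefix("Observation: ")


def run_agent(question: str,
              model: Callable[[list[str]], str] = scripted_model,
              max_steps: int = 4) -> str:
    """Alternate model steps and tool observations until a final answer or the step limit."""
    transcript = [f"Question: {question}"]
    for _step in range(max_steps):
        step = model(transcript)
        transcript.append(step)
        if step.startswith("Final: "):
            return step.removeprefix("Final: ")
        # Parse the assumed "Action: name[argument]" format.
        name, _sep, rest = step.removeprefix("Action: ").partition("[")
        arg = rest.rstrip("]")
        tool = TOOLS.get(name)
        result = tool(arg) if tool else f"unknown tool {name!r}"
        # Feed the tool result back into the context for the next model step.
        transcript.append(f"Observation: {result}")
    return "no answer within step limit"
```

The key design point is that every tool result is appended to the transcript, so the growing context is what lets the next model step condition on what the environment returned. The step limit guards against a model that never produces a final answer.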

Addressing the Asymmetry Between Understanding and Generation

A key contribution of this survey is identifying a significant asymmetry in LLMs: they are highly proficient at understanding complex contexts but struggle to generate equally sophisticated long-form outputs. This gap highlights the need for future research on strengthening the generative capabilities of LLMs.

Future Research Directions and Challenges

The paper outlines several future research directions and challenges.

Evaluation Methodologies

The survey also discusses methodologies for evaluating context-engineered systems, emphasizing the need for component-level diagnostics, system-level integration assessments, and benchmark datasets tailored to specific applications. Together, these are intended to support comprehensive and systematic assessment of context-aware AI systems.
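The distinction between component-level and system-level evaluation can be sketched with two simple metrics computed over the same examples. The metric choices here (recall@k for the retrieval component, exact match for the end-to-end answer) and the example record layout are assumptions made for illustration; the survey does not prescribe them.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Component-level: share of relevant document IDs found in the top-k retrieved."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


def exact_match(prediction: str, reference: str) -> bool:
    """System-level: case- and whitespace-insensitive match of the final answer."""
    return prediction.strip().lower() == reference.strip().lower()


def evaluate(examples: list[dict], k: int = 2) -> dict[str, float]:
    """Report both levels side by side so a failure can be localised."""
    n = len(examples)
    recall = sum(recall_at_k(e["retrieved"], e["relevant"], k) for e in examples) / n
    accuracy = sum(exact_match(e["prediction"], e["reference"]) for e in examples) / n
    return {"retrieval_recall_at_k": recall, "answer_exact_match": accuracy}
```

Reporting the two numbers together helps separate failure modes: high retrieval recall with low answer accuracy points at the generation step, while low recall suggests the retriever is the bottleneck.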

Conclusion

The paper concludes by emphasizing the critical role of Context Engineering in advancing context-aware AI and by providing a roadmap for future research. Its systematic review and taxonomy offer a resource for researchers and engineers who build and deploy systems that make effective use of contextual information.