Examining the Evolution and Mechanisms of Retrieval-Augmented Generation (RAG) for Enhancing LLMs

Introduction

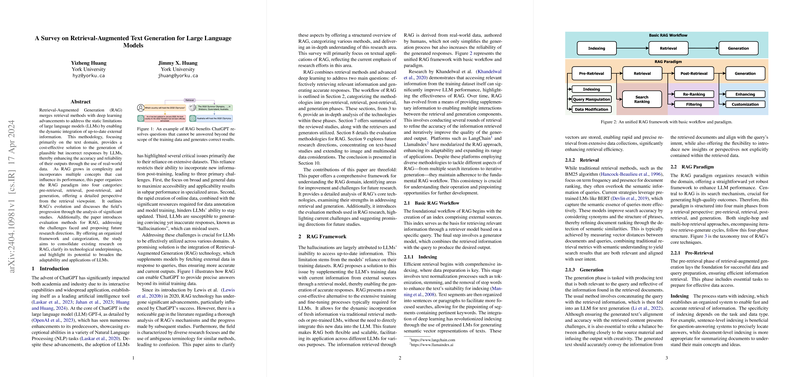

The paper surveys the advancements and methodologies of Retrieval-Augmented Generation (RAG), focusing on how it mitigates the limitations that static training data imposes on LLMs. By integrating dynamic, up-to-date external information, RAG addresses accuracy problems in LLM responses, such as poor performance in specialized domains and "hallucinations" (plausible but incorrect answers). The analysis spans the pre-retrieval, retrieval, post-retrieval, and generation stages, offering a comprehensive framework for applying RAG in text-based AI systems, with additional insights into multimodal applications.

RAG Implementation Framework

The four-phase structure of RAG implementation comprises:

- Pre-Retrieval: Operations like indexing and query manipulation prepare the system for efficient information retrieval.

- Retrieval: This phase employs methods to search and rank data relevant to the input query, leveraging both traditional techniques like BM25 and newer semantic-oriented models such as BERT.

- Post-Retrieval: This phase re-ranks and filters the retrieved content so that only the most useful passages reach the generation phase.

- Generation: The final text output is generated, merging retrieved information with the original query to produce accurate and contextually appropriate responses.
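The four phases above can be sketched end to end in a few lines. The example below is a minimal illustration, not the paper's implementation: it assumes a toy in-memory corpus, uses a hand-written Okapi BM25 scorer for the retrieval phase, a simple score threshold for post-retrieval, and stops at prompt construction, since the actual generation call depends on whichever LLM is used. All function names (`build_index`, `retrieve`, `post_filter`, `build_prompt`) and the `DOCS` corpus are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus; any document collection could stand in here.
DOCS = [
    "RAG combines retrieval with text generation.",
    "BM25 ranks documents by term frequency and inverse document frequency.",
    "Bananas are rich in potassium.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Pre-retrieval: tokenize documents and collect corpus statistics."""
    tokens = [tokenize(d) for d in docs]
    doc_freq = Counter(term for toks in tokens for term in set(toks))
    avg_len = sum(len(t) for t in tokens) / len(tokens)
    return {"docs": docs, "tokens": tokens, "df": doc_freq, "avg_len": avg_len}


def bm25_score(query_terms, doc_tokens, index, k1=1.5, b=0.75):
    """Okapi BM25 score of a single document for the given query terms."""
    n_docs = len(index["docs"])
    tf = Counter(doc_tokens)
    score = 0.0
    for term in query_terms:
        if term not in tf:
            continue
        df = index["df"][term]
        idf = math.log(1 + (n_docs - df + 0.5) / (df + 0.5))
        length_norm = 1 - b + b * len(doc_tokens) / index["avg_len"]
        score += idf * tf[term] * (k1 + 1) / (tf[term] + k1 * length_norm)
    return score


def retrieve(query, index, top_k=2):
    """Retrieval: score every document and keep the top_k (score, doc) pairs."""
    query_terms = tokenize(query)
    scored = [
        (bm25_score(query_terms, toks, index), doc)
        for toks, doc in zip(index["tokens"], index["docs"])
    ]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:top_k]


def post_filter(scored, min_score=0.0):
    """Post-retrieval: drop candidates whose score does not exceed min_score."""
    return [doc for score, doc in scored if score > min_score]


def build_prompt(query, passages):
    """Generation input: merge the kept passages with the original query."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


query = "How does BM25 rank documents?"
index = build_index(DOCS)
passages = post_filter(retrieve(query, index))
prompt = build_prompt(query, passages)  # this string would be sent to an LLM
```

In a real system the BM25 scorer would typically be replaced or complemented by a dense encoder, and the threshold filter by a learned re-ranker; the stage boundaries stay the same.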

Evaluation of RAG Systems

The paper discusses evaluation methods focusing on:

- Segmented Analysis: Examining retrieval and generation components individually to assess performance accurately in relevant tasks such as question answering.

- End-to-End Evaluation: Evaluating the system's overall performance to ensure the coherence and correctness of generated responses.
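The two evaluation views can be illustrated with a small sketch. The summary does not name specific metrics, so the choices here are assumptions: recall@k stands in for the retrieval-side (segmented) measurement, and exact match against a reference answer stands in for the correctness check on the final output in a question-answering setting. The function names and the example records are hypothetical.

```python
def recall_at_k(retrieved_ids, relevant_ids, k):
    """Retrieval-only metric: share of relevant documents found in the top k."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    return len(set(retrieved_ids[:k]) & relevant) / len(relevant)


def normalize(text):
    """Lowercase and collapse whitespace before comparing answers."""
    return " ".join(text.lower().split())


def exact_match(prediction, reference):
    """Answer-level metric: does the generated answer equal the reference?"""
    return normalize(prediction) == normalize(reference)


def evaluate(examples, k=2):
    """Average both metrics over a list of evaluation records."""
    n = len(examples)
    recall = sum(recall_at_k(e["retrieved"], e["relevant"], k) for e in examples) / n
    em = sum(exact_match(e["answer"], e["reference"]) for e in examples) / n
    return {"recall@k": recall, "exact_match": em}


# Hypothetical records: ranked document ids from the retriever, gold relevant
# ids, the system's generated answer, and the reference answer.
examples = [
    {"retrieved": ["d1", "d3", "d2"], "relevant": ["d1", "d2"],
     "answer": "Paris", "reference": "paris"},
    {"retrieved": ["d4", "d5"], "relevant": ["d5"],
     "answer": "1990", "reference": "1989"},
]

print(evaluate(examples))  # {'recall@k': 0.75, 'exact_match': 0.5}
```

Reporting the two numbers side by side helps separate failures: the second record retrieves the relevant document but still produces a wrong answer, which points at the generation step rather than the retriever.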

Impact and Theoretical Implications

The integration of retrieval mechanisms within LLMs presents both practical and theoretical implications:

- Practical Applications: Enhancing the adaptability of LLMs in various domains by integrating real-time data, thus maintaining their relevance over time.

- Theoretical Advancements: RAG prompts a re-evaluation of traditional LLM architectures and motivates hybrid models that interact dynamically with external data sources.

Future Prospects and Developments

Looking ahead, the paper suggests expanding RAG applications beyond text to include multimodal data, which could open up new possibilities in areas like interactive AI and automated content creation. Advances in retrieval methods, such as differentiable search indices and the integration of generative models into retrieval, hold promise for further improving the precision and efficiency of RAG systems.

Conclusion

This paper provides a structured examination and categorization of RAG methodologies, offering a detailed analysis from a retrieval perspective. By tracing RAG's evolution, categorizing its mechanisms, and highlighting its impact, it serves as a useful resource for researchers aiming to advance the functionality and application of LLMs through retrieval-augmented technologies.