Advances and Challenges in Transformer Architectures for Long-Context LLMs

The survey "Advancing Transformer Architecture in Long-Context LLMs: A Comprehensive Survey" examines how LLMs have evolved to handle longer contexts and lays groundwork for future work in this area. It systematically reviews enhancements to Transformer-based LLM architectures, highlighting emerging techniques designed to overcome the architecture's intrinsic limitations on extended contexts. Transformer models have become pivotal in applications that require nuanced language understanding and generation across many domains. However, their fundamental design makes long sequences difficult: the cost of attention grows quadratically with sequence length, and the effective context window is limited.

Overcoming Structural Limitations in Transformers

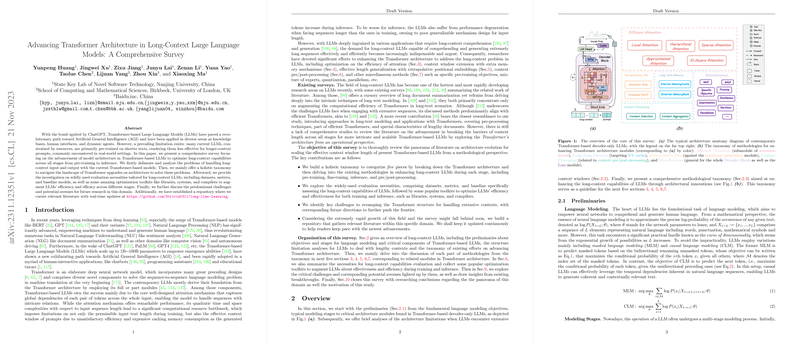

The survey first emphasizes the structural challenges Transformers face with long-context inputs. The primary issues are the computational and memory complexity of standard attention, which scales quadratically with sequence length, and the lack of an adequate long-term memory; together these lead to suboptimal performance on lengthy texts. Addressing them requires redesigning core architectural modules within the Transformer layers. The survey organizes the research into five methodological categories: Efficient Attention, Long-Term Memory mechanisms, Extrapolative Positional Encodings, Context Processing, and Miscellaneous approaches.
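To make the quadratic growth in attention cost concrete, the following back-of-the-envelope sketch estimates the memory needed to hold the attention score matrix for a single layer. The function name, the head count of 32, and the 2 bytes per value (half precision) are illustrative assumptions, not figures from the survey, and the estimate assumes a naive implementation that materializes the full matrix.

```python
def attention_score_bytes(seq_len: int, num_heads: int = 32, bytes_per_value: int = 2) -> int:
    """Bytes needed to hold one layer's full seq_len x seq_len score matrix for every head.

    num_heads and bytes_per_value are assumed values chosen for illustration.
    """
    return seq_len * seq_len * num_heads * bytes_per_value


for n in (2_048, 8_192, 32_768):
    gib = attention_score_bytes(n) / 2**30
    print(f"{n:>6} tokens -> {gib:8.2f} GiB of attention scores per layer")
```

Under these assumptions the estimate rises from 0.25 GiB at 2,048 tokens to 64 GiB at 32,768 tokens: a 16-fold increase in length costs 256 times the memory.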

Efficiency Strategies in Attention Mechanisms

A notable area of innovation lies in improving the efficiency of the attention mechanism. Local, hierarchical, and sparse attention mechanisms limit or adapt the global attention scope and thereby reduce computational complexity. Local attention restricts each token to attending to nearby tokens, while hierarchical structures decompose attention into multi-scale dependencies, allowing context to be aggregated efficiently over long sequences. Sparse attention and low-rank approximations cut further unnecessary computation by exploiting the sparsity and low-rank structure commonly observed in attention matrices.
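The idea behind local attention can be illustrated with a minimal, pure-Python sketch of sliding-window attention. It is a toy single-head version written for clarity rather than speed; the function names and the symmetric `window` parameter are assumptions made for this example and do not correspond to any specific method in the survey.

```python
import math


def local_attention(queries, keys, values, window):
    """Each position i attends only to positions j with |i - j| <= window."""
    n, d = len(queries), len(queries[0])
    outputs = []
    for i in range(n):
        lo, hi = max(0, i - window), min(n, i + window + 1)
        # Scaled dot-product scores restricted to the local neighborhood.
        scores = [
            sum(q * k for q, k in zip(queries[i], keys[j])) / math.sqrt(d)
            for j in range(lo, hi)
        ]
        # Numerically stable softmax over the window only.
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        # Weighted average of the value vectors inside the window.
        outputs.append([
            sum(w * values[j][c] for w, j in zip(weights, range(lo, hi))) / total
            for c in range(len(values[0]))
        ])
    return outputs


def scored_pairs(n, window):
    """Number of query-key pairs that local attention actually scores."""
    return sum(min(n, i + window + 1) - max(0, i - window) for i in range(n))


# Toy input: four positions with two-dimensional embeddings.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
out = local_attention(x, x, x, window=1)

# Compare the work done by a 128-token window with full attention at 4,096 tokens.
local_cost = scored_pairs(4096, 128)
full_cost = 4096 ** 2
```

With a fixed window the number of scored pairs grows linearly with sequence length instead of quadratically. The price is that information from distant tokens can reach a position only indirectly, through stacked layers.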

Enhancements in Memory and Positional Encoding

Another line of work modifies the memory mechanisms of Transformers. Internal memory caches and external memory banks retain contextual information beyond the in-context working memory that a standard Transformer provides. These strategies borrow concepts from recurrent neural networks, enabling models to track long-term dependencies across sequence segments. They are complemented by advances in positional encoding that improve extrapolation, which is crucial for extending the Transformer’s capabilities to lengths beyond those seen during training.
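A minimal sketch of an internal memory cache, in the spirit of segment-level recurrence, might look like the following. The class name `SegmentMemory`, its methods, and the string placeholders standing in for hidden-state vectors are all hypothetical and chosen only for illustration.

```python
from collections import deque


class SegmentMemory:
    """Fixed-size FIFO cache of hidden states from earlier segments (illustrative only)."""

    def __init__(self, capacity: int):
        # A bounded deque drops its oldest entries automatically once full.
        self.cache = deque(maxlen=capacity)

    def extended_context(self, segment):
        """Cached states followed by the current segment: what attention would see."""
        return list(self.cache) + list(segment)

    def update(self, segment):
        """Store the current segment's states for use by later segments."""
        self.cache.extend(segment)


memory = SegmentMemory(capacity=4)
stream = ["s0", "s1", "s2", "s3", "s4", "s5", "s6", "s7", "s8"]  # stand-ins for hidden states

contexts = []
for start in range(0, len(stream), 3):
    segment = stream[start:start + 3]
    contexts.append(memory.extended_context(segment))
    memory.update(segment)
```

Each segment is processed together with up to four cached states from earlier segments, so the model can look beyond the current segment while the per-step cost stays bounded by the cache capacity.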

Contextual Processing and Miscellaneous Strategies

The survey also reviews context processing techniques that keep computation feasible while reusing existing pretrained models. These include strategies that break input sequences into manageable segments, or that aggregate context information iteratively, to produce coherent outputs without exceeding the Transformer’s maximum sequence length.
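As a rough illustration of segment-based context processing, the sketch below splits a token sequence into overlapping segments that each fit a maximum length, then folds over them with a running state. The helper names, the overlap scheme, and the toy `step` function, which stands in for a call to a pretrained model, are assumptions made for this example.

```python
def split_with_overlap(tokens, max_len, overlap):
    """Split tokens into segments of at most max_len that share `overlap` tokens."""
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must be non-negative and smaller than max_len")
    stride = max_len - overlap
    segments = []
    for start in range(0, len(tokens), stride):
        segments.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):
            break  # this segment already reaches the end of the input
    return segments


def process_iteratively(segments, step, initial):
    """Carry a running state across segments; `step` stands in for a model call."""
    state = initial
    for segment in segments:
        state = step(state, segment)
    return state


tokens = list(range(10))
segments = split_with_overlap(tokens, max_len=4, overlap=1)

# Toy aggregation: the running state is the set of token ids seen so far.
seen = process_iteratively(segments, lambda state, seg: state | set(seg), initial=set())
```

The overlap gives each segment a little context from its predecessor. In a real pipeline the running state would more plausibly be a summary or a set of retrieved passages than a set of token ids.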

The Way Forward: Theoretical and Methodological Speculations

The survey encourages theoretical advances in future work, emphasizing the need for universally applicable language modeling objectives that capture long-range dependencies. It raises questions about the prospects for more scalable and robust attention approximations, together with intrinsic memory enhancements for maintaining coherence across very long contexts. It also underscores the importance of reliable and responsive evaluation metrics.

The research points to a growing trend of combining off-the-shelf models with sophisticated memory and processing techniques to address long-context challenges, suggesting a path toward more versatile models that handle extremely long dependencies efficiently. The paper concludes by identifying potential directions and advocating continued sharing of advances to refine LLM approaches toward effective language modeling for AGI.

In sum, the survey offers insight into the difficulties and innovations involved in adapting Transformer architectures to long-context sequences, combining theoretical and practical advances in efficient long-context processing for LLMs. Its detailed exposition can serve as a reference point for researchers working to refine LLM architectures with stronger long-context capabilities.