Contextual Compression in Retrieval-Augmented Generation for LLMs: An Overview

The survey "Contextual Compression in Retrieval-Augmented Generation for LLMs: A Survey" by Sourav Verma examines the advances, challenges, and future directions of contextual compression methods for Retrieval-Augmented Generation (RAG) with large language models (LLMs). It traces the evolution of contextual compression paradigms, with the aim of making RAG models more effective when handling long and complex inputs.

Introduction

LLMs have achieved significant milestones in natural language processing (NLP), excelling in tasks such as document summarization, question answering, and conversational AI. Despite these strengths, LLMs suffer from hallucinations, outdated knowledge, and restricted context windows, which hinder their performance in domains that require current and specific knowledge. RAG methods mitigate these limitations by retrieving contextually relevant snippets from external databases, which improves the coherence and reliability of the generated content.

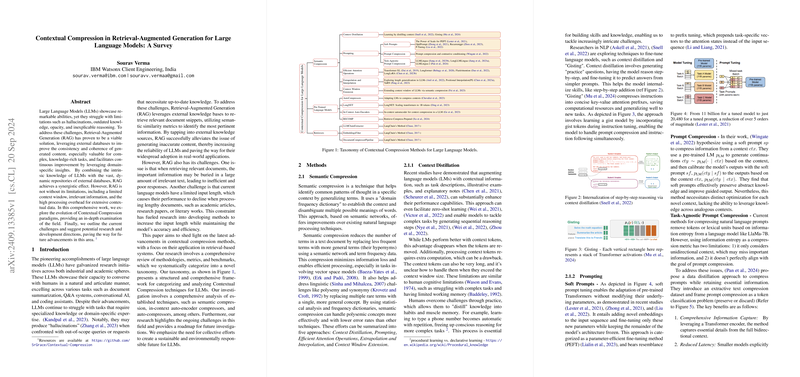

Core Challenges and Taxonomy of Contextual Compression

RAG systems face two key challenges: retrieved documents often contain a large amount of irrelevant information, and processing long contexts carries significant computational overhead. Contextual compression methods address both by condensing the context to only the pertinent information.

The survey categorizes contextual compression techniques into a structured taxonomy comprising:

- Semantic Compression

- Pre-trained LLMs

- Retrievers

Semantic Compression

This category involves summarizing text by replacing less frequent terms with their more general hypernyms, with the goals of minimizing information loss and handling linguistic challenges such as polysemy and synonymy. It also encompasses methods such as Context Distillation, where language models are fine-tuned to internalize step-by-step reasoning, and Gisting, which condenses instructions into concise key-value attention prefixes.
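The hypernym-replacement idea can be illustrated with a minimal sketch. The hypernym table, the `min_count` threshold, and the function name below are assumptions made for illustration; the survey does not prescribe them, and a real system would draw hypernyms from a lexical resource rather than a hand-written dictionary.

```python
from collections import Counter

# Hypothetical hypernym table, hand-written for this example only.
HYPERNYMS = {"spaniel": "dog", "terrier": "dog", "sedan": "car", "hatchback": "car"}

def compress_with_hypernyms(tokens, hypernyms, min_count=2):
    """Replace tokens seen fewer than min_count times with their hypernym, if one is known."""
    counts = Counter(tokens)
    return [
        hypernyms[t] if counts[t] < min_count and t in hypernyms else t
        for t in tokens
    ]

tokens = "the spaniel chased the sedan while the terrier watched the sedan".split()
compressed = compress_with_hypernyms(tokens, HYPERNYMS)
print(" ".join(compressed))
```

In this toy input, the two rare breed names collapse to a single general term, which shrinks the vocabulary, while the repeated term "sedan" is left alone because it is frequent enough to keep.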

Key Techniques in Semantic Compression:

- Context Distillation (e.g., learning by distilling context).

- Prompting (including Soft Prompts, Prompt Compression, and Task-Agnostic Prompt Compression).

- Efficient Attention Operations (e.g., Transformer-XL, Longformer, FlashAttention).

- Extrapolation and Interpolation (methods for extending the context window, such as Position Interpolation and YaRN).
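As a rough illustration of the last item, Position Interpolation linearly rescales position indices so that a longer sequence falls inside the position range seen during training. The sketch below assumes rotary position embeddings and uses illustrative values for the context lengths, dimension, and base; the function names are hypothetical. YaRN adjusts frequencies non-uniformly and is not shown here.

```python
def interpolate_position(position, train_len, target_len):
    """Linearly rescale a position in [0, target_len) into the trained range [0, train_len)."""
    return position * train_len / target_len

def rope_angles(position, dim=8, base=10000.0):
    """Rotation angle of each of the dim/2 frequency pairs at a (possibly fractional) position."""
    return [position / (base ** (2 * i / dim)) for i in range(dim // 2)]

train_len, target_len = 2048, 8192  # assumed lengths, for illustration only

# The last position of the extended window maps to a fractional position
# that still lies inside the range the model was trained on.
scaled = interpolate_position(8191, train_len, target_len)
angles = rope_angles(scaled)
print(scaled, angles)
```

The design point is that the model only ever sees position values it was trained on, at the cost of packing neighbouring tokens closer together in position space.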

Pre-trained LLMs (PLMs)

PLMs like BERT, GPT, and their successors have revolutionized NLP with their ability to generalize across varied tasks after training on large datasets. The paper discusses AutoCompressors, LongNET, and In-Context Auto-Encoders, which compress long contexts into smaller, manageable units without significant performance loss.

Notable Contributions in PLMs:

- AutoCompressors: Sequentially compress segments of long documents into summary vectors.

- LongNET: Uses dilated attention to scale sequence length to 1 billion tokens efficiently.

- In-Context Auto-Encoders: Compress lengthy contexts into fixed memory buffers for efficient processing.
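To make the dilated attention idea more concrete, the following sketch computes which token positions would take part in attention for a single segment length and dilation rate. This is a simplified illustration under assumed parameters, not LongNET's implementation: the function name is hypothetical, and the actual method combines several segment lengths and dilation rates.

```python
def dilated_indices(seq_len, segment_len, dilation):
    """Split positions into segments and keep every `dilation`-th position in each segment."""
    segments = []
    for start in range(0, seq_len, segment_len):
        end = min(start + segment_len, seq_len)
        segments.append(list(range(start, end, dilation)))
    return segments

# Assumed toy sizes: 16 tokens, segments of 8, keep every 2nd token.
pattern = dilated_indices(seq_len=16, segment_len=8, dilation=2)
print(pattern)
```

Attention is then computed only within each sparsified segment, so the cost per segment depends on `segment_len / dilation` rather than on the full sequence length.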

Retrievers

Retrievers refine the selection process by returning only the most relevant documents from large datasets. Contextual compression here involves components such as LLMChainExtractor, EmbeddingsFilter, and DocumentCompressorPipeline, which prune redundant or irrelevant data.
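The embedding-based filtering idea can be sketched in a few lines. The example below does not call any real library: the bag-of-words `embed` function stands in for an actual embedding model, and the function names and the 0.3 threshold are assumptions chosen for illustration.

```python
import math
from collections import Counter

def embed(text):
    """Toy bag-of-words 'embedding'; a stand-in for a real embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def filter_by_similarity(query, documents, threshold=0.3):
    """Keep only documents whose similarity to the query meets the threshold."""
    q = embed(query)
    return [d for d in documents if cosine(q, embed(d)) >= threshold]

docs = [
    "contextual compression shortens retrieved context",
    "the weather in paris is mild in spring",
    "compression of retrieved context reduces cost",
]
kept = filter_by_similarity("compression of retrieved context", docs)
print(kept)
```

Here the unrelated weather document shares no terms with the query and is dropped, so only the two compression-related documents would be passed on to the LLM.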

Evaluation Metrics and Benchmarks

The paper reviews the "Triad of Metrics" for evaluating RAG-based solutions: Groundedness, Context Relevance, and Answer Relevance. Additional metrics include Noise Robustness, Negative Rejection, Information Integration, and Counterfactual Robustness. The effectiveness and efficiency of compressed contexts are assessed via benchmarks and datasets designed for diverse NLP tasks.

Challenges and Future Directions

The survey identifies several challenges and prospective areas for future research:

- Advancing Compression Methods: Developing more sophisticated techniques tailored for LLMs to bridge performance gaps.

- Performance-Size Trade-offs: Balancing model performance against context size.

- Dynamic Contextual Compression: Automating the contextual compression process to adapt dynamically to the input data.

- Explainability: Ensuring compressed models maintain interpretability and reliability.

Conclusion

The survey serves as a detailed exploration of the current landscape of contextual compression for LLMs, presenting a thorough analysis of various methodologies, evaluation metrics, and benchmarks. It underscores the potential for these techniques to significantly enhance the effectiveness of LLM applications in real-world scenarios and emphasizes the need for continued innovation and ethical considerations in the development and deployment of LLMs.

This overview highlights the key contributions, challenges, and implications of the research for researchers in the field of NLP.