From Rational Answers to Emotional Resonance: The Role of Controllable Emotion Generation in Language Models

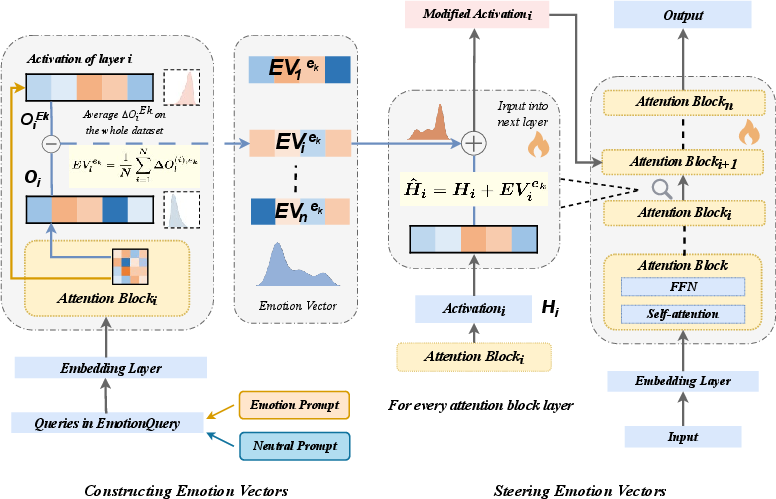

Abstract:

Purpose: Emotion is a fundamental component of human communication, shaping understanding, trust, and engagement across domains such as education, healthcare, and mental health. While LLMs exhibit strong reasoning and knowledge generation capabilities, they still struggle to express emotions in a consistent, controllable, and contextually appropriate manner. This limitation restricts their potential for authentic human-AI interaction.

Methods: We propose a controllable emotion generation framework based on Emotion Vectors (EVs), latent representations derived from internal activation shifts between neutral and emotion-conditioned responses. By injecting these vectors into the hidden states of pretrained LLMs during inference, our method enables fine-grained, continuous modulation of emotional tone without any additional training or architectural modification. We further provide theoretical analysis proving that EV steering enhances emotional expressivity while maintaining semantic fidelity and linguistic fluency.

Results: Extensive experiments across multiple LLM families show that the proposed approach achieves consistent emotional alignment, stable topic adherence, and controllable affect intensity. Compared with existing prompt-based and fine-tuning-based baselines, our method demonstrates superior flexibility and generalizability.

Conclusion: Emotion Vector (EV) steering provides an efficient and interpretable means of bridging rational reasoning and affective understanding in LLMs, offering a promising direction for building emotionally resonant AI systems capable of more natural human-machine interaction.

Explain it Like I'm 14

Overview

This paper is about teaching AI chatbots to “talk with feelings” in a careful, controllable way. Today’s large language models (LLMs) are great at reasoning and answering questions, but they often sound flat or awkward when trying to be emotional. That’s a problem in places like classrooms, hospitals, and mental health support, where warmth, empathy, and encouragement matter. The authors introduce a simple, plug-and-play method that lets LLMs adjust their emotional tone, like turning a dial from neutral to happy, sad, or calm, without retraining the model.

What questions did the researchers ask?

The team focused on three easy-to-understand goals:

- Can we make an LLM reliably sound more emotional when we want it to?

- Can we do this without messing up the meaning or fluency of its answers?

- Can we control how strong the emotion is, from light to intense, like a volume knob?

How did they do it?

Think of an LLM as a huge orchestra playing a song (your answer). Inside the model, there are “hidden states,” which are like the instruments’ notes before you hear the music. The researchers discovered a way to add an “emotion filter” to these notes so the final song sounds joyful, comforting, worried, etc.—without changing the instruments themselves.

Here’s the approach in everyday terms:

- They asked the model to respond to the same kinds of prompts in two ways: once neutral (no emotion), and once with a specific emotion (like joy or sadness).

- Inside the model, they compared the “hidden patterns” of the neutral and emotional responses. The difference between these patterns is what they call an Emotion Vector (EV). You can think of an EV as a “direction” that nudges the model’s tone toward a particular feeling.

- During answering (inference), they inject this EV into the model’s inner workings. It’s like adding a subtle flavor to a dish while it’s being cooked—no need to rebuild the kitchen.

- They use a simple number, alpha (α), to control intensity. Small α means a gentle emotional tone; larger α feels stronger. You can even mix EVs (like blending joy and calm), because they add together like colors.
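The steps above can be sketched with plain lists standing in for hidden states. This is a minimal illustration, not the authors' implementation: it assumes the EV is the average difference between paired emotional and neutral hidden states and that injection is a simple addition scaled by α. The function names (`emotion_vector`, `mix`, `steer`) and all numbers are made up for the example; a real system would read and modify activations inside the model at chosen layers.

```python
from statistics import fmean
from typing import List, Sequence, Tuple

Vector = List[float]


def emotion_vector(neutral: Sequence[Vector], emotional: Sequence[Vector]) -> Vector:
    """Mean per-dimension shift from neutral to emotion-conditioned hidden states."""
    if not neutral or len(neutral) != len(emotional):
        raise ValueError("need equally many (and at least one) neutral/emotional states")
    dim = len(neutral[0])
    return [fmean(e[i] - n[i] for n, e in zip(neutral, emotional)) for i in range(dim)]


def mix(weighted_evs: Sequence[Tuple[Vector, float]]) -> Vector:
    """Blend several EVs additively, e.g. part joy and part calm."""
    dim = len(weighted_evs[0][0])
    return [sum(w * ev[i] for ev, w in weighted_evs) for i in range(dim)]


def steer(hidden: Vector, ev: Vector, alpha: float) -> Vector:
    """Inject the EV into one hidden state; alpha is the intensity dial."""
    return [h + alpha * v for h, v in zip(hidden, ev)]


# Toy 3-dimensional "hidden states" (made-up numbers, not real activations).
neutral_states = [[0.0, 1.0, 2.0], [1.0, 1.0, 1.0]]
joyful_states = [[1.0, 1.0, 2.5], [2.0, 1.0, 1.5]]

joy_ev = emotion_vector(neutral_states, joyful_states)
calm_ev = [0.0, 2.0, 0.0]  # pretend this was extracted the same way

gentle = steer([0.2, 0.4, 0.6], joy_ev, alpha=0.5)   # small nudge toward joy
strong = steer([0.2, 0.4, 0.6], joy_ev, alpha=2.0)   # same direction, larger step
blended = steer([0.2, 0.4, 0.6], mix([(joy_ev, 0.5), (calm_ev, 0.5)]), alpha=1.0)
```

Because the same direction is added every time, changing α only changes how far the hidden state moves along it, which is what makes the "dial" behavior plausible.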

Important details:

- No extra training is required.

- No changes to the model’s architecture.

- Works across many different LLMs.

- The method aims to keep the meaning of the original answer the same, while changing the emotional style.

What did they find and why it matters?

Main results in simple terms:

- It works across many models: The method made different LLMs sound appropriately emotional—joy, anger, sadness, fear, disgust—without losing track of the topic.

- Meaning and fluency stay stable: Measures of sentence fluency (how natural the text reads) and topic adherence (staying on-topic) stayed almost the same as the original answers, even after adding emotion. In some models, fluency even improved slightly.

- Clear control over emotion strength: Turning up α increased the emotional intensity in a predictable way, like a volume knob for feelings. Mixing EVs produced combined tones in a controllable way.

- Theory supports the method: The authors explain why this works mathematically. In short, because EVs are built from neutral vs. emotional pairs with the same meaning, they push emotion without pulling the topic off course.

- Too much intensity can cause issues: If α is set too high, some models may drift off-topic or become repetitive. So the “volume knob” should be used thoughtfully.

How they measured success:

- Emotion Probability Score (EPS): How likely a sentence is to sound emotional. With EVs, this score went up across most models.

- Emotion Absolute Score (EAS): How strong the emotion feels on a 0–100 scale. With EVs, scores jumped significantly at moderate α.

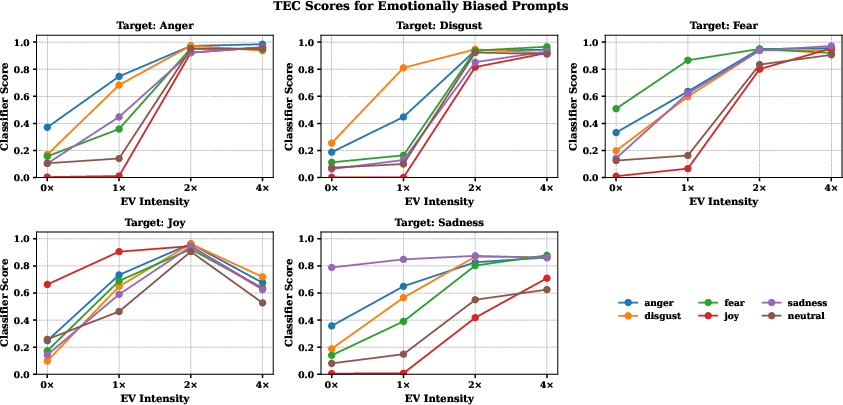

- Target Emotion Confidence (TEC): How confidently a classifier detects the specific target emotion (like joy). This improved notably at 1× and 2× EV strength, with less benefit or slight drops at very high levels (4×) for some models.
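To make the last metric concrete, here is one way a target-emotion confidence could be computed from classifier outputs. The exact definition used in the paper is not given in this summary, so the averaging rule below is an assumption, and `target_emotion_confidence` is a hypothetical helper name. The probabilities are invented for illustration and are not results from the paper.

```python
from statistics import fmean
from typing import Dict, Iterable


def target_emotion_confidence(predictions: Iterable[Dict[str, float]], target: str) -> float:
    """Average probability the classifier assigns to the target emotion.

    Each prediction maps an emotion label to a probability for one generated
    sentence. A missing label counts as probability 0.
    """
    probs = [p.get(target, 0.0) for p in predictions]
    if not probs:
        raise ValueError("no predictions to score")
    return fmean(probs)


# Invented classifier outputs for two generated sentences.
example_predictions = [
    {"joy": 0.8, "sadness": 0.2},
    {"joy": 0.6, "sadness": 0.4},
]
joy_confidence = target_emotion_confidence(example_predictions, "joy")
```

Running the same scoring on outputs generated at different α values would show whether confidence rises at moderate strength and flattens or drops at very high strength.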

Visual checks:

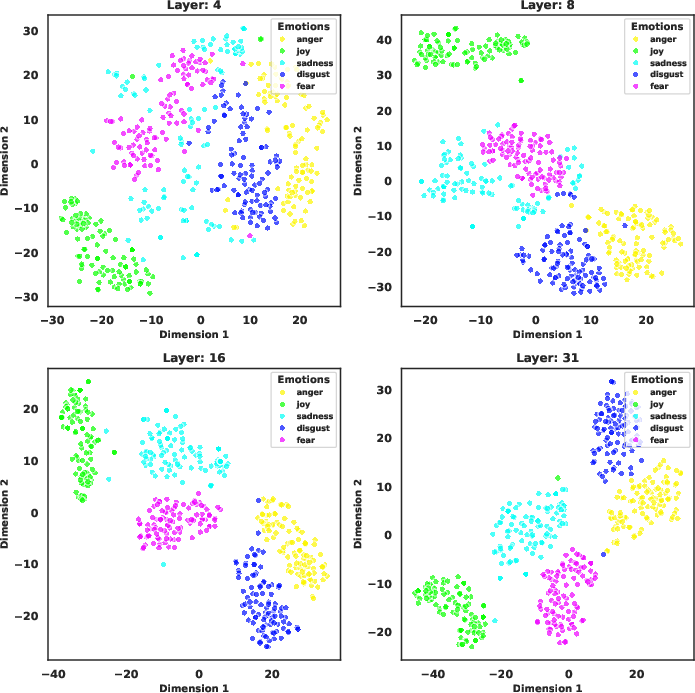

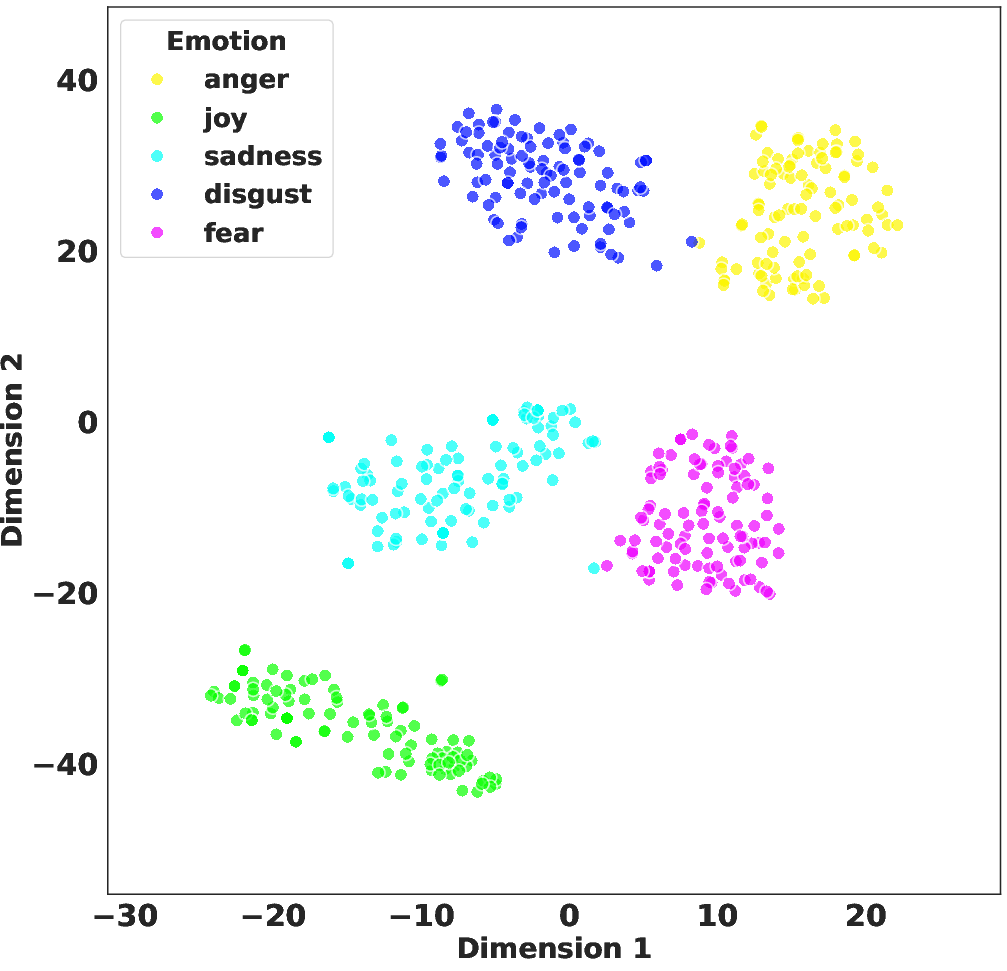

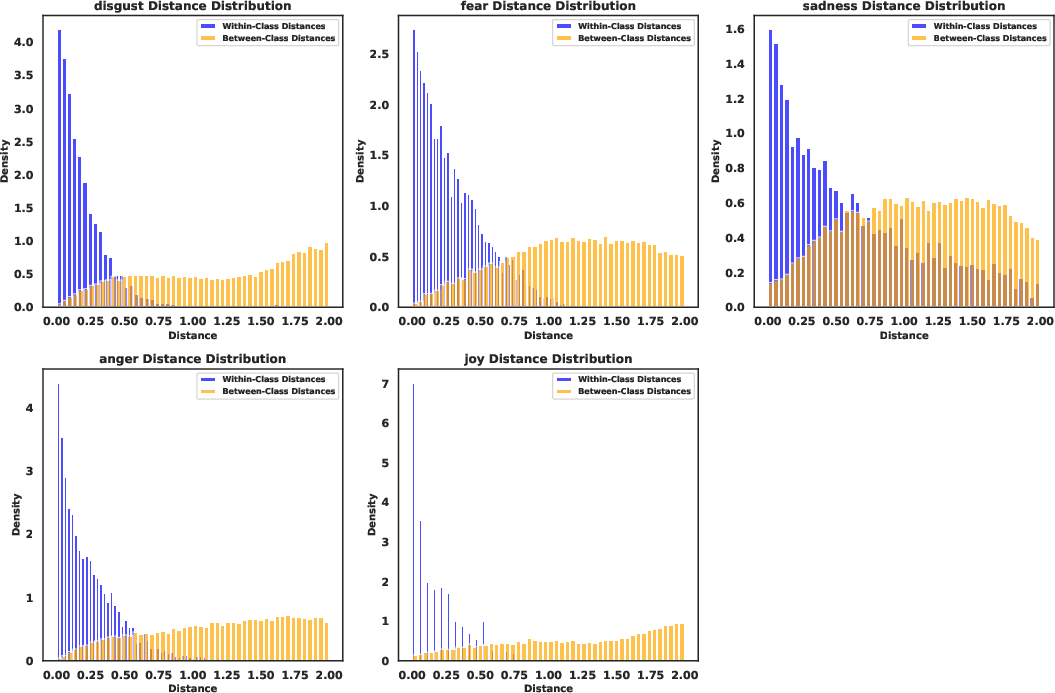

- In t-SNE plots, the EVs form neat clusters for each emotion, meaning the vectors are stable and consistent. That suggests they capture something real and reusable about each feeling.
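A t-SNE plot is a visual check. A simpler numerical companion, sketched below, is to compare cosine similarity between EVs of the same emotion against EVs of different emotions. This is a swapped-in technique for illustration, not the paper's analysis; the helper names and the toy 2-dimensional vectors are assumptions.

```python
import math
from itertools import combinations, product
from statistics import fmean
from typing import List, Sequence

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity: 1 means same direction, -1 means opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / norm


def within_similarity(evs: Sequence[Vector]) -> float:
    """Mean similarity over all pairs of EVs extracted for one emotion."""
    return fmean(cosine(a, b) for a, b in combinations(evs, 2))


def between_similarity(evs_a: Sequence[Vector], evs_b: Sequence[Vector]) -> float:
    """Mean similarity between EVs of two different emotions."""
    return fmean(cosine(a, b) for a, b in product(evs_a, evs_b))


# Toy EVs, as if extracted from two different prompt batches per emotion.
joy_runs = [[1.0, 0.1], [0.9, 0.2]]
sad_runs = [[-1.0, 0.1], [-0.8, 0.0]]

same_emotion = within_similarity(joy_runs)
different_emotion = between_similarity(joy_runs, sad_runs)
```

If each emotion really has a stable direction, the within-emotion value should sit close to 1 and clearly above the between-emotion value.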

Why is this important?

This approach can make AI more human-friendly:

- In education: AI tutors can encourage students when they struggle, celebrate successes, and keep them motivated.

- In healthcare: Assistants can share information while also sounding reassuring and empathetic.

- In mental health: Chatbots can offer support with warmth and validation, not just instructions.

Because it needs no retraining and works across many models, it’s efficient, flexible, and practical. It helps bridge the gap between “smart answers” and “answers that feel caring,” which can build trust and improve user satisfaction. In short, this is a step toward AI that not only thinks clearly but also communicates with heart—safely and in a controlled way.

Practical Applications

Immediate Applications

Below are concrete ways the paper’s Emotion Vector (EV) steering method can be deployed now, given its training-free, plug-and-play nature and demonstrated cross-model generality. Each item includes sectors, potential tools/workflows, and feasibility assumptions.

- Emotionally consistent customer support and sales chats (software, retail, finance, telecom, energy utilities)
  - What: Add controllable warmth, reassurance, or calm to responses while preserving factual content; de-escalate frustrated users; A/B test conversion-oriented emotional tones in sales.
  - Tools/workflows: EV Controller SDK integrated into inference servers (vLLM, TGI, llama.cpp); dashboard slider for α (intensity); per-queue presets (e.g., “calm” for incident queues).
  - Assumptions/dependencies: Access to hidden states in serving stack (open-source or vendor-exposed hooks); guardrails to cap α and avoid topic drift.

- AI tutoring with affective feedback (education)
  - What: Maintain encouragement and patience over multi-turn help; adapt tone to learner frustration; celebrate progress.
  - Tools/workflows: LMS plugin with EV presets (encouraging, reassuring); teacher “tone templates” per course; logging to monitor adherence to syllabus constraints.
  - Assumptions/dependencies: Instructor supervision; α calibrated per model to avoid semantic drift; clear disclosure to learners.

- Patient communications and portals (healthcare admin and engagement)
  - What: Empathetic appointment reminders, test-result explanations, discharge guidance; reduce anxiety without altering clinical content.
  - Tools/workflows: EV presets embedded in EHR messaging assistants; “reassure + concise” preset for sensitive notifications.
  - Assumptions/dependencies: Use in non-diagnostic, non-therapeutic contexts; clinical oversight; compliance with HIPAA/GDPR; escalation policies.

- Peer-support and psychoeducation chat (mental health, wellbeing)
  - What: Add validation and warmth in supportive, non-clinical contexts; maintain consistent empathetic tone across turns.
  - Tools/workflows: EV α scheduler that lowers intensity near risky content; integrated crisis triage and handoff.
  - Assumptions/dependencies: Clear disclaimers; safety filters; human-in-the-loop escalation; restricted scope (no therapy).

- Email and internal comms tone control (enterprise/HR, daily productivity)
  - What: Rewrite drafts to “warm but professional,” “supportive,” or “concise and calm” without changing substance.
  - Tools/workflows: Mail client add-ins (“Adjust tone” with α slider); batch tone normalization for announcements.
  - Assumptions/dependencies: On-device or trusted-host inference for privacy; user consent; audit logs for sensitive contexts.

- Marketing copy and content operations (marketing, media)
  - What: Control emotional resonance of headlines, CTAs, and scripts; systematically A/B test emotional intensity.
  - Tools/workflows: “EV Copy Studio” with per-campaign tone presets; analytics loop connecting click-through rate and α.
  - Assumptions/dependencies: Brand safety and compliance review; avoid manipulative intensities; content approvals.

- Community moderation and de-escalation assistants (platform ops, civic tech)
  - What: Generate calm, empathic replies to heated posts; reduce toxicity while preserving policy references.
  - Tools/workflows: Moderator cockpit with “calm/firm” presets; EV combined with policy retrieval.
  - Assumptions/dependencies: Policy-aligned templates; abuse detection; α caps to prevent sermonizing or drift.

- NPC dialogue and roleplay (gaming, entertainment)
  - What: Consistently steer NPCs to joy/anger/fear/sadness tones; blend emotions additively for nuanced scenes.
  - Tools/workflows: “EV Mixer” for scene scripting; α linked to game state; TTS prosody mapping via SSML.
  - Assumptions/dependencies: TTS engine that supports prosody/emotion tags; latency budget for multi-layer injection.

- Developer middleware for controllable generation (software tooling)
  - What: Library to extract and apply EVs per model; α schedules; emotion mixing; evaluation harnesses.
  - Tools/workflows: Python/TypeScript SDK; LangChain/LlamaIndex tool wrappers; CI checks for topic adherence and perplexity stability.
  - Assumptions/dependencies: Access to model residual streams; per-model α calibration to avoid high-α degradation.

- Research instrumentation for interpretability and affective computing (academia)
  - What: Use EVs to probe layerwise affect representation; build benchmarks for semantic preservation under affect control.
  - Tools/workflows: Open “EmotionVector Hub” with EVs per model; reproducible notebooks for TEC/EPS/EAS metrics; cross-validation datasets.
  - Assumptions/dependencies: Availability of model families and dataset licenses; common evaluators (MNLI-classifiers, LLM judges).

- Accessibility aids for tone management (daily life, neurodiversity support)
  - What: Help users craft messages with appropriate warmth/firmness; offer alternatives for conflict-sensitive communication.
  - Tools/workflows: Keyboard or messaging app extension with quick “tone fit” options; privacy-preserving local models.
  - Assumptions/dependencies: On-device inference for sensitive content; user controls and opt-in.

- Sector-specific service desks (utilities, public services, insurance)
  - What: Tone-tuned responses for outage notifications, claims updates, billing disputes; reduce churn by empathetic framing.
  - Tools/workflows: “Context → EV preset” routing based on ticket category; α guardrails by policy.
  - Assumptions/dependencies: Regulatory compliance; auditability; risk reviews for fairness across demographics.
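Several of the workflows above come down to the same small piece of logic: pick an emotion preset from context, then clamp α to a policy limit. The sketch below shows one possible shape for that routing. Everything in it is hypothetical (the `TonePreset` type, the category names, the preset values, and the `MAX_ALPHA` cap); real limits would come from per-model calibration and policy review.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class TonePreset:
    emotion: str   # which EV to apply
    alpha: float   # intensity passed to the steering step


# Hypothetical routing table: ticket category -> tone preset.
PRESETS: Dict[str, TonePreset] = {
    "outage": TonePreset("calm", 1.0),
    "billing_dispute": TonePreset("reassure", 1.5),
}
NEUTRAL = TonePreset("neutral", 0.0)  # fallback: no steering at all
MAX_ALPHA = 2.0                       # assumed policy cap, not a value from the paper


def select_preset(category: str, requested_alpha: Optional[float] = None) -> TonePreset:
    """Return the preset for a ticket category with alpha clamped to [0, MAX_ALPHA]."""
    preset = PRESETS.get(category)
    if preset is None:
        return NEUTRAL  # unknown categories are never steered
    alpha = preset.alpha if requested_alpha is None else requested_alpha
    return TonePreset(preset.emotion, max(0.0, min(alpha, MAX_ALPHA)))
```

For example, `select_preset("outage", requested_alpha=5.0)` keeps the "calm" emotion but returns α capped at 2.0, so an operator's slider cannot push the model past the guardrail.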

Long-Term Applications

These opportunities require further research, platform support, scaling, or regulatory/ethical frameworks before broad deployment.

- Affect-aware clinical assistants and digital therapeutics (healthcare)
  - What: Emotionally attuned bedside-manner for clinical Q&A; supportive coaching in chronic care; digital CBT companions with controlled affect.
  - Tools/workflows: Longitudinal α personalization; integration with EHR; multimodal sensors (voice, wearables) for affect detection.
  - Assumptions/dependencies: Clinical trials (efficacy and safety); SaMD/FDA/EMA pathways; continuous monitoring; strict guardrails.

- Emotionally adaptive learning at scale (education)
  - What: Tutors that modulate affect by learner state (frustration/flow), across courses and semesters; cohort-level fairness audits.
  - Tools/workflows: Affective state estimators; α policies tied to engagement metrics; teacher-in-the-loop dashboards.
  - Assumptions/dependencies: Privacy-preserving telemetry; IRB-approved studies; bias and outcome monitoring.

- Social and service robotics with multimodal emotion (robotics, eldercare, retail)
  - What: Map EVs to speech prosody, gestures, and facial expressions for coherent, culture-aware affect.
  - Tools/workflows: Cross-modal mapping from EV to TTS/animation; scene-level α orchestration; safety “emotion governor.”
  - Assumptions/dependencies: High-quality TTS with prosody control; cultural calibration; robust on-device inference.

- Platform-level “Tone-as-a-Service” APIs (software platforms, model providers)
  - What: Cloud APIs that expose safe emotion controls (α-bounded) without revealing raw hidden states; standardized presets (reassure, encourage, de-escalate).
  - Tools/workflows: Vendor-side activation hooks; usage caps and auditing; consent and disclosure primitives.
  - Assumptions/dependencies: Provider support for activation steering; standardized safety evaluation and logging.

- Personalized affect profiles and adaptive regulation (cross-sector)
  - What: Learn user-preferred tone and dynamically regulate α per recipient/context; reduce misfires in sensitive exchanges.
  - Tools/workflows: Federated learning of tone preferences; differential privacy; “emotion budget” per session.
  - Assumptions/dependencies: Consent and transparency; strong privacy guarantees; fairness constraints.

- Cross-lingual and cross-cultural EV banks (global products, localization)
  - What: Culture-aligned EV repositories; mappings from dimensional valence-arousal to language-specific realizations.
  - Tools/workflows: Localization toolchains; human-in-the-loop cultural QA; region-specific α calibration.
  - Assumptions/dependencies: Diverse corpora; cultural review boards; multilingual evaluation benchmarks.

- Safety, governance, and compliance frameworks for emotional AI (policy, compliance)
  - What: Standards for disclosure, opt-in, and limits on persuasive intensity; audits of emotional manipulation risk.
  - Tools/workflows: “Emotional intensity ledger” and watermarking; third-party certification; incident reporting.
  - Assumptions/dependencies: Regulator guidance; industry consortia; harmonized international norms.

- Empathy benchmarking and evaluation science (academia, standards bodies)
  - What: Validated, task-specific measures beyond classifier scores (e.g., patient satisfaction, learning resilience).
  - Tools/workflows: Shared datasets and protocols; multi-rater human studies; causal impact analyses.
  - Assumptions/dependencies: Funding for longitudinal studies; interdisciplinary coordination.

- Negotiation, collections, and dispute resolution assistants (finance, gov services)
  - What: Emotionally calibrated communication to reduce defaults and resolve disputes ethically.
  - Tools/workflows: Outcome-aware α strategies; fairness and harm-minimization constraints; human review.
  - Assumptions/dependencies: Strong compliance oversight; bias audits; stakeholder approval.

- Crisis response and public communications (public health, emergency management)
  - What: Tone-tuned alerts and guidance that reduce panic while conveying urgency.
  - Tools/workflows: Scenario libraries with approved EV presets; stress-tested α ranges; multilingual deployment.
  - Assumptions/dependencies: Coordination with authorities; misinformation safeguards; rigorous simulations.

- Content moderation with psychological harm minimization (platforms)
  - What: Generate restorative messages and counterspeech with controlled empathy/firmness; reduce recidivism.
  - Tools/workflows: Harm-aware α controllers; continuous evaluation on platform metrics; red-teaming.
  - Assumptions/dependencies: Ethical review; transparency; adversarial testing.

- Model architecture co-design for affect control (ML research)
  - What: Dedicated “affect channels” that expose safely bounded control; disentangled representations for stable steering.
  - Tools/workflows: Pretraining objectives that separate semantics and affect; safety layers to prevent drift at high α.
  - Assumptions/dependencies: Access to pretraining; community validation; open benchmarks.

Cross-cutting assumptions and dependencies to keep in mind

- Access to hidden states: Immediate deployment favors open-source or vendor-supported models that expose residual streams; most hosted APIs do not.

- Calibration: α must be tuned per model to prevent topic drift and fluency degradation at high intensities; include automatic safeguards.

- Safety and ethics: Emotional steering can be manipulative if misused; require disclosure, opt-in, and use-case limits; strict guardrails in health/finance/politics.

- Cultural and linguistic variance: EVs may not transfer perfectly across languages and cultures; localized EVs and evaluations are needed.

- Evaluation reliability: Classifier- or LLM-judge-based emotion scores are proxies; incorporate human ratings and task outcomes for high-stakes use.

- Data privacy: Tone personalization and message rewriting may involve sensitive content; prefer on-device or private deployments with auditable logs.
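The calibration point above can be turned into a small search: try increasing α values and keep the largest one that still passes topic and fluency checks. The sketch below assumes a caller-supplied `evaluate` function that returns a topic-adherence score and a perplexity ratio relative to the unsteered model. That function, the thresholds, and the helper name `calibrate_alpha` are all hypothetical, and the search assumes quality only gets worse as α grows.

```python
from typing import Callable, Iterable, Tuple

# evaluate(alpha) -> (topic_adherence in [0, 1], perplexity / baseline_perplexity)
Evaluator = Callable[[float], Tuple[float, float]]


def calibrate_alpha(
    candidates: Iterable[float],
    evaluate: Evaluator,
    min_topic: float = 0.9,       # assumed threshold, tune per use case
    max_ppl_ratio: float = 1.2,   # assumed threshold, tune per use case
) -> float:
    """Largest candidate alpha that keeps topic adherence and fluency in bounds.

    Returns 0.0 (no steering) if even the smallest candidate fails.
    """
    best = 0.0
    for alpha in sorted(candidates):
        topic, ppl_ratio = evaluate(alpha)
        if topic < min_topic or ppl_ratio > max_ppl_ratio:
            break  # assume larger values would only degrade further
        best = alpha
    return best


# Stand-in evaluator backed by made-up numbers (not results from the paper).
_fake_scores = {0.5: (0.98, 1.00), 1.0: (0.96, 1.02), 2.0: (0.93, 1.10), 4.0: (0.70, 1.60)}
safe_alpha = calibrate_alpha(_fake_scores, lambda a: _fake_scores[a])
```

In practice the evaluator would generate steered text on a held-out prompt set and score it, and the resulting value would serve as the cap for that specific model.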
