- The paper introduces a novel Debate-to-Detect framework that reformulates misinformation detection as an adversarial debate among LLM-driven agents.

- It employs a dual-layer architecture with domain-specific agents and a five-stage orchestrator to enhance fact-checking through multi-dimensional evaluation.

- Experiments on Weibo21 and FakeNewsDataset show significant gains in accuracy, precision, recall, and F1-score over traditional methods.

The paper "Debate-to-Detect: Reformulating Misinformation Detection as a Real-World Debate with LLMs" proposes Debate-to-Detect (D2D), a multi-agent debate framework for misinformation detection. Whereas traditional detectors mostly rely on static classification, D2D structures detection as an adversarial debate among LLM agents. The goal is to address the limitations of existing LLMs in fact-checking by adding multi-step reasoning and a multi-dimensional evaluation mechanism.

Introduction of D2D Framework

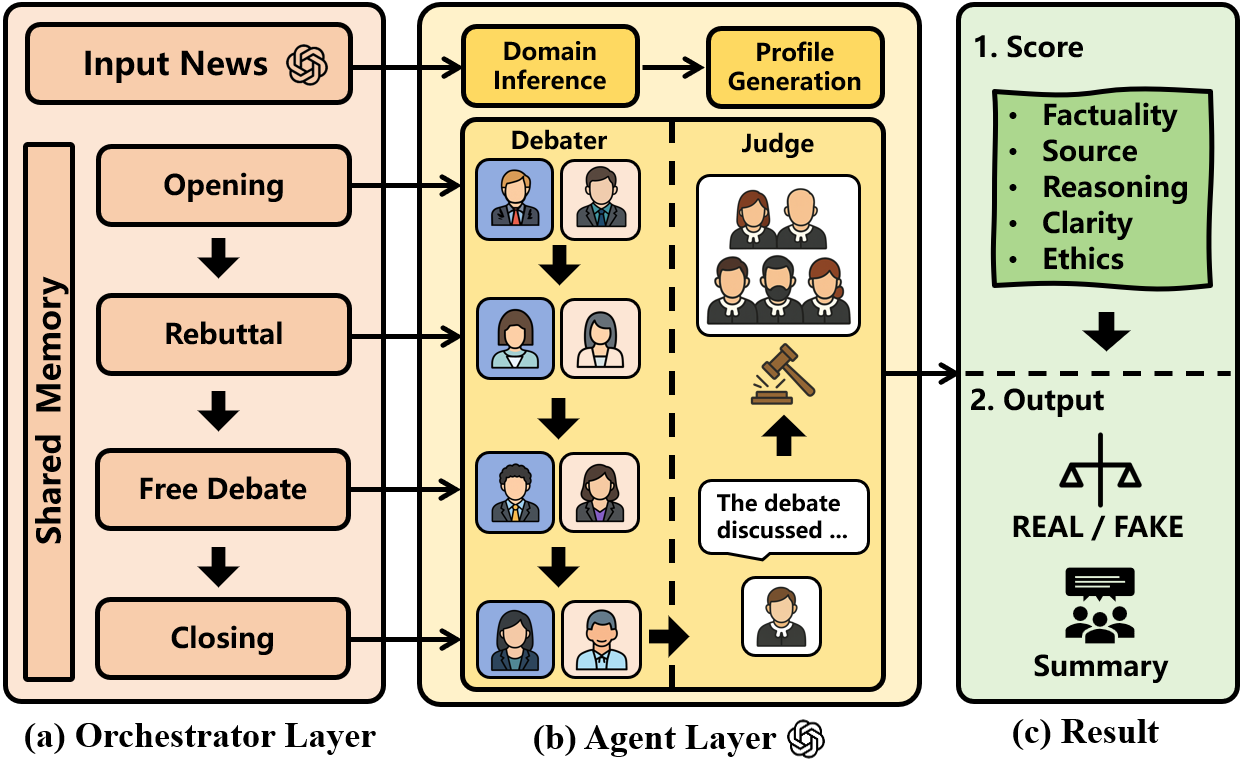

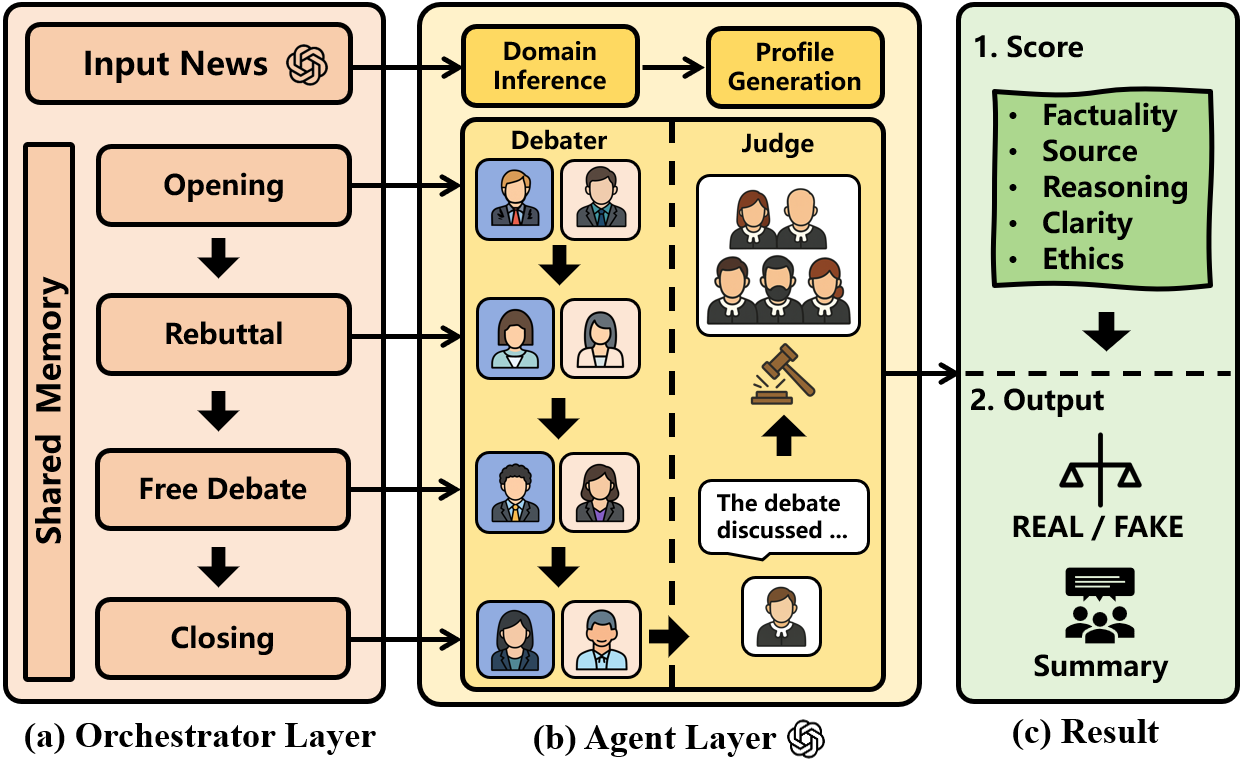

D2D reformulates misinformation detection as a structured process simulating real-world debates, enabling a more dynamic and context-aware evaluation of claims. The framework consists of two main layers: the Agent Layer and the Orchestrator Layer.

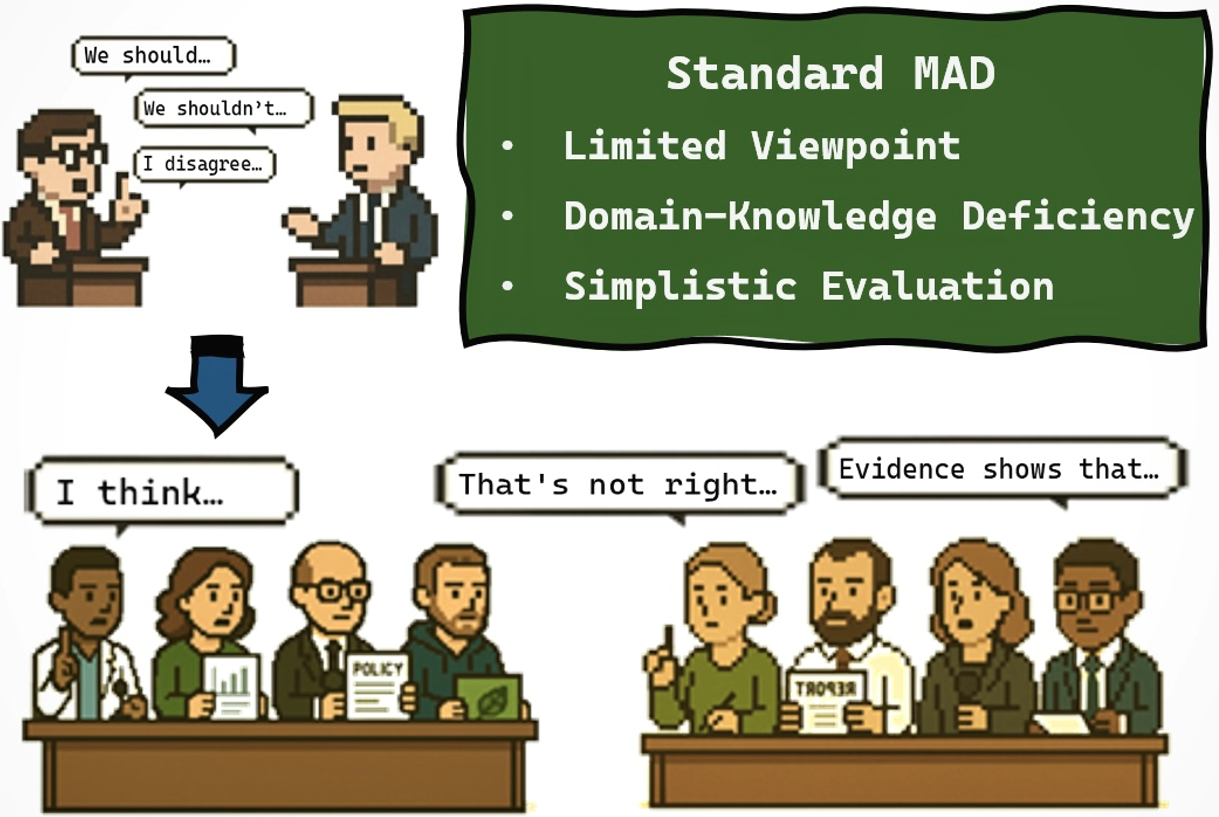

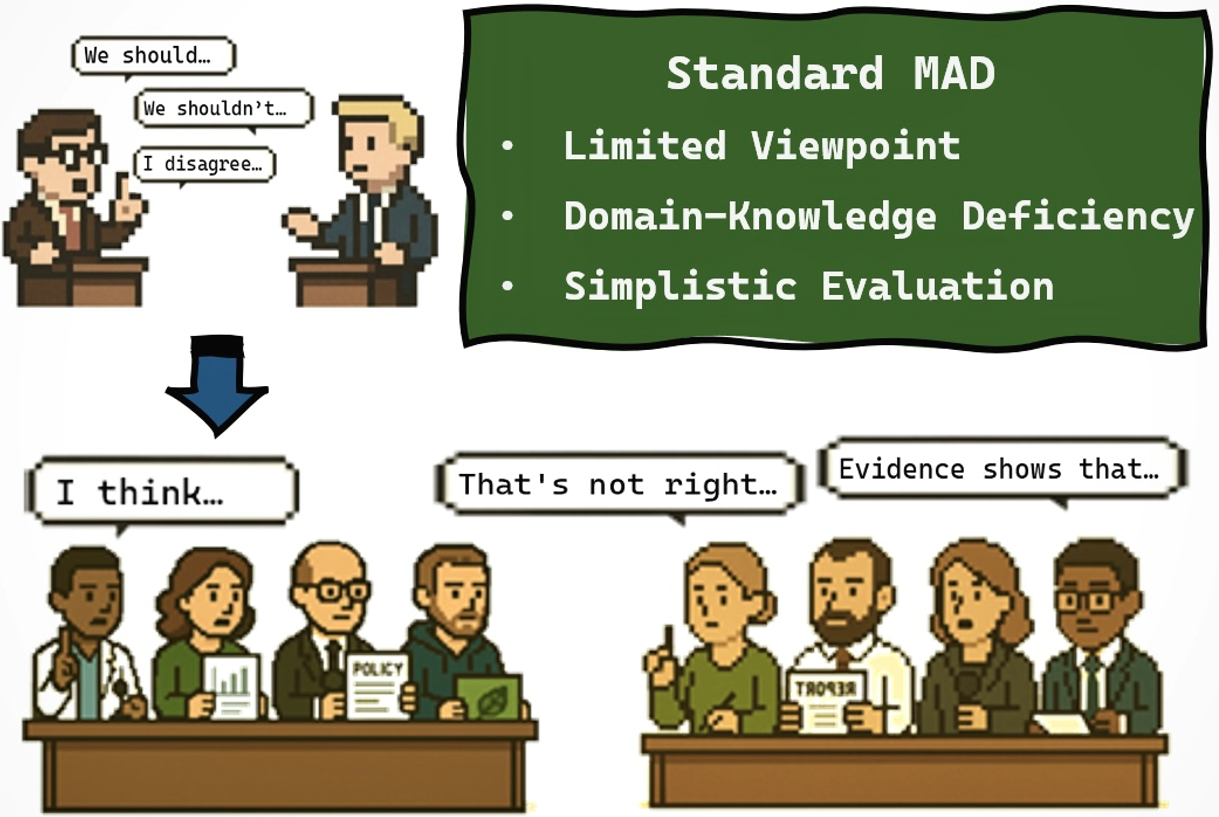

Figure 1: Comparison of standard multi-agent debate with the D2D framework, whose domain-specific agents broaden argument exploration.

The Agent Layer consists of domain-specific agents assigned to one of three roles: Affirmative, Negative, or Judge. Each agent debates according to a predefined profile, which is meant to keep its arguments contextually relevant and sensitive to the claim's domain.
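The role-plus-profile setup can be sketched as a small data structure. This is a minimal illustration under stated assumptions, not the authors' code: the stance wording, the prompt template, and the names `AgentProfile` and `build_panel` are all hypothetical, and we assume the Affirmative side defends the claim while the Negative side argues it is misinformation.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """The three debate roles named in the D2D Agent Layer."""
    AFFIRMATIVE = "Affirmative"
    NEGATIVE = "Negative"
    JUDGE = "Judge"


# Assumed stance wording; the paper's actual prompts are not reproduced here.
STANCES = {
    Role.AFFIRMATIVE: "Argue that the claim is accurate.",
    Role.NEGATIVE: "Argue that the claim is misinformation.",
    Role.JUDGE: "Weigh both sides impartially and score them.",
}


@dataclass(frozen=True)
class AgentProfile:
    """Hypothetical profile: a debate role plus a domain of expertise."""
    role: Role
    domain: str  # hypothetical domain label, e.g. "health" or "politics"

    def system_prompt(self, claim: str) -> str:
        """Build a role- and domain-conditioned instruction for an LLM."""
        return (f"Role: {self.role.value}. Domain expertise: {self.domain}. "
                f"{STANCES[self.role]}\nClaim: {claim}")


def build_panel(domain: str) -> list[AgentProfile]:
    """Instantiate one agent per role, all sharing the claim's domain."""
    return [AgentProfile(role, domain) for role in Role]


panel = build_panel("health")
print(panel[1].system_prompt("Vitamin C cures the flu."))
```

Conditioning every agent on the same domain label is one simple way to make the argumentation "domain-sensitive"; how D2D actually selects or describes domains is not specified here.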

The Orchestrator Layer coordinates the debate through five sequential stages: Opening Statement, Rebuttal, Free Debate, Closing Statement, and Judgment. A shared memory mechanism summarizes and preserves the relevant arguments and evidence across stages, keeping the debate coherent.

Figure 2: The D2D framework's multi-agent debate process in the orchestration layer.
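The five-stage orchestration with a shared memory can be sketched as a simple loop. This is a hedged illustration, not the paper's implementation: the `speak` and `judge` callables stand in for LLM calls, the number of free-debate rounds (`free_rounds`) is an assumed parameter, and "summarizing" is approximated by keeping only the most recent entries rather than by an LLM-generated summary.

```python
from typing import Callable

STAGES = ["Opening Statement", "Rebuttal", "Free Debate",
          "Closing Statement", "Judgment"]

# Hypothetical agent interface: (stage, side, memory summary) -> utterance
Speaker = Callable[[str, str, str], str]


class SharedMemory:
    """Running record of arguments. As a stand-in for LLM summarization,
    it simply keeps the most recent `max_entries` entries."""

    def __init__(self, max_entries: int = 6) -> None:
        self.entries: list[str] = []
        self.max_entries = max_entries

    def add(self, stage: str, side: str, text: str) -> None:
        self.entries.append(f"[{stage}] {side}: {text}")
        self.entries = self.entries[-self.max_entries:]

    def summary(self) -> str:
        return "\n".join(self.entries)


def run_debate(speak: Speaker, judge: Callable[[str], str],
               free_rounds: int = 2) -> tuple[str, SharedMemory]:
    """Run the four argumentative stages, then hand the memory to the judge."""
    memory = SharedMemory()
    for stage in STAGES[:-1]:  # every stage except Judgment
        rounds = free_rounds if stage == "Free Debate" else 1
        for _ in range(rounds):
            for side in ("Affirmative", "Negative"):
                # Each speaker sees the shared memory, not the raw transcript.
                memory.add(stage, side, speak(stage, side, memory.summary()))
    verdict = judge(memory.summary())  # final Judgment stage
    return verdict, memory


if __name__ == "__main__":
    def stub_speak(stage: str, side: str, context: str) -> str:
        return f"{side} point in {stage}"

    def stub_judge(context: str) -> str:
        return "FAKE" if "Negative" in context else "REAL"

    verdict, memory = run_debate(stub_speak, stub_judge)
    print(verdict)
    print(memory.summary())
```

Passing only the memory summary to each speaker, rather than the full transcript, is what keeps the context bounded as the debate grows; the truncation rule here is deliberately naive.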

Multi-Dimensional Evaluation Mechanism

D2D introduces an evaluation schema with five independent dimensions: Factuality, Source Reliability, Reasoning Quality, Clarity, and Ethics. Rather than producing a bare binary label, the judges score the debate along each dimension, which gives a more nuanced picture of a claim's authenticity and aligns with how experts assess claims.
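One way to turn per-dimension scores into a verdict is sketched below. The aggregation rule is an assumption for illustration only: we suppose the Judge gives each side an integer score per dimension, sums them, and labels the claim by whichever side scores higher (Affirmative defending the claim, Negative attacking it). The function name `aggregate_verdict` and the `"UNDECIDED"` tie label are hypothetical.

```python
DIMENSIONS = ("Factuality", "Source Reliability", "Reasoning Quality",
              "Clarity", "Ethics")
SIDES = ("Affirmative", "Negative")


def aggregate_verdict(scores: dict[str, dict[str, int]]) -> tuple[str, dict[str, int]]:
    """Sum each side's per-dimension scores and compare the totals.

    scores[side][dimension] is the Judge's score (scale assumed, e.g. 1-10).
    Returns the label and the per-side totals, so the dimension-level
    detail remains available for explaining the decision.
    """
    for side in SIDES:
        missing = [d for d in DIMENSIONS if d not in scores.get(side, {})]
        if missing:
            raise ValueError(f"{side} is missing scores for {missing}")

    totals = {side: sum(scores[side][d] for d in DIMENSIONS) for side in SIDES}
    if totals["Affirmative"] > totals["Negative"]:
        label = "REAL"
    elif totals["Affirmative"] < totals["Negative"]:
        label = "FAKE"
    else:
        label = "UNDECIDED"
    return label, totals


example = {
    "Affirmative": dict(zip(DIMENSIONS, (4, 5, 6, 7, 8))),
    "Negative": dict(zip(DIMENSIONS, (8, 7, 7, 6, 6))),
}
print(aggregate_verdict(example))
```

Keeping the per-dimension scores, instead of only the final label, is what makes the verdict inspectable: a reader can see, for instance, that the Negative side won mainly on Factuality and Source Reliability.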

Experimental Evaluation

The framework was evaluated on Weibo21 and FakeNewsDataset, where it improved accuracy, precision, recall, and F1-score over baselines including BERT, RoBERTa, and standard multi-agent debate.
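For reference, the four reported metrics can be computed from binary predictions as follows. This sketch treats "fake" as the positive class, which is an assumption; the paper may instead report per-class or macro-averaged scores. The function name `binary_metrics` is hypothetical.

```python
def binary_metrics(y_true: list[str], y_pred: list[str],
                   positive: str = "fake") -> dict[str, float]:
    """Accuracy, precision, recall and F1 with `positive` as the target class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


print(binary_metrics(["fake", "fake", "real", "real", "fake"],
                     ["fake", "real", "real", "fake", "fake"]))
```

In this toy input there are 2 true positives, 1 false positive, and 1 false negative, so precision, recall, and F1 all come out to 2/3, while accuracy is 3/5.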

Results and Analysis

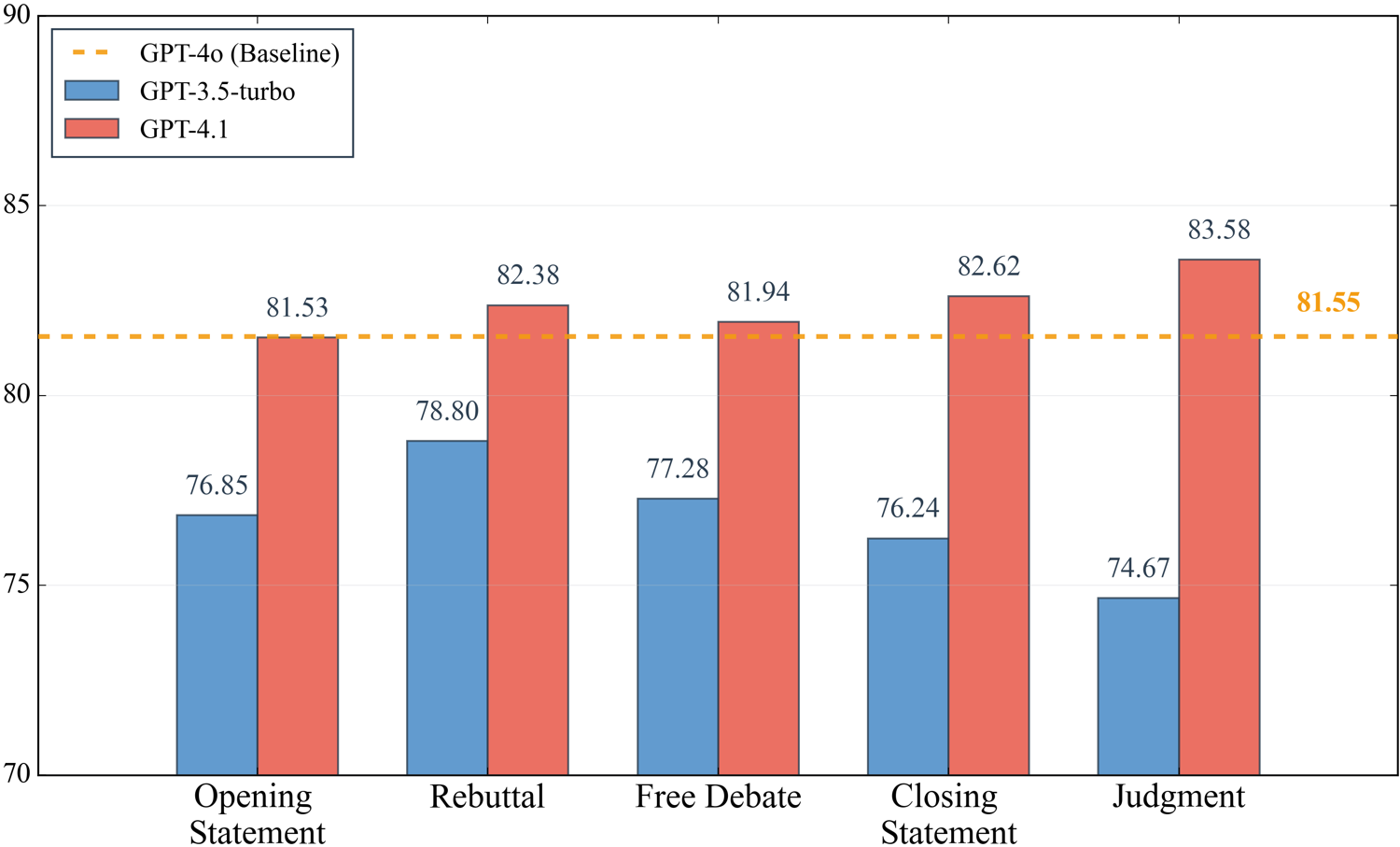

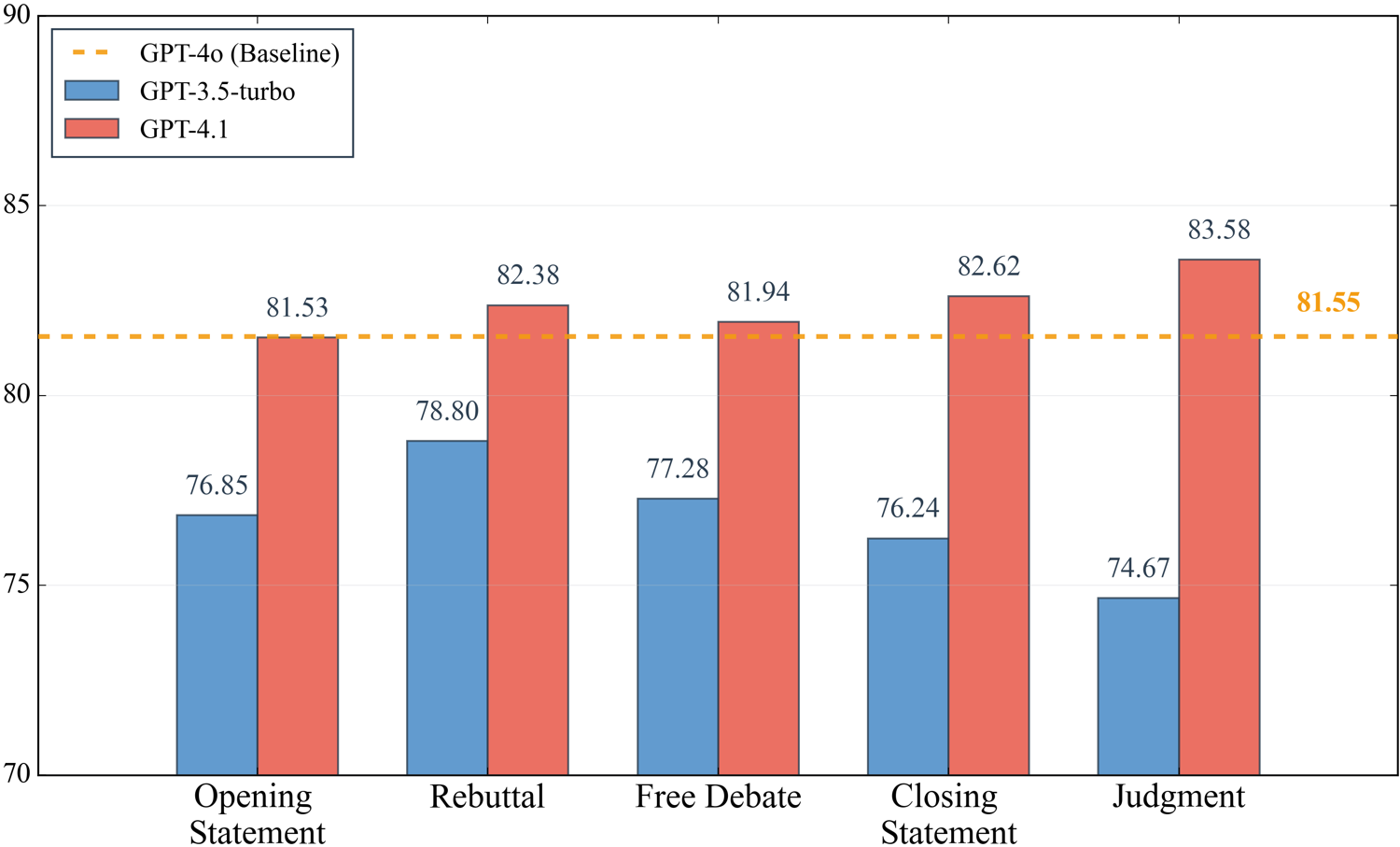

D2D also outperformed prompting-based methods such as self-reflection and chain-of-thought, which the authors attribute to the benefits of structured debate and iterative evidence refinement.

Figure 3: Performance comparison of D2D with variant models across debate stages.

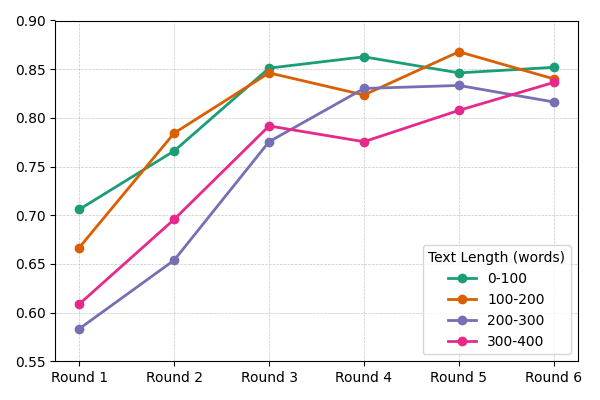

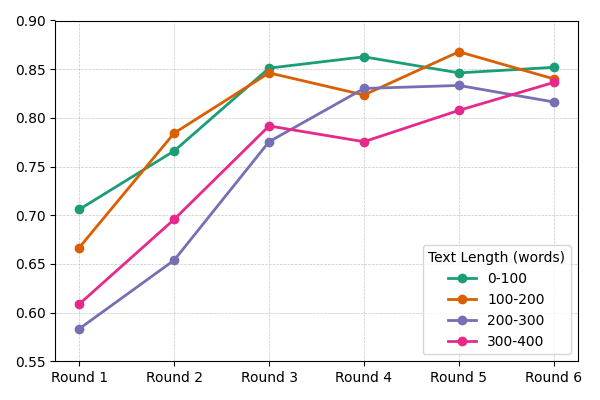

The study also assessed D2D's robustness to biases such as speaker order and lexical framing. In experiments on adaptability to text length, D2D performed best when the number of debate rounds was matched to the complexity of the text.

Figure 4: F1-score comparison by number of debate rounds and text length.
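A common way to probe speaker-order bias is to run the judge twice per debate with the speaking order swapped and measure how often the verdict stays the same. The sketch below shows that generic check; it is not necessarily the protocol used in the paper, and the `Judge` interface and `order_consistency` name are hypothetical.

```python
from typing import Callable, Sequence

# Hypothetical judge interface: ordered transcript of (side, text) -> label
Judge = Callable[[list[tuple[str, str]]], str]


def order_consistency(judge: Judge,
                      debates: Sequence[tuple[str, str]]) -> float:
    """Fraction of debates whose verdict is unchanged when speakers swap order.

    Each debate is an (affirmative_text, negative_text) pair.
    A score of 1.0 means the judge is fully invariant to speaker order.
    """
    if not debates:
        raise ValueError("need at least one debate")
    agree = 0
    for aff, neg in debates:
        forward = judge([("Affirmative", aff), ("Negative", neg)])
        swapped = judge([("Negative", neg), ("Affirmative", aff)])
        agree += forward == swapped
    return agree / len(debates)


def last_speaker_judge(transcript: list[tuple[str, str]]) -> str:
    """A deliberately biased judge: whoever speaks last wins."""
    return "REAL" if transcript[-1][0] == "Affirmative" else "FAKE"


def content_judge(transcript: list[tuple[str, str]]) -> str:
    """An order-invariant toy judge: the longer argument wins."""
    args = dict(transcript)
    return "FAKE" if len(args["Negative"]) > len(args["Affirmative"]) else "REAL"


debates = [("short", "a much longer rebuttal"), ("a detailed defence", "no")]
print(order_consistency(last_speaker_judge, debates))
print(order_consistency(content_judge, debates))
```

The two toy judges bracket the possible outcomes: the last-speaker judge flips every verdict when the order is swapped, while the content-based judge never does.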

Conclusion

The D2D framework is a substantial advance in misinformation detection, improving both accuracy and interpretability. It simulates the fact-checking process and produces structured judgments that more closely mirror human reasoning. Future work will extend the framework to multimodal misinformation, strengthen its persuasive capacity beyond classification, improve its resistance to biases, and scale it for real-time applications.

This research could improve the detection of nuanced or adversarially structured misinformation. As the framework matures, it may also be integrated into other domains to support content verification and trustworthiness assessment.