Encouraging Divergent Thinking in LLMs Through Multi-Agent Debate

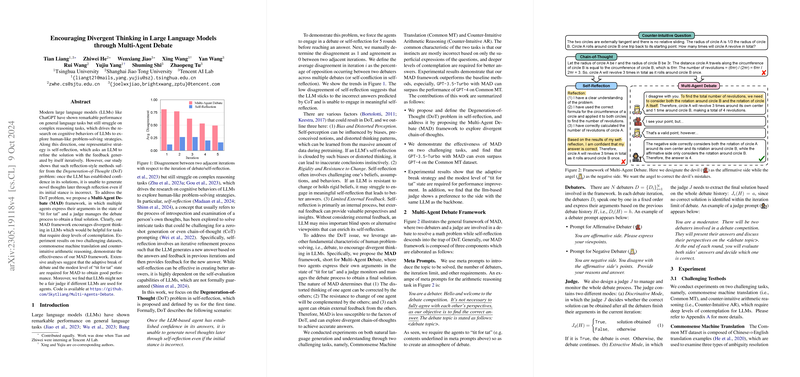

The paper under review proposes Multi-Agent Debate (MAD), a framework designed to improve the reasoning of LLMs by encouraging divergent thinking, and thereby to address the Degeneration-of-Thought (DoT) problem observed in self-reflection methods. DoT arises when an LLM becomes so confident in its initial solution that it can no longer generate new lines of thought through reflection, even when that initial solution is wrong.

The authors argue that reflection-style approaches, such as self-refinement, are susceptible to DoT: with each iteration, these methods yield increasingly homogeneous and less innovative solutions. To overcome this limitation, the MAD framework uses a "tit for tat" multi-agent setup in which multiple LLMs engage in a structured debate, overseen by a judge LLM that manages the debate across iterative rounds and settles on a final solution. The setup is intended to introduce variance into the LLMs' reasoning chains by treating disagreement as a productive force.
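The debate structure described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `run_debate`, `Agent`, and `Judge`, the two-debater layout, the speaking order, and the fixed round count are all hypothetical, and the stub functions stand in for real LLM calls.

```python
from typing import Callable, List

# Assumption: an agent is any callable that maps (question, transcript so far)
# to its next message. In a real system this would wrap an LLM call.
Agent = Callable[[str, List[str]], str]
Judge = Callable[[str, List[str]], str]


def run_debate(question: str, affirmative: Agent, negative: Agent,
               judge: Judge, max_rounds: int = 3) -> str:
    """Alternate two debaters for max_rounds, then let the judge decide."""
    transcript: List[str] = []
    for _ in range(max_rounds):
        # Each side sees the full transcript, so it can rebut the other side.
        transcript.append("AFF: " + affirmative(question, transcript))
        transcript.append("NEG: " + negative(question, transcript))
    return judge(question, transcript)


# Hypothetical stubs standing in for LLM-backed agents.
def stub_affirmative(question: str, transcript: List[str]) -> str:
    return "My answer is 10."


def stub_negative(question: str, transcript: List[str]) -> str:
    return "I disagree; my answer is 5."


def stub_judge(question: str, transcript: List[str]) -> str:
    # Toy rule: side with the most recent negative-side message.
    last_neg = [m for m in transcript if m.startswith("NEG: ")][-1]
    return last_neg[len("NEG: "):]


result = run_debate("toy question", stub_affirmative, stub_negative,
                    stub_judge, max_rounds=2)
print(result)
```

Passing the agents in as plain callables keeps the loop independent of any particular model or API, which makes it easy to swap in different backbones for the debaters and the judge.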

The paper evaluates the MAD framework on two challenging datasets: commonsense machine translation and counter-intuitive arithmetic reasoning. The findings show that MAD improves performance significantly, with a GPT-3.5-Turbo backbone surpassing GPT-4 on certain translation tasks. This is a telling result, suggesting that MAD can use disagreement to correct the biases and rigid thought patterns of LLMs.

The paper's analysis indicates that MAD's success depends on two key dynamics: the adaptive termination of debate rounds and a moderate level of "tit for tat". Forcing debates to continue regardless of progress leads to diminishing returns, whereas an adaptive break strategy ends the debate once enough divergent thinking has taken place, which improves overall performance. Likewise, some disagreement fosters innovation, but excessive discord without convergence can impede the discovery of correct solutions, which points to the need for a balance between divergence and agreement.
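The adaptive break idea can be illustrated with a small helper that stops consuming debate rounds as soon as a stopping check yields a verdict. This is a hedged sketch, not the paper's procedure: the helper name `debate_with_adaptive_break`, the representation of a round as a pair of answers, and the "stop when both sides agree" rule are simplifying assumptions made for illustration.

```python
from itertools import islice
from typing import Callable, Iterable, List, Optional, Tuple

# Assumption: one debate round is summarized as (answer_a, answer_b).
Round = Tuple[str, str]


def debate_with_adaptive_break(
    rounds: Iterable[Round],
    check: Callable[[List[Round]], Optional[str]],
    max_rounds: int,
) -> Tuple[Optional[str], int]:
    """Consume at most max_rounds rounds; stop early once check() returns a verdict.

    Returns (verdict, rounds_used); verdict is None if no round produced one.
    """
    history: List[Round] = []
    for debate_round in islice(rounds, max_rounds):
        history.append(debate_round)
        verdict = check(history)
        if verdict is not None:
            return verdict, len(history)
    return None, len(history)


def agreement_check(history: List[Round]) -> Optional[str]:
    # Toy stopping rule: break as soon as both debaters give the same answer.
    answer_a, answer_b = history[-1]
    return answer_a if answer_a == answer_b else None


# The debaters converge in round 2, so round 3 is never consumed.
converging = [("10", "5"), ("5", "5"), ("7", "7")]
verdict, rounds_used = debate_with_adaptive_break(converging, agreement_check, 3)

# The debaters never converge, so the round cap is the only stopping condition.
stubborn = [("10", "5"), ("10", "5"), ("10", "5")]
no_verdict, cap_used = debate_with_adaptive_break(stubborn, agreement_check, 3)
```

The second call shows the failure mode the paragraph warns about: when the debaters never converge, only the cap on rounds ends the debate, and no answer emerges from the exchange itself.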

Moreover, the analysis reveals a bias in the judge's decisions, suggesting that an LLM may not be an impartial adjudicator when the debating agents are backed by different models. This points to a complex dynamic in using LLMs for task-oriented dispute resolution and to an area ripe for future exploration: finding the right balance between diversity and homogeneity in multi-agent tasks.

The practical implications of MAD are worth noting, especially in automated translation and complex problem-solving, where broadening the solution space through agent-induced dissent can improve the accuracy and reliability of solutions. On the theoretical side, MAD is an intriguing attempt to emulate the disagreement-driven innovation seen in human deliberation.

Future work may refine this multi-agent approach, for example by incorporating more nuanced models of disagreement or by studying the dynamics of larger groups of agents. The applicability of MAD across different architectures and its integration into real-world AI systems also remain promising directions for research. Overall, the paper contributes a thought-provoking empirical framework that illustrates how structured dissent can strengthen the reasoning of LLMs.