- The paper presents MPCG, a framework that simulates the dynamic evolution of misinformation through multi-round, persona-conditioned claim generation.

- Its evaluation shows high agreement between human and model assessments of role-playing consistency, while its experiments highlight gaps in current detection systems.

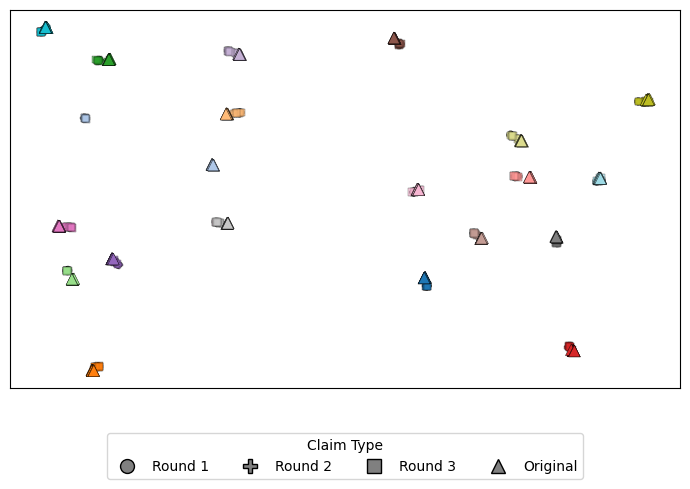

- Experiments reveal that ideological conditioning leads to semantic drift in claims, significantly reducing classifier reliability.

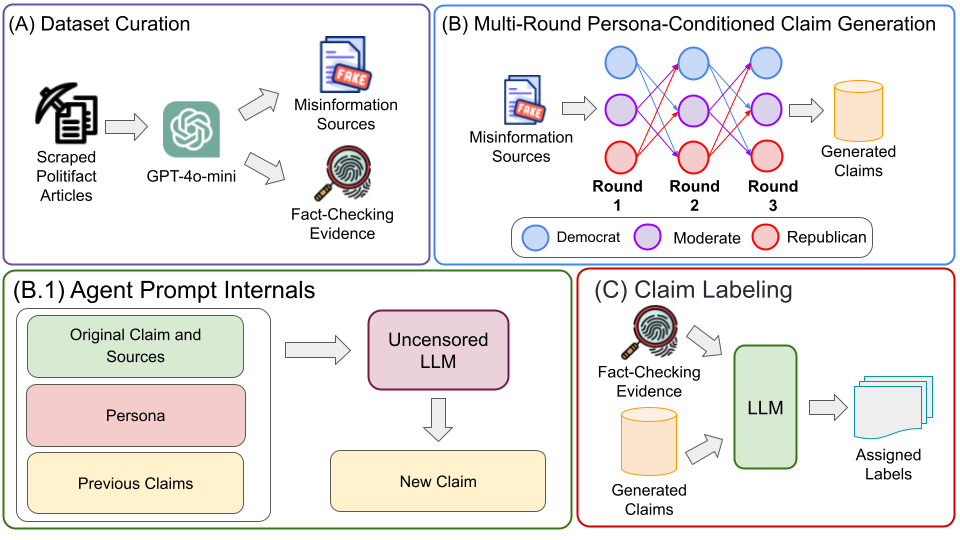

The paper introduces MPCG, a framework that simulates the dynamic evolution of misinformation through multi-round, persona-conditioned claim generation. Using an LLM, it models how misinformation adapts across different ideological perspectives. Extensive experiments evaluate the framework and highlight its potential to stress-test current misinformation detection approaches.

Framework Overview

Multi-Round Persona-Conditioned Generation

MPCG iteratively generates claims under different ideological personas, such as Democrat, Republican, and Moderate, to model the stylistic and semantic evolution of misinformation. The process has three main components:

- Dataset Curation: Utilizes articles from PolitiFact to gather misinformation sources and corresponding fact-checking evidence.

- Claim Generation: Employs an LLM to produce persona-aligned claims across three rounds of generation, each conditioned on the outputs from previous rounds and the original claim.

- Claim Labeling: Assigns veracity labels (True, Half-True, False) to generated claims for evaluation in downstream tasks.
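The three-round generation step can be sketched as a loop over personas and rounds. This is a minimal sketch, not the paper's implementation: the `llm` callable, the `build_prompt` template wording, and the choice to condition each round on the same persona's earlier outputs plus the original claim are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List

# Persona names taken from the text; prompts below are hypothetical, not the paper's.
PERSONAS = ["Democrat", "Republican", "Moderate"]


@dataclass
class GeneratedClaim:
    persona: str
    round_idx: int  # 1-based generation round
    text: str


def build_prompt(persona: str, original_claim: str, history: List[str]) -> str:
    """Hypothetical prompt: persona, original claim, then this persona's earlier rounds."""
    lines = [f"You are a {persona} voter.", f"Original claim: {original_claim}"]
    for i, prev in enumerate(history, start=1):
        lines.append(f"Round {i} claim: {prev}")
    lines.append("Rewrite the claim from your perspective.")
    return "\n".join(lines)


def run_mpcg(
    original_claim: str,
    llm: Callable[[str], str],
    personas: List[str] = PERSONAS,
    num_rounds: int = 3,
) -> List[GeneratedClaim]:
    """Generate persona-conditioned claims over several rounds.

    Assumption: each round sees the original claim plus the same persona's
    previous outputs (one way to realize "conditioned on previous rounds").
    """
    results: List[GeneratedClaim] = []
    for persona in personas:
        history: List[str] = []
        for r in range(1, num_rounds + 1):
            text = llm(build_prompt(persona, original_claim, history))
            history.append(text)
            results.append(GeneratedClaim(persona, r, text))
    return results
```

Passing any function that maps a prompt string to a completion string (for example, a thin wrapper around an LLM client) as `llm` yields 3 personas × 3 rounds = 9 claims per original claim.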

Figure 1 illustrates the framework's architecture, showing its components and how they interact.

Figure 1: Overview of the MPCG Framework

Persona and Role Curation

MPCG defines personas based on political typologies, capturing distinct ideological perspectives within the American political spectrum. These roles are embedded into the claim generation process to simulate how misinformation might be framed differently by various ideological agents. This role-playing aspect is crucial for reproducing belief-driven claim adaptations.
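One way to embed a persona into generation is as a system message in a chat-style request. In this hypothetical sketch, the `Persona` class, the `persona_messages` helper, the message format, and the placeholder descriptions are all assumptions; the paper's actual typology definitions and prompts are not reproduced here.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Persona:
    name: str
    description: str  # placeholder; the real typology summary would go here


# Placeholder descriptions for illustration only (not the paper's typologies).
PERSONAS: Dict[str, Persona] = {
    name: Persona(name, f"Placeholder typology summary for the {name} persona.")
    for name in ["Democrat", "Republican", "Moderate"]
}


def persona_messages(persona: Persona, claim: str) -> List[Dict[str, str]]:
    """Build an assumed chat-style request: the persona goes in the system role."""
    return [
        {
            "role": "system",
            "content": f"Adopt the viewpoint of a {persona.name}. {persona.description}",
        },
        {"role": "user", "content": f"Reframe this claim in your own voice: {claim}"},
    ]
```

Keeping the persona in the system role separates "who is speaking" from "what claim to rewrite", which makes it easy to swap personas while holding the claim fixed.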

Experimental Evaluation

The paper evaluates MPCG along several dimensions: agreement between human and model judgments, cognitive effort, emotional and moral framing, and classifier robustness.

Human and Model Evaluation

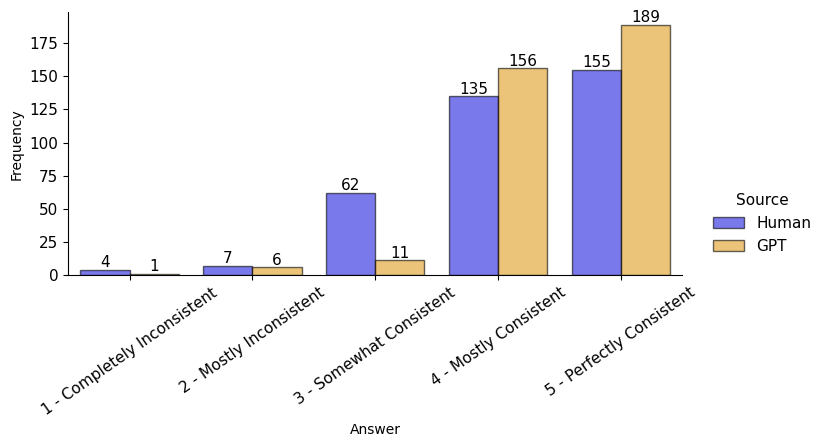

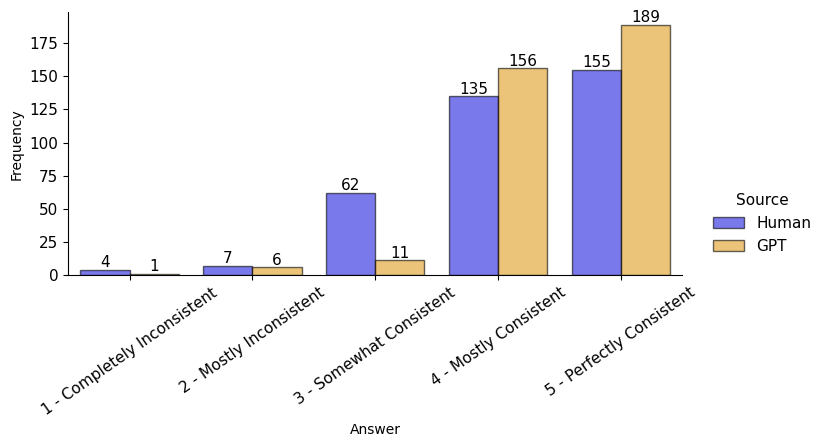

Using both human annotators and automated assessments from GPT-4o-mini, the paper evaluates the quality of generated misinformation claims on role-playing consistency, content relevance, fluency, and factuality. GPT-4o-mini aligned closely with human raters on most dimensions, though the two diverged more on fluency.
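Agreement between human annotators and GPT-4o-mini can be quantified per dimension. The sketch below assumes both raters give numeric scores on a shared scale and reports Pearson correlation plus mean absolute difference; the summary does not say which agreement statistic the paper uses, so the metric choice and the `agreement_report` helper are illustrative assumptions.

```python
from math import sqrt
from statistics import mean
from typing import Dict, List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return float("nan")  # undefined when one rater gives constant scores
    return cov / (sx * sy)


def agreement_report(
    human: Dict[str, List[float]], model: Dict[str, List[float]]
) -> Dict[str, Tuple[float, float]]:
    """Per dimension: (Pearson r, mean absolute difference) between human and model."""
    report = {}
    for dim, h in human.items():
        m = model[dim]
        mad = mean(abs(a - b) for a, b in zip(h, m))
        report[dim] = (pearson(h, m), mad)
    return report
```

Reporting both numbers matters: a model can track human rankings perfectly (r near 1) while scoring systematically higher or lower, which only the absolute difference reveals.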

Figure 2: Role-Playing Consistency scores between human annotators and GPT-4o-mini

Claim Characteristics

The analysis of cognitive and emotional metrics shows that generated claims exhibit higher syntactic complexity and more persona-aligned moral framing than the original claims. Sentiment analysis shows that claims generated under the Democrat and Republican personas tend to carry more negative sentiment, while the Moderate persona produces more neutral claims.
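The summary does not specify how syntactic complexity is measured. As a crude stand-in, average words per sentence can be compared between original and generated claims; the regex-based sentence splitter and the `compare_complexity` helper here are hypothetical simplifications, not the paper's metric.

```python
import re
from statistics import mean
from typing import Dict, List

_SENT_SPLIT = re.compile(r"(?<=[.!?])\s+")  # split after sentence-ending punctuation
_WORD = re.compile(r"[A-Za-z']+")


def words_per_sentence(text: str) -> float:
    """Crude syntactic-complexity proxy: average number of words per sentence."""
    sentences = [s for s in _SENT_SPLIT.split(text.strip()) if s]
    if not sentences:
        return 0.0
    return mean(len(_WORD.findall(s)) for s in sentences)


def compare_complexity(original: List[str], generated: List[str]) -> Dict[str, float]:
    """Mean proxy score for original vs. generated claims, and their difference."""
    orig = mean(words_per_sentence(t) for t in original)
    gen = mean(words_per_sentence(t) for t in generated)
    return {"original": orig, "generated": gen, "delta": gen - orig}
```

A positive `delta` would point in the same direction as the paper's finding, but a real analysis would use a parser-based measure rather than sentence length alone.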

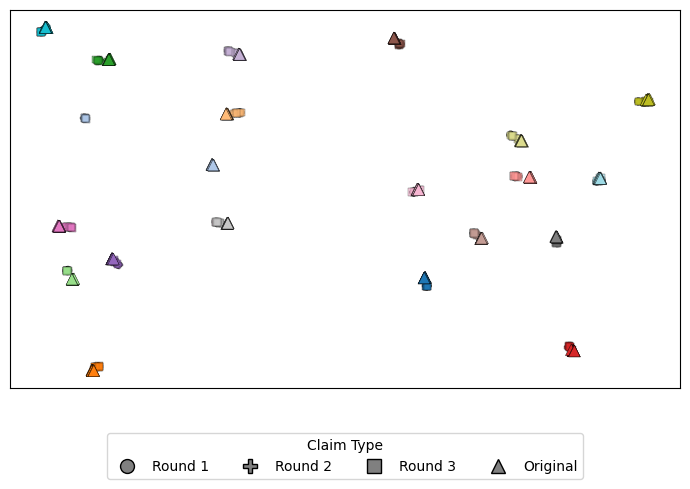

Figure 3: Semantic clusters of Round 1 generated claims (Circle), Round 2 generated claims (Plus), Round 3 generated claims (Square), and the original claims (Triangle). Each color represents a group of claims associated with the same original PolitiFact URL.
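Semantic drift of the kind visualized in Figure 3 can be summarized numerically as the distance between each round's claim embeddings and the original claim's embedding. This sketch assumes the embeddings were already produced by some sentence encoder (not specified here) and uses mean cosine distance per round; `drift_by_round` is a hypothetical helper.

```python
from math import sqrt
from statistics import mean
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = sqrt(sum(a * a for a in u))
    nv = sqrt(sum(b * b for b in v))
    if nu == 0 or nv == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (nu * nv)


def drift_by_round(
    original: Sequence[float], rounds: Dict[int, List[Sequence[float]]]
) -> Dict[int, float]:
    """Mean cosine distance (1 - similarity) from the original claim, keyed by round."""
    return {
        r: mean(1.0 - cosine(original, e) for e in embs)
        for r, embs in sorted(rounds.items())
    }
```

If drift accumulates across rounds, the per-round means should grow from Round 1 to Round 3, mirroring clusters that spread farther from the original-claim triangles in the figure.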

Classification Robustness

The paper demonstrates that commonly used misinformation classifiers suffer significant performance degradation when exposed to transformed claim variants from MPCG. The semantic drift introduced by persona conditioning leads to substantial drops in macro-F1 scores, highlighting vulnerabilities in current detection systems.
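The robustness comparison rests on macro-F1, the unweighted mean of per-class F1 over the three veracity labels. A minimal pure-Python version, and the resulting drop between original and transformed claims, might look like this; the `robustness_drop` helper is hypothetical, and the classifiers themselves are out of scope.

```python
from typing import List

LABELS = ["True", "Half-True", "False"]  # veracity labels named in the text


def macro_f1(y_true: List[str], y_pred: List[str], labels: List[str] = LABELS) -> float:
    """Unweighted mean of per-class F1; a class with no support and no predictions scores 0."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    f1s = []
    for lab in labels:
        tp = sum(t == lab and p == lab for t, p in zip(y_true, y_pred))
        fp = sum(t != lab and p == lab for t, p in zip(y_true, y_pred))
        fn = sum(t == lab and p != lab for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn  # F1 = 2TP / (2TP + FP + FN)
        f1s.append(2 * tp / denom if denom else 0.0)
    return sum(f1s) / len(f1s)


def robustness_drop(
    y_true_orig: List[str], y_pred_orig: List[str],
    y_true_var: List[str], y_pred_var: List[str],
) -> float:
    """Macro-F1 on original claims minus macro-F1 on variants (positive = degradation)."""
    return macro_f1(y_true_orig, y_pred_orig) - macro_f1(y_true_var, y_pred_var)
```

Because macro-F1 weights each label equally, a classifier that collapses to predicting a single label on the transformed variants is penalized heavily even if that label is common.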

Conclusion and Future Directions

The paper concludes that MPCG effectively models misinformation evolution, providing a robust framework for testing the limits of existing automated fact-checking systems. Future work could explore multilingual and multimodal extensions to broaden the framework's applicability. Developing standardized metrics for evaluating generation quality could also reduce reliance on subjective assessments.

In summary, MPCG offers a novel approach for simulating the ideological adaptation of misinformation, emphasizing the need for more resilient detection methods to address the dynamic nature of misinformation evolution.