- The paper introduces KAAR, a novel approach that explicitly augments LLMs with structured hierarchical priors to enhance performance on ARC tasks.

- The paper shows that integrating explicit domain priors, such as objectness and goal-directedness, yields a 5–7% absolute accuracy boost and up to 64% relative gain.

- The paper highlights key failure modes like overfitting and abstraction mismatches, indicating the need for improved abstraction selection in future AGI research.

Knowledge-Augmented LLM Program Synthesis on ARC

Motivation and Benchmark Overview

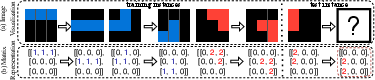

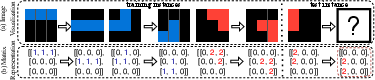

The Abstraction and Reasoning Corpus (ARC) is designed to evaluate generalization and abstract reasoning, which are central to the cognitive capabilities underlying human intelligence. Whereas most advances in LLMs have focused on learning from vast training data in domains such as NLP and computer vision, ARC grounds its tasks in core knowledge priors: objectness, geometry/topology, numbers/counting, and goal-directedness. Each ARC task presents a small set of input-output image pairs from which the model must infer abstract transformations and apply them to unseen test instances, all represented as 2D matrices (Figure 1).

Figure 1: An illustrative ARC problem showing training pairs, test input, and their 2D matrix encodings; the test output is highlighted in red.

ARC as Program Synthesis: Solver Taxonomy

ARC tasks are formally cast as a program synthesis problem. Given P=⟨Ir,It⟩ (training and test instances), the goal is to synthesize a mapping s such that s(ii)=io for each (ii,io)∈It, based solely on Ir and the test inputs. Solution synthesis is explored through nine solver variants spanning:

- Generation strategies: direct generation, repeated sampling, and iterative refinement.

- Solution modalities: standalone code synthesis and planning-aided code synthesis.

- Solution representations: textual plans and Python code.

Repeated sampling with planning-aided code generation (RSPC) exhibits the strongest performance in generalization and test-set accuracy across multiple LLMs, notably GPT-o3-mini, Gemini-2.0, QwQ-32B, and DeepSeek-R1-70B.

Knowledge Augmentation: The KAAR Framework

Despite the capabilities of advanced LLMs, implicit encoding of ARC’s core priors is insufficient for robust generalization. The Knowledge Augmentation for Abstract Reasoning (KAAR) paradigm explicitly introduces structured priors to LLMs using a lightweight ontology, organized hierarchically by dependencies:

- Objectness abstraction: pixel grouping according to adjacency, color, and additional non-standard abstractions.

- Geometry and topology: extraction of attributes (size, color, shape, symmetry, bounding box, holes) and relations (spatial alignment, congruence, inclusion, touching).

- Numbers and counting: statistical summaries over component attributes.

- Goal-directedness: categorization and schema extraction for task-specific transformations such as movement, extension, recolor, flipping, copying.

KAAR applies priors incrementally, invoking the backbone RSPC solver after each augmentation stage, thereby reducing interference and enhancing stage-wise reasoning. Priors are generated either through image-processing via GPAR or prompted stepwise for goal-directedness.

Experimental Analysis

Comprehensive evaluation on the 400-task ARC public test set compares all nine solvers and KAAR’s impact across multiple LLMs. Key findings:

- RSPC performance: achieves 30–32% test accuracy on GPT-o3-mini, double that of Gemini-2.0, with significantly higher scores than open-source models.

- KAAR improvement: bolsters accuracy by an absolute 5–7% and a relative gain up to 64% (DeepSeek-R1-70B), with comparable generalization to non-augmented RSPC.

- Coverage: KAAR increases cross-LLM task alignment, allowing weaker models to solve more tasks solved by stronger models.

- Task class breakdown: KAAR excels especially on movement and recolor transformations. Extensions involving pixel-level manipulations remain challenging, as component-level abstractions are less effective there.

- Scalability: Performance degrades with growing image size, highlighting the need for improved LLM attention mechanisms and representations.

- Iteration analysis: Progressive augmentation with KAAR yields consistent accuracy gains, especially following introduction of goal-directedness priors.

Solution and Generalization Failure Modes

Manual inspection reveals RSPC/LSPC solutions are often overfit to training examples unless guided by explicit abstractions—e.g., shifting pixel grids without recognition of objectness, or misapplying 4-connected instead of 8-connected components in structurally ambiguous inputs. Even with KAAR, failures arise if the first abstraction that solves Ir is not suitable for It, indicating a challenge in abstraction selection and necessitating validation mechanisms.

The generalization gap between Ir and It persists due to solutions that encode low-level procedural steps, lack of abstraction induction, and insufficient high-level reasoning. Planning-aided pipelines reduce but do not eliminate this gap.

Theoretical and Practical Implications

KAAR demonstrates that explicit, hierarchical augmentation of domain priors substantially improves reasoning-oriented LLM performance on ARC. This approach enables transferable, domain-general methodologies for hierarchical reasoning in robotics, image captioning, and VQA. The reliance on pipeline-based program synthesis, with incremental feedback and structured abstraction prompting, indicates that scalable AGI solutions will require precise, modular knowledge grounding and validation—not only end-to-end learning.

However, KAAR’s prompting incurs significant computational overhead due to increased token utilization, especially in multi-stage or unsuccessful abstraction attempts. The inability of LLMs to autonomously infer correct abstractions from data remains a key bottleneck. Realizing more human-like generalization and abstraction may require integration of fine-tuning, hybrid search mechanisms, or self-improving synthesis.

Conclusion

This study rigorously evaluates generic and knowledge-augmented LLM solvers on the ARC benchmark, establishing that explicit hierarchical augmentation via KAAR yields substantial absolute and relative performance improvements while remaining model-agnostic. Nevertheless, ARC continues to expose fundamental limitations in reasoning and generalization in state-of-the-art LLMs, providing a robust avenue for future progress in AGI and cognitive AI system design.