The paper, titled "LLMs and the Abstraction and Reasoning Corpus: Successes, Failures, and the Importance of Object-based Representations," investigates how GPT models, GPT-4 in particular, perform on the Abstraction and Reasoning Corpus (ARC), a benchmark designed to assess abstract reasoning without relying on task-specific prior knowledge. ARC tasks must be solved from only a few input-output examples and require basic interpretative skills such as object identification, goal-oriented reasoning, counting, and elementary geometry.

Main Contributions and Observations:

- Evaluation on ARC Tasks:

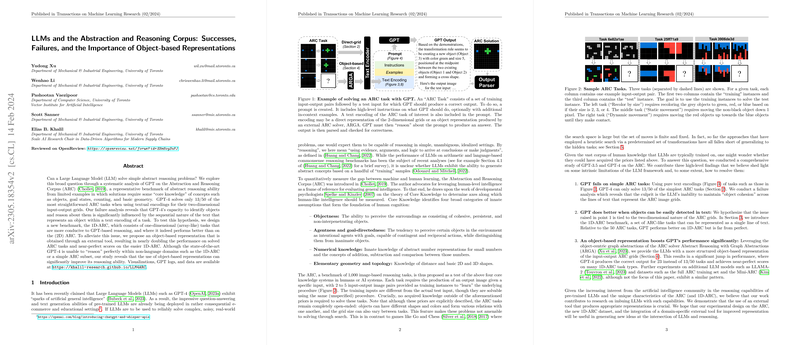

- GPT-4 solves only 13 of 50 of the easier ARC tasks when the two-dimensional grids are given as textual encodings. Finding a suitable textual encoding is itself a significant challenge: an object that spans several rows is split across sequential lines of text, and GPT-4 struggles to maintain this "object cohesion."

- Introduction of 1D-ARC:

- To isolate the effect of the textual representation, the authors propose a simpler one-dimensional version of ARC, termed 1D-ARC. In one dimension, each object occupies a contiguous run of text, which makes it easier to process. GPT-4 performs better on 1D-ARC but still does not solve all of the tasks.

- Proposal of Object-based Representations:

- The main improvement comes from switching to object-based representations. When the ARGA tool is used to perform object abstraction externally, performance on ARC nearly doubles: the number of solved tasks rises from 13 to 23 out of 50, and GPT-4 reaches near-perfect scores on many 1D-ARC variants.
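The contrast between a raw textual grid encoding and an object-based description can be sketched as follows. This is a minimal illustration, not ARGA's algorithm or API: the function names (`grid_to_text`, `extract_objects`, `objects_to_text`), the choice of 4-connectivity, the use of `0` as background, and the output wording are all assumptions made for the example.

```python
from collections import deque

def grid_to_text(grid):
    """Serialize a 2D grid row by row, one text line per row."""
    return "\n".join(" ".join(str(cell) for cell in row) for row in grid)

def extract_objects(grid, background=0):
    """Group same-colored, 4-connected non-background cells into objects.

    Assumption: this simple flood fill stands in for an external
    object-abstraction tool; it is not how ARGA itself works.
    """
    rows, cols = len(grid), len(grid[0])
    seen = set()
    objects = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == background or (r, c) in seen:
                continue
            color = grid[r][c]
            queue = deque([(r, c)])
            seen.add((r, c))
            cells = []
            while queue:
                y, x = queue.popleft()
                cells.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and (ny, nx) not in seen and grid[ny][nx] == color:
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            objects.append({"color": color, "cells": sorted(cells)})
    return objects

def objects_to_text(objects):
    """Describe each object on its own line (hypothetical format)."""
    lines = []
    for i, obj in enumerate(objects, start=1):
        coords = ", ".join(f"({y},{x})" for y, x in obj["cells"])
        lines.append(
            f"Object {i}: color={obj['color']}, size={len(obj['cells'])}, cells=[{coords}]"
        )
    return "\n".join(lines)

# A vertical bar (color 3) and a shorter vertical bar (color 5).
grid = [
    [0, 3, 0, 0],
    [0, 3, 0, 5],
    [0, 3, 0, 5],
]

print(grid_to_text(grid))
print(objects_to_text(extract_objects(grid)))
```

In the row-by-row encoding, each bar is scattered over three separate lines; in the object description, every object appears as a single line, which is the kind of cohesion the object-based representation is meant to restore.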

Findings and Further Analysis:

- Object Cohesion:

The paper analyzes how non-sequential textual depictions of objects impede GPT's ability to solve tasks, showing that GPT has limited capacity to keep an object cohesive when its cells are not adjacent in the text. Whether objects are laid out vertically or horizontally in the text encoding affects solvability, which the authors substantiate through additional experiments.
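Why orientation matters can be seen by looking at where an object's cells land in a row-major serialization. The sketch below is illustrative only; the helper names `flat_positions` and `is_contiguous` and the 5-column grid are assumptions, and separators between cells are ignored for simplicity.

```python
def flat_positions(cells, width):
    """Index of each (row, col) cell in a row-major serialization of the grid."""
    return sorted(r * width + c for r, c in cells)

def is_contiguous(positions):
    """True if the positions form one unbroken run in the serialized text."""
    return all(b - a == 1 for a, b in zip(positions, positions[1:]))

width = 5
horizontal_bar = [(2, 1), (2, 2), (2, 3)]  # three cells in one row
vertical_bar = [(1, 2), (2, 2), (3, 2)]    # three cells in one column

h = flat_positions(horizontal_bar, width)  # [11, 12, 13]
v = flat_positions(vertical_bar, width)    # [7, 12, 17]

print(h, is_contiguous(h))
print(v, is_contiguous(v))
```

The horizontal bar stays in one contiguous run, while the cells of the vertical bar are separated by a full row width of unrelated tokens.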

- Regression Analysis:

Logistic regression is used to identify task features that correlate with GPT's successes and failures. Notably, the number of black pixels in the test task correlates negatively with solvability, highlighting the challenges in handling object abstraction.
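The kind of analysis described here can be sketched with a one-feature logistic regression fitted by gradient descent. Everything below is an assumption for illustration: the feature (a hypothetical fraction of black pixels per task), the eight synthetic data points, and the training settings are invented and are not the paper's data, features, or fitted coefficients.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.5, steps=2000):
    """Fit p(solved) = sigmoid(w * x + b) by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # gradient of the log loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Synthetic toy data (NOT from the paper): x is a hypothetical fraction of
# black pixels in a task, y is 1 if the task was solved and 0 otherwise.
black_fraction = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
solved = [1, 1, 1, 0, 1, 0, 0, 0]

w, b = fit_logistic(black_fraction, solved)
print(f"weight={w:.2f}, bias={b:.2f}")
print("negative association" if w < 0 else "non-negative association")
```

A negative fitted weight means the predicted probability of solving a task falls as the feature grows, which is how a negative correlation with solvability would show up in such a model.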

- Chain-of-Thought (CoT) and Few-shot Learning:

The paper compares the efficacy of few-shot learning strategies, including in-context examples with and without CoT reasoning steps, underscoring the potential advantage of more structured representations via ARGA in enhancing performance.
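The difference between the two few-shot settings can be sketched as a prompt builder that optionally inserts a reasoning step into each in-context example. The prompt layout, the field names (`input`, `reasoning`, `output`), and the toy 1D sequences are hypothetical; the paper's actual prompt format is not reproduced here.

```python
def format_example(example, with_cot):
    """Render one in-context example, optionally with its reasoning step."""
    parts = [f"Input: {example['input']}"]
    if with_cot:
        parts.append(f"Reasoning: {example['reasoning']}")
    parts.append(f"Output: {example['output']}")
    return "\n".join(parts)

def build_prompt(examples, test_input, with_cot=False):
    """Assemble a few-shot prompt; with CoT, the model is cued to reason first."""
    blocks = [format_example(e, with_cot) for e in examples]
    cue = "Reasoning:" if with_cot else "Output:"
    blocks.append(f"Input: {test_input}\n{cue}")
    return "\n\n".join(blocks)

# Hypothetical 1D examples: a colored block moves one cell to the right.
examples = [
    {
        "input": "0 3 3 0 0",
        "reasoning": "The block of two 3s moves one cell to the right.",
        "output": "0 0 3 3 0",
    },
    {
        "input": "4 4 4 0 0",
        "reasoning": "The block of three 4s moves one cell to the right.",
        "output": "0 4 4 4 0",
    },
]

plain_prompt = build_prompt(examples, "0 2 2 0 0")
cot_prompt = build_prompt(examples, "0 2 2 0 0", with_cot=True)
print(plain_prompt)
print("-----")
print(cot_prompt)
```

The same builder could take object-based descriptions instead of raw sequences as the `input` and `output` fields, so the representation and the prompting strategy can be varied independently.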

Conclusion and Future Work:

The research concludes that successful ARC task-solving by LLMs requires not only sophisticated reasoning but also effective abstraction of objects within task representations. Given GPT-4's difficulty maintaining cohesive textual representations, external tools providing domain-specific abstractions substantially mitigate this limitation. Future directions may include testing GPT-4's multimodal capacity for interpreting visual input or employing a language of transformations to tackle ARC-type problems.

Overall, the paper shows that augmenting text-only models with external structured representations can notably improve reasoning and problem-solving outcomes, particularly in abstract reasoning settings.