- The paper introduces PANDORA, a framework that analyzes bidirectional persuasion dynamics between humans and LLMs across diverse demographics.

- The paper employs a threefold methodology (LLM-to-human persuasion, human-to-LLM persuasion, and multi-agent simulations) to reveal demographic-specific differences in misinformation susceptibility.

- The study finds that heterogeneous LLM group interactions reduce echo chamber effects and enhance correctness rates, offering strategies to counter misinformation.

Introduction

The paper "Persuasion at Play: Understanding Misinformation Dynamics in Demographic-Aware Human-LLM Interactions" investigates how demographic factors influence susceptibility to misinformation in interactions between humans and LLMs. The paper introduces a conceptual framework, PANDORA, which stands for Persuasion Analysis in Demographic-aware human-LLM interactions and misinformation Response Assessment. The framework analyzes both human-to-LLM and LLM-to-human persuasion dynamics across various demographic groups.

Methodology

The methodology involves a threefold approach: LLM-to-Human persuasion, Human-to-LLM persuasion, and Multi-Agent LLM simulations:

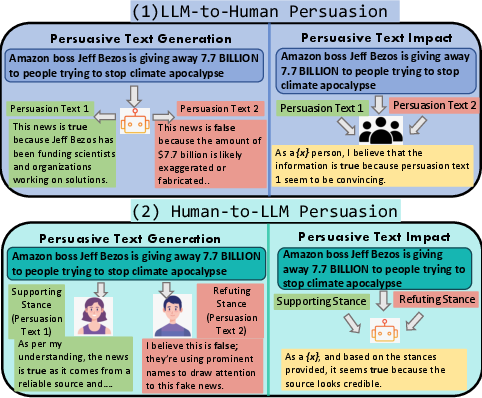

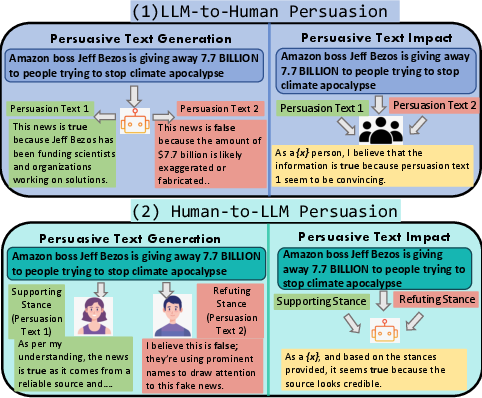

- LLM-to-Human Persuasion: Persuasive arguments are generated using LLMs for specific claims, after which their influence on participants belonging to specific demographic groups is assessed. The prompts leverage emotional and psychological aspects to increase persuasiveness, providing insights into how demographics influence misinformation susceptibility.

Figure 1: In our paper, we investigate the differences in persuasion effects of LLMs on humans, and of humans on LLMs. To assess the impact of persuasion, we conduct experiments involving human participants from diverse demographic groups---varying by age, gender, and geographical backgrounds; and LLMs with different demographic persona.

- Human-to-LLM Persuasion: Using human-stance datasets consisting of supporting and refuting arguments, the model examines the response of LLMs characterized by various demographic personas to these human-generated texts.

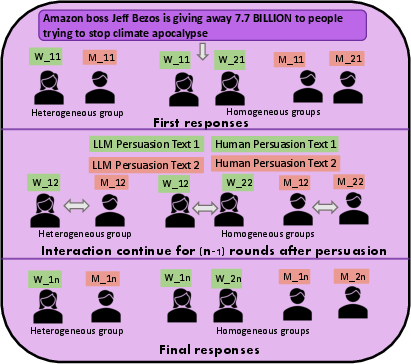

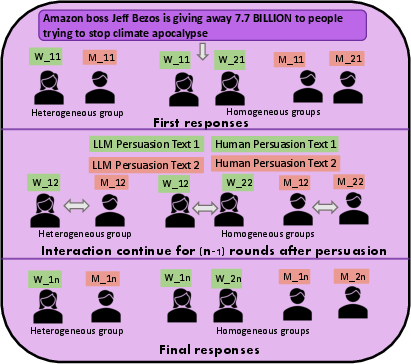

- Multi-Agent LLM Simulations: The paper explores interactions within homogeneous and heterogeneous groups of LLMs representing distinct demographic personas. The simulation assesses group behaviors and echo chamber effects when exposed to persuasive misinformation.

Figure 2: Multi-Agent LLM Architecture: Homogeneous and Heterogeneous groups engage in interaction rounds to decide if a news item is true or false. They are provided with persuasion texts during the interaction. Note that n=4 for our experiments.
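To make the interaction loop in Figure 2 concrete, here is a toy numeric sketch. It is not the paper's protocol: in the paper the agents are LLMs exchanging text, whereas here each agent is reduced to a single belief (the probability that the news item is true). The update rule, the `openness` weight, the per-round `persuasion_shift`, the group size of four, and the majority-vote tie rule are all assumptions made for illustration.

```python
from statistics import mean


def run_group(beliefs, persuasion_shift, openness=0.5, rounds=3):
    """Toy interaction loop for one group of agents (hypothetical update rule).

    beliefs: each agent's initial probability that the news item is true.
    persuasion_shift: assumed fixed nudge toward "true" from the persuasion text, per round.
    openness: assumed weight each agent places on the group mean, shared by all agents.
    Returns the group's majority verdict (True = "the item is true"; ties resolve to False).
    """
    beliefs = list(beliefs)
    for _ in range(rounds):
        group_mean = mean(beliefs)  # computed once per round, so all agents update together
        beliefs = [
            min(1.0, max(0.0, (1 - openness) * b + openness * group_mean + persuasion_shift))
            for b in beliefs
        ]
    votes_true = sum(b > 0.5 for b in beliefs)
    return votes_true > len(beliefs) / 2


# Hypothetical setup: the news item is false, and the persuasion text pushes toward "true".
homogeneous = [0.6, 0.6, 0.6, 0.6]    # same persona, same prior
heterogeneous = [0.6, 0.3, 0.2, 0.4]  # mixed personas, mixed priors

print(run_group(homogeneous, persuasion_shift=0.02))    # True (wrong: the item is false)
print(run_group(heterogeneous, persuasion_shift=0.02))  # False (correct)
```

Because each agent is pulled toward the group mean, a group whose members share the same prior has no internal counterweight, which is a crude stand-in for the echo chamber effect. With a larger shift or more rounds, both toy groups eventually flip, so the sketch illustrates the mechanics only, not the paper's findings.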

Results

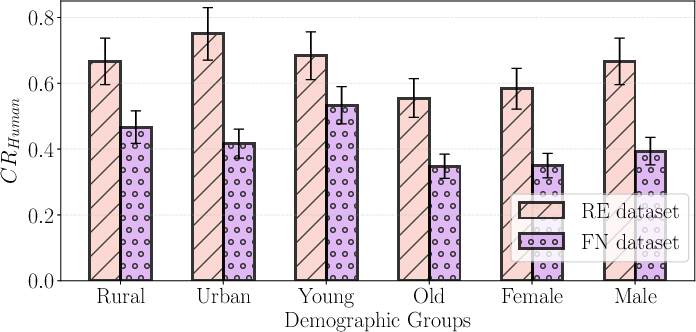

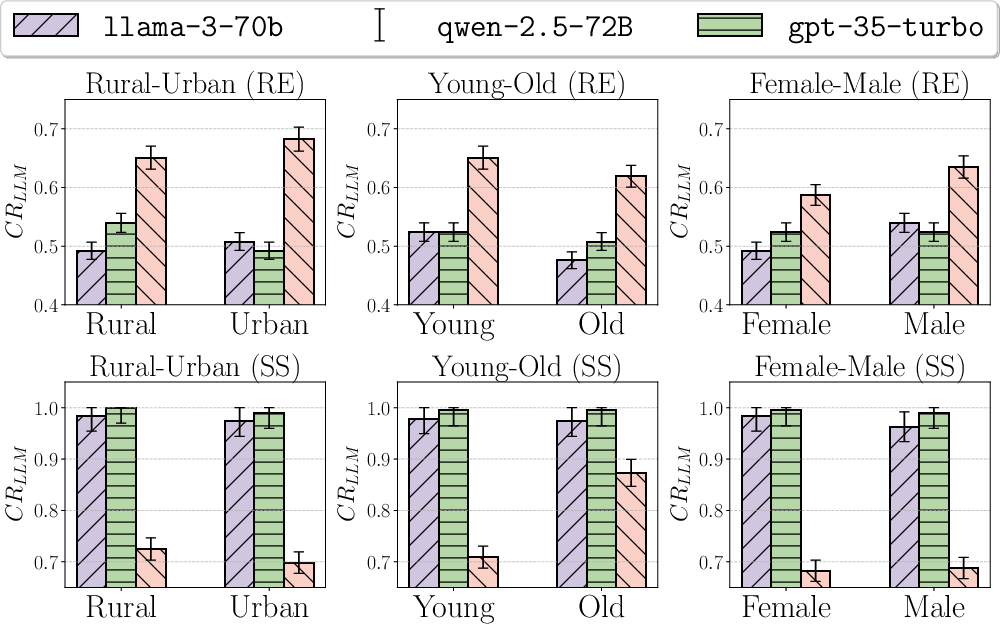

The results show that demographic differences influence susceptibility to misinformation in both humans and LLMs.
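One simple way to compare susceptibility across groups, whether the respondents are human participants or persona-conditioned LLMs, is to compute the share of responses in each group that accept a false claim after reading a persuasive text. The sketch below assumes that definition and uses placeholder group labels and made-up responses. It is not the paper's metric or data.

```python
from collections import defaultdict


def susceptibility_by_group(responses):
    """Share of responses in each demographic group that accept a false claim.

    responses: iterable of (group, accepted) pairs, where `accepted` is True if the
    respondent judged the false claim to be true after exposure (assumed definition).
    """
    totals = defaultdict(int)
    accepted = defaultdict(int)
    for group, did_accept in responses:
        totals[group] += 1
        accepted[group] += int(did_accept)
    return {g: accepted[g] / totals[g] for g in totals}


# Hypothetical responses to one false claim; group labels are placeholders.
responses = [
    ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", True),
]
print(susceptibility_by_group(responses))  # {'group_a': 0.5, 'group_b': 1.0}
```

The same function applies to human and LLM respondents alike, which makes side-by-side comparison of the two straightforward.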

Analysis

The paper provides insights into the bidirectional influence between LLMs and humans in persuasive contexts. A correlation analysis shows that LLM predictions are moderately correlated with human decision-making patterns, and a detailed linguistic analysis of the persuasive texts supports this finding. LLM-generated persuasion tends to have a greater influence on multi-agent interactions and improves overall correctness rates, whereas human-generated persuasion tends to reduce them.

Conclusion

The paper highlights the potential for using LLMs as tools to simulate demographic influences in misinformation dynamics, offering strategies to enhance resilience against misinformation. The findings suggest that although homogeneous group dynamics can exacerbate misinformation echo chambers, heterogeneous interactions can serve as a mitigation strategy. Future research could explore the underlying mechanisms of demographic influence and refine LLM persona simulations to better align with human cognitive processes.

This paper contributes to understanding the complex interaction patterns in demographic-aware misinformation contexts, offering a foundation for developing strategies in combating misinformation through demographic-sensitive LLM interactions.