Assessing LLMs' Ability to Detect Persuasive Arguments

Introduction

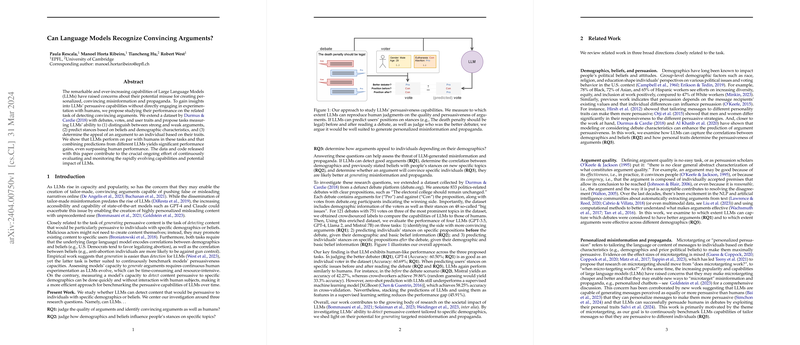

The advent of LLMs has opened new possibilities for creating and disseminating customized persuasive content, raising significant concerns about the mass production of misinformation and propaganda. In response, this paper investigates LLMs' ability to recognize convincing arguments, predict stance shifts from demographic and belief attributes, and gauge how appealing an argument is to a particular individual. The research extends a dataset from Durmus and Cardie (2018) and is organized around three research questions that probe how well LLMs can judge persuasive content and what this implies for the generation of targeted misinformation.

Methodology

The paper uses an enriched dataset from debate.org in which 833 political debates are annotated with propositions, arguments, votes, and voter demographics and beliefs. It evaluates the performance of four LLMs (GPT-3.5, GPT-4, Llama-2, and Mistral 7B) on tasks designed to measure the models' ability to discern argument quality (RQ1), predict pre-debate stances from demographics and beliefs (RQ2), and anticipate post-debate stance shifts (RQ3). The analysis compares LLMs against human benchmarks and examines whether combining predictions from different models can surpass human performance.

Findings

- Argument Quality Recognition (RQ1): GPT-4 exhibited a significant lead in detecting more convincing arguments, achieving an accuracy comparable to individual human judgment within the dataset.

- Stance Prediction (RQ2 and RQ3): In predicting stances both before and after exposure to debate content, LLMs performed on par with humans. In particular, the 'stacked' model, which combined predictions from different LLMs, showed a notable improvement, suggesting the value of multi-model approaches for enhancing prediction accuracy.

- Comparison with Supervised Learning Models: Despite LLMs' impressive performance, supervised machine learning models like XGBoost still achieved superior results in predicting stances from demographic and belief indicators, indicating room for improvement in LLMs' predictive abilities.
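
To make the idea of a 'stacked' model more concrete, here is a minimal sketch of stacking: a small meta-learner is trained on the binary stance predictions of several base models and learns how much to trust each one. The choice of logistic regression as the meta-learner, the three hypothetical base models, and all the toy data below are assumptions made for illustration; this summary does not specify how the paper's stacked model was built.

```python
import math

def train_stacker(base_preds, labels, lr=0.5, epochs=500):
    """Fit a logistic-regression meta-learner with batch gradient descent.

    base_preds: list of rows, one 0/1 prediction per base model in each row.
    labels:     list of 0/1 ground-truth stances, one per row.
    Returns (weights, bias).
    """
    n_models = len(base_preds[0])
    n_rows = len(labels)
    weights = [0.0] * n_models
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_models
        grad_b = 0.0
        for row, y in zip(base_preds, labels):
            z = bias + sum(w * x for w, x in zip(weights, row))
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of stance 1
            err = p - y
            for j, x in enumerate(row):
                grad_w[j] += err * x
            grad_b += err
        weights = [w - lr * g / n_rows for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n_rows
    return weights, bias

def stacked_predict(row, weights, bias):
    """Combine one row of base-model predictions into a single 0/1 stance."""
    z = bias + sum(w * x for w, x in zip(weights, row))
    return 1 if z >= 0 else 0

# Toy data (hypothetical): each row holds the predictions of three
# made-up base models; each model is wrong on two of the eight rows.
train_preds = [
    [1, 1, 1], [1, 1, 0], [1, 0, 1], [0, 1, 1],  # true stance 1
    [0, 0, 0], [0, 0, 1], [0, 1, 0], [1, 0, 0],  # true stance 0
]
train_labels = [1, 1, 1, 1, 0, 0, 0, 0]

weights, bias = train_stacker(train_preds, train_labels)

# Separate rows, not used for fitting, to check the combined prediction.
held_out_preds = [[1, 0, 1], [0, 1, 1], [1, 1, 1], [0, 1, 0], [0, 0, 0], [1, 0, 0]]
held_out_labels = [1, 1, 1, 0, 0, 0]

stacked = [stacked_predict(row, weights, bias) for row in held_out_preds]
accuracy = sum(int(p == y) for p, y in zip(stacked, held_out_labels)) / len(held_out_labels)
print("stacked accuracy on held-out rows:", accuracy)
```

In this toy setup the base models make errors on different rows, which is the situation where combining them helps most. With real model outputs, the meta-learner should be fit and evaluated on disjoint sets of debates so that the stacked accuracy is not inflated.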

Implications

This paper characterizes how LLMs process and evaluate persuasive content, showing that these models can match human-level performance in specific contexts. The findings underscore the realistic potential for LLMs to contribute to the generation of targeted misinformation, especially as their predictive accuracy improves through techniques like model stacking. However, the comparison with traditional supervised learning models highlights the current limitations of LLMs in capturing how beliefs and demographics shape persuasion.

Future Directions

Given the dynamic nature of LLM development, continuous evaluation of their capabilities in recognizing and generating persuasive content is imperative. Future research should explore more granular demographic and psychographic variables, potentially offering richer insights into the models' abilities to tailor persuasive content more effectively. Moreover, expanding the scope of analysis to include non-English language debates could provide a more global perspective on LLMs' roles in international misinformation campaigns.

Conclusion

While LLMs demonstrate promising abilities in detecting convincing arguments and predicting stance shifts, their performance on these tasks remains at roughly human level. The potential for these models to enable sophisticated, targeted misinformation campaigns calls for ongoing scrutiny and rigorous evaluation of both their capabilities and limitations. As LLMs continue to evolve, their role in shaping public discourse and influence strategies will warrant closer examination and proactive management.