- The paper introduces GATE, a model that mimics hippocampal working memory via persistent EC3 activity and re-entrant gating.

- It employs a multi-lamellar dorsoventral architecture that processes sensory inputs into abstract representations for adaptive learning.

- The study demonstrates accelerated learning and generalization in simulated tasks, linking neural dynamics with intelligent system design.

Introduction

This paper introduces a computational model named Generalization and Associative Temporary Encoding (GATE), inspired by how the hippocampal formation (HF) supports working memory (WM) and generalization. GATE uses a multi-lamellar architecture along the dorsoventral axis to simulate the information processing observed in the HF. Focusing on the HF's re-entrant loop, the model aims to reproduce the HF's adaptive learning through persistent activity and gating mechanisms. The paper proposes GATE as a framework for building brain-inspired intelligent systems with memory dynamics as flexible as those of the HF.

Results

EC3 Persistent Activity

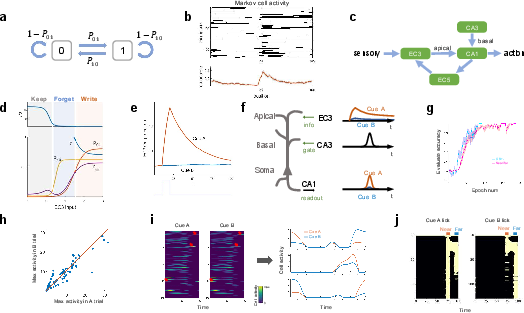

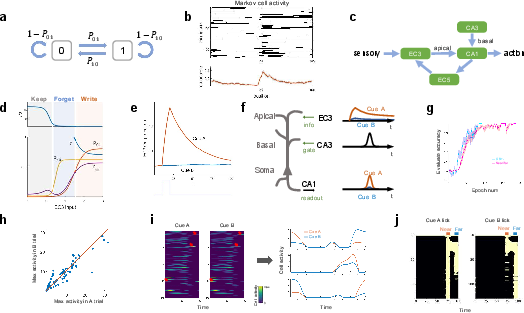

The model uses the persistent activity observed in layer 3 of the entorhinal cortex (EC3) as the basis for its WM functions. Because EC3 can maintain information, GATE models it at the population level as a memory whose state changes with its input, with mechanisms to write, retain, and forget information. EC3 activity encodes external cues, and its state transitions resemble those of a Markov chain, which lets the model update task-relevant information as a task unfolds.
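The write, retain, and forget behavior described above can be illustrated with a small Markov-chain sketch. This is not the paper's implementation: the binary units, the function names `step_ec3` and `simulate`, and the probabilities `p_write` and `p_retain` are all assumptions chosen for illustration.

```python
import random

def step_ec3(state, cue, p_write=0.9, p_retain=0.95, rng=random):
    """Advance a population of binary EC3-like units by one time step.

    state: list of 0/1 activity values, one per unit.
    cue:   list of 0/1 external inputs, one per unit.
    p_write and p_retain are hypothetical parameters, not values from the paper.
    """
    new_state = []
    for active, driven in zip(state, cue):
        if active:
            # Retain with probability p_retain, otherwise forget.
            new_state.append(1 if rng.random() < p_retain else 0)
        elif driven:
            # Write: an external cue can switch an inactive unit on.
            new_state.append(1 if rng.random() < p_write else 0)
        else:
            # No cue and nothing stored: the unit stays inactive.
            new_state.append(0)
    return new_state

def simulate(n_units=200, n_steps=30, cue_steps=3, seed=0, **kwargs):
    """Present a cue for the first cue_steps steps, then let activity persist.

    Returns the fraction of active units at each time step.
    """
    rng = random.Random(seed)
    state = [0] * n_units
    trace = []
    for t in range(n_steps):
        cue = [1 if t < cue_steps else 0] * n_units
        state = step_ec3(state, cue, rng=rng, **kwargs)
        trace.append(sum(state) / n_units)
    return trace

if __name__ == "__main__":
    for t, frac in enumerate(simulate()):
        print(t, round(frac, 3))
```

Each unit here is a two-state Markov chain: its next state depends only on its current state and the current cue. After the cue ends, the active fraction can only fall, and a higher `p_retain` makes it fall more slowly, which is one simple way to picture population-level persistence.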

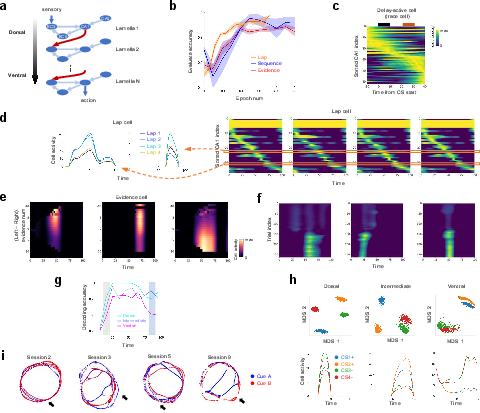

Figure 1: Single-lamellar model learns to maintain information.

Re-entrant Loop Architecture

GATE introduces a re-entrant loop architecture composed of EC3, CA1, CA3, and EC5. This loop lets the model regulate its own information flow through gating. Specifically, CA1 selectively reads from EC3 when gated by CA3, while EC5 integrates information and in turn modulates EC3. These interactions underpin the model's ability to form complex cognitive maps that align with biological observations across WM tasks such as the CS+ and Near/Far tasks.
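One pass around the loop can be sketched as follows. This is a minimal illustration, not the paper's equations: the multiplicative CA3 gate, the leaky EC5 sum, the additive EC5 feedback onto EC3, and every function name and constant below are assumptions.

```python
def ca1_readout(ec3, ca3_gate):
    """CA1 passes EC3 activity only where the CA3 gate is open (elementwise product)."""
    return [e * g for e, g in zip(ec3, ca3_gate)]

def ec5_integrate(ec5, ca1, leak=0.5):
    """EC5 keeps a leaky running sum of CA1 output (leak is a hypothetical constant)."""
    return [leak * h + c for h, c in zip(ec5, ca1)]

def ec3_update(ec3, ec5, sensory, retain=0.9, feedback=0.1):
    """EC3 retains part of its activity and adds sensory input plus EC5 feedback.

    The result is clipped to [0, 1]. retain and feedback are hypothetical constants.
    """
    return [
        min(1.0, max(0.0, retain * e + s + feedback * h))
        for e, h, s in zip(ec3, ec5, sensory)
    ]

def loop_step(ec3, ec5, sensory, ca3_gate):
    """One pass: EC3 -> CA1 (gated by CA3) -> EC5 -> back to EC3."""
    ca1 = ca1_readout(ec3, ca3_gate)
    ec5 = ec5_integrate(ec5, ca1)
    ec3 = ec3_update(ec3, ec5, sensory)
    return ec3, ec5, ca1

if __name__ == "__main__":
    # Two units hold information; the CA3 gate opens only for the first one.
    ec3, ec5, ca1 = loop_step([1.0, 1.0], [0.0, 0.0], [0.0, 0.0], [1, 0])
    print("CA1:", ca1)
    print("EC5:", ec5)
    print("EC3:", ec3)
```

In this sketch the gated unit is read out by CA1, accumulates in EC5, and is reinforced in EC3 by the feedback term, while the ungated unit is never read out and simply decays. That captures the idea of selective, self-regulated maintenance in a few lines.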

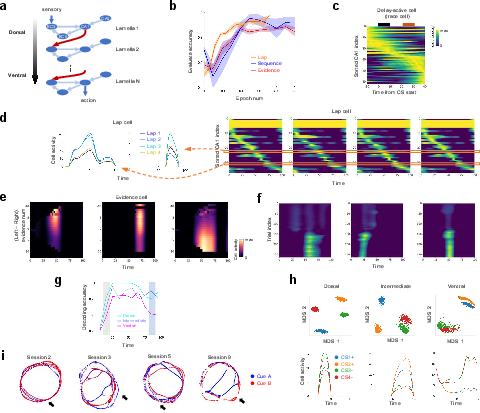

Dorsoventral Axis and Multi-lamellar Architecture

To handle complex tasks that require integrating externally and internally driven information, GATE employs a multi-lamellar structure along the dorsoventral axis. In this structure, dorsal lamellae process sensory inputs while ventral lamellae transform these inputs into abstract representations. The model solves a range of WM tasks, and the representations it develops parallel experimentally observed hippocampal cell types, including splitter cells, lap cells, evidence cells, and trace cells.
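The dorsal-to-ventral flow can be pictured with a toy stack of lamellae. This is a sketch under strong assumptions rather than the paper's architecture: each lamella is reduced to a single leaky memory, the ventral lamella is assumed to retain activity longer than the dorsal one, and `lamella_step`, `run_stack`, `retains`, and `coupling` are hypothetical names.

```python
def lamella_step(memory, drive, retain):
    """One leaky-memory update for a single lamella, capped at 1.0."""
    return min(1.0, retain * memory + drive)

def run_stack(sensory_stream, retains=(0.5, 0.95), coupling=0.2):
    """Chain lamellae from dorsal (index 0) to ventral (last index).

    The dorsal lamella is driven by the sensory stream; each further
    lamella is driven by the activity of the lamella before it.
    retains and coupling are hypothetical constants.
    """
    memories = [0.0] * len(retains)
    history = []
    for s in sensory_stream:
        drive = s
        for i, retain in enumerate(retains):
            memories[i] = lamella_step(memories[i], drive, retain)
            # The next lamella sees a scaled copy of this one's activity.
            drive = coupling * memories[i]
        history.append(tuple(memories))
    return history

if __name__ == "__main__":
    # A brief sensory pulse followed by silence.
    for dorsal, ventral in run_stack([1.0, 0.0, 0.0, 0.0]):
        print(round(dorsal, 3), round(ventral, 3))
```

With these settings the dorsal value tracks the sensory pulse and fades quickly, while the ventral value builds up a slower trace of it. This is one simple way a downstream lamella could carry information that is no longer present in the input.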

Figure 2: Multi-lamellar model learns complex working memory tasks.

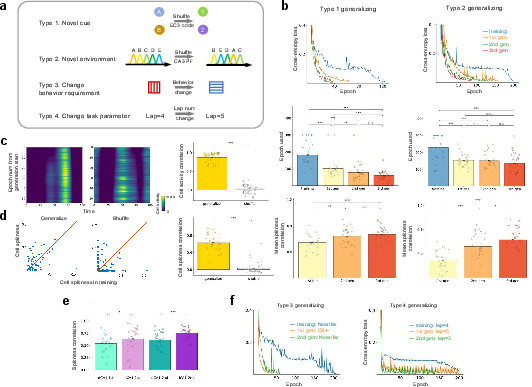

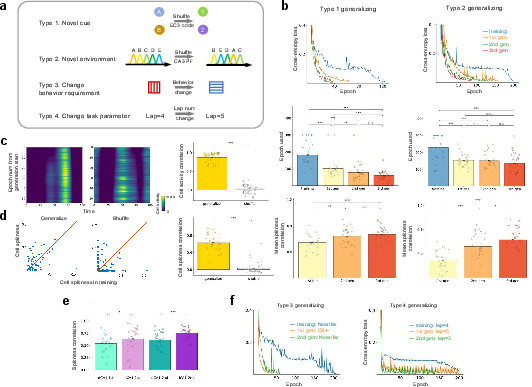

Learning and Generalization Capabilities

GATE learns faster in new environments and after task modifications while preserving previously learned representations. Across several generalization paradigms, learning speed increases with each novel setting, indicating that the model reuses abstract representations inherited from past experience. This behavior closely mirrors the adaptability of the HF in rodents.

Figure 3: Working memory enables generalization.

Discussion

The GATE model simulates WM and generalization functions rooted in hippocampal dynamics, offering a plausible framework that bridges neural representations and cognitive processes. By combining EC3 persistent activity, CA3-gated CA1 readout, and EC5 integration, the model recreates essential neural mechanisms involved in memory processing and task adaptation. GATE also offers experimentally testable predictions, such as probing EC3 neurons that maintain information and decoding task stages from EC5 activity.

GATE's alignment with biological properties is evident in both its single-neuron representations and its population-level encoding, offering insights into how the HF supports rapid learning and flexible adaptation. Although areas such as lifelong learning and episodic memory remain unaddressed, GATE provides a brain-inspired framework for advancing AI models in cognitive science.

Conclusion

GATE offers an account of HF-driven cognitive function that integrates working memory and generalization mechanisms. The model lays the groundwork for intelligent systems inspired by the HF's anatomy and dynamics, helping to connect biological insights with advances in artificial intelligence.