- The paper introduces a Dynamic Gated Neuron (DGN) model that integrates biologically inspired dynamic conductance for adaptive noise suppression in spiking neural networks.

- It demonstrates via theoretical SDE analysis and empirical benchmarks that the DGN model outperforms standard LIF neurons, achieving 99.10% top-1 accuracy on TIDIGITS.

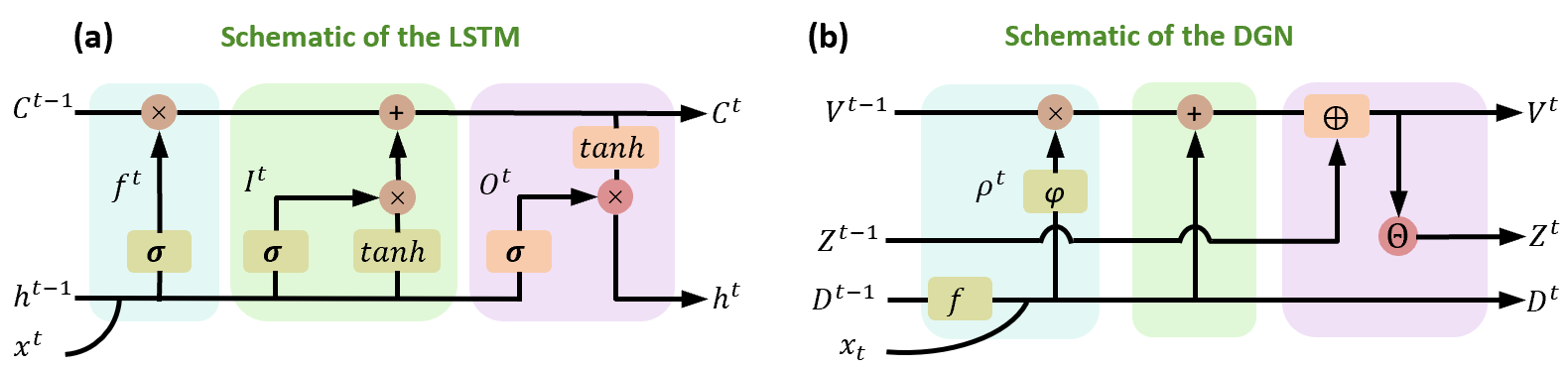

- The study reveals a structural homology between DGN and LSTM units, suggesting a unified framework for robust temporal information processing.

Brain-Inspired Dynamic Gating for Robust Spiking Neural Computation

Introduction

This paper introduces the Dynamic Gated Neuron (DGN), a spiking neuron model that incorporates biologically inspired dynamic conductance as a gating mechanism to modulate information flow and enhance robustness in Spiking Neural Networks (SNNs). The work addresses the limitations of conventional LIF neurons, which lack adaptive conductance mechanisms and thus fail to capture the dynamic noise rejection and temporal integration properties observed in biological neurons. The DGN model is theoretically analyzed and empirically validated on multiple temporal and neuromorphic benchmarks, demonstrating superior robustness and accuracy, particularly under noise and adversarial perturbations.

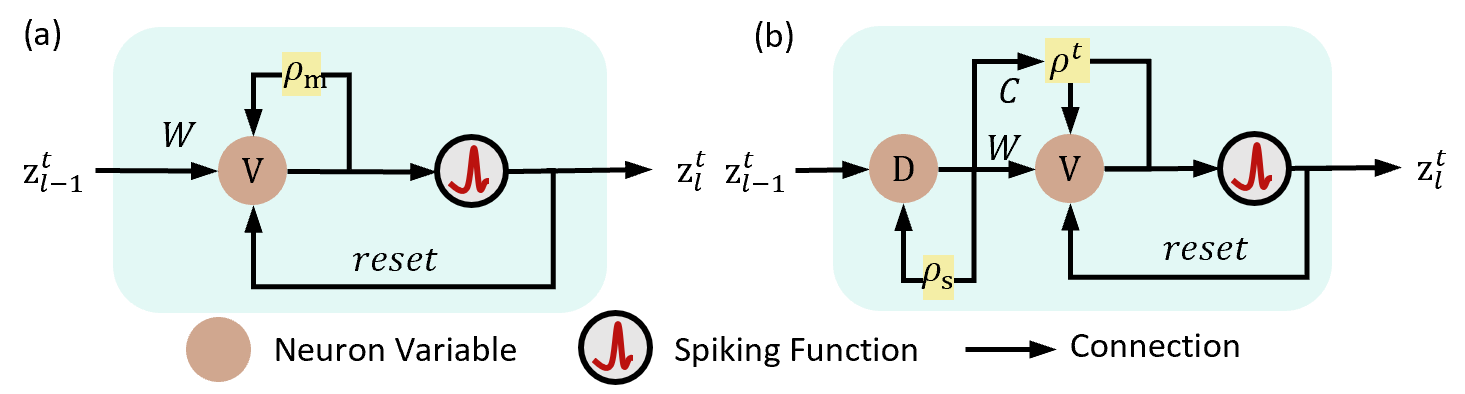

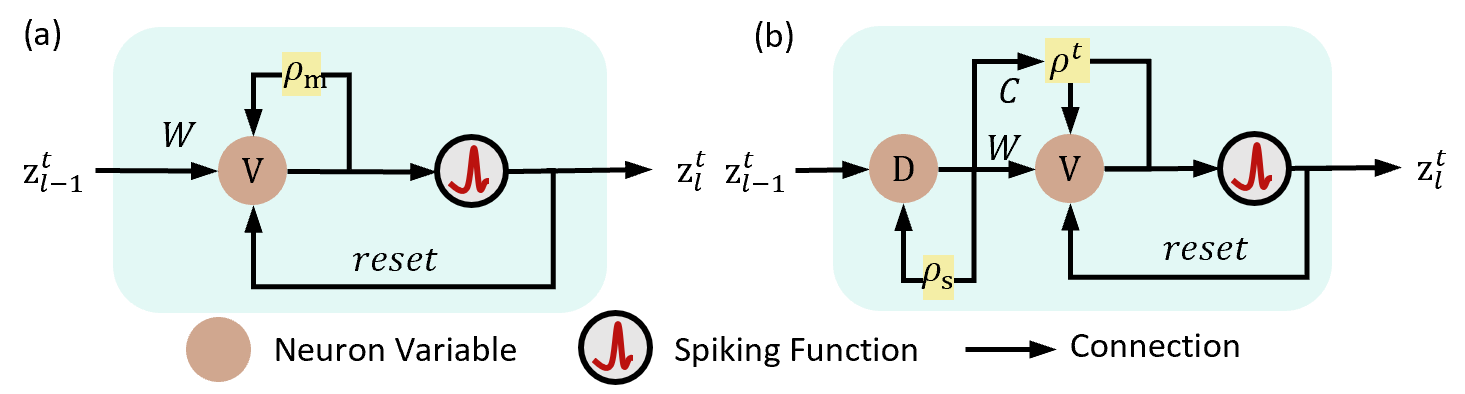

Dynamic Gated Neuron Model

The DGN model extends the standard LIF framework by introducing activity-dependent modulation of membrane conductance. The membrane potential dynamics are governed by both static leak conductance and dynamic, input-driven synaptic conductance, enabling adaptive control over information retention and noise suppression. The discrete-time update equations for DGN are:

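$$D_i^t = e^{-\Delta t/\tau_s}\, D_i^{t-1} + z_i^t$$

$$\rho^t = \varphi\!\left(1 - g_l \cdot \Delta t - \Delta t \sum_{i}^{N} C_i D_i^t\right)$$

$$V^t = \rho^t V^{t-1} + \Delta t \sum_{i}^{N} W_i D_i^t - \vartheta z^{t-1}$$

$$z^t = \Theta\!\left(V^t - \vartheta\right)$$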

where $C_i$ and $W_i$ are trainable parameters, $D_i^t$ is the filtered presynaptic input, $\rho^t$ is the adaptive decay coefficient, $g_l$ is the static leak conductance, and $\vartheta$ is the firing threshold. This dual-pathway architecture allows the neuron to selectively filter inputs and dynamically adjust its memory retention, functionally analogous to gating operations in LSTM units.
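The update equations above can be sketched as a single-neuron step in plain Python. This is a minimal illustration rather than the authors' implementation: the function name `dgn_step`, the default constants, and the choice of a clip to [0, 1] for φ are assumptions, since the summary does not define φ or give parameter values.

```python
import math

def dgn_step(D, V, z_prev, spikes_in, C, W, *, dt=1.0, tau_s=5.0, g_l=0.1, theta=1.0):
    """One discrete-time DGN update for a single neuron with N synapses.

    D         : list of filtered presynaptic traces D_i^{t-1}
    V         : membrane potential V^{t-1}
    z_prev    : this neuron's previous output spike z^{t-1} (0.0 or 1.0)
    spikes_in : presynaptic spikes z_i^t (0 or 1 per synapse)
    C, W      : conductance and current weights (trainable in the paper)
    """
    # Synaptic filter: D_i^t = exp(-dt / tau_s) * D_i^{t-1} + z_i^t
    decay = math.exp(-dt / tau_s)
    D = [decay * d + s for d, s in zip(D, spikes_in)]

    # Adaptive decay: rho^t = phi(1 - g_l*dt - dt * sum_i C_i D_i^t).
    # ASSUMPTION: phi clips its argument to [0, 1]; the source leaves phi unspecified.
    g_dyn = sum(c * d for c, d in zip(C, D))
    rho = min(1.0, max(0.0, 1.0 - g_l * dt - dt * g_dyn))

    # Membrane update with a subtractive reset driven by the previous spike.
    V = rho * V + dt * sum(w * d for w, d in zip(W, D)) - theta * z_prev

    # Heaviside spike generation (the value at exactly V == theta is a convention).
    z = 1.0 if V >= theta else 0.0
    return D, V, z, rho

# Drive a two-synapse neuron with a short, hand-written spike pattern.
D, V, z = [0.0, 0.0], 0.0, 0.0
for spikes in ([1, 0], [1, 1], [0, 1], [0, 0]):
    D, V, z, rho = dgn_step(D, V, z, spikes, C=[0.2, 0.0], W=[0.5, 0.5])
```

Because `rho` shrinks as the conductance-weighted input grows, strong input shortens the neuron's memory, which is the gating behaviour the text describes.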

Figure 1: Schematic of the neuron models. (a) Standard LIF model. (b) DGN model with dynamic conductance gating.

Theoretical Analysis of Robustness

The paper provides a rigorous stochastic differential equation (SDE) analysis, demonstrating that DGN neurons possess enhanced stochastic stability compared to LIF neurons. The steady-state voltage variance for DGN is:

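$$\langle V^2 \rangle_{\mathrm{DGN}} = \frac{\left[\sum_{i=1}^{N} \sigma_i \left(W_i - \frac{C_i}{G_0} \sum_{j=1}^{N} W_j \mu_j\right)\right]^2}{2 G_0}$$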

where $G_0 = g_l + \sum_i C_i \mu_i$ is the effective conductance. The adaptive leakage scaling and synaptic noise compensation mechanisms in DGN enable effective voltage stabilization under stochastic input perturbations, outperforming the static noise scaling of LIF neurons.
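To make the variance expression concrete, the sketch below evaluates it for given parameters. The function name `dgn_voltage_variance` and the numbers are illustrative assumptions. The summary does not define μ_i and σ_i; the code simply takes them as inputs. Setting every C_i to zero removes the dynamic conductance term and is used here as a static-leak baseline; that baseline is our reading of the formula, not the paper's LIF expression.

```python
def dgn_voltage_variance(W, C, mu, sigma, g_l):
    """Evaluate the steady-state voltage variance formula for a DGN neuron."""
    # Effective conductance: G0 = g_l + sum_i C_i * mu_i
    G0 = g_l + sum(c * m for c, m in zip(C, mu))
    # Mean synaptic drive: sum_j W_j * mu_j
    drive = sum(w * m for w, m in zip(W, mu))
    # Bracketed term: sum_i sigma_i * (W_i - (C_i / G0) * drive)
    total = sum(s * (w - (c / G0) * drive) for s, w, c in zip(sigma, W, C))
    return total ** 2 / (2.0 * G0)

# Illustrative values only (not taken from the paper).
W, mu, sigma, g_l = [1.0, 2.0], [1.0, 1.0], [0.5, 0.5], 0.5
var_static = dgn_voltage_variance(W, [0.0, 0.0], mu, sigma, g_l)   # C_i = 0
var_dynamic = dgn_voltage_variance(W, [0.5, 0.5], mu, sigma, g_l)  # C_i > 0
```

With these values the dynamic case has both a larger G_0 and a smaller bracketed term, so `var_dynamic` comes out below `var_static`. Other parameter choices may behave differently.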

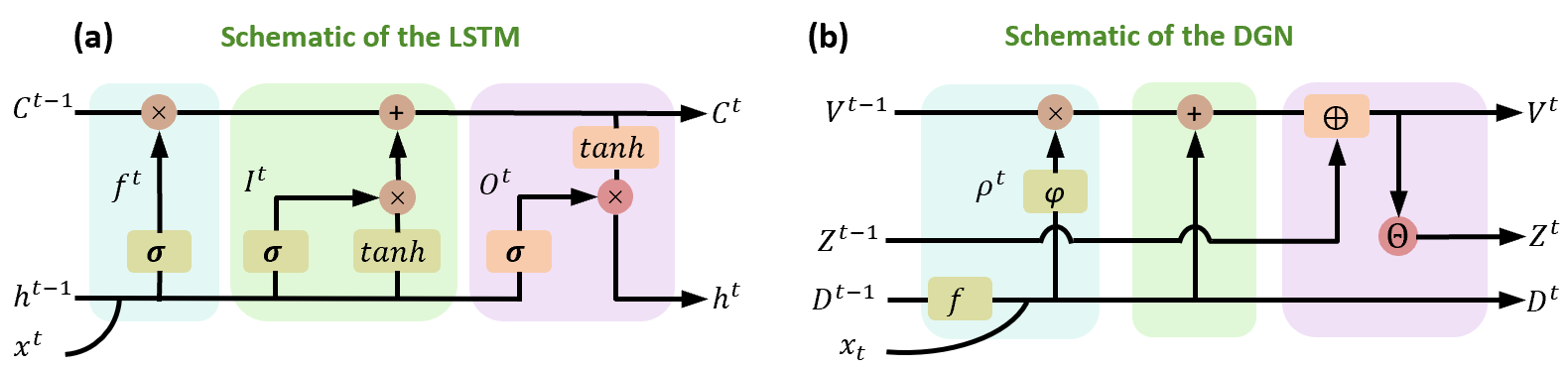

Structural Homology with LSTM

A key insight is the topological and functional homology between DGN and LSTM architectures. The adaptive decay coefficient in DGN mirrors the forget gate in LSTM, while the dynamic integration of presynaptic currents parallels LSTM's input gating. The spike reset mechanism in DGN is mathematically congruent with LSTM's cell state update, establishing a unified framework for temporal information processing across biological and artificial systems.
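Writing the two state updates side by side makes the claimed correspondence easier to see. The LSTM line is the standard cell-state update, with forget gate $f_t$, input gate $i_t$, and candidate state $\tilde{c}_t$. The pairing of terms in the last line is a reading of the paragraph above, not a derivation from the paper.

$$
\begin{aligned}
\text{LSTM:}\quad & c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
\text{DGN:}\quad & V^t = \rho^t V^{t-1} + \Delta t \sum_{i}^{N} W_i D_i^t - \vartheta z^{t-1} \\
\text{Pairing:}\quad & \rho^t \leftrightarrow f_t, \qquad \Delta t \sum_{i}^{N} W_i D_i^t \leftrightarrow i_t \odot \tilde{c}_t
\end{aligned}
$$

The reset term $-\vartheta z^{t-1}$ has no counterpart in the standard LSTM cell update as written here, so how it maps onto the LSTM is left as stated in the text.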

Figure 2: Schematic diagram of the model structure of LSTM and DGN, highlighting functional isomorphism in gating and memory operations.

Empirical Evaluation

DGN-based SNNs are evaluated on speech and neuromorphic datasets (Ti46Alpha, TIDIGITS, SHD, SSC) using both feedforward and recurrent architectures. The DGN model achieves:

- 99.10% top-1 accuracy on TIDIGITS (recurrent)

- 75.63% accuracy on SSC (recurrent)

- Consistently higher accuracy than LIF, ALIF, HeterLIF, and even LSTM in some configurations, despite using fewer neurons and simpler architectures.

Robustness to Noise and Adversarial Attacks

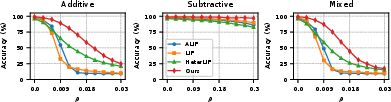

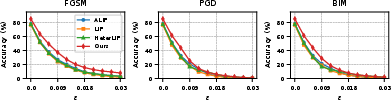

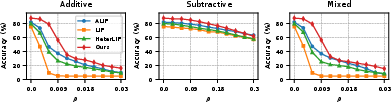

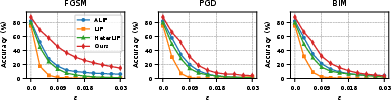

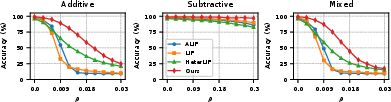

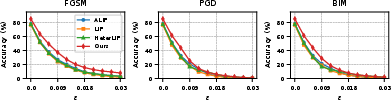

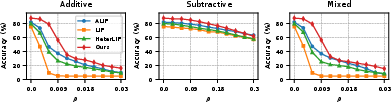

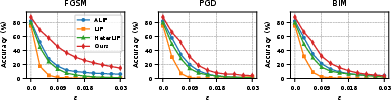

Robustness is assessed by testing models on previously unseen noise patterns and adversarial attacks (FGSM, PGD, BIM). DGN maintains high accuracy under additive, subtractive, and mixed noise, as well as gradient-based attacks, with minimal performance degradation compared to other neuron models.
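The summary does not say how the additive, subtractive, and mixed noise is generated. One plausible reading, sketched below, is that each time bin of a binary spike train is perturbed independently with probability p. The function name `perturb_spikes` and the exact flipping rule are assumptions for illustration and may differ from the paper's protocol.

```python
import random

def perturb_spikes(spikes, p, mode="mixed", rng=None):
    """Perturb a binary spike train; each bin is hit independently with probability p.

    mode="additive"    : a hit on an empty bin inserts a spike
    mode="subtractive" : a hit on a spike deletes it
    mode="mixed"       : a hit flips the bin either way
    """
    if mode not in ("additive", "subtractive", "mixed"):
        raise ValueError(f"unknown mode: {mode}")
    rng = rng or random.Random()
    out = []
    for s in spikes:
        hit = rng.random() < p
        if hit and s == 0 and mode in ("additive", "mixed"):
            out.append(1)   # spurious spike inserted
        elif hit and s == 1 and mode in ("subtractive", "mixed"):
            out.append(0)   # genuine spike dropped
        else:
            out.append(s)   # bin left unchanged
    return out

clean = [0, 1, 0, 0, 1, 1, 0, 0]
noisy = perturb_spikes(clean, p=0.2, mode="mixed", rng=random.Random(0))
```

Passing a seeded `random.Random` keeps the perturbation reproducible, which matters when comparing neuron models on the same noisy inputs.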

Figure 3: Performance of the model on TIDIGITS using a feedforward network under perturbations of different distribution probabilities p.

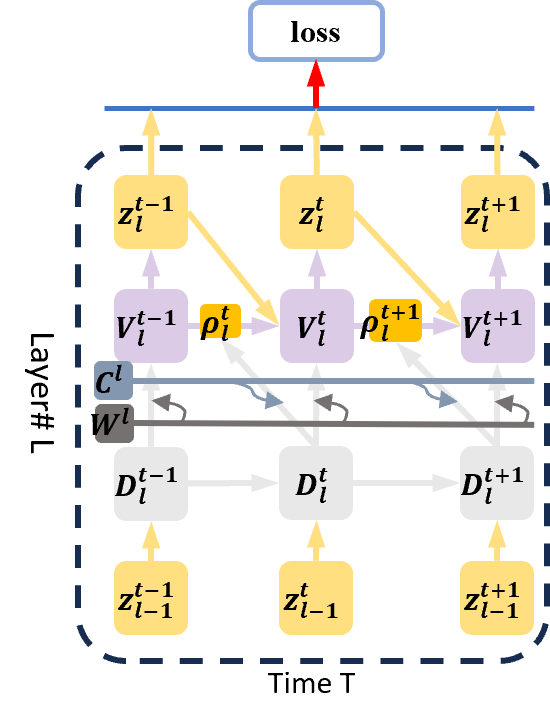

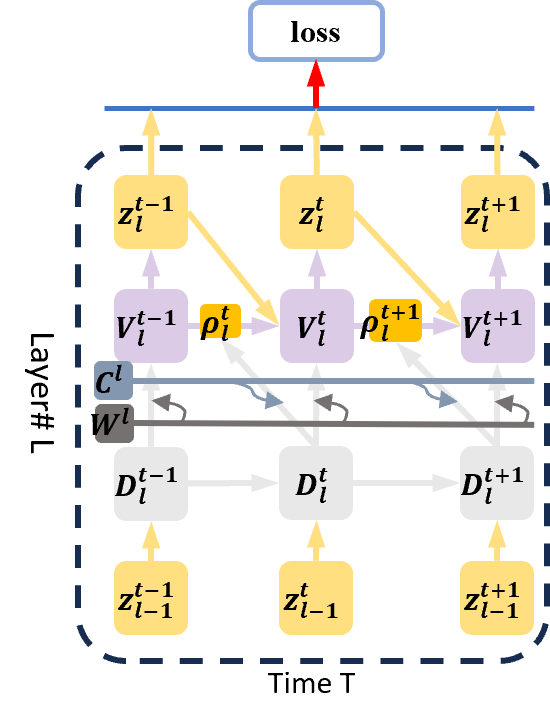

Figure 4: DGN unfolds over three time steps, illustrating temporal credit assignment for BPTT.

Figure 5: Performance of the model on TIDIGITS using a recurrent network under perturbations of different distribution probabilities p.

Figure 6: Performance of the model on SHD using a feedforward network under perturbations of different distribution probabilities p.

Figure 7: Performance of the model on SHD using a recurrent network under perturbations of different distribution probabilities p.

Ablation and Architectural Analysis

Ablation studies confirm that the dynamic gating component is essential for robust performance. Models with only static gating (leak conductance) exhibit inferior accuracy and noise resistance. The synergy between static and dynamic gating structures enables context-sensitive information filtering and adaptive noise suppression.

Implementation Considerations

- Training: DGN-SNNs are trained using BPTT with surrogate gradients, leveraging the unfolded computational graph for temporal credit assignment.

- Resource Requirements: DGN introduces minimal computational overhead compared to LIF, as the dynamic conductance update is efficiently implemented.

- Scalability: The model is compatible with standard SNN frameworks and can be deployed on neuromorphic hardware.

- Limitations: The paper focuses on robustness; further exploration of temporal dynamics and richer conductance models is warranted.
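The training item above relies on surrogate gradients because the Heaviside spike function has zero derivative almost everywhere. The sketch below shows the idea in plain Python: the forward pass uses the hard threshold, while the backward pass substitutes a smooth derivative. The fast-sigmoid form and the sharpness `beta` are assumptions; the summary does not say which surrogate the authors use.

```python
def spike_forward(v, theta=1.0):
    """Forward pass: Heaviside step on the membrane potential."""
    return 1.0 if v >= theta else 0.0

def spike_surrogate_grad(v, theta=1.0, beta=10.0):
    """Backward pass: derivative of a fast sigmoid, used in place of dz/dV.

    ASSUMPTION: surrogate form 1 / (1 + beta * |v - theta|)^2, one common choice.
    """
    return 1.0 / (1.0 + beta * abs(v - theta)) ** 2

# The surrogate peaks at the threshold and decays on both sides, so neurons
# close to firing receive the largest error signal during BPTT.
grads = [spike_surrogate_grad(v) for v in (0.0, 0.9, 1.0, 1.1, 2.0)]
```

In an autodiff framework the same pair would typically be wrapped as a custom function whose backward method returns the surrogate times the incoming gradient.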

Implications and Future Directions

The DGN model bridges the gap between biophysical realism and computational efficiency in SNNs, providing a biologically plausible mechanism for robust spike-based computation. The demonstrated structural homology with LSTM units suggests a unifying principle for gating in neural computation. Future research may explore hierarchical conductance-based gating, integration with advanced SNN training methods, and deployment in real-world neuromorphic systems.

Conclusion

The Dynamic Gated Neuron model introduces a biologically inspired, activity-dependent conductance mechanism that functions as an intrinsic gating structure in spiking neural networks. Theoretical and empirical analyses demonstrate that DGN enables robust, efficient, and adaptive computation, outperforming conventional neuron models under noise and adversarial perturbations. This work establishes dynamic conductance gating as a key principle for resilient spike-based computation and opens new avenues for biologically grounded SNN design.