- The paper provides an in-depth survey of multimodal alignment and fusion techniques by categorizing over 200 studies to outline design blueprints for future models.

- The methodology classifies architectures into two-tower, two-leg, and one-tower designs and analyzes fusion strategies from explicit alignment to deep, attention-based integration.

- The findings highlight challenges in computational efficiency, data noise, and feature misalignment while suggesting modular, adaptive architectures for scalable multimodal learning.

Multimodal Alignment and Fusion: Modeling, Methods, and Challenges

Introduction

The paper "Multimodal Alignment and Fusion: A Survey" (2411.17040) presents a systematic, technically comprehensive review of the landscape of multimodal learning with emphasis on alignment and fusion techniques. It analyzes the architectural paradigms, methodological advances, loss functions, dataset properties, and the practical challenges encountered when integrating heterogeneous data types—primarily images, text, audio, and video—within machine learning models. The authors categorize over 200 works to build a structured understanding and provide design blueprints for future model development.

Foundations: Modalities, Datasets, and Losses

Diverse data modalities yield complementary signals: text is discrete and semantic, vision is high-dimensional and spatial, and audio encodes sequential and emotional cues. The paper reviews major datasets (e.g., LAION-5B, MS-COCO, RS5M) and details their linguistic, semantic, and pairing characteristics, stressing the need for large-scale, high-quality cross-modal corpora to train vision-language models (VLMs) and multimodal large language models (MLLMs) effectively.

Specialized objectives and loss functions underlie multimodal model optimization. Contrastive and cross-entropy losses drive inter-modal alignment, while reconstruction and angular margin losses address robustness and fine-grained separation of class boundaries. Supervised contrastive variants are highlighted as crucial in noisy or label-sparse regimes.
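To make the role of a contrastive objective concrete, the following is a minimal NumPy sketch of a symmetric, InfoNCE-style loss over a batch of paired image and text embeddings. The function names, the temperature value, and the random toy data are assumptions chosen for illustration; the survey does not prescribe this exact formulation.

```python
import numpy as np

def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def symmetric_contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Row i of image_emb and row i of text_emb are assumed to be a matched pair.

    The temperature of 0.07 is an illustrative default, not a value from the survey.
    """
    # L2-normalize so the dot product is a cosine similarity.
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = image_emb @ text_emb.T / temperature  # shape: (batch, batch)
    idx = np.arange(logits.shape[0])
    # Cross-entropy with the matched pair as the target, in both directions.
    loss_image_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_image_to_text + loss_text_to_image)

# Toy data: image embeddings are noisy copies of the text embeddings.
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(4, 8))
image_emb = text_emb + 0.01 * rng.normal(size=(4, 8))

aligned_loss = symmetric_contrastive_loss(image_emb, text_emb)
# Reversing the image order breaks every pairing, so the loss should rise.
mismatched_loss = symmetric_contrastive_loss(image_emb[::-1], text_emb)
```

In this sketch the loss is low when matched pairs are the most similar entries in their row and column, which is the sense in which a contrastive objective pulls paired modalities together.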

Architectural Taxonomy of Multimodal Models

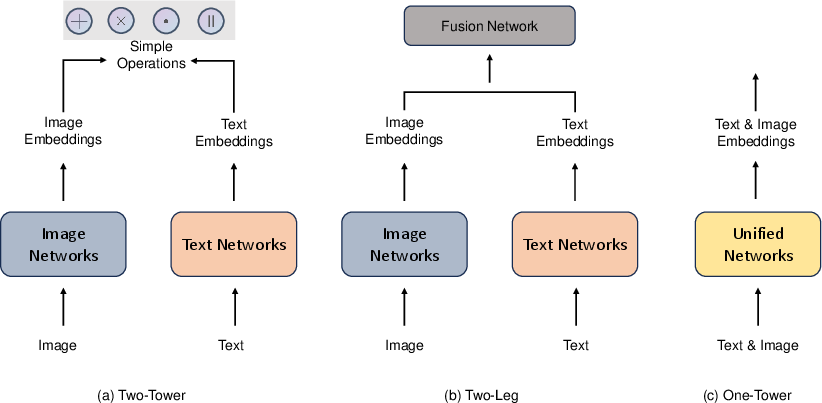

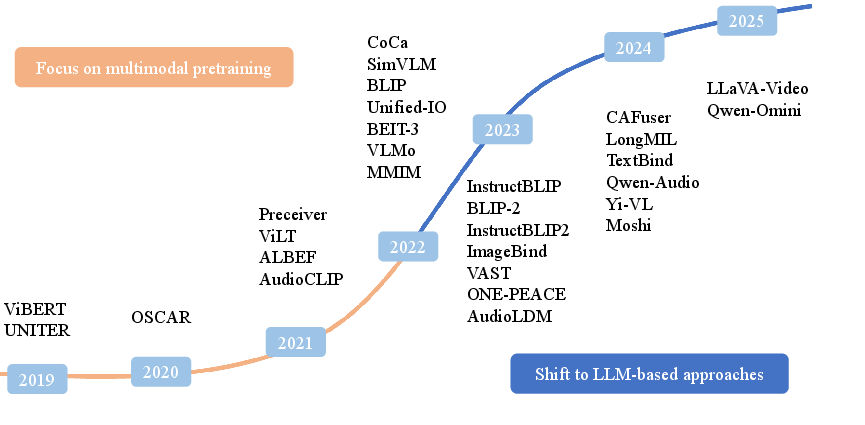

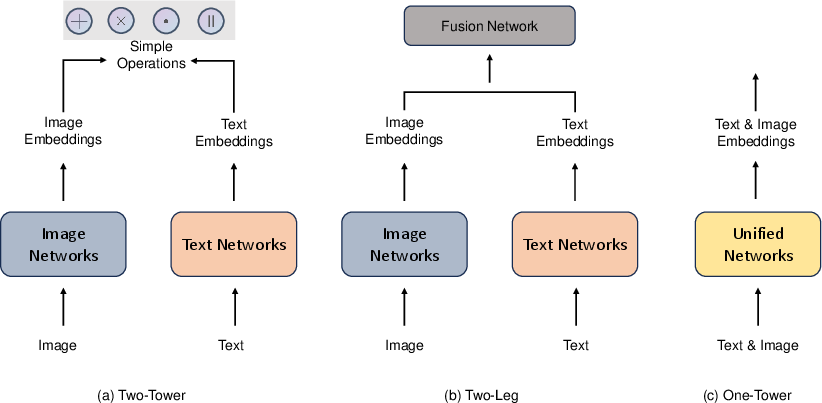

The paper categorizes model architectures into three principal structures:

- Two-Tower: Separate modality-specific encoders; fusion mainly via simple operations (e.g., dot products, addition, or concatenation).

- Two-Leg: Dedicated fusion network integrates intermediate encoded features.

- One-Tower: Joint encoder processes all modalities, favoring concurrent representation learning and direct interaction.

Figure 1: Comparative schematic of representative two-tower, two-leg, and one-tower multimodal architectures, distinguished by the stage in the model at which inter-modal interaction occurs.
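The three structures differ mainly in where the modalities first interact. The toy sketch below illustrates that difference with random, untrained linear maps standing in for real encoders. All names and dimensions are hypothetical, and a real one-tower model would typically process token sequences rather than single concatenated vectors.

```python
import numpy as np

rng = np.random.default_rng(0)

def linear(in_dim, out_dim):
    """Random linear map plus tanh, a stand-in for a trained network."""
    w = rng.normal(scale=in_dim ** -0.5, size=(in_dim, out_dim))
    return lambda x: np.tanh(x @ w)

# Hypothetical feature sizes chosen only for the example.
image_dim, text_dim, hidden = 16, 12, 8
image_encoder = linear(image_dim, hidden)
text_encoder = linear(text_dim, hidden)
fusion_net = linear(2 * hidden, hidden)
joint_encoder = linear(image_dim + text_dim, hidden)

def two_tower(image, text):
    # Modalities are encoded separately and meet only in a final dot product.
    return np.sum(image_encoder(image) * text_encoder(text), axis=-1)

def two_leg(image, text):
    # Separate encoders, then a dedicated fusion network over their features.
    features = np.concatenate([image_encoder(image), text_encoder(text)], axis=-1)
    return fusion_net(features)

def one_tower(image, text):
    # A single joint encoder sees both modalities from the first layer.
    return joint_encoder(np.concatenate([image, text], axis=-1))

image = rng.normal(size=(4, image_dim))
text = rng.normal(size=(4, text_dim))

similarity = two_tower(image, text)   # one score per pair
fused_two_leg = two_leg(image, text)  # one fused vector per pair
fused_one_tower = one_tower(image, text)
```

The two-tower variant yields a scalar similarity per pair, which suits retrieval, while the other two yield a fused representation that a downstream head can consume.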

Moving beyond temporal pipeline classification (early, late, hybrid fusion), the survey reclassifies fusion methods according to their operational mechanism (encoder-decoder, kernel-based, graph-based, and attention-based), capturing the evolution toward deep entanglement and model-unified fusion strategies.

Alignment: Explicit and Implicit Strategies

Alignment solves the semantic mapping problem: associating elements or structures across heterogeneous modalities, which is a prerequisite for effective fusion. The survey distinguishes explicit from implicit alignment strategies.

Graph-based implicit alignment faces high computational complexity due to irregular graph structures; GNNs exhibit hyperparameter sensitivity and memory inefficiency, remaining a bottleneck in practice.

Fusion: Encoder-Decoder, Kernel, Graphical, and Attention Paradigms

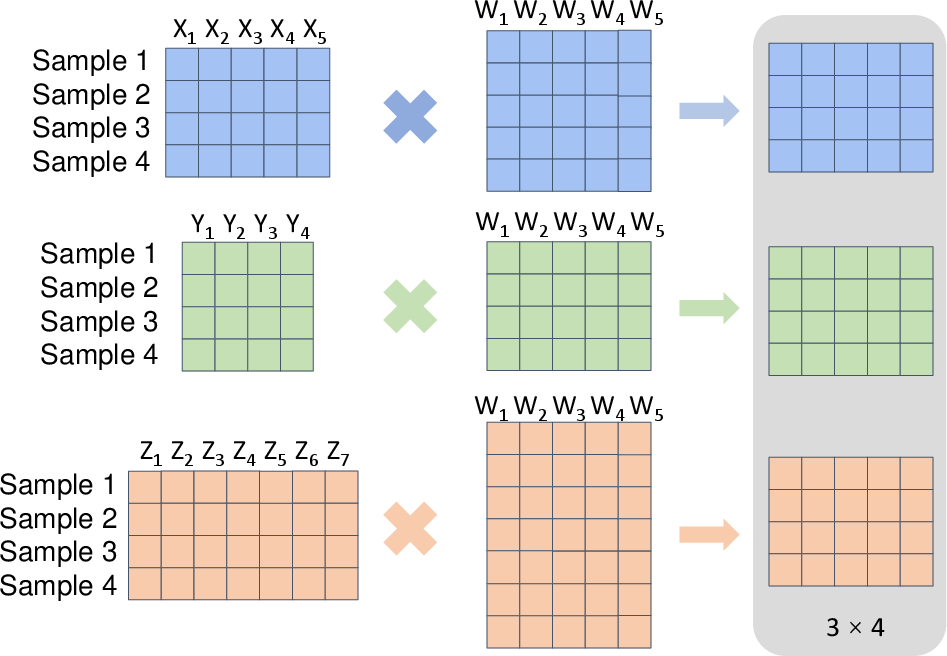

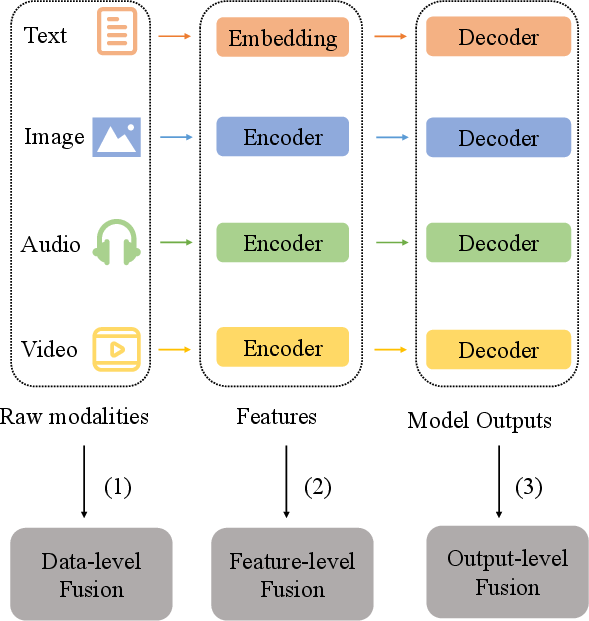

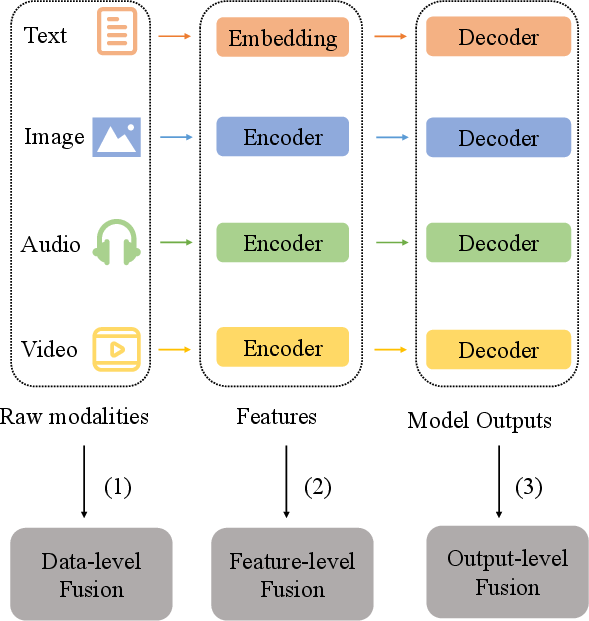

The encoder-decoder designs are analyzed at three levels of fusion granularity: data-level (raw input concatenation), feature-level (hierarchical or intermediate feature concatenation), and model-level (fusion of post-decoder outputs, the output-level mechanism in Figure 3). Feature-level fusion is highlighted for maintaining semantic fidelity and enabling deep inter-modal interaction.

Figure 3: Three fusion mechanisms: data-level, feature-level, output-level, mapped onto multimodal processing pipelines.

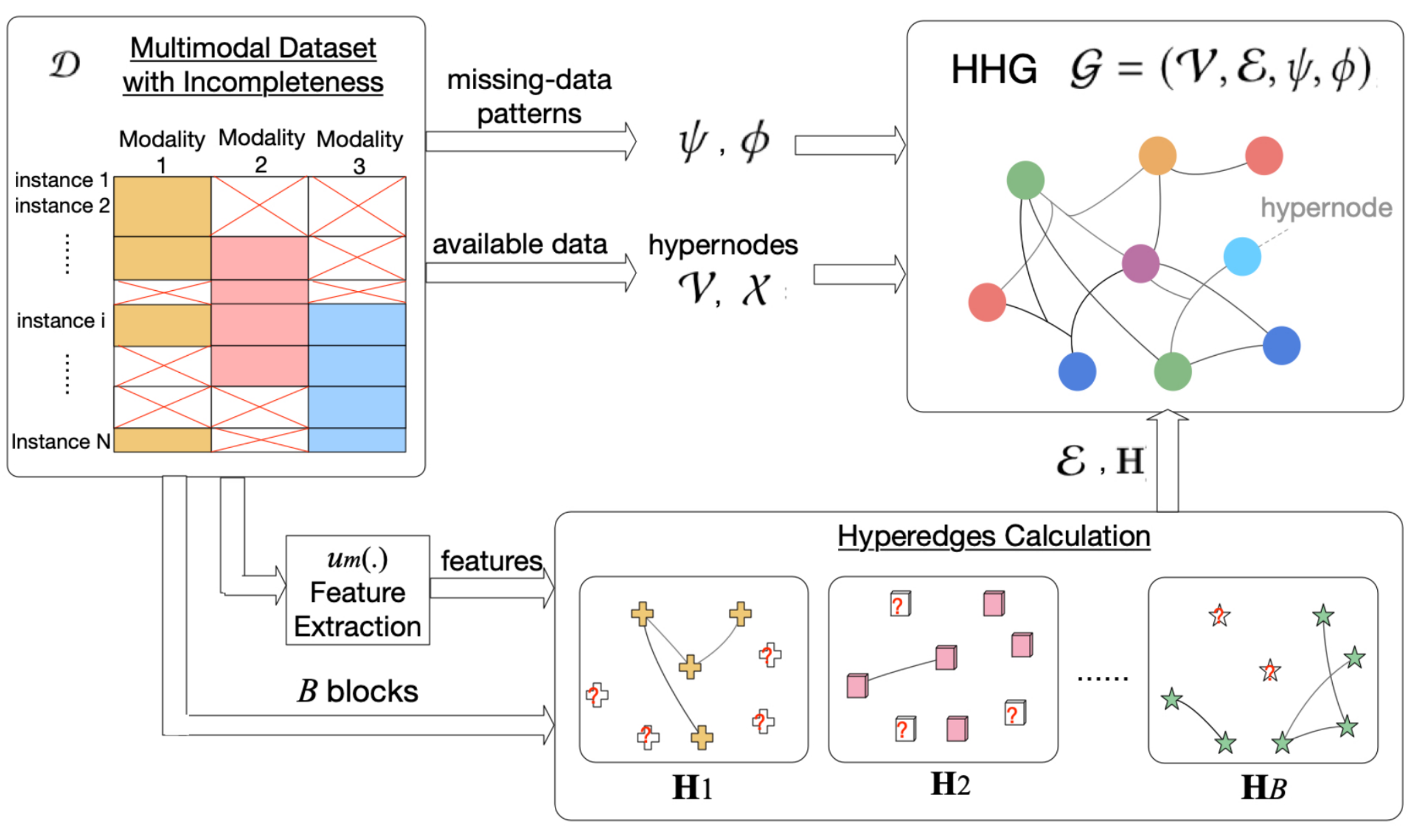

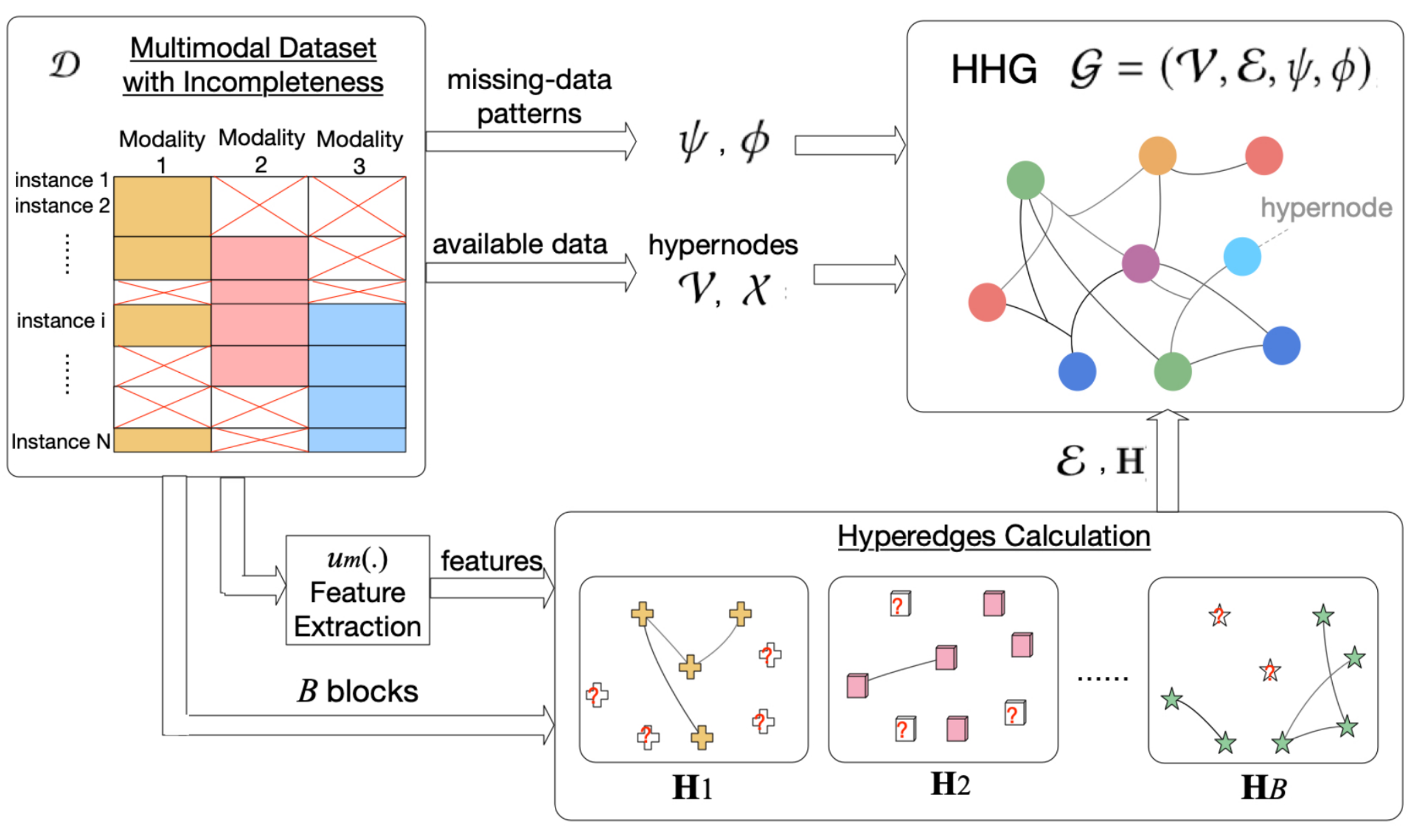

Kernel-based fusion uses kernel tricks to embed modalities into high-dimensional, nonlinear spaces, supporting better discrimination at the cost of interpretability and scalability. Graphical fusion methods construct connected heterogeneous graphs (e.g., HGMF), facilitating robust fusion even under missing or incomplete modal data.

Figure 4: Heterogeneous hypernode graphs in HGMF, demonstrating modality fusion under incomplete data conditions.
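One common way to realize the kernel-based fusion described above is to compute a kernel per modality and combine them with convex weights, in the spirit of multiple kernel learning. The sketch below assumes RBF kernels and fixed weights; the function names, the `gamma` values, and the weights are illustrative assumptions, not choices taken from the survey.

```python
import numpy as np

def rbf_kernel(x, gamma):
    """Pairwise RBF kernel matrix exp(-gamma * ||x_i - x_j||^2)."""
    sq_norms = np.sum(x ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * x @ x.T
    # Clamp tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))

def fuse_kernels(kernels, weights):
    """Convex combination of per-modality kernel matrices."""
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # normalize so the weights sum to 1
    return sum(w * k for w, k in zip(weights, kernels))

# Toy features for five samples observed in two modalities.
rng = np.random.default_rng(0)
image_feats = rng.normal(size=(5, 16))
audio_feats = rng.normal(size=(5, 6))

fused_kernel = fuse_kernels(
    [rbf_kernel(image_feats, gamma=1 / 16), rbf_kernel(audio_feats, gamma=1 / 6)],
    weights=[0.7, 0.3],
)
```

Because a convex combination of positive semidefinite kernels is itself positive semidefinite, the fused matrix can be passed to any kernel method. The n-by-n matrix also shows where the scalability cost comes from, since its size grows quadratically with the number of samples.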

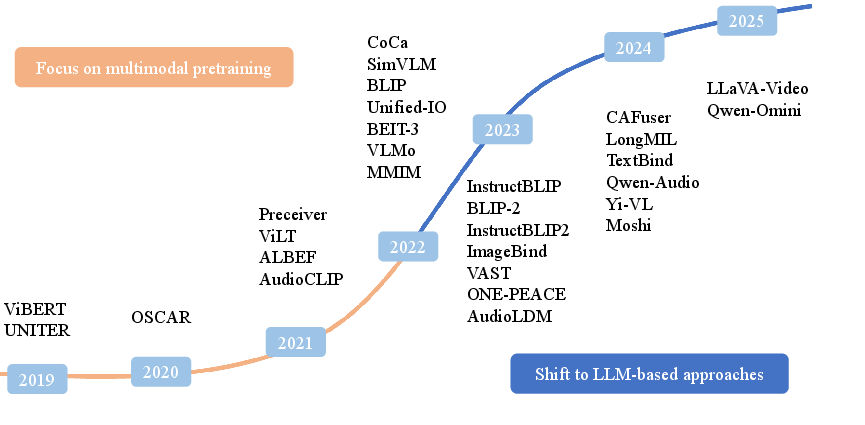

Attention-based fusion, now the dominant paradigm, uses query/key/value (Q/K/V) modules to weigh inter-modal features dynamically. Transformer architectures and their derivatives (ViT, BLIP, CoCa, BEIT-3, etc.) have advanced state-of-the-art performance. Attention mechanisms enable selective focusing and deep integration, but their computational expense grows rapidly as the number of modalities and the dataset size increase.
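As an illustration of Q/K/V-based fusion, the sketch below implements single-head cross-attention in which text tokens act as queries over image patches. The projection matrices are random placeholders for learned weights, and the token counts and dimensions are assumptions made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(text_tokens, image_patches, w_q, w_k, w_v):
    """Each text token attends over all image patches (single head)."""
    q = text_tokens @ w_q     # queries come from the text modality
    k = image_patches @ w_k   # keys come from the image modality
    v = image_patches @ w_v   # values come from the image modality
    scores = q @ k.T / np.sqrt(q.shape[-1])  # shape: (n_text, n_patches)
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(0)
d_text, d_image, d_attn = 12, 16, 8
text_tokens = rng.normal(size=(5, d_text))
image_patches = rng.normal(size=(9, d_image))
w_q = rng.normal(size=(d_text, d_attn))
w_k = rng.normal(size=(d_image, d_attn))
w_v = rng.normal(size=(d_image, d_attn))

fused, weights = cross_attention(text_tokens, image_patches, w_q, w_k, w_v)
```

Each row of `weights` is a distribution over image patches for one text token, which is the dynamic weighting the paragraph refers to. The score matrix has one entry per text-token and image-patch pair, so its cost grows with the product of the two sequence lengths.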

Figure 5: Timeline illustrating the architectural shift from transformer-centric fusion to LLM-centric cross-modal knowledge reuse and adapter-based integration post-2023.

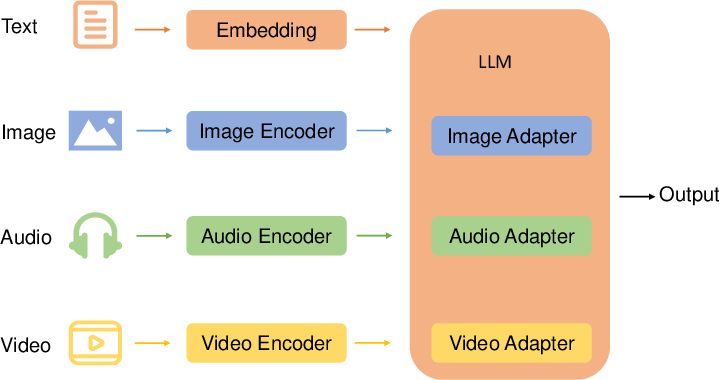

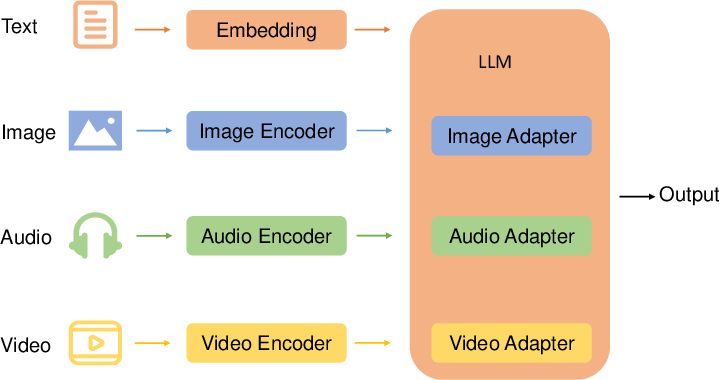

Recent advances involve adapters embedded into frozen LLMs, where modality-specific encoders feed adapters that interface with the core LLM, enabling parameter-efficient fine-tuning and zero-shot generalization.

Figure 6: Modality-specific adapters linking encoder outputs to the LLM core, supporting scalable multimodal fusion and flexible extension.
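A minimal sketch of the adapter idea follows, assuming the simplest possible design: a trainable linear projection that maps frozen vision-encoder features into the embedding space of a frozen LLM, with the projected features prepended to the text token embeddings. The class and function names, the dimensions, and the linear form of the adapter are all hypothetical; the survey covers a range of adapter designs.

```python
import numpy as np

rng = np.random.default_rng(0)
d_vision, d_llm, vocab_size = 16, 32, 100

# Stand-in for the frozen LLM's token embedding table (never updated).
frozen_token_embeddings = rng.normal(size=(vocab_size, d_llm))

class LinearAdapter:
    """Trainable projection from vision features to the LLM embedding size."""

    def __init__(self, in_dim, out_dim, rng):
        self.weight = rng.normal(scale=in_dim ** -0.5, size=(in_dim, out_dim))
        self.bias = np.zeros(out_dim)

    def __call__(self, feats):
        return feats @ self.weight + self.bias

    def num_parameters(self):
        return self.weight.size + self.bias.size

def build_llm_input(vision_feats, token_ids, adapter):
    """Prepend adapted visual tokens to the frozen text token embeddings."""
    visual_tokens = adapter(vision_feats)
    text_tokens = frozen_token_embeddings[token_ids]
    return np.concatenate([visual_tokens, text_tokens], axis=0)

adapter = LinearAdapter(d_vision, d_llm, rng)
vision_feats = rng.normal(size=(9, d_vision))  # e.g. 9 patch features
token_ids = np.array([3, 14, 15, 92])          # hypothetical prompt tokens

llm_input = build_llm_input(vision_feats, token_ids, adapter)
trainable = adapter.num_parameters()
```

In this setup only the adapter's parameters would be updated during fine-tuning, which is what makes the approach parameter-efficient relative to retraining the encoder or the LLM.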

Key Challenges

The survey details several persisting obstacles:

- Modal Feature Alignment: Visual features extracted by generic object detectors misalign with the corresponding textual features, limiting cross-modal knowledge transfer; recent work uses noise injection and shared tokenization (FDT), but fully robust alignment remains unresolved.

- Computational Efficiency: Early models suffer from prohibitive inference costs; advances with ViTs (patch tokenization), attention bottlenecks, and prompt-based fusion improve tractability but struggle with scale as modal diversity increases.

- Data Quality: Internet-scale multimodal datasets are noisy, often optimized for search rather than alignment; synthetic caption generation and filtering improve training utility, but data diversity and signal integrity need further improvement.

- Training Scale: Gargantuan datasets (e.g., LAION-5B) democratize access but pose difficulties in preprocessing, filtering, and maintaining representation diversity. Efficient compression and MoE scaling methods offer some relief but do not fully mitigate dataset redundancy or domain adaptation issues.

Implications and Future Directions

From the surveyed literature, the following implications and research directions emerge:

- Methodological Extension: Hybrid techniques blending explicit and implicit alignment/fusion mechanisms (e.g., hybrid Transformers/GNNs) may improve semantic fidelity and computational efficiency.

- Dataset Quality over Quantity: Innovations in scalable multimodal data curation, synthetic augmentation, and robust filtering must parallel model growth to avoid adverse effects of noise and bias.

- Scalable and Adaptive Architectures: Design of modular, parameter-efficient adapters and task-conditioned fusion pipelines will be crucial for domain transfer, efficiency, and generalization.

- Deeper Fusion Beyond Feature Aggregation: Research must further explore deep cross-modal entanglement (semantic, reasoning, context dependence), moving beyond simple concatenation or dot products toward multi-hop reasoning and generative cross-modal models.

Conclusion

The paper delivers a granular analysis of the multimodal alignment and fusion landscape, showing that architectural innovations and robust alignment are key to progress. Attention-based approaches have achieved state-of-the-art performance but face computational, alignment, and data-quality challenges. Theoretical and practical advances are required in alignment expressivity, fusion depth, scalable data curation, and adaptive integration frameworks. Future research should target model interpretability, domain robustness, and scalable multimodal training. The surveyed methodologies, technical challenges, and suggested solutions provide an operational blueprint for advancing multimodal AI toward generality and real-world applicability.