Foundations and Trends in Multimodal Machine Learning: Principles, Challenges, and Open Questions

The paper "Foundations and Trends in Multimodal Machine Learning: Principles, Challenges, and Open Questions" provides a comprehensive review of the computational and theoretical foundations of multimodal machine learning (MML). This research field aims to design computer agents capable of learning, understanding, and reasoning by integrating multiple modalities, including linguistic, acoustic, visual, tactile, and physiological signals. This essay outlines the key principles driving MML, the core technical challenges, and the future directions suggested by the paper.

Foundational Principles

The authors identify three key principles fundamental to MML: heterogeneity, connections, and interactions.

- Heterogeneity: Different modalities exhibit diverse qualities, structures, and representations. The paper categorizes heterogeneity into several dimensions including element representation, distribution, structure, information, noise, and task relevance. These dimensions are crucial for designing specialized encoders and for understanding how multimodal data should be processed.

- Connections: The interconnected nature of multimodal data means modalities share complementary information. Connections can be studied from both statistical (e.g., association and dependence) and semantic (e.g., correspondence and relationships) perspectives.

- Interactions: Interactions in multimodal data produce new information when modalities are integrated for a task. The study of interactions includes understanding whether information is redundant or unique (interaction information), the functional operators involved in integrating modalities (interaction mechanics), and how the inferred response changes when multiple modalities are present (interaction response).
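
The distinction between redundant and new information can be made concrete with a small information-theoretic sketch. The example below is an illustration, not the paper's method: the function names are hypothetical, the data are toy binary "modalities", and the sign convention (joint information minus the sum of individual informations, so that positive means synergy and negative means redundancy) is an assumption chosen for readability.

```python
from collections import Counter
from itertools import product
from math import log2


def entropy(samples):
    """Shannon entropy in bits of the empirical distribution of `samples`."""
    counts = Counter(samples)
    n = len(samples)
    return -sum(c / n * log2(c / n) for c in counts.values())


def mutual_information(xs, ys):
    """I(X; Y) = H(X) + H(Y) - H(X, Y), estimated from paired samples."""
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))


def interaction_summary(x1, x2, y):
    """Return I(X1;Y), I(X2;Y), I(X1,X2;Y), and the gap between the joint
    information and the sum of the individual informations.

    Assumed convention: gap > 0 suggests synergy (new information appears
    only when modalities are combined); gap < 0 suggests redundancy.
    """
    i1 = mutual_information(x1, y)
    i2 = mutual_information(x2, y)
    joint = mutual_information(list(zip(x1, x2)), y)
    return i1, i2, joint, joint - (i1 + i2)


# Toy data: every combination of two binary modalities, each seen once.
pairs = list(product([0, 1], repeat=2))
x1 = [a for a, _ in pairs]
x2 = [b for _, b in pairs]

# Synergistic case: the label is the XOR of the two modalities.
xor_case = interaction_summary(x1, x2, [a ^ b for a, b in pairs])

# Redundant case: both modalities and the label carry the same bit.
r = [0, 1, 0, 1]
copy_case = interaction_summary(r, r, r)

print("XOR  (I1, I2, joint, gap):", xor_case)
print("copy (I1, I2, joint, gap):", copy_case)
```

In the XOR case neither modality alone says anything about the label, yet together they determine it, so the gap is positive. In the copy case each modality already carries everything, so combining them adds nothing and the gap is negative.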

Core Technical Challenges

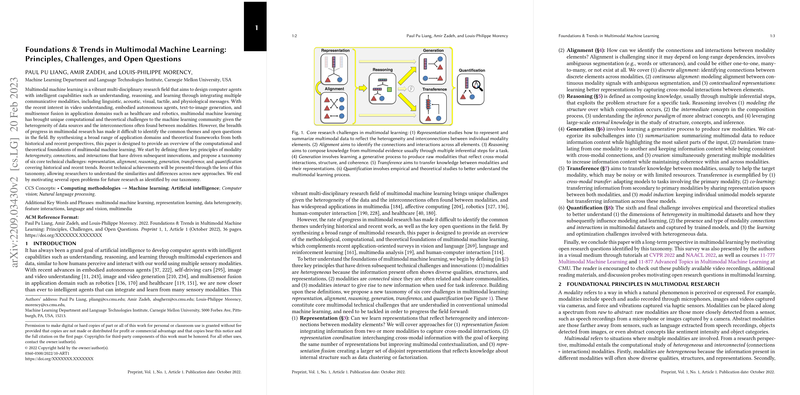

The paper presents a taxonomy of six core challenges in MML: representation, alignment, reasoning, generation, transference, and quantification.

- Representation: This challenge involves learning representations that capture cross-modal interactions. The authors discuss three forms:

    - Fusion: Integrating multiple modalities into a single representation.

    - Coordination: Maintaining separate but interconnected representations.

    - Fission: Creating a decoupled set of representations that reflects internal structure, such as data clustering or factorization.

- Alignment: This challenge involves identifying connections between elements of different modalities. It has three parts:

    - Discrete alignment: Identifying connections between discrete elements across modalities.

    - Continuous alignment: Modeling alignment between continuous signals that lack clear segmentation.

    - Contextualized representations: Learning better representations by modeling cross-modal connections between elements.

- Reasoning: This challenge involves combining knowledge through multiple inferential steps. It has four parts:

    - Structure modeling: Defining the relationships over which reasoning occurs.

    - Intermediate concepts: Studying how individual multimodal concepts are parameterized within the reasoning process.

    - Inference paradigm: Studying how increasingly abstract concepts are inferred from multimodal evidence.

    - External knowledge: Leveraging large-scale knowledge bases.

- Generation: This challenge involves producing raw modalities that reflect cross-modal interactions. It has three parts:

    - Summarization: Abstracting the most relevant information.

    - Translation: Mapping one modality to another while maintaining information content.

    - Creation: Generating high-dimensional data in a coherent manner.

- Transference: This challenge concerns the transfer of knowledge between modalities. It has three parts:

    - Cross-modal transfer: Transferring knowledge from models trained on secondary modalities to tasks in the primary modality.

    - Co-learning: Sharing information across modalities through shared representation spaces.

    - Model induction: Keeping the individual models separate while letting each induce behavior in the other.

- Quantification: This challenge aims to understand MML models both empirically and theoretically. It has three parts:

    - Heterogeneity: Studying how modalities differ in the amount of information they carry and in how that information is used.

    - Interconnections: Characterizing the presence and type of modality connections and interactions.

    - Learning processes: Characterizing the learning and optimization challenges.
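
Three of the ideas in the taxonomy above (fusion, coordination, and discrete alignment) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names are hypothetical, the vectors are toy stand-ins for learned embeddings, both modalities are assumed to be already embedded in the same dimension, and the greedy nearest-neighbor matching is only one simple way to align discrete elements, not the approach of any particular model discussed in the paper.

```python
from math import sqrt


def fuse_concat(text_vec, image_vec):
    """Fusion: build one joint representation by concatenating the inputs."""
    return list(text_vec) + list(image_vec)


def cosine(u, v):
    """Coordination: the two representations stay separate and are related
    only through a similarity score (here, cosine similarity)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = sqrt(sum(a * a for a in u))
    norm_v = sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def align_discrete(text_vecs, image_vecs):
    """Discrete alignment: for each text element, return the index of the
    most similar image element (greedy nearest-neighbor matching)."""
    return [
        max(range(len(image_vecs)), key=lambda j: cosine(t, image_vecs[j]))
        for t in text_vecs
    ]


# Toy embeddings (hypothetical): two words and two image regions.
text_vecs = [[1.0, 0.0], [0.0, 1.0]]
image_vecs = [[0.1, 0.9], [0.8, 0.2]]

fused = fuse_concat(text_vecs[0], image_vecs[0])
alignment = align_discrete(text_vecs, image_vecs)

print("fused representation:", fused)
print("word -> region alignment:", alignment)
```

The design difference is visible in the return types: fusion yields a single vector that downstream layers consume, whereas coordination and alignment leave each modality's vectors untouched and only relate them through scores.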

Future Directions

The paper outlines several promising directions for future work:

- Theoretical and empirical frameworks: Formalizing the core principles of MML.

- Beyond additive and multiplicative interactions: Capturing causal, logical, and temporal connections.

- Insights from human sensory processing: Leveraging cognitive science principles to design MML systems.

- Long-term memory and interactions: Developing models that can capture long-range interactions.

- Compositional generalization: Ensuring that models generalize to new compositions of modality elements.

- High-modality learning: Extending MML to include a wider range of real-world modalities.

- Ethical concerns in generation: Addressing the risks of multimodal generation such as misinformation or biased outputs.
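
To make the "additive and multiplicative" baseline mentioned in the list above concrete, the following sketch contrasts the two kinds of interaction on toy feature vectors. The function names and the default weights are hypothetical, and the outer product is used as one common example of a multiplicative operator; richer causal, logical, or temporal connections are exactly what these two forms do not express.

```python
def additive_fusion(x1, x2, w1=1.0, w2=1.0):
    """Additive interaction: weighted element-wise sum of two feature
    vectors of equal length. Each output depends on one feature from each
    modality, with no cross terms."""
    if len(x1) != len(x2):
        raise ValueError("additive fusion needs vectors of equal length")
    return [w1 * a + w2 * b for a, b in zip(x1, x2)]


def multiplicative_fusion(x1, x2):
    """Multiplicative interaction: outer product of the two vectors, so
    every feature of one modality is multiplied with every feature of
    the other."""
    return [[a * b for b in x2] for a in x1]


# Toy feature vectors (hypothetical values).
audio = [1.0, 2.0]
video = [3.0, 4.0]

added = additive_fusion(audio, video)
multiplied = multiplicative_fusion(audio, video)

print("additive:", added)
print("multiplicative:", multiplied)
```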

Conclusion

This paper successfully proposes a structured taxonomy for understanding and addressing the challenges in MML. By outlining key principles, technical challenges, and future directions, the authors provide a roadmap for advancing the field. This taxonomy can help researchers catalog advances and identify open research questions, driving forward both theoretical understanding and practical applications in multimodal machine learning.