- The paper demonstrates a scalable multi-agent framework (MacNet) that uses DAGs to enable effective, sequential LLM-based agent collaboration.

- It details a modular design encompassing topology, interaction, and memory control to optimize collaborative reasoning and maintain context.

- It reports significant experimental improvements on benchmarks like MMLU and HumanEval, validating a collaborative scaling law with logistic growth in solution quality.

Scaling LLM-based Multi-Agent Collaboration

Introduction

The paper "Scaling LLM-based Multi-Agent Collaboration" investigates how to scale collaboration among LLM-powered agents by organizing them into networks built on directed acyclic graphs (DAGs). The work takes its inspiration from the neural scaling laws observed in LLM development and asks whether increasing the number of collaborating agents could analogously produce emergent collective intelligence, with capabilities beyond those of any individual agent.

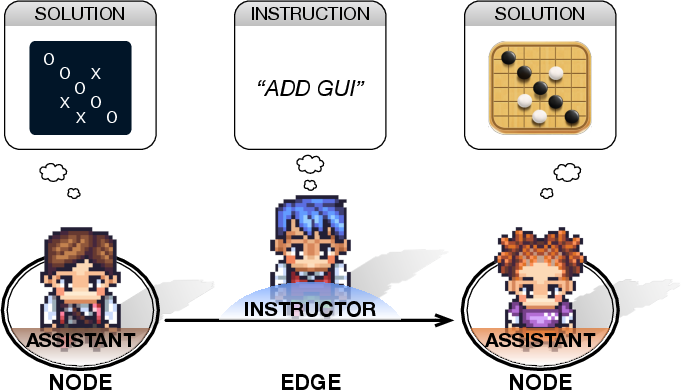

Figure 1: Given a task, multi-agent collaboration networks (MacNet) utilize directed acyclic graphs to organize diverse agents for collaborative interactions, with the final solution derived from their dialogues.

Multi-Agent Collaboration Network Design

The proposed multi-agent collaboration network (MacNet) leverages DAGs to systematically organize agents into a structure that enhances interactive reasoning and task resolution. The primary components of MacNet include:

- Topology Design:

- MacNet builds a DAG in which nodes represent agents with specialized roles and edges represent directional communication pathways between them.

- Agent interactions follow the topological order of the graph, so information flows sequentially from upstream agents to downstream agents and no global broadcast is needed.

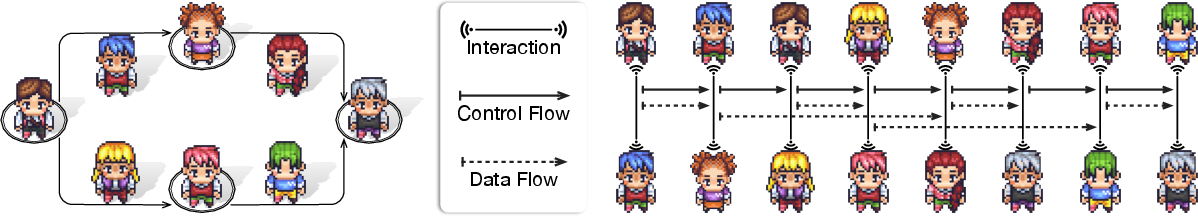

Figure 2: Representative topological structures.
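The topological ordering described above can be illustrated with a minimal sketch. It uses Kahn's algorithm, a standard way to order a DAG, and is not taken from the paper's implementation; the agent names and the diamond-shaped graph are hypothetical.

```python
from collections import deque


def topological_order(nodes, edges):
    """Return one topological order of a DAG using Kahn's algorithm."""
    successors = {n: [] for n in nodes}
    indegree = {n: 0 for n in nodes}
    for src, dst in edges:
        successors[src].append(dst)
        indegree[dst] += 1

    # Agents with no incoming edges can act first.
    ready = deque(n for n in nodes if indegree[n] == 0)
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)
        for nxt in successors[node]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)

    if len(order) != len(nodes):
        raise ValueError("graph contains a cycle, so it is not a DAG")
    return order


# Hypothetical four-agent "diamond" topology (names are illustrative only).
nodes = ["planner", "coder", "tester", "reviewer"]
edges = [
    ("planner", "coder"),
    ("planner", "tester"),
    ("coder", "reviewer"),
    ("tester", "reviewer"),
]
order = topological_order(nodes, edges)
print(order)
```

Each agent appears only after every agent that feeds into it, which is what allows interactions to proceed edge by edge rather than through a broadcast to all agents.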

- Interaction Mechanism:

- Memory Control:

- Short-term and long-term memory modules manage each agent's context so that it does not overflow as the network grows. According to the paper, this allows MacNet to scale to more than a thousand agents while preserving the context needed for each interaction.
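One way to picture the split between short-term and long-term memory is the sketch below. The summary does not specify how the two modules work, so everything here is an assumption made for illustration: the class name `AgentMemory`, the bounded short-term buffer, and the choice to carry forward only final solutions are hypothetical, not the paper's API.

```python
from collections import deque


class AgentMemory:
    """Illustrative memory with a bounded short-term buffer (assumed design)."""

    def __init__(self, short_term_limit=4):
        # Short-term: recent turns of the current interaction, capped in size.
        self.short_term = deque(maxlen=short_term_limit)
        # Long-term: only the outcome of each finished interaction (assumption).
        self.long_term = []

    def record_turn(self, utterance):
        # Oldest turns are dropped automatically once the cap is reached.
        self.short_term.append(utterance)

    def finish_interaction(self, final_solution):
        # Keep the result and discard the dialogue that produced it.
        self.long_term.append(final_solution)
        self.short_term.clear()

    def context(self):
        # Context passed to the model: past solutions plus recent turns.
        return self.long_term + list(self.short_term)


memory = AgentMemory(short_term_limit=4)
for i in range(6):
    memory.record_turn(f"turn {i}")
turns_kept = len(memory.short_term)
memory.finish_interaction("solution v1")
print(turns_kept, memory.context())
```

Under these assumptions, the context an agent receives is bounded by the buffer size plus the number of stored solutions, rather than growing with the full dialogue history.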

Experimental Findings

- Performance Evaluation:

- The paper reports that MacNet outperforms existing methods on MMLU, HumanEval, SRDD, and CommonGen-Hard, with improvements in metrics such as accuracy and solution quality.

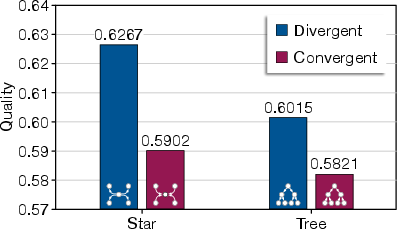

- Topology Evaluation:

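- Collaborative Scaling Law:

- The results support a collaborative scaling law in which solution quality follows a logistic growth pattern as the number of agents increases.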
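The logistic growth in solution quality mentioned in the summary can be visualized with a generic logistic curve. This is a sketch only: the parameters `ceiling`, `steepness`, and `midpoint` are hypothetical, measuring scale as the base-2 logarithm of the agent count is an assumption, and none of the values are fitted to the paper's results.

```python
import math


def logistic_quality(num_agents, ceiling=1.0, steepness=1.0, midpoint=4.0):
    """Generic logistic curve over log2(agent count); parameters are illustrative."""
    scale = math.log2(num_agents)  # assumed measure of network scale
    return ceiling / (1.0 + math.exp(-steepness * (scale - midpoint)))


# Quality rises slowly, then quickly, then flattens toward the ceiling.
agent_counts = [1, 4, 16, 64, 256]
qualities = [logistic_quality(n) for n in agent_counts]
for n, q in zip(agent_counts, qualities):
    print(f"{n:4d} agents -> quality {q:.3f}")
```

The shape, not the numbers, is the point: gains are small at first, largest near the midpoint, and diminish as quality saturates.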

Conclusion

The research offers insight into how LLM-based multi-agent systems behave as they scale. By organizing agents with directed acyclic graphs, MacNet achieves scalable and efficient collaboration and outperforms the baseline methods it is compared against. Managing agent collaboration through topology may also lead to more resource-efficient systems and to better automation and decision-making in complex multi-agent environments. Future research could explore further optimization techniques and integration with other emerging technologies to improve collaboration.