Shampoo: Efficient Tensor-Preconditioned Optimizer

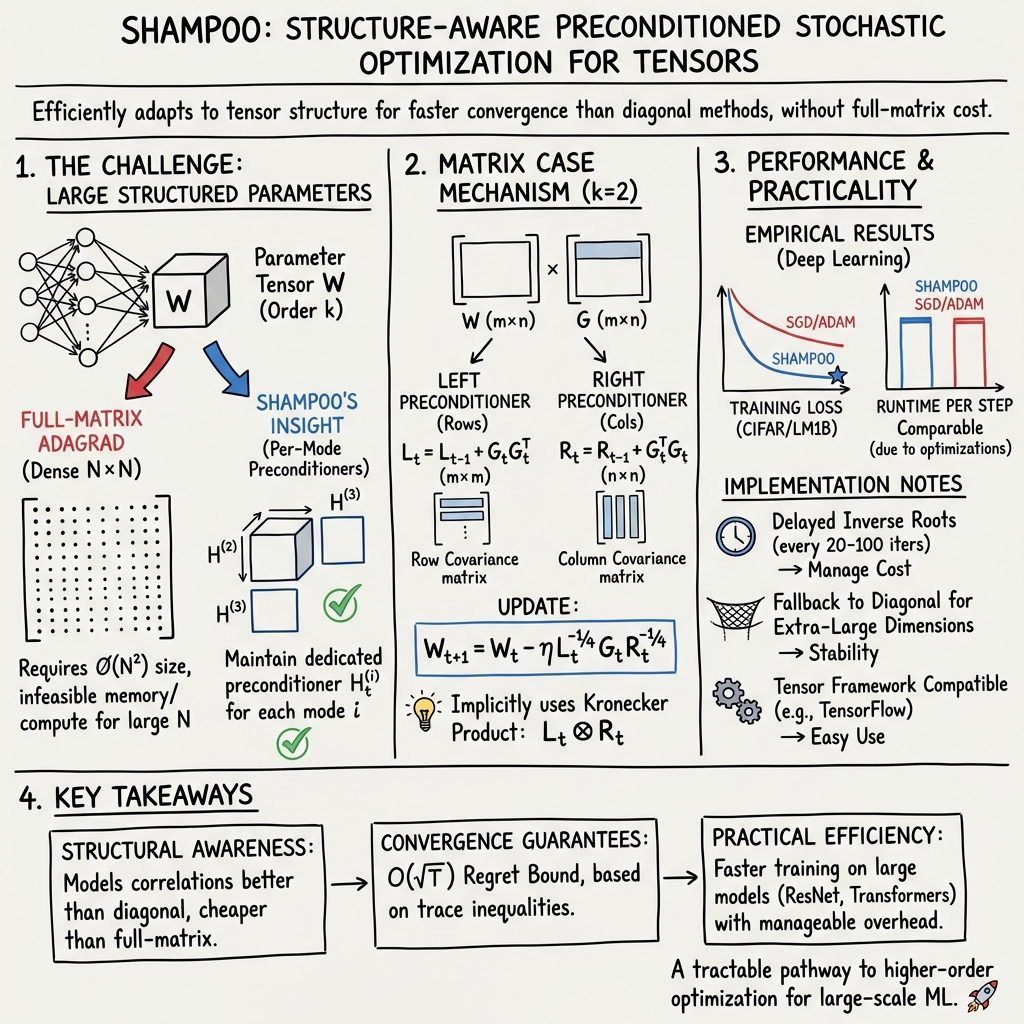

- Shampoo is a structure-aware preconditioned stochastic optimization algorithm that leverages per-mode matrix preconditioners to efficiently handle high-dimensional tensor parameters.

- It utilizes a Kronecker product-based preconditioning approach along with matrix trace inequalities to ensure strong convergence guarantees and computational efficiency.

- Empirical evaluations on vision and language tasks show that Shampoo achieves faster convergence and competitive runtime performance compared to diagonal adaptive methods.

Shampoo is a structure-aware preconditioned stochastic optimization algorithm designed for tensor-parameter spaces, combining practical efficiency with strong convergence guarantees. It maintains one matrix preconditioner per tensor mode, lends itself to parallel computation, and converges markedly faster than diagonal adaptive methods in high-dimensional neural networks. By operating over the native tensor structure and avoiding the prohibitive cost of full-matrix adaptivity, Shampoo provides a tractable pathway to higher-order optimization for large-scale machine learning deployments.

1. Structure-Aware Preconditioning in Tensor Optimization

Shampoo addresses a central bottleneck in stochastic optimization for deep learning: effective curvature adaptation of large, structured parameters with manageable computational and memory overhead (Gupta et al., 2018). Parameters in modern neural architectures are typically matrix- or tensor-valued. Classic preconditioning methods (e.g., full-matrix AdaGrad, Newton-type procedures) require matrices of size $d \times d$, where $d$ is the total number of parameter entries, and quickly become infeasible for large $d$.

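Shampoo maintains, for each mode $i$ of a parameter tensor of order $k$ with dimensions $n_1 \times \cdots \times n_k$, a dedicated preconditioner

$$H_t^i \in \mathbb{R}^{n_i \times n_i},$$

updated by accumulating mode-specific contractions of the gradient. For matrices $W \in \mathbb{R}^{m \times n}$ with gradient $G_t$, two preconditioners are maintained, initialized as $L_0 = \epsilon I_m$ and $R_0 = \epsilon I_n$:

- Left: $L_t = L_{t-1} + G_t G_t^\top \in \mathbb{R}^{m \times m}$ (row covariance)

- Right: $R_t = R_{t-1} + G_t^\top G_t \in \mathbb{R}^{n \times n}$ (column covariance)

The preconditioned update is executed as:

$$W_{t+1} = W_t - \eta\, L_t^{-1/4}\, G_t\, R_t^{-1/4}.$$

The effective full preconditioner, operationally equivalent when vectorizing, is the Kronecker product $L_t^{1/4} \otimes R_t^{1/4}$.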
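The matrix case can be sketched in a few lines of NumPy. This is a minimal illustration rather than the reference implementation: it assumes the update $W_{t+1} = W_t - \eta L_t^{-1/4} G_t R_t^{-1/4}$ with statistics $L_t = L_{t-1} + G_t G_t^\top$ and $R_t = R_{t-1} + G_t^\top G_t$, and the helper names `inv_root` and `shampoo_matrix_step`, the learning rate, and the value of `eps` are all chosen here for illustration. The last lines check numerically that the same step is obtained on the row-major vectorization with a Kronecker-product preconditioner.

```python
import numpy as np

def inv_root(mat, p):
    """Return mat^(-1/p) for a symmetric positive-definite matrix via eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    return (eigvecs * eigvals ** (-1.0 / p)) @ eigvecs.T

def shampoo_matrix_step(W, G, L, R, lr=0.1):
    """One Shampoo step for a matrix parameter W with gradient G (illustrative sketch).

    L and R are the running left/right statistics; updated copies are returned.
    """
    L = L + G @ G.T                      # row statistics, shape (m, m)
    R = R + G.T @ G                      # column statistics, shape (n, n)
    W = W - lr * inv_root(L, 4) @ G @ inv_root(R, 4)
    return W, L, R

rng = np.random.default_rng(0)
m, n, eps = 3, 4, 1e-3                   # eps is an assumed initialization constant
W = rng.standard_normal((m, n))
G = rng.standard_normal((m, n))
L0, R0 = eps * np.eye(m), eps * np.eye(n)

W_new, L1, R1 = shampoo_matrix_step(W, G, L0, R0)

# Same step on the row-major vectorization, using the Kronecker-product preconditioner.
H_inv = np.kron(inv_root(L1, 4), inv_root(R1, 4))
w_new_vec = W.reshape(-1) - 0.1 * H_inv @ G.reshape(-1)
kron_matches = np.allclose(W_new.reshape(-1), w_new_vec)
```

The Kronecker matrix `H_inv` has $(mn)^2$ entries and is formed here only to verify the equivalence; the matrix form of the step never materializes it.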

For tensors of order $k$, the mode-wise contractions and their preconditioners generalize directly: each mode's preconditioner is raised to the inverse power $-1/(2k)$ and applied along the corresponding dimension.
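A sketch of the higher-order case follows, assuming the exponent $-1/(2k)$ for an order-$k$ tensor (which reduces to $-1/4$ for matrices). The function names `mode_contraction`, `mode_product`, and `shampoo_tensor_step` are hypothetical helpers introduced for this example.

```python
import numpy as np

def inv_root(mat, p):
    """Return mat^(-1/p) for a symmetric positive-definite matrix via eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    return (eigvecs * eigvals ** (-1.0 / p)) @ eigvecs.T

def mode_contraction(G, i):
    """Contract G with itself over every mode except i, giving an (n_i, n_i) matrix."""
    other = [ax for ax in range(G.ndim) if ax != i]
    return np.tensordot(G, G, axes=(other, other))

def mode_product(T, M, i):
    """Multiply tensor T by matrix M along mode i, keeping the mode order unchanged."""
    out = np.tensordot(M, T, axes=([1], [i]))   # the contracted mode comes out first
    return np.moveaxis(out, 0, i)

def shampoo_tensor_step(W, G, H, lr=0.1):
    """One Shampoo step for an order-k tensor (illustrative sketch)."""
    k = G.ndim
    # Accumulate one statistic per mode.
    H = [H_i + mode_contraction(G, i) for i, H_i in enumerate(H)]
    # Precondition the gradient along each mode with H_i^(-1/(2k)).
    P = G
    for i, H_i in enumerate(H):
        P = mode_product(P, inv_root(H_i, 2 * k), i)
    return W - lr * P, H

rng = np.random.default_rng(0)
shape, eps = (2, 3, 4), 1e-3             # eps is an assumed initialization constant
W = rng.standard_normal(shape)
G = rng.standard_normal(shape)
H = [eps * np.eye(n_i) for n_i in shape]
W_new, H = shampoo_tensor_step(W, G, H)
```

For a 2-D input, the two mode contractions are $G G^\top$ and $G^\top G$ and the exponent is $-1/4$, so the routine agrees with the matrix update.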

2. Mathematical Foundations and Convergence Guarantees

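Shampoo's convergence analysis is formalized within the Online Convex Optimization (OCO) framework, leveraging adaptive regularization via mirror descent. After vectorization, with $w_t = \mathrm{vec}(W_t)$, $g_t = \mathrm{vec}(G_t)$, and $L_t$, $R_t$ denoting the left and right preconditioners, the optimizer's update can be interpreted as:

$$w_{t+1} = \arg\min_{w \in \mathcal{W}} \left\{ \eta\, g_t^\top w + \tfrac{1}{2} \lVert w - w_t \rVert_{H_t}^2 \right\}, \qquad H_t = L_t^{1/4} \otimes R_t^{1/4},$$

effectively yielding $w_{t+1} = w_t - \eta\, H_t^{-1} g_t$ when $\mathcal{W} = \mathbb{R}^{d}$, that is, in the unconstrained case.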
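The reduction to a preconditioned gradient step can be worked out in one line. The derivation below assumes the mirror-descent objective $\eta\, g_t^\top w + \tfrac{1}{2}\lVert w - w_t\rVert_{H_t}^2$ with a positive-definite $H_t$ and no constraint on $w$; setting its gradient to zero gives

$$
\begin{aligned}
0 &= \nabla_w \Big[ \eta\, g_t^\top w + \tfrac{1}{2} (w - w_t)^\top H_t\, (w - w_t) \Big] = \eta\, g_t + H_t\, (w - w_t) \\
\Rightarrow \quad w_{t+1} &= w_t - \eta\, H_t^{-1} g_t .
\end{aligned}
$$

With $H_t = L_t^{1/4} \otimes R_t^{1/4}$, the inverse is $H_t^{-1} = L_t^{-1/4} \otimes R_t^{-1/4}$, and un-vectorizing recovers the matrix form $W_{t+1} = W_t - \eta\, L_t^{-1/4} G_t R_t^{-1/4}$.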

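A distinguishing technical contribution is the demonstration (via matrix trace inequalities, including Ando's inequality and the Löwner monotonicity theorem) that the per-mode Kronecker product is lower-bounded, in the Löwner order, by the full-matrix statistic of the flattened parameter, up to a factor of the gradient rank. Specifically, for gradients $G_1, \ldots, G_T \in \mathbb{R}^{m \times n}$ of rank at most $r$, with $g_t = \mathrm{vec}(G_t)$ and any $\epsilon \ge 0$,

$$\epsilon I_{mn} + \frac{1}{r} \sum_{t=1}^{T} g_t g_t^\top \;\preceq\; \Big( \epsilon I_m + \sum_{t=1}^{T} G_t G_t^\top \Big)^{1/2} \otimes \Big( \epsilon I_n + \sum_{t=1}^{T} G_t^\top G_t \Big)^{1/2},$$

which ensures preservation of key spectral characteristics for step-size adaptation.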
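The ordering can be checked numerically on random data. The sketch below assumes the inequality in the form $\epsilon I_{mn} + \tfrac{1}{r}\sum_t g_t g_t^\top \preceq (\epsilon I_m + \sum_t G_t G_t^\top)^{1/2} \otimes (\epsilon I_n + \sum_t G_t^\top G_t)^{1/2}$ for gradients of rank at most $r$; the dimensions, seed, and helper name `psd_power` are arbitrary choices made for illustration. A numerical check of this kind is a sanity test, not a proof.

```python
import numpy as np

def psd_power(mat, p):
    """mat^p for a symmetric PSD matrix; tiny negative eigenvalues are clipped to zero."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    return (eigvecs * np.clip(eigvals, 0.0, None) ** p) @ eigvecs.T

rng = np.random.default_rng(0)
m, n, r, T, eps = 4, 5, 2, 30, 1e-3

# Random gradients of rank at most r, built as products of thin factors.
grads = [rng.standard_normal((m, r)) @ rng.standard_normal((r, n)) for _ in range(T)]

L = eps * np.eye(m) + sum(G @ G.T for G in grads)
R = eps * np.eye(n) + sum(G.T @ G for G in grads)

# Full-matrix statistic of the row-major vectorized gradients, scaled by 1/r.
full = eps * np.eye(m * n) + sum(np.outer(G.reshape(-1), G.reshape(-1)) for G in grads) / r

kron = np.kron(psd_power(L, 0.5), psd_power(R, 0.5))

# The inequality holds when kron - full is positive semidefinite,
# i.e. when its smallest eigenvalue is non-negative up to rounding error.
min_eig_gap = np.linalg.eigvalsh(kron - full).min()
```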

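When gradients have rank at most $r$, the regret bound is:

$$\sum_{t=1}^{T} f_t(W_t) - \sum_{t=1}^{T} f_t(W^\star) \;\le\; \sqrt{2r}\, D\, \mathrm{Tr}\big(L_T^{1/4}\big)\, \mathrm{Tr}\big(R_T^{1/4}\big),$$

where $D = \max_{t} \lVert W_t - W^\star \rVert_F$ and $L_T$, $R_T$ are the accumulated left and right preconditioners, with typical growth of each trace term as $O(T^{1/4})$ and overall regret scaling as $O(\sqrt{T})$ under mild assumptions. The analysis extends, with significant algebraic complexity, to general order-$k$ tensors.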
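The $\sqrt{T}$ scaling can be illustrated on an artificial case in which the same gradient is observed at every step. The sketch assumes the bound takes the form $\sqrt{2r}\, D\, \mathrm{Tr}(L_T^{1/4})\, \mathrm{Tr}(R_T^{1/4})$; the function name `shampoo_regret_bound`, the fixed gradient, and the value $D = 1$ are assumptions made for the example.

```python
import numpy as np

def psd_power(mat, p):
    """mat^p for a symmetric PSD matrix; tiny negative eigenvalues are clipped to zero."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    return (eigvecs * np.clip(eigvals, 0.0, None) ** p) @ eigvecs.T

def shampoo_regret_bound(grads, D, r, eps=0.0):
    """Evaluate sqrt(2r) * D * Tr(L_T^(1/4)) * Tr(R_T^(1/4)) for a list of matrix gradients."""
    m, n = grads[0].shape
    L = eps * np.eye(m) + sum(G @ G.T for G in grads)
    R = eps * np.eye(n) + sum(G.T @ G for G in grads)
    return np.sqrt(2 * r) * D * np.trace(psd_power(L, 0.25)) * np.trace(psd_power(R, 0.25))

# A fixed full-rank 2x2 gradient repeated T times (artificial, for illustration only).
G = np.array([[1.0, 0.5],
              [0.0, 2.0]])

bound_1 = shampoo_regret_bound([G] * 1, D=1.0, r=2)
bound_16 = shampoo_regret_bound([G] * 16, D=1.0, r=2)

# With eps = 0, L_T and R_T scale linearly in T, so each trace scales as T^(1/4)
# and the bound as T^(1/2): going from T = 1 to T = 16 multiplies it by about 4.
ratio = bound_16 / bound_1
```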

3. Empirical Performance in Deep Learning

In the reported experiments, Shampoo converges consistently faster than SGD variants and diagonal adaptive optimizers such as AdaGrad and Adam (Gupta et al., 2018). Key settings examined include:

- Vision: 32-layer ResNet/Inception on CIFAR-10, 55-layer ResNet on CIFAR-100

- Language: Attention-based architectures on the LM1B benchmark

Across these models, Shampoo achieves lower training loss and superior or competitive generalization metrics (test error, perplexity). Per-step runtime remains comparable to SGD, AdaGrad, and Adam, despite the more involved update rule. On benchmark hardware (Tesla K40 GPU), steps/sec for Shampoo closely match diagonal optimizers, thanks to algorithmic shortcuts such as delayed matrix root computation and optimized tensor contraction routines in frameworks like TensorFlow.

4. Practical Implementation and Deployment Considerations

The practical effectiveness of Shampoo is underpinned by:

- Structure-preserving preconditioning which better models inter-parameter correlations than diagonal adaptivity, yet at much lower cost than full-matrix schemes;

- Convex-case convergence guarantees, with empirical success in non-convex deep learning;

- Memory and runtime management through delayed fractional power updates (recompute every 20–100 iterations), and fallback to diagonal variants for extra-large dimensions;

- Tensor framework compatibility, enabling direct integration with standard deep learning tools;

- Model-agnostic applicability—only tensor shape information is required, with no architecture-specific knowledge needed.

A practical limitation is the reliance on matrix fractional inverse powers, typically requiring eigendecomposition, which poses both computational and precision challenges for very large layers. To ameliorate this, Shampoo switches automatically to diagonal approximations in such scenarios.
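The two cost-control measures described above, delayed root computation and a diagonal fallback for large dimensions, can be sketched for the matrix case as follows. Everything here is a hypothetical illustration: the class name `LazyShampoo`, the parameters `root_every` and `max_dim`, their default values, and the choice to keep only the diagonal of an oversized mode's statistic are assumptions, not the reference implementation.

```python
import numpy as np

def inv_root(mat, p):
    """mat^(-1/p) for a symmetric positive-definite matrix, via eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    return (eigvecs * eigvals ** (-1.0 / p)) @ eigvecs.T

class LazyShampoo:
    """Matrix-only Shampoo sketch with delayed roots and a diagonal fallback."""

    def __init__(self, shape, lr=0.1, eps=1e-4, root_every=20, max_dim=1024):
        self.lr, self.root_every = lr, root_every
        self.steps = 0
        self.root_updates = 0
        # A mode larger than max_dim keeps only the diagonal of its statistic.
        self.diag = [dim > max_dim for dim in shape]
        self.stats = [np.full(dim, eps) if is_diag else eps * np.eye(dim)
                      for dim, is_diag in zip(shape, self.diag)]
        self.roots = [None, None]

    def _refresh_roots(self):
        # The expensive part: one eigendecomposition per non-diagonal mode.
        self.roots = [stat ** -0.25 if is_diag else inv_root(stat, 4)
                      for stat, is_diag in zip(self.stats, self.diag)]
        self.root_updates += 1

    def step(self, W, G):
        # Statistics are cheap to accumulate, so they are updated on every step.
        self.stats[0] += (G * G).sum(axis=1) if self.diag[0] else G @ G.T
        self.stats[1] += (G * G).sum(axis=0) if self.diag[1] else G.T @ G
        # Inverse fourth roots are recomputed only every `root_every` steps.
        if self.steps % self.root_every == 0:
            self._refresh_roots()
        self.steps += 1
        left, right = self.roots
        P = left[:, None] * G if self.diag[0] else left @ G
        P = P * right[None, :] if self.diag[1] else P @ right
        return W - self.lr * P

rng = np.random.default_rng(0)
W = rng.standard_normal((3, 5))
opt = LazyShampoo(W.shape, root_every=20, max_dim=4)   # mode 1 (size 5) falls back to diagonal
for _ in range(50):
    G = W                       # gradient of the toy objective 0.5 * ||W||_F^2
    W = opt.step(W, G)
```

In this run the roots are refreshed at steps 0, 20, and 40, so only three of the fifty steps pay for an eigendecomposition; the steps in between reuse stale roots, which is the trade-off the delayed update accepts.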

5. Advances, Extensions, and Directions for Future Research

Several promising pathways are articulated for advancing Shampoo and related tensor-structured second-order optimizers:

- Extension to non-convex optimization regimes, which dominate contemporary deep learning practice.

- Improved diagonal/hybrid approximations for extreme-scale parameter tensors, balancing curvature adaptation and resource footprint.

- Incorporation and analysis of advanced momentum schemes (already present with momentum = 0.9 in some experiments).

- Empirical investigation in broader settings—reinforcement learning, generative modeling—where tensor geometry and optimization interact more subtly.

- Exploration of cross-layer correlation modeling, potentially pushing beyond per-tensor preconditioning to capture deeper network structure.

Future work may also further quantify the precise trade-offs between update frequency, fractional power computation, and overall optimizer robustness, especially in distributed or low-precision execution environments.

6. Broader Significance and Theoretical Impact

Shampoo represents a synthesis of ideas from adaptive online learning, higher-order optimization, and tensor algebra. Its key contribution lies in the operationally efficient exploitation of parameter structure, a principle foundational for scalable second-order optimization. The mathematical insights into Kronecker product preconditioning and trace inequalities underpin both its computational efficiency and its regret guarantees.

The convergence rate, memory–runtime trade-off, and model-agnostic deployment have made Shampoo a reference method for high-dimensional tensor optimization, with ongoing influence on the design of newer block-structured and distributed stochastic optimizers in large-scale deep learning.

7. Comparative Summary Table

| Optimizer | Preconditioner Type | Empirical Convergence | Runtime per Step |
|---|---|---|---|
| Shampoo | Per-mode (Kronecker-factor) | Fastest (CIFAR, LM1B) | Comparable to SGD/Adam |
| AdaGrad/Adam | Diagonal per-parameter | Slower | Fastest |
| Full-matrix AdaGrad | Full dense matrix | (Impractical at scale) | (Prohibitive) |

This summary table collates the computational and empirical advantages of Shampoo relative to commonly used adaptive methods, as presented in (Gupta et al., 2018).
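The storage gap between the three preconditioner types in the table can be made concrete with a small count of stored entries per parameter tensor. The function name `preconditioner_entries` and the example layer shape are illustrative assumptions; the counts follow from the preconditioner sizes alone (one entry per parameter for diagonal methods, one $n_i \times n_i$ matrix per mode for Shampoo, and a $d \times d$ matrix for full-matrix methods).

```python
def preconditioner_entries(shape):
    """Number of stored preconditioner entries for one parameter tensor under each scheme."""
    d = 1
    for n_i in shape:
        d *= n_i
    return {
        "diagonal": d,                                  # one entry per parameter
        "shampoo": sum(n_i * n_i for n_i in shape),     # one n_i x n_i matrix per mode
        "full_matrix": d * d,                           # one d x d matrix
    }

counts = preconditioner_entries((1024, 512))
# counts == {"diagonal": 524288, "shampoo": 1310720, "full_matrix": 274877906944}
```

For this hypothetical 1024 × 512 layer, the per-mode preconditioners cost about 2.5 times the diagonal state, while the full matrix would need roughly 2.7 × 10^11 entries.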