KL-Shampoo Optimizer

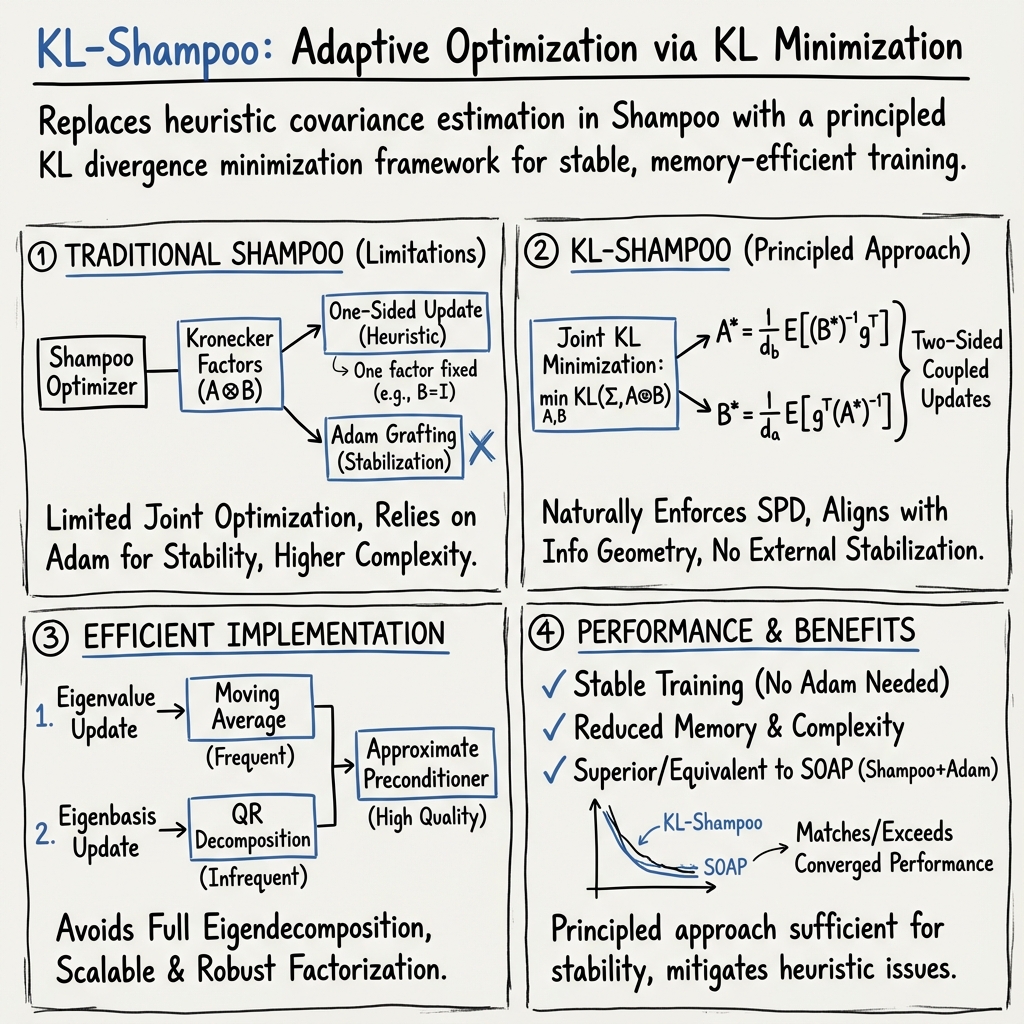

- KL-Shampoo is an adaptive optimization algorithm that reformulates gradient covariance estimation as a KL divergence minimization to enforce symmetric positive-definite preconditioners.

- It eliminates heuristic Adam grafting and reduces memory overhead by employing two-sided preconditioner updates and moving average-based eigenvalue estimation.

- KL-Shampoo demonstrates superior or equivalent performance in neural network training tasks, with applications ranging from language models to vision and multi-modal architectures.

KL-Shampoo is an adaptive optimization algorithm for training neural networks, designed to improve upon the Shampoo optimizer by reformulating second-moment (covariance) estimation as a Kullback-Leibler (KL) divergence minimization problem. This information-geometric perspective provides a more principled foundation for covariance estimation, removes specific heuristics from the original Shampoo approach, and enables stable training without grafting from Adam, thereby reducing memory overhead and complexity.

1. Formal Perspective: Covariance Estimation via KL Minimization

The original Shampoo algorithm uses a Kronecker-factored approximation to estimate the covariance of the gradients for each tensor-valued parameter. While this second-moment estimation has been traditionally analyzed with the Frobenius norm, such a view does not ensure that the preconditioner remains symmetric positive-definite (SPD), a property essential for meaningful descent directions during optimization.

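KL-Shampoo reframes the problem through the Kullback-Leibler divergence between zero-mean Gaussian distributions, where the second moment is interpreted as a covariance matrix. If $\Sigma$ denotes the empirical covariance and $S$ the preconditioner (Kronecker-factored as $S = S_a \otimes S_b$), the KL divergence between $\mathcal{N}(0, \Sigma)$ and $\mathcal{N}(0, S)$ is

$$
\mathrm{KL}\big(\mathcal{N}(0,\Sigma)\,\|\,\mathcal{N}(0,S)\big) = \frac{1}{2}\Big(\mathrm{Tr}(S^{-1}\Sigma) - \log\det(S^{-1}\Sigma) - d\Big),
$$

where $d$ is the dimension of the vectorized gradient.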

Minimizing this functional automatically enforces SPD constraints and aligns the update direction with the most information-theoretically efficient choice.
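As a rough illustration of this objective, the sketch below evaluates the standard KL divergence between two zero-mean Gaussians, $\tfrac{1}{2}\big(\mathrm{Tr}(S^{-1}\Sigma) - \log\det(S^{-1}\Sigma) - d\big)$, for a Kronecker-factored second argument. The function name `gaussian_kl`, the synthetic gradients, the row-major vectorization, and the small damping term are all assumptions made for this example and are not taken from the source.

```python
import numpy as np

def gaussian_kl(sigma, s):
    """KL( N(0, sigma) || N(0, s) ) for SPD matrices of equal size."""
    d = sigma.shape[0]
    # Solve s @ x = sigma to obtain S^{-1} Sigma without forming an explicit inverse.
    s_inv_sigma = np.linalg.solve(s, sigma)
    _, logdet = np.linalg.slogdet(s_inv_sigma)
    return 0.5 * (np.trace(s_inv_sigma) - logdet - d)

# Synthetic matrix-shaped "gradients" (hypothetical data for illustration only).
rng = np.random.default_rng(0)
d_a, d_b = 3, 2
grads = rng.standard_normal((500, d_a, d_b))

# Row-major vectorization pairs with the ordering np.kron(S_a, S_b).
g = grads.reshape(500, d_a * d_b)
sigma = g.T @ g / 500 + 1e-6 * np.eye(d_a * d_b)  # empirical second moment, lightly damped

# KL to an identity Kronecker-factored preconditioner, and KL of sigma to itself.
kl_identity = gaussian_kl(sigma, np.kron(np.eye(d_a), np.eye(d_b)))
kl_self = gaussian_kl(sigma, sigma)
print(kl_identity, kl_self)
```

The divergence is finite only when both arguments are SPD, which is one way to see why minimizing it keeps the preconditioner inside the SPD cone.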

2. Identified Limitation in Traditional Shampoo

Analysis reveals that the canonical Shampoo procedure updates only one Kronecker factor while holding the other fixed (typically to the identity), which amounts to a one-sided KL minimization. This does not fully solve the joint problem for both factors. Moreover, Shampoo typically relies on "learning rate grafting," where Adam's updates stabilize training by correcting the scale of the optimizer's steps. This heuristic introduces additional memory requirements and complexity.

Therefore, while Shampoo can stably train neural networks using these heuristics, its update schedule and covariance estimation are not jointly optimal when measured by KL divergence.
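To make the one-sided claim concrete, the following worked derivation may help. The notation is assumed for this sketch rather than taken from the source: $G \in \mathbb{R}^{d_a \times d_b}$ is the gradient matrix, $g = \mathrm{vec}(G)$ with a vectorization convention matching $S_a \otimes S_b$, and $\Sigma = \mathbb{E}[gg^\top]$. Up to terms that do not depend on the factors,

$$
\mathrm{KL}\big(\mathcal{N}(0,\Sigma)\,\|\,\mathcal{N}(0,S_a \otimes S_b)\big) = \frac{1}{2}\Big(\mathbb{E}\big[\mathrm{Tr}(S_a^{-1} G\, S_b^{-1} G^\top)\big] + d_b \log\det S_a + d_a \log\det S_b\Big) + \text{const}.
$$

Holding $S_b$ fixed and setting the gradient with respect to $S_a$ to zero gives

$$
-S_a^{-1}\,\mathbb{E}\big[G\, S_b^{-1} G^\top\big]\,S_a^{-1} + d_b\, S_a^{-1} = 0
\quad\Longrightarrow\quad
S_a = \frac{1}{d_b}\,\mathbb{E}\big[G\, S_b^{-1} G^\top\big].
$$

With $S_b = I$ this reduces to $S_a = \tfrac{1}{d_b}\,\mathbb{E}[G G^\top]$, which is the familiar Shampoo statistic up to a scalar. Under these assumptions, the one-sided solution ignores how the other factor reshapes the statistic.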

3. KL-Shampoo Design: Two-Sided Preconditioner Updates

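KL-Shampoo is derived by considering the joint KL minimization over both Kronecker factors. The ideal update seeks to solve

$$
\min_{S_a \succ 0,\; S_b \succ 0}\ \mathrm{KL}\big(\mathcal{N}(0, \mathbb{E}[gg^\top])\,\|\,\mathcal{N}(0, S_a \otimes S_b)\big), \qquad g = \mathrm{vec}(G),
$$

which leads to the coupled equations

$$
S_a = \frac{1}{d_b}\,\mathbb{E}\big[G\,S_b^{-1}\,G^\top\big], \qquad S_b = \frac{1}{d_a}\,\mathbb{E}\big[G^\top S_a^{-1}\,G\big],
$$

where $G \in \mathbb{R}^{d_a \times d_b}$ is the gradient tensor, and $1/d_b$ and $1/d_a$ are the appropriate dimensional scalings.

Direct implementation is impractical due to coupled dependencies, so KL-Shampoo employs a moving average update with weight $\beta$:

$$
S_a \leftarrow (1-\beta)\,S_a + \frac{\beta}{d_b}\,G\,S_b^{-1}\,G^\top, \qquad S_b \leftarrow (1-\beta)\,S_b + \frac{\beta}{d_a}\,G^\top S_a^{-1}\,G.
$$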

These updates ensure that the scaling of the step sizes is naturally controlled by the dimensionality of the factors, resolving the mis-scaling inherent in Shampoo and eliminating the necessity for external stabilization.
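The two-sided moving-average idea can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's implementation: each factor is updated with a statistic whitened by the other factor and divided by the other factor's dimension, both factors use the previous iterate of the other (a simultaneous update), and the function name `two_sided_update`, the weight `beta`, and the random gradients are hypothetical.

```python
import numpy as np

def two_sided_update(s_a, s_b, grad, beta=0.1):
    """One moving-average step for both Kronecker factors (illustrative sketch)."""
    d_a, d_b = grad.shape
    # Statistic for S_a: G S_b^{-1} G^T, scaled by 1/d_b.
    stat_a = grad @ np.linalg.solve(s_b, grad.T) / d_b
    # Statistic for S_b: G^T S_a^{-1} G, scaled by 1/d_a (uses the old S_a).
    stat_b = grad.T @ np.linalg.solve(s_a, grad) / d_a
    new_a = (1.0 - beta) * s_a + beta * stat_a
    new_b = (1.0 - beta) * s_b + beta * stat_b
    return new_a, new_b

# Run a few steps on synthetic gradients, starting from identity factors.
rng = np.random.default_rng(0)
d_a, d_b = 4, 3
s_a, s_b = np.eye(d_a), np.eye(d_b)
for _ in range(50):
    grad = rng.standard_normal((d_a, d_b))
    s_a, s_b = two_sided_update(s_a, s_b, grad)

print(np.linalg.eigvalsh(s_a).min(), np.linalg.eigvalsh(s_b).min())
```

Each step adds a positive semi-definite term to a positively weighted SPD matrix, so both factors stay SPD as long as they start SPD. A practical optimizer would avoid the dense solves, which is where the eigenvalue-based scheme comes in.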

Implementation is made computationally efficient by updating the eigenvalues with moving averages and infrequently updating the eigenbasis via QR decomposition. This approach avoids the computational expense of regular eigendecomposition and leverages approximate but robust matrix factorizations to maintain high-quality preconditioners.

4. Performance and Empirical Improvements

Experimental results indicate that KL-Shampoo achieves performance superior or equivalent to Shampoo with Adam grafting (SOAP) in neural network pretraining tasks. For example, in training RWKV7 models, KL-Shampoo stably trains where Shampoo with power may fail without Adam stabilization. KL-Shampoo eliminates the need for this grafting, reduces memory overhead, and matches or exceeds the converged performance of SOAP.

This demonstrates that principled covariance estimation grounded in KL minimization is sufficient for optimizer stabilization, and the moving-average and dimension-normalization features mitigate the issues found in prior heuristic approaches.

5. Practical Implementation and Computational Strategies

KL-Shampoo's practical scheme requires efficient inversion and updating of the Kronecker factors. Eigenvalue estimation is performed with a moving average using a potentially outdated eigenbasis, with the basis itself refreshed occasionally using a QR-based method. This dual update schedule is intended to keep the preconditioner close to optimal in KL divergence while keeping computational cost scalable. Although a full eigendecomposition provides both the eigenbasis and the eigenvalues, the QR method offers a lower-cost alternative when the eigenbasis is refreshed only infrequently.
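The split between frequent eigenvalue updates and occasional basis refreshes can be illustrated on a single factor. The sketch below is an assumption-laden toy, not the paper's procedure: the refresh is one orthogonal-iteration step (QR of the factor times the current basis), the eigenvalues are a moving average of the diagonal of the statistic rotated into the possibly stale basis, and a fixed SPD matrix stands in for both the factor and the per-step statistic. The helper names `refresh_eigenbasis` and `update_eigenvalues` are hypothetical.

```python
import numpy as np

def refresh_eigenbasis(factor, q):
    """One orthogonal-iteration step: orthonormalize factor @ q via QR."""
    q_new, _ = np.linalg.qr(factor @ q)
    return q_new

def update_eigenvalues(lam, q, stat, beta):
    """Moving average of diag(q^T stat q), using the (possibly stale) basis q."""
    est = np.einsum("ij,ik,kj->j", q, stat, q)
    return (1.0 - beta) * lam + beta * est

# Toy SPD factor with a known spectrum (illustrative data only).
rng = np.random.default_rng(0)
u, _ = np.linalg.qr(rng.standard_normal((3, 3)))
factor = u @ np.diag([4.0, 2.0, 1.0]) @ u.T

q = np.eye(3)      # initial eigenbasis guess
lam = np.ones(3)   # initial eigenvalue estimates
for step in range(200):
    lam = update_eigenvalues(lam, q, factor, beta=0.5)
    if step % 2 == 0:  # refresh the basis less often than the eigenvalues
        q = refresh_eigenbasis(factor, q)

print(np.sort(lam))
```

Each QR refresh costs one matrix product and one factorization, and the eigenvalue estimates remain usable between refreshes because they are always measured in whatever basis is currently stored.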

6. Applications and Extension Potential

KL-Shampoo is particularly applicable to the pretraining of neural networks with tensor-valued parameters, where the KL-based framework generalizes naturally to higher-order tensor structures. Beyond LLM pretraining, the algorithm is suitable for large-scale vision models, multi-modal architectures, and any domain where structured second moment estimation is beneficial.

Future directions suggested by the paper include refining the moving-average update scheme, leveraging proximal-gradient methods for further efficiency, and extending the QR-based approach to more general tensor-valued preconditioners. A more in-depth theoretical analysis of KL-Shampoo's convergence under the joint KL minimization framework remains an area for further investigation.

7. Broader Context within Optimization Literature

KL-Shampoo sits at the intersection of covariance estimation, matrix/tensor factorization, and information geometry. Its design is informed by limitations uncovered through KL analysis and the desire to remove heuristic stabilization procedures such as Adam grafting. This shift toward principled optimizer parameter estimation demonstrates a growing trend in the optimization literature: leveraging information-theoretic objectives (such as KL divergence) for robust, scalable, and theoretically justified algorithmic improvements in deep learning optimizers (Lin et al., 3 Sep 2025).