LLM-Adapters: Modular & Efficient Fine-Tuning

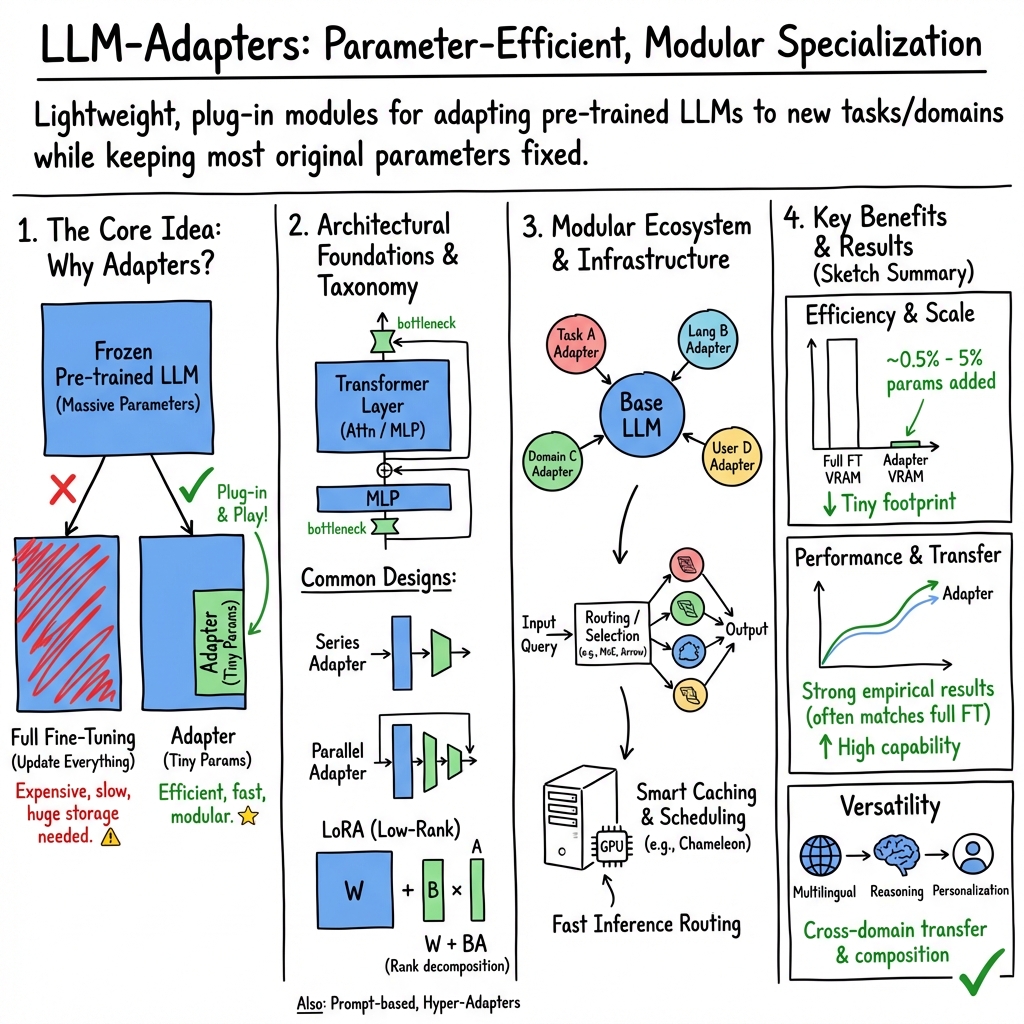

- LLM-Adapters are lightweight plug-in modules that enable efficient adaptation by inserting small neural networks into transformer layers.

- They utilize series, parallel, and low-rank architectures to optimize performance while keeping most model parameters fixed.

- Empirical studies show adapters enhance domain specialization, multilingual support, and user personalization with minimal training overhead.

LLM-Adapters are lightweight, plug-in neural modules that enable parameter-efficient adaptation of pre-trained large language models (LLMs) to new tasks, domains, modalities, or user requirements, while typically keeping most of the original model parameters fixed. They encompass a broad family of architectural augmentations, inserted at key points in the transformer architecture, that facilitate rapid, low-resource, and modular specialization of LLMs across diverse use cases, including NLP, multilingual processing, multimodal learning, user personalization, and efficient serving.

1. Architectural Foundations and Adapter Taxonomy

LLM-Adapters are typically realized as small bottleneck neural networks inserted after or in parallel with key components (e.g., multi-head attention or feedforward sublayers) within transformer architectures. The most prevalent designs include:

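- Series Adapters: Inserted sequentially, usually after the MLP or attention layer, with a residual connection. Example: $H_o \leftarrow H_o + f(H_o W_{\text{down}})\, W_{\text{up}}$, where $f$ is a nonlinearity and $W_{\text{down}}$, $W_{\text{up}}$ are learnable projections (Hu et al., 2023).

- Parallel Adapters: Run in parallel to the host layer, enhancing capacity without substantially interfering with the main computation: $H_o \leftarrow H_o + f(H_i W_{\text{down}})\, W_{\text{up}}$, where $H_i$ is the input to the host layer and $H_o$ its output (Hu et al., 2023).

- Low-Rank Adapters (LoRA): Decompose adaptation into low-rank updates $W_0 + \Delta W = W_0 + BA$, where $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$ and $r \ll \min(d, k)$, enabling efficient parameter usage (Hu et al., 2023, Liu et al., 22 Jan 2025).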

- Prompt-based Adapters: Leverage learnable prompt vectors injected into the input or hidden states, effectively tuning the activation space the frozen model operates in (Hu et al., 2023).

- Hyper-Adapters: Use hyper-networks conditioned on meta-information (such as language or layer identity) to generate the weights of adapters dynamically, enabling scalable, parameter-light adaptation to multiple languages or domains (Baziotis et al., 2022).
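The series, parallel, and low-rank designs listed above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation from any cited work: the host sublayer is reduced to a single frozen linear map, ReLU stands in for the nonlinearity, activations are row vectors, and all names (`series_adapter`, `parallel_adapter`, `lora_linear`, `W_down`, `W_up`, `B`, `A`) and dimensions are chosen here for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r = 16, 4  # hidden size and bottleneck width / rank (illustrative values)


def relu(x):
    return np.maximum(x, 0.0)


def series_adapter(h_out, W_down, W_up):
    """Series adapter: bottleneck applied to the sublayer output, plus residual."""
    return h_out + relu(h_out @ W_down) @ W_up


def parallel_adapter(h_in, h_out, W_down, W_up):
    """Parallel adapter: bottleneck reads the sublayer input, adds to its output."""
    return h_out + relu(h_in @ W_down) @ W_up


def lora_linear(h_in, W0, B, A):
    """LoRA: frozen weight W0 plus a trainable low-rank update B @ A."""
    return h_in @ W0 + (h_in @ B) @ A


h_in = rng.normal(size=(2, d))   # two token vectors
W0 = rng.normal(size=(d, d))     # frozen host weight (stand-in for a sublayer)
h_out = h_in @ W0                # host sublayer output

# One factor of each adapter is zero-initialised, so every variant
# starts out reproducing the frozen model exactly.
W_down = rng.normal(size=(d, r)) * 0.1
W_up = np.zeros((r, d))
B = rng.normal(size=(d, r)) * 0.1
A = np.zeros((r, d))

assert np.allclose(series_adapter(h_out, W_down, W_up), h_out)
assert np.allclose(parallel_adapter(h_in, h_out, W_down, W_up), h_out)
assert np.allclose(lora_linear(h_in, W0, B, A), h_out)
```

Only `W_down`, `W_up`, `B`, and `A` would receive gradients during training; `W0` stays fixed. Because the LoRA update is linear, `B @ A` can be merged into `W0` after training, whereas the bottleneck adapters keep their nonlinearity and remain separate modules.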

Adapter architectures can be further extended with gating (MoE), composition, or alignment across modalities and representations (Li et al., 26 Aug 2025, Ebrahimi et al., 2024, Ostapenko et al., 2024, Jukić et al., 26 Sep 2025), with hybrid and compositional forms increasingly prominent.

2. Parameter Efficiency and Modular Adaptation

Adapters provide a mechanism to introduce new capabilities without the need for full-model retraining or storage of multiple full-parameter copies:

- Typical parameter count increases range from 0.5% to 5% per domain or task, with 2% often reported for a first new task and 13% in total after several tasks, due to stacking or combinatorial additions (Lee et al., 2020).

- Hyper-adapters can compress language- or task-specific capacity, achieving up to 12× better parameter efficiency than per-language adapters, scale sub-linearly in multilingual or multi-task settings, and enable positive transfer via meta-conditioned generation (Baziotis et al., 2022).

- Modular adapter libraries, built via model-based clustering (MBC) or task-specific training, allow LoRA modules to be dynamically reused and routed to new tasks or domains, with zero-shot and supervised routing mechanisms (e.g., Arrow) achieving strong generalization (Ostapenko et al., 2024). For instance, Arrow uses SVD-based “prototypes” to select adapters at inference, and cluster-based adapters optimize for parameter transfer (Ostapenko et al., 2024).
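To make the scale of these overheads concrete, the following back-of-the-envelope calculation counts the trainable parameters that a LoRA pair or a bottleneck adapter adds next to a single weight matrix. The dimensions (a 4096×4096 matrix, rank 8, bottleneck width 64) and the helper names are assumptions chosen for illustration, not figures from the cited works, and the resulting per-matrix fractions are not directly comparable to the whole-model percentages quoted above.

```python
def lora_param_count(d_in, d_out, rank):
    """Trainable parameters in a LoRA pair: B is d_in x rank, A is rank x d_out."""
    return rank * (d_in + d_out)


def bottleneck_param_count(d_model, bottleneck, with_bias=True):
    """Trainable parameters in a down-projection / up-projection adapter."""
    n = 2 * d_model * bottleneck
    if with_bias:
        n += bottleneck + d_model
    return n


d = 4096                      # illustrative hidden size
full = d * d                  # parameters in one frozen d x d weight matrix
lora = lora_param_count(d, d, rank=8)
bottleneck = bottleneck_param_count(d, bottleneck=64)

lora_fraction = lora / full
bottleneck_fraction = bottleneck / full

print(f"full matrix: {full:,} parameters")
print(f"LoRA (r=8):  {lora:,} parameters ({lora_fraction:.2%} of the matrix)")
print(f"bottleneck:  {bottleneck:,} parameters ({bottleneck_fraction:.2%} of the matrix)")
```

The LoRA count grows linearly in the rank and in the sum of the matrix dimensions, while the frozen matrix grows with their product, which is why the relative overhead shrinks as models get wider.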

Adapters can also be “composed” algebraically, as with CompAs, which turns context into compositional adapter parameters whose sum approximates the effect of concatenated contexts—enabling efficient scaling beyond context window limits and robust multi-chunk integration (Jukić et al., 26 Sep 2025).
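The algebra behind summing adapters can be illustrated with plain low-rank factors. The sketch below shows only a linear-algebra identity: adding two low-rank updates equals a single low-rank update whose factors are concatenated, with rank at most the sum of the individual ranks. It does not reproduce how CompAs maps context to adapter parameters; the function name `compose_lora` and all shapes are assumptions made for this example.

```python
import numpy as np


def compose_lora(adapters):
    """Merge several (B, A) pairs into one pair whose product is the sum of products.

    Each B has shape (d, r_i) and each A has shape (r_i, k).
    """
    B_cat = np.concatenate([B for B, _ in adapters], axis=1)  # (d, sum of r_i)
    A_cat = np.concatenate([A for _, A in adapters], axis=0)  # (sum of r_i, k)
    return B_cat, A_cat


rng = np.random.default_rng(0)
d, k, r = 8, 8, 2
B1, A1 = rng.normal(size=(d, r)), rng.normal(size=(r, k))  # adapter for chunk 1
B2, A2 = rng.normal(size=(d, r)), rng.normal(size=(r, k))  # adapter for chunk 2

B_cat, A_cat = compose_lora([(B1, A1), (B2, A2)])

# The concatenated factors reproduce the summed update exactly.
assert np.allclose(B_cat @ A_cat, B1 @ A1 + B2 @ A2)
```

Because the sum is order-independent, adapters composed this way can be added or subtracted individually, which is consistent with the reversibility noted for compositional approaches.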

3. Empirical Performance and Task Adaptation

Adapters consistently yield strong empirical results for domain, task, user, and modality adaptation:

- Domain Specialization: Adapter-based models frequently outperform full fine-tuning baselines and achieve lower WERs or error on speech (ASR) or NLP benchmarks, even using a fraction of the parameter update or training time (e.g., up to 8.25 percentage point WER improvement in general ASR, up to 2.46% in specialized music ASR) (Lee et al., 2020).

- Task and Data Efficiency: On reasoning and commonsense tasks (e.g., GSM-8K, MMLU, BBH), adapter-based fine-tuning of smaller LLMs (7B, 13B) with LoRA or series/parallel modules yields performance that matches or exceeds larger (175B) models in practical in-distribution (ID) or out-of-distribution (OOD) evaluation regimes (Hu et al., 2023).

- Multilinguality: Adapter techniques (standard, hyper-adapter, vocabulary-based, or embedding-based) afford rapid inclusion of new languages or scripts, boost transfer to underrepresented (or unseen) languages, and resolve fragmentation and inefficiency in tokenization and representation, especially for low-resource languages or those absent from the pretraining data (Gurgurov et al., 2024, Han et al., 2024, Jiang et al., 12 Feb 2025).

- Multimodal and Cross-lingual Transfer: Gated cross-modal adapters enable efficient visual-language understanding with minimal retraining, as seen in CROME; modular adapters also permit incremental enrichment of multilingual reasoning or generation without catastrophic forgetting (Ebrahimi et al., 2024, Jiang et al., 12 Feb 2025).

- Personalization and User Behavior: Persona-specific low-rank adapters fine-tuned over small language models (SLMs) can efficiently capture diverse user traits, outperforming pooled or single-adapter alternatives with lower RMSE and MAE on recommendation tasks, and support scalable, plug-and-play modular deployment (Thakur et al., 18 Aug 2025).

4. Mechanisms for Efficiency, Specialization, and Knowledge Transfer

Several mechanisms underpin the efficiency and versatility of LLM-Adapters:

- Residual and Bottleneck Design: Small bottleneck MLPs with residual connections after each main layer minimize the magnitude of changes while maintaining model expressiveness (Hu et al., 2023, Alabi et al., 2024).

- Gradual and Distributed Adaptation: Adaptation to new languages, tasks, or domains is typically gradual and distributed across layers, with the source domain dominating early representations and the target (or new) domain emerging only in later layers. Importantly, many adapters can be skipped or dropped in intermediate layers with minimal loss of performance, suggesting opportunities for inference optimization (Alabi et al., 2024).

- Knowledge Transfer: Adapters engage in knowledge transfer across related domains, tasks, or languages. For instance, hyper-adapters encode language relatedness, enabling fine-tuned weights for one language to generalize to typologically similar languages with negligible loss in BLEU (Baziotis et al., 2022). In modular setups, shared adapters facilitate cross-lingual support, with high-resource domains bolstering low-resource ones (Li et al., 26 Aug 2025).

- Vocabulary and Embedding Surgery: Adapter modules can efficiently alter or extend the LLM vocabulary, learning linear combinations of pre-existing embeddings for new tokens without model retraining, significantly boosting multilingual NLU and MT, especially for Latin-script and highly fragmented languages (Han et al., 2024, Jiang et al., 12 Feb 2025).
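The vocabulary extension idea, where a new token's embedding is a linear combination of existing embeddings, can be sketched as follows. This is an assumption-laden illustration: in the cited work the combination weights are learned, whereas here they default to a uniform average over the sub-token pieces the new token replaces. The function name `new_token_embedding` and the toy embedding matrix are hypothetical.

```python
import numpy as np


def new_token_embedding(E, piece_ids, weights=None):
    """Build an embedding for a new token from rows of the frozen matrix E.

    piece_ids: indices of existing tokens the new token would otherwise split into.
    weights:   combination weights (learned in practice; uniform here by default).
    """
    pieces = E[piece_ids]                                  # (n_pieces, dim)
    if weights is None:
        weights = np.full(len(piece_ids), 1.0 / len(piece_ids))
    weights = np.asarray(weights, dtype=float)
    return weights @ pieces                                # (dim,)


# Toy frozen embedding matrix: 4 existing tokens, dimension 3.
E = np.arange(12, dtype=float).reshape(4, 3)

# A new token that the old tokenizer split into tokens 1 and 3.
e_new = new_token_embedding(E, [1, 3])

# Append the new row; the original rows stay untouched.
E_ext = np.vstack([E, e_new])
assert E_ext.shape == (5, 3)
assert np.array_equal(E_ext[:4], E)
```

Only the combination weights need to be trained, so the original embedding rows and the rest of the model remain frozen.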

5. System and Infrastructure-Level Optimizations

Adapters both unlock and require architectural changes to LLM serving infrastructure:

- Dynamic/Token-wise Routing: Systems such as LoRA-Switch implement token-wise routing at inference and fused kernel launches, reducing inference latency by more than 2.4× over prior MoE or dynamically routed adapter baselines, with negligible reduction in model accuracy (Kong et al., 2024).

- Caching and Scheduling for Multi-Adapter Environments: Chameleon introduces intelligent caching of popular adapters in idle GPU memory and adapter-aware non-preemptive multi-queue scheduling to address adapter loading overhead and workload heterogeneity. By optimizing both cache eviction (e.g., frequency, recency, and size-based heuristics) and batch admission (e.g., weighted request size metrics), Chameleon reduces P99 latency by 80.7% and improves throughput by 1.5× under realistic production loads (Iliakopoulou et al., 2024).

- Reversible and Multiplexed Adaptation: Data multiplexing using reversible adapters (e.g., RevMUX) enables batch-level inference speedups of 154–161% versus naive mini-batch scheduling, with parameter-efficient, symmetric adapter architectures that guarantee individual sample recovery after shared processing (Xu et al., 2024).
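The caching idea above, keeping popular adapters resident and evicting by a mix of frequency, recency, and size, can be sketched with a small in-memory cache. This is a hypothetical toy policy written for illustration, not Chameleon's actual algorithm: the class names, the scoring formula, and the use of a logical clock are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class CachedAdapter:
    size_mb: float
    hits: int = 0
    last_used: int = 0


class AdapterCache:
    """Toy adapter cache that evicts the entry with the lowest keep-score."""

    def __init__(self, capacity_mb):
        self.capacity_mb = capacity_mb
        self.entries = {}
        self.clock = 0  # logical time, advanced once per request

    def used_mb(self):
        return sum(e.size_mb for e in self.entries.values())

    def _score(self, entry):
        # Hypothetical heuristic: frequent, recent, and small adapters score high.
        age = self.clock - entry.last_used
        return entry.hits / ((1 + age) * entry.size_mb)

    def request(self, name, size_mb):
        """Return True on a cache hit, False if the adapter had to be loaded."""
        self.clock += 1
        if name in self.entries:
            entry = self.entries[name]
            entry.hits += 1
            entry.last_used = self.clock
            return True
        if size_mb > self.capacity_mb:
            return False  # too large to cache at all; serve without caching
        while self.entries and self.used_mb() + size_mb > self.capacity_mb:
            victim = min(self.entries, key=lambda n: self._score(self.entries[n]))
            del self.entries[victim]
        self.entries[name] = CachedAdapter(size_mb, hits=1, last_used=self.clock)
        return False


cache = AdapterCache(capacity_mb=100)
cache.request("adapter-a", 40)   # miss, loaded
cache.request("adapter-a", 40)   # hit
cache.request("adapter-b", 40)   # miss, loaded
cache.request("adapter-c", 40)   # miss; one resident adapter must be evicted
print(sorted(cache.entries))
```

A production system would also need to account for adapters that are in use by in-flight requests and for the cost of transferring weights to the GPU, neither of which this sketch models.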

6. Extended Applications and Future Directions

LLM-Adapters continue to evolve as foundational enablers for scalable, customizable, and resource-aware LLM deployment:

- Neural Architecture Search (NAS) and Compression: NAS frameworks integrating elastic low-rank adapters with network compression and sparsity yield models with up to 80% reduced parameter footprints and inference speedups of up to 1.41× versus standard LoRA, while maintaining accuracy (Liu et al., 22 Jan 2025).

- Ensemble and Gradient-based Expert Adapters: ELREA (Ensembles of Low-Rank Expert Adapters) clusters domain data by gradient direction (i.e., conflict minimization) and trains expert adapters for each cluster, combining their outputs at inference via gradient-aligned routing and outperforming full-data LoRA and typical ensemble baselines (Li et al., 31 Jan 2025).

- Meta-Learning and Contextual Composition: CompAs achieves compositional adaptation by mapping context or instructions to LoRA-like parameter updates (adapters) that can be algebraically combined—in lieu of long prompt concatenation—enabling efficient scaling, improved robustness, reversibility, and secure auditing (Jukić et al., 26 Sep 2025).

- Cross-Modal, Non-NLP, and Emerging Domains: CROME demonstrates that pre-LM alignment via gated cross-modal adapters facilitates scalable, parameter-efficient multimodal learning, and systems such as LLM4WM leverage mixture-of-expert low-rank adapters for physical wireless channel multi-tasking, with strong results in full- and few-shot regimes (Ebrahimi et al., 2024, Liu et al., 22 Jan 2025).
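Several of the directions above combine multiple low-rank experts through a gate. The following is a generic sketch of a softmax-gated mixture of LoRA experts with optional top-k selection per token. It is not the routing used by ELREA (which is described as gradient-aligned) or the specific design of LLM4WM; the function name `moe_lora_forward`, the linear gate `W_gate`, and all shapes are assumptions for illustration.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def moe_lora_forward(h, W0, experts, W_gate, top_k=None):
    """Frozen linear layer plus a gated sum of LoRA experts.

    h: (n_tokens, d); W0: (d, k); experts: list of (B, A) with B (d, r), A (r, k);
    W_gate: (d, n_experts). Returns the layer output and the per-token gates.
    """
    gates = softmax(h @ W_gate)                          # (n_tokens, n_experts)
    n_experts = gates.shape[-1]
    if top_k is not None and top_k < n_experts:
        # Zero out all but the top_k largest gates per token, then renormalise.
        drop = np.argsort(gates, axis=-1)[:, : n_experts - top_k]
        np.put_along_axis(gates, drop, 0.0, axis=-1)
        gates = gates / gates.sum(axis=-1, keepdims=True)
    out = h @ W0                                         # frozen path
    for e, (B, A) in enumerate(experts):
        out = out + gates[:, e : e + 1] * ((h @ B) @ A)  # weighted expert update
    return out, gates


rng = np.random.default_rng(0)
n, d, k, r, n_exp = 5, 8, 8, 2, 3
h = rng.normal(size=(n, d))
W0 = rng.normal(size=(d, k))
experts = [(rng.normal(size=(d, r)), rng.normal(size=(r, k))) for _ in range(n_exp)]
W_gate = rng.normal(size=(d, n_exp))

out, gates = moe_lora_forward(h, W0, experts, W_gate, top_k=2)
assert out.shape == (n, k)
assert np.allclose(gates.sum(axis=-1), 1.0)
```

With `top_k=1` each token is served by a single expert, which keeps the per-token compute close to that of one LoRA module at the cost of a hard routing decision.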

Adapters have also been leveraged for context parameterization, preference alignment (Q-Adapter), knowledge graph integration, and beyond, consistently yielding improved adaptability, modularity, and practical deployment characteristics in resource-constrained or rapidly changing real-world settings.

In summary, the LLM-Adapter paradigm encompasses a highly active spectrum of architectural, algorithmic, and systems-level research targeting parameter-efficient, modular, and composable specialization of LLMs. Adapters achieve substantial reductions in training cost and resource footprint while maximizing specialization, flexibility, and knowledge transfer for dynamic, heterogeneous, and multi-domain environments. All reported claims, empirical performance figures, parameter counts, and technical formulas are sourced directly from the referenced research and should be reviewed in detail in the cited works for rigorous application and further development.