Dr. Zero: Self-Evolving Search Agents without Training Data

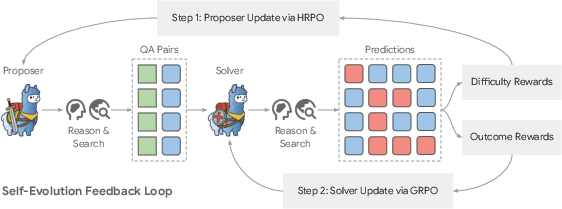

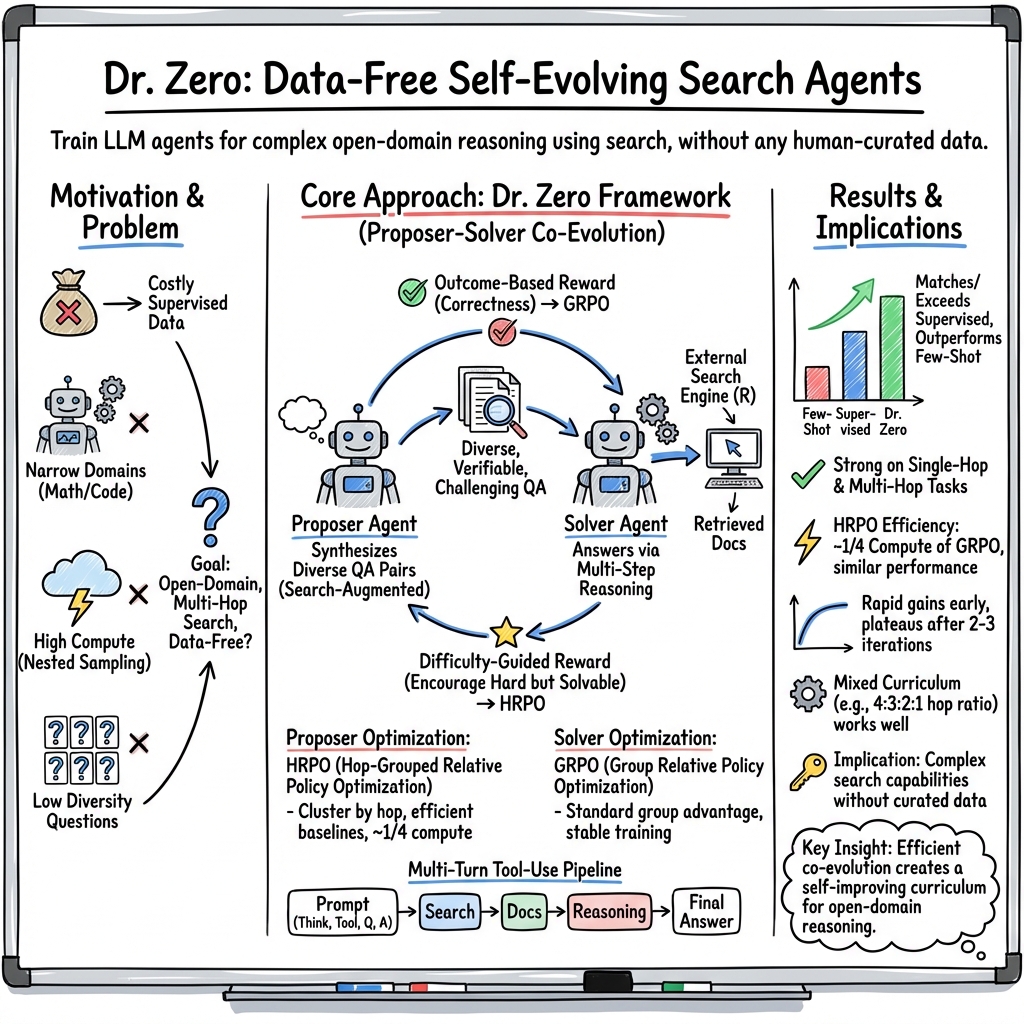

Abstract: As high-quality data becomes increasingly difficult to obtain, data-free self-evolution has emerged as a promising paradigm. This approach allows LLMs to autonomously generate and solve complex problems, thereby improving their reasoning capabilities. However, multi-turn search agents struggle in data-free self-evolution due to the limited question diversity and the substantial compute required for multi-step reasoning and tool use. In this work, we introduce Dr. Zero, a framework enabling search agents to effectively self-evolve without any training data. In particular, we design a self-evolution feedback loop where a proposer generates diverse questions to train a solver initialized from the same base model. As the solver evolves, it incentivizes the proposer to produce increasingly difficult yet solvable tasks, thus establishing an automated curriculum to refine both agents. To enhance training efficiency, we also introduce hop-grouped relative policy optimization (HRPO). This method clusters structurally similar questions to construct group-level baselines, effectively minimizing the sampling overhead in evaluating each query's individual difficulty and solvability. Consequently, HRPO significantly reduces the compute requirements for solver training without compromising performance or stability. Extensive experimental results demonstrate that the data-free Dr. Zero matches or surpasses fully supervised search agents, proving that complex reasoning and search capabilities can emerge solely through self-evolution.

Explain it Like I'm 14

Overview

This paper introduces Dr. Zero, an AI system that teaches itself to become a better “search agent” without using any human-made training data. A search agent is an AI that can look things up on the web and reason through several steps to answer tricky questions. Dr. Zero does this by creating its own practice questions, solving them, and learning from the results—like a student who writes their own test and then studies from their mistakes.

Key Questions the Paper Tries to Answer

- Can an AI improve its web-search and reasoning skills without any human-written questions or answer labels?

- How can we make the AI’s self-made questions diverse, challenging, and still solvable, so learning stays meaningful?

- How do we make this self-training efficient, so it doesn’t require huge amounts of computer power?

How Dr. Zero Works (In Simple Terms)

Think of Dr. Zero as a two-player team that levels up together:

- The proposer is like a puzzle maker. It creates questions for the AI to answer. Over time, it tries to make questions that are not too easy and not impossible—just the right challenge.

- The solver is like a puzzle solver. It uses a search engine to find information and reasons step-by-step to answer the questions.

Here’s the loop they repeat:

- The proposer makes new questions (some require one step to answer, others need multiple “hops” across different web pages—like following clues in a scavenger hunt).

- The solver tries to answer them by searching and reasoning.

- The proposer gets a “score” based on how well the solver did:

  - If all answers are correct, the question was too easy.

  - If none are correct, the question was too hard.

  - The best score comes when the question is challenging but still solvable.

- Both models update themselves to do better next round: the proposer makes better questions, and the solver gets better at solving them.
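
The scoring rule in the loop above can be sketched as a small function. This is only an illustration: the name `proposer_difficulty_reward` and the exact formula are assumptions, not the paper's definition. It is chosen so that the reward is zero when all or none of the solver's attempts are correct and highest when exactly one attempt succeeds.

```python
def proposer_difficulty_reward(num_correct: int, num_attempts: int) -> float:
    """Hypothetical difficulty reward for one proposed question.

    Assumed shape (not taken from the paper):
      * 0.0 when no attempt is correct (too hard or unsolvable),
      * 0.0 when every attempt is correct (too easy),
      * otherwise a value that grows as fewer attempts succeed,
        reaching 1.0 when exactly one attempt is correct.
    """
    if num_attempts < 2:
        raise ValueError("need at least two solver attempts")
    if not 0 <= num_correct <= num_attempts:
        raise ValueError("num_correct must lie between 0 and num_attempts")
    if num_correct == 0 or num_correct == num_attempts:
        return 0.0
    return (num_attempts - num_correct) / (num_attempts - 1)


if __name__ == "__main__":
    # Reward for every possible number of correct answers out of 5 attempts.
    for k in range(6):
        print(k, proposer_difficulty_reward(k, 5))
```

A linear ramp is only one choice; any shape that vanishes at both extremes and favors hard-but-solvable questions would express the same idea.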

To make this efficient, the authors created a training method called HRPO (hop-grouped relative policy optimization). In everyday terms:

- “Multi-hop” means a question needs several steps to solve (like reading multiple pages and connecting facts).

- HRPO groups questions by how many hops they need (1 step, 2 steps, 3 steps, etc.).

- By comparing questions within the same group, the system can judge difficulty more fairly and learn faster, without needing to generate tons of extra samples. This avoids wasting a lot of computer time.
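
A minimal sketch of the hop-grouping idea, assuming each proposed question carries a hop count and a scalar reward. The function name, the use of standard-deviation scaling, and the `eps` constant are illustrative assumptions; the summary above only states that questions are compared within their hop group.

```python
from collections import defaultdict
from statistics import mean, pstdev


def hop_grouped_advantages(hops, rewards, eps=1e-6):
    """Standardize each reward against the other questions with the same hop count.

    hops    -- list of ints, one hop count per proposed question
    rewards -- list of floats, one proposer reward per question
    eps     -- small constant (an assumption) to avoid division by zero
    """
    groups = defaultdict(list)
    for hop, reward in zip(hops, rewards):
        groups[hop].append(reward)

    # One (mean, std) baseline per hop group instead of one per question.
    stats = {hop: (mean(rs), pstdev(rs)) for hop, rs in groups.items()}

    return [
        (reward - stats[hop][0]) / (stats[hop][1] + eps)
        for hop, reward in zip(hops, rewards)
    ]


if __name__ == "__main__":
    hops = [1, 1, 2, 2]
    rewards = [0.0, 1.0, 0.5, 0.5]
    print(hop_grouped_advantages(hops, rewards))
```

Because the baseline comes from other questions in the same group, each question needs only one reward, rather than many sampled variants of the same question.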

They also train the solver with a simpler method (GRPO) that focuses on whether the final answers are correct.
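
For contrast, a hedged sketch of the solver-side signal: several answers to the same question are scored as right or wrong and then standardized within that group. Plain string equality stands in for the real answer check, and `grpo_advantages` and `eps` are illustrative names rather than anything taken from the paper's code.

```python
from statistics import mean, pstdev


def grpo_advantages(predictions, ground_truth, eps=1e-6):
    """Group-relative advantages for several answers to one question.

    Each prediction earns a binary outcome reward (1.0 if it equals the
    ground truth, else 0.0); rewards are then standardized within the group.
    """
    rewards = [1.0 if p == ground_truth else 0.0 for p in predictions]
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    print(grpo_advantages(["Paris", "Lyon", "Paris", "Rome"], "Paris"))
    # When every answer is correct the advantages are all zero,
    # so the question provides no learning signal.
    print(grpo_advantages(["Paris", "Paris"], "Paris"))
```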

What They Found and Why It Matters

The researchers tested Dr. Zero on well-known question-answering challenges that require search and multi-step reasoning (for example, Natural Questions, TriviaQA, HotpotQA, and others). Important takeaways:

- Dr. Zero matched or even beat strong systems that were trained with lots of human-made data. On some complex benchmarks, it surpassed supervised methods by up to 14.1%.

- It especially improved on multi-hop questions that need careful, multi-step thinking.

- The system worked on small and larger AI models, with bigger models gaining more from tougher, multi-step practice questions.

- Thanks to HRPO, training was much more efficient than older self-training methods that needed many repeated tries for each question.

In short: Dr. Zero shows an AI can teach itself to search and reason better without any labeled training data, just by using a search engine and a smart feedback loop.

Why This Is Important

- Reduces dependence on human-made datasets: Making and labeling training data is slow and expensive. Dr. Zero shows a path to strong performance without it.

- Builds better study habits for AI: The “make a puzzle → solve it → adjust difficulty” loop creates an automatic curriculum, helping the AI steadily level up.

- More practical search agents: A system that can reliably look up facts and reason through multiple steps can help with research, learning, and answering complex real-world questions.

Final Thoughts and Future Impact

Dr. Zero is a promising step toward AI systems that improve themselves using an external search engine, without manual supervision. This could make advanced, reasoning-heavy assistants more accessible, especially when high-quality training data is hard to find.

The authors also point out future challenges:

- Keeping training stable over many rounds so performance doesn’t plateau.

- Preventing “reward hacking” (the AI gaming its own scoring system).

- Avoiding bias and ensuring reliability when there’s no human in the loop.

If these challenges are addressed, self-evolving search agents like Dr. Zero could become powerful tools for learning, research, and problem-solving in many fields.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise list of what remains missing, uncertain, or unexplored in the paper, phrased to guide actionable follow-up work:

- External search dependency and scope: Generalization beyond a local English Wikipedia index (E5-base, top-3) to open-web search, other corpora (domain-specific, noisy, or adversarial), and multilingual settings is untested.

- Verifiability and truthfulness: The framework lacks an explicit evidence-grounded verifier (e.g., citation checking or claim verification); correctness is judged by exact match and solver agreement, leaving risks of incorrect or unsupported synthesized labels unaddressed.

- Reward hacking and ambiguity: The proposer reward peaks when exactly one of n solver attempts is correct, potentially incentivizing ambiguous or borderline questions; the paper does not quantify reward gaming or ambiguity and offers no safeguards beyond future work.

- Sensitivity to n in pass-rate reward: The number of solver attempts (n) used to compute proposer difficulty is unspecified; its impact on compute, variance, and reward reliability is not analyzed.

- “Hop” measurement and grouping validity: How hop counts are computed, validated, and robustly distinguished is unclear; the effect of hop misclassification on HRPO stability and performance is not studied.

- Grouping features beyond hop count: HRPO groups by hops only; alternative or complementary grouping criteria (topic, answer type, retrieval difficulty, lexical overlap, evidence count) are not explored.

- Efficiency claims unquantified: Wall-clock time, GPU hours/FLOPs, token throughput, and energy/cost comparisons vs GRPO, PPO, and nested sampling are not reported, leaving HRPO’s efficiency gains largely qualitative.

- Stability and plateau: Self-evolution plateaus after 2–3 iterations (worse at 7B); mechanisms to sustain continual improvement (adaptive curriculum, diversity constraints, restarts, entropy regularization) are not investigated.

- Implementation fragility: Training failures due to “inconsistent token IDs” in multi-turn rollouts suggest tooling brittleness; the frequency, impact on results, and mitigation strategies are not systematically analyzed.

- Safety and bias: No bias, toxicity, or safety audits are conducted; the method could amplify biases or generate unsafe content through search-augmented self-play.

- Robustness to retrieval noise/attacks: The agent’s behavior under irrelevant, misleading, adversarial, or poisoned documents, as well as retrieval outages and latency, is untested.

- Evaluation limited to exact match: No assessments of paraphrase tolerance, partial credit, calibration, hallucination rate, confidence reliability, or human judgments are provided.

- Evidence grounding quality: The system does not measure citation coverage, evidence sufficiency, or attribution quality for generated answers or synthesized QA pairs.

- Topic and difficulty diversity: There is no quantitative analysis of proposer-generated topic coverage, knowledge breadth, or difficulty profiles; mechanisms to enforce or diagnose diversity are missing.

- Co-adaptation/collusion risk: Using the same base LLM for proposer and solver could induce co-adaptation to idiosyncratic patterns; cross-play tests (swapping solvers, evaluating on external solvers/users) are absent.

- Generality beyond QA: Applicability to other tool-use tasks (coding, planning, data analysis), multi-modal settings, or interactive research tasks is not evaluated.

- Retrieval component learning: The retriever is fixed (E5-base, ANN top-3); joint learning or RL-based query reformulation and retriever adaptation are not explored.

- Curriculum control is manual: Fixed hop ratios (4:3:2:1) are hand-set; an adaptive curriculum policy tied to solver competence and uncertainty is not proposed or evaluated.

- Algorithmic baselines and theory: Comparisons to PPO with learned critics, off-policy methods, advantage normalization variants, or preference-based RL (DPO/RLAIF) are limited; HRPO’s theoretical properties (variance bounds, convergence) are not provided.

- Hyperparameter omissions and reproducibility: Key settings (e.g., HRPO/GRPO group sizes, max turns per rollout) are unspecified in the text; sensitivity analyses and reproducibility checklists are missing.

- Scaling laws: Results are reported for Qwen2.5 3B/7B only; scaling trends to larger models, compute scaling vs performance, and plateau behaviors under scale are unexplored.

- Temporal and domain drift: Training and evaluation use a static Wikipedia snapshot; robustness to evolving knowledge, time-sensitive queries, and cross-domain drift is not assessed.

- Dependence on initial document seeding: Ablations show large drops without an initial document; sensitivity to initial retrieval quality and fallback strategies when retrieval fails are not studied.

- Failure-case taxonomy: There is limited qualitative or systematic error analysis (e.g., retrieval misses vs reasoning mistakes vs formatting/tool-use errors), hindering targeted improvements.

- Ethical/legal considerations: Potential plagiarism of retrieved text in synthesized QA pairs, copyright concerns, and data governance for self-generated corpora are not addressed.
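
Several of the gaps above concern the fixed retriever, which returns the top-3 documents for each query. As a rough illustration of that step only, the sketch below ranks documents by cosine similarity with an exact brute-force scan. It is not approximate nearest neighbor search and does not use E5 embeddings; the toy vectors and the names `cosine` and `top_k` are assumptions made for the example.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec, doc_vecs, k=3):
    """Return the ids of the k documents most similar to the query.

    doc_vecs maps a document id to its embedding vector. A real system
    would replace this linear scan with an ANN index.
    """
    ranked = sorted(
        doc_vecs.items(),
        key=lambda item: cosine(query_vec, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]


if __name__ == "__main__":
    # Toy 2-dimensional "embeddings", invented for illustration.
    docs = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0], "d": [-1.0, 0.0]}
    print(top_k([1.0, 0.1], docs))
```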

Glossary

- Actor-critic methods: RL algorithms that pair a policy (actor) with a value estimator (critic) to reduce gradient variance. "For example, actor-critic methods such as PPO employ a learned critic to estimate a value baseline (Mnih et al., 2016; Schulman et al., 2017)."

- Advantage estimation: Computing the relative benefit of an action or trajectory versus a baseline to guide policy updates. "We introduce hop-grouped relative policy optimization (HRPO), a novel optimization method that clusters structurally similar questions to provide a robust group-level baseline for advantage estimation."

- Approximate nearest neighbor (ANN) search: An efficient retrieval technique that finds items close to a query in embedding space using approximate indexing. "During inference, we perform an approximate nearest neighbor (ANN) search to retrieve the top-3 documents."

- Automated curriculum: An automatically generated sequence of tasks that increase in difficulty to improve learning. "As the solver evolves, it incentivizes the proposer to produce increasingly difficult yet solvable tasks, thus establishing an automated curriculum to refine both agents."

- Data-free self-evolution: A training paradigm where models improve by generating and learning from synthetic data without human-curated datasets. "As high-quality data becomes increasingly difficult to obtain, data-free self-evolution has emerged as a promising paradigm."

- Direct Preference Optimization (DPO): An offline method that trains LLMs directly on pairwise preference data. "A simpler offline alternative is direct preference optimization (DPO) (Rafailov et al., 2023), which directly optimizes LLMs on pairwise preference data."

- Exact match: An evaluation metric that marks a prediction correct only if it exactly matches the target answer string. "All models are evaluated using exact match with identical search engine (E5 base) and corpus settings (English Wikipedia dump)."

- Group Relative Policy Optimization (GRPO): A group-based policy optimization method that uses statistics across multiple responses to form low-variance baselines. "For solver training, we sample data pairs (x, y) from the proposer $\pi_\theta$ and optimize $\pi_\phi$ via group relative policy optimization (GRPO) (Shao et al., 2024)."

- Hop-grouped Relative Policy Optimization (HRPO): An optimization algorithm that clusters questions by hop complexity to compute group-level baselines efficiently. "To enhance training efficiency, we also introduce hop-grouped relative policy optimization (HRPO)."

- IRCoT: A technique that interleaves retrieval with chain-of-thought reasoning to improve accuracy on knowledge-intensive tasks. "A notable example is IRCoT, where Trivedi et al. (2023) exploit multi-step retrieval to optimize answer accuracy on knowledge-intensive tasks."

- KL regularizer: A regularization term that penalizes divergence between the current policy and a reference to stabilize updates. "where N denotes the size of the sampled batch, and $\beta$ is the hyperparameter controlling the KL regularizer."

- Multi-hop queries: Questions requiring reasoning across multiple pieces of evidence or steps. "such approaches yield moderate performance gains on trivial one-hop tasks but struggle to match supervised baselines on complex multi-hop queries (see Section 4)."

- Multi-turn tool-use rollout pipeline: A training procedure involving repeated tool interactions across turns during rollouts. "we introduce a multi-turn tool-use rollout pipeline that enables the trained proposer to significantly improve question generation quality and produce complex, multi-hop questions."

- Nested sampling: A multi-level sampling approach (e.g., sampling many questions and many responses per question) that increases compute cost. "the standard group relative policy optimization (GRPO) significantly increases training compute in self-evolution as it requires nested sampling: generating multiple queries and subsequently producing multiple responses for each question."

- On-policy framework: An RL setup where updates are computed using data sampled from the current policy. "For optimal proposer performance and training efficiency, we adopt a strictly on-policy framework and omit ratio clipping."

- Outcome-based reward: A reward signal that depends solely on the correctness of the final answer. "The optimization is driven by an outcome-based reward that solely evaluates the correctness of final predictions against the synthesized ground truth y."

- Policy gradient algorithms: RL methods that directly optimize a policy’s parameters via gradients of expected reward. "In the context of LLMs, RL is frequently implemented using policy gradient algorithms (Sutton et al., 1999; Ouyang et al., 2022)."

- Proposer-solver co-evolution: A training scheme where a question generator (proposer) and solver improve together by challenging each other. "Huang et al. (2025a) design a proposer-solver co-evolution framework to iteratively bootstrap questions and rationales, thereby achieving meaningful performance gains without access to any curated datasets."

- Proximal Policy Optimization (PPO): A widely used actor-critic RL algorithm that stabilizes updates via clipping and KL constraints. "For example, actor-critic methods such as PPO employ a learned critic to estimate a value baseline (Mnih et al., 2016; Schulman et al., 2017)."

- Ratio clipping: Limiting the change in the policy probability ratio during updates to improve stability. "we adopt a strictly on-policy framework and omit ratio clipping."

- REINFORCE++: A single-response RL optimization approach proposed for efficient alignment that reduces sampling costs. "While single-response methods like REINFORCE++ reduce sampling costs, we find that a global baseline becomes unstable when processing diverse query structures."

- Retrieval-Augmented Generation (RAG): Augmenting generation with retrieved documents to inject external knowledge. "Few-shot baselines include standard prompting, IRCoT (Trivedi et al., 2023), Search-o1 (Li et al., 2025) and retrieval augmented generation (RAG) (Lewis et al., 2020)."

- Reward hacking: Exploiting the reward function to achieve high scores without solving the intended task. "Furthermore, we plan to safeguard the self-evolution process against reward hacking and bias amplification, aiming to develop robust learning frameworks..."

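- Reward standardization: Normalizing rewards within a group to compute advantages with lower variance. "where the advantages are computed via reward standardization (i.e., $A_i = \frac{\mathbb{1}(y = \hat{y}_i) - \mathrm{mean}(\{\mathbb{1}(y = \hat{y}_j)\}_{j=1}^{n})}{\mathrm{std}(\{\mathbb{1}(y = \hat{y}_j)\}_{j=1}^{n}) + \delta}$)."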

- Search-o1: A search-enhanced reasoning baseline used for comparison in experiments. "Few-shot baselines include standard prompting, IRCoT (Trivedi et al., 2023), Search-o1 (Li et al., 2025) and retrieval augmented generation (RAG) (Lewis et al., 2020)."

- Search-R1: An RL-trained search agent baseline that leverages external search with reinforcement learning. "Supervised baselines consist of supervised fine-tuning (SFT), RL-based fine-tuning without search (R1) (Guo et al., 2025) and the RL-based search agent Search-R1 (Jin et al., 2025)."

- Self-play: A training mechanism where a model generates tasks and evaluates itself to improve without human labels. "Early approaches utilize self-play mechanisms where the model acts as both the generator and the evaluator to refine its policy without human annotations (OpenAI et al., 2021; Chen et al., 2024; Wu et al., 2024)."

- Self-questioning LLMs (SQLM): Models that generate their own questions to train themselves in a data-free manner. "We further compare Dr. Zero against existing data-free methods, specifically self-questioning LLMs (SQLM) and self-evolving reasoning LLMs (R-Zero) (Chen et al., 2025; Huang et al., 2025a)."

- Supervised fine-tuning (SFT): Training a model on labeled datasets to improve performance on target tasks. "Supervised baselines consist of supervised fine-tuning (SFT), RL-based fine-tuning without search (R1) (Guo et al., 2025) and the RL-based search agent Search-R1 (Jin et al., 2025)."

- Value baseline: An estimate of expected return used to reduce variance in policy gradient updates. "For example, actor-critic methods such as PPO employ a learned critic to estimate a value baseline (Mnih et al., 2016; Schulman et al., 2017)."
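
To make the exact match entry above concrete, here is a small sketch of how such a metric is commonly computed. The normalization steps (lowercasing, stripping punctuation and English articles, collapsing whitespace) follow a widespread QA evaluation convention and are an assumption here; the glossary does not specify which normalization the paper applies.

```python
import re
import string


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, targets) -> bool:
    """True if the normalized prediction equals any normalized target."""
    pred = normalize_answer(prediction)
    return any(pred == normalize_answer(t) for t in targets)


if __name__ == "__main__":
    print(exact_match("The Eiffel Tower.", ["Eiffel Tower"]))
    print(exact_match("Eiffel Tower in Paris", ["Eiffel Tower"]))
```

The second call returns `False` even though the answer contains the target, which is the strictness that the paraphrase-tolerance concern in the knowledge gaps refers to.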

Practical Applications

Immediate Applications

The following applications can be deployed now using the Dr. Zero framework, its proposer–solver loop, and the HRPO/GRPO training methods, with modest engineering to adapt corpus, tools, and guardrails.

- Sector: software/enterprise search

- Application: data-free self-improving enterprise knowledge assistants

- What it does: bootstrap a search-augmented LLM for internal FAQs, policies, and wikis without labeled Q&A by indexing the company corpus and letting the proposer generate verifiable questions that continuously train the solver.

- Tools/products/workflows: external search index (e.g., ANN over embeddings), proposer–solver training loop, HRPO for proposer, GRPO for solver, hop-ratio curriculum (e.g., 4:3:2:1), exact-match or relaxed answer checks, MLOps pipeline for iteration until performance plateaus.

- Assumptions/dependencies: high-quality retrieval over the target corpus; base LLM with tool-use; answer verification (exact match or domain-specific heuristics); compute for short training runs; safety filters to prevent reward hacking or biased query generation.

- Sector: education/LMS

- Application: automated curriculum generation and practice question creation

- What it does: generate structured, multi-hop questions and verifiable answers aligned to course materials, using difficulty-guided rewards to produce non-trivial but solvable items for formative assessments.

- Tools/products/workflows: LMS integration, proposer format constraints (<question>/<answer> tags), difficulty rewards to ensure progressive challenge, teacher-in-the-loop to approve batches, analytics on solver pass rates to tune curricula.

- Assumptions/dependencies: alignment to learning standards; source corpus (textbooks, lecture notes); guardrails for age-appropriate content; domain-specific answer checking beyond exact match.

- Sector: research tooling (academia/industry R&D)

- Application: literature-driven deep research assistants without labeled training data

- What it does: index curated sources (e.g., Wikipedia, arXiv abstracts, PubMed) and use the self-evolving loop to improve multi-hop retrieval and reasoning for review writing, hypothesis checking, and fact synthesis.

- Tools/products/workflows: corpus ingestion and indexing; proposer–solver iterations (50-step blocks, 2–3 iterations as per paper); prompt templates for tool calls; evaluation harness with EM and citation presence checks.

- Assumptions/dependencies: reliable, up-to-date corpora; domain-specific verification (e.g., citation extraction); monitoring for hallucinations; ethics compliance in sensitive domains.

- Sector: customer support/ops

- Application: ticket triage and knowledge-base search copilot

- What it does: enhance multi-turn, multi-hop retrieval over help articles and previous tickets to resolve complex issues without requiring manually labeled Q&A for training.

- Tools/products/workflows: integration with support platforms; retrieval over KB and resolved tickets; proposer generating realistic support queries; solver trained to produce step-by-step resolutions; HRPO to reduce compute.

- Assumptions/dependencies: private indexing with access controls; redaction for sensitive data; domain-specific correctness metrics (e.g., resolution steps); guardrails against generating unsafe procedures.

- Sector: compliance/legal (internal use)

- Application: policy and regulation lookup assistant

- What it does: multi-hop search across statutes, policy manuals, and regulatory guidance to answer nuanced questions; self-evolves by proposing challenging, verifiable queries (e.g., cross-referencing sections) to train accuracy.

- Tools/products/workflows: legal corpus index; structured outputs (citations, section references); proposer rewards tuned for verifiability (presence of correct citation); audit log of tool interactions.

- Assumptions/dependencies: jurisdiction-specific corpora; rigorous answer verification (beyond EM); human review for high-stakes outputs; governance to avoid bias amplification.

- Sector: daily life/personal productivity

- Application: personal “deep research” assistant

- What it does: helps users investigate topics across web-scale sources with multi-hop search and reasoning that self-improves on-the-fly without collecting personal labeled data.

- Tools/products/workflows: browser-integrated search tool; configurable proposer difficulty; periodic short training iterations; user-controllable source allowlists.

- Assumptions/dependencies: quality and trust of sources; local or cloud compute; opt-in telemetry; safeguards against misinformation and unsafe instructions.

- Sector: ML engineering

- Application: efficient RL training for tool-using agents

- What it does: adopt HRPO to replace nested sampling in GRPO for proposer training, cutting rollout costs while stabilizing advantage estimates via hop-group baselines.

- Tools/products/workflows: HRPO trainer library; hop clustering (1–4 hops); KL regularization and gradient clipping; BF16 mixed precision; standardized multi-turn tool-calling prompts.

- Assumptions/dependencies: consistent tokenization across tool steps; reliable tool API; monitoring for training failures (e.g., token ID inconsistencies) and entropy collapse.
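
The hop-ratio curriculum mentioned above (e.g., 4:3:2:1) can be turned into per-batch question counts with a few lines of code. The sketch below is an assumption-laden illustration: it maps the ratio onto 1-hop through 4-hop questions in that order and uses largest-remainder rounding, neither of which is stated in the text.

```python
def allocate_hop_counts(batch_size: int, ratios=(4, 3, 2, 1)) -> dict:
    """Split a batch of questions across hop counts according to fixed ratios.

    Returns a dict mapping hop count (1, 2, ...) to the number of questions.
    Any remainder from rounding goes to the hop counts with the largest
    fractional share, so the counts always sum to batch_size.
    """
    total = sum(ratios)
    exact = [batch_size * r / total for r in ratios]
    counts = [int(x) for x in exact]
    leftover = batch_size - sum(counts)
    by_fraction = sorted(
        range(len(ratios)), key=lambda i: exact[i] - counts[i], reverse=True
    )
    for i in by_fraction[:leftover]:
        counts[i] += 1
    return {hop: count for hop, count in enumerate(counts, start=1)}


if __name__ == "__main__":
    print(allocate_hop_counts(10))
    print(allocate_hop_counts(7))
```

An adaptive curriculum would replace the fixed `ratios` tuple with values derived from solver pass rates, which is one of the open directions listed in the knowledge gaps.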

Long-Term Applications

The following applications require further research, scaling, domain adaptation, or safety validations before broad deployment.

- Sector: healthcare

- Application: clinical evidence synthesis and guideline navigation

- What it could do: multi-hop retrieval over PubMed, guidelines, and EHR-compatible knowledge bases to answer complex clinical queries and generate evidence-backed summaries.

- Tools/products/workflows: medical corpus indexing; domain-tuned proposer rewards (verifiable via citations and consistency checks); clinician oversight workflow; uncertainty estimates.

- Assumptions/dependencies: regulatory compliance (HIPAA/PHI); medically vetted sources; robust factuality verification beyond EM; comprehensive safety layers to prevent harmful recommendations.

- Sector: finance

- Application: due diligence and regulatory reporting assistant

- What it could do: multi-document analysis across filings, earnings calls, sanctions lists, and regulations; self-evolving challenger queries to improve robustness in cross-hop reasoning.

- Tools/products/workflows: finance-specific retrieval; structured outputs (figures, citations); stress testing via synthetic adversarial queries; audit trails.

- Assumptions/dependencies: access to premium data feeds; strict compliance review; calibrated uncertainty; defense against reward hacking in noisy financial corpora.

- Sector: public sector/policy

- Application: government knowledge assistants for legislation and FOIA processing

- What it could do: self-evolve on public records to answer constituents’ questions, draft summaries, and cross-reference multi-hop legal and policy materials without expensive annotation campaigns.

- Tools/products/workflows: agency corpus indexing; verifiability-oriented proposer; human-in-the-loop verification; transparency mechanisms (sources, tool-call logs).

- Assumptions/dependencies: multilingual/cross-jurisdiction adaptation; bias mitigation; accessibility requirements; privacy safeguards for sensitive records.

- Sector: education at scale

- Application: adaptive, standards-aligned question generation and auto-grading

- What it could do: produce level-appropriate, multi-hop questions and grading rubrics while adjusting difficulty based on solver pass rates and student performance signals.

- Tools/products/workflows: integration with large LMS ecosystems; calibration of difficulty rewards; analytics dashboards; teacher override workflows.

- Assumptions/dependencies: robust content alignment and fairness; domain-specific grading (semantic similarity, rubric-based); mitigation of curriculum bias and drift.

- Sector: safety and alignment research

- Application: robust self-evolution under safety constraints

- What it could do: develop guardrails that prevent reward hacking, bias amplification, and entropy collapse, enabling safe autonomous improvement of tool-using agents in high-stakes domains.

- Tools/products/workflows: anomaly detection in reward/entropy curves; adversarial proposer generation; counterfactual evaluation; red-team pipelines.

- Assumptions/dependencies: formal verification methods for tool-use; standardized safety benchmarks; richer rewards beyond binary correctness.

- Sector: multilingual/global deployment

- Application: cross-lingual self-evolving search agents

- What it could do: extend Dr. Zero to diverse languages and corpora, enabling data-free improvement where labeled datasets are scarce.

- Tools/products/workflows: multilingual embeddings and retrieval; locale-specific formatting and answer checks; hop-based curricula adapted to language structures.

- Assumptions/dependencies: high-quality non-English corpora; language-specific tokenization and tool prompts; culturally aware guardrails.

- Sector: general AI products

- Application: “research copilot” platforms and zero-data model ops

- What it could do: offer hosted services that spin up domain-specific self-evolving agents, with turnkey indexing, curriculum generation, HRPO-based training, and governance tooling.

- Tools/products/workflows: orchestration layer for proposer–solver loops; configurable reward schemas; usage analytics; compliance/audit modules.

- Assumptions/dependencies: scalable infrastructure; standardized APIs to search engines; cost controls; user-driven source selection and transparency.

- Sector: evaluation and benchmarking

- Application: synthetic benchmark generation for multi-hop reasoning

- What it could do: use the proposer to create diverse, difficulty-calibrated, verifiable test sets tailored to domains (beyond math/coding), improving coverage of open-domain reasoning.

- Tools/products/workflows: benchmark curation pipeline; hop-aware sampling; automated solvability checks; public leaderboard integration.

- Assumptions/dependencies: reliable solvability verification; avoidance of leakage; community validation to ensure representativeness.

- Sector: knowledge-intensive software engineering

- Application: documentation and codebase cross-referencing assistants

- What it could do: multi-hop reasoning over large repositories and documentation to answer “how/why” questions, with self-evolving curricula to improve tool-use patterns over time.

- Tools/products/workflows: repo indexers; code-aware retrieval; structured outputs (file paths, commit IDs); proposer rewards tuned to reproducibility of steps.

- Assumptions/dependencies: precise tooling and environment emulation; secure access policies; domain-specific correctness metrics beyond EM.

In all cases, feasibility depends on retrieval quality, verifiable answer checking, stable multi-turn tool integration, and safeguards against reward hacking or bias. The paper’s observed training plateaus and failure modes (e.g., token ID inconsistencies, entropy collapse in larger models) suggest the need for careful monitoring, iteration limits, and robust evaluation beyond exact-match, especially in high-stakes deployments.
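
One simple way to act on the plateau observation is a stopping rule over per-iteration evaluation scores. The sketch below is a generic early-stopping check, not something described in the paper; `should_stop`, `patience`, and `min_delta` are hypothetical names, and the scores in the usage example are invented purely for illustration.

```python
def should_stop(scores, patience=2, min_delta=0.0):
    """Return True when the last `patience` scores fail to beat the earlier best.

    scores    -- evaluation scores, one per self-evolution iteration
    patience  -- how many recent iterations may pass without improvement
    min_delta -- minimum gain that counts as an improvement
    """
    if len(scores) <= patience:
        return False
    best_before = max(scores[:-patience])
    return all(s <= best_before + min_delta for s in scores[-patience:])


if __name__ == "__main__":
    # Made-up scores: improvement stalls after the second iteration.
    print(should_stop([0.30, 0.35, 0.35, 0.34]))
    # Made-up scores: still improving, so training continues.
    print(should_stop([0.30, 0.35, 0.40]))
```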
