R-Zero: Self-Evolving Reasoning LLM from Zero Data (2508.05004v1)

Abstract: Self-evolving LLMs offer a scalable path toward super-intelligence by autonomously generating, refining, and learning from their own experiences. However, existing methods for training such models still rely heavily on vast human-curated tasks and labels, typically via fine-tuning or reinforcement learning, which poses a fundamental bottleneck to advancing AI systems toward capabilities beyond human intelligence. To overcome this limitation, we introduce R-Zero, a fully autonomous framework that generates its own training data from scratch. Starting from a single base LLM, R-Zero initializes two independent models with distinct roles, a Challenger and a Solver. These models are optimized separately and co-evolve through interaction: the Challenger is rewarded for proposing tasks near the edge of the Solver capability, and the Solver is rewarded for solving increasingly challenging tasks posed by the Challenger. This process yields a targeted, self-improving curriculum without any pre-existing tasks and labels. Empirically, R-Zero substantially improves reasoning capability across different backbone LLMs, e.g., boosting the Qwen3-4B-Base by +6.49 on math-reasoning benchmarks and +7.54 on general-domain reasoning benchmarks.

Summary

- The paper introduces a novel self-evolving framework that uses a co-evolutionary loop between a Challenger and a Solver to boost reasoning abilities without human-curated data.

- It leverages an uncertainty-based reward and iterative filtering to generate challenging questions and fine-tune the Solver, leading to measurable gains on math benchmarks.

- The results demonstrate consistent improvements and effective transfer to general-domain reasoning tasks, despite challenges like decreasing pseudo-label quality.

R-Zero: A Self-Evolving Reasoning LLM from Zero Data

Introduction

R-Zero introduces a fully autonomous framework for self-evolving LLMs that eliminates the need for any human-curated tasks or labels. The core innovation is a co-evolutionary loop between two independently optimized agents—a Challenger and a Solver—both initialized from the same base LLM. The Challenger is incentivized to generate questions at the edge of the Solver's current capabilities, while the Solver is trained to solve these increasingly difficult tasks. This process yields a self-improving curriculum, enabling the LLM to enhance its reasoning abilities from scratch, without external supervision.

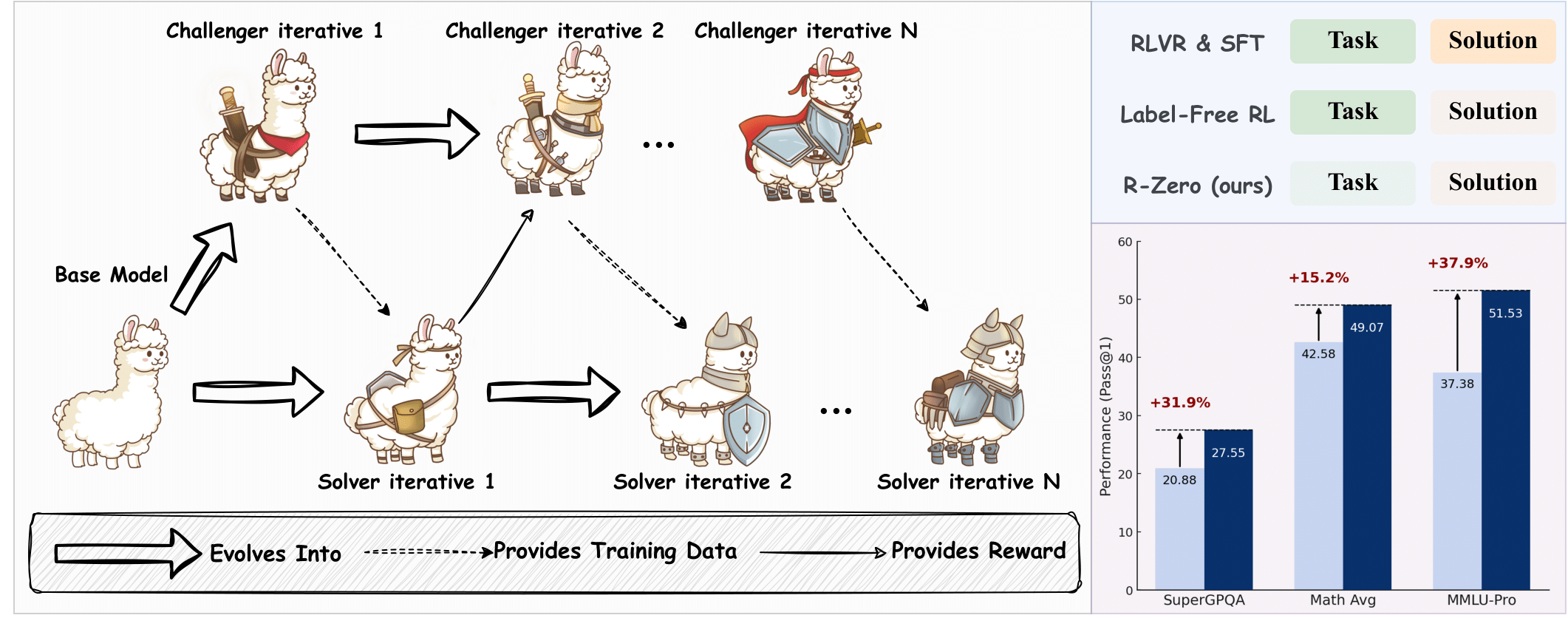

Figure 1: (Left) R-Zero employs a co-evolutionary loop between Challenger and Solver. (Right) R-Zero achieves strong benchmark gains without any pre-existing tasks or human labels.

Methodology

Co-Evolutionary Framework

R-Zero's architecture is built on a two-agent system:

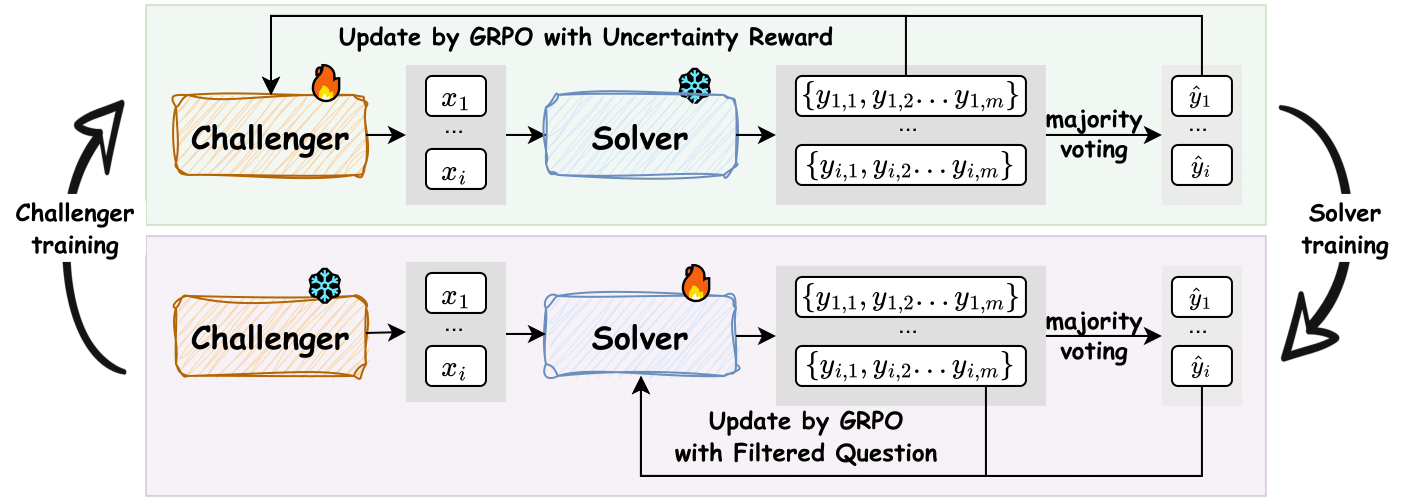

- Challenger ($Q_\theta$): Generates challenging questions and is trained with Group Relative Policy Optimization (GRPO), with rewards based on the uncertainty of the Solver's responses.

- Solver ($S_\phi$): Fine-tuned on a filtered set of Challenger-generated questions, using pseudo-labels derived from majority voting over its own answers.

The process is iterative: the Challenger is trained to maximize the Solver's uncertainty, and the Solver is subsequently trained to solve the filtered, challenging questions. This loop is repeated, resulting in a progressively more capable Solver.

Figure 2: R-Zero framework overview, illustrating the co-evolution of Challenger and Solver via GRPO and self-consistency-based rewards.
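The alternation described above can be sketched as a short driver loop. This is a minimal illustration rather than the authors' code: every callable passed in (`train_challenger`, `generate_questions`, `filter_and_label`, `train_solver`) is a hypothetical placeholder for a full training or sampling stage, and holding the Solver fixed while the Challenger trains is an assumption of the sketch.

```python
def r_zero_loop(challenger, solver, train_challenger, generate_questions,
                filter_and_label, train_solver, num_iterations=3):
    """Alternate Challenger and Solver updates for a fixed number of rounds.

    All four callables are hypothetical stand-ins for the real stages.
    """
    for _ in range(num_iterations):
        # 1. Train the Challenger against the current Solver
        #    (the Solver is assumed to be held fixed during this step).
        challenger = train_challenger(challenger, solver)
        # 2. Sample a pool of candidate questions from the updated Challenger.
        candidates = generate_questions(challenger)
        # 3. Pseudo-label by majority vote and keep mid-difficulty questions.
        dataset = filter_and_label(candidates, solver)
        # 4. Train the Solver on the filtered, pseudo-labeled questions.
        solver = train_solver(solver, dataset)
    return challenger, solver
```

The default of three iterations mirrors the number of rounds reported in the experiments; in practice each stage would wrap a complete GRPO training run.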

Reward Design

- Uncertainty Reward: For each generated question $x$, the Solver produces $m$ answers. The reward is $r_{\text{uncertainty}}(x;\phi) = 1 - 2\left|\hat{p}(x; S_\phi) - 0.5\right|$, where $\hat{p}$ is the empirical accuracy (fraction of answers matching the majority vote). This is maximized when the Solver's accuracy is 50%, i.e., maximal uncertainty.

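- Repetition Penalty: To encourage diversity, a BLEU-based clustering penalty is applied within each batch, discouraging the Challenger from generating near-duplicate questions. (BLEU measures n-gram overlap, so the penalty targets lexical rather than strictly semantic similarity.)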

- Format Check Penalty: Outputs not conforming to the required format are immediately assigned zero reward.

The final reward is $r_i = \max\left(0,\; r_{\text{uncertainty}}(x_i;\phi) - r_{\text{rep}}(x_i)\right)$.
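As a rough illustration of how these three terms combine, the sketch below computes the Challenger reward for one question from a list of sampled Solver answers. The function names and the `format_ok` flag are hypothetical, and the repetition penalty is passed in as a precomputed number because the summary does not specify how the BLEU clustering turns into a penalty value.

```python
from collections import Counter


def uncertainty_reward(answers):
    """Return 1 - 2*|p_hat - 0.5|, where p_hat is the fraction of sampled
    answers that agree with the majority-vote answer."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    _, majority_count = Counter(answers).most_common(1)[0]
    p_hat = majority_count / len(answers)
    return 1.0 - 2.0 * abs(p_hat - 0.5)


def challenger_reward(answers, repetition_penalty, format_ok=True):
    """Combine the format check, uncertainty reward, and repetition penalty."""
    if not format_ok:
        # Malformed outputs receive zero reward outright.
        return 0.0
    # Clip at zero so a large penalty never produces a negative reward.
    return max(0.0, uncertainty_reward(answers) - repetition_penalty)
```

For example, four answers split two-and-two between the top answer and the rest give $\hat{p} = 0.5$ and the maximum uncertainty reward of 1, while four identical answers give $\hat{p} = 1$ and a reward of 0.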

Dataset Construction and Filtering

After Challenger training, a large pool of candidate questions is generated. For each, the Solver's answers are used to assign a pseudo-label via majority vote. Only questions with empirical correctness within a specified band around 50% are retained, filtering out tasks that are too easy, too hard, or ambiguous.
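A minimal sketch of this filtering step follows, assuming the Solver's sampled answers have already been collected per question. The band half-width `delta` and its default value are assumptions made for illustration, since the summary only states that a band around 50% is used.

```python
from collections import Counter


def build_solver_dataset(question_to_answers, delta=0.25):
    """Pseudo-label each question by majority vote and keep only those whose
    empirical correctness lies within `delta` of 0.5.

    `delta` is a hypothetical band half-width chosen for this example.
    """
    dataset = []
    for question, answers in question_to_answers.items():
        if not answers:
            continue  # nothing to vote on
        pseudo_label, votes = Counter(answers).most_common(1)[0]
        p_hat = votes / len(answers)
        if abs(p_hat - 0.5) <= delta:
            dataset.append((question, pseudo_label))
    return dataset
```

With `delta=0.25`, a question answered identically in all eight samples is dropped as too easy, and a question with eight different answers is dropped as too hard or ambiguous, since no answer gathers enough agreement to be a trustworthy pseudo-label.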

Solver Training

The Solver is fine-tuned on the curated dataset using GRPO, with a binary reward: 1 if the generated answer matches the pseudo-label, 0 otherwise.
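To make the training signal concrete, the sketch below computes the binary rewards for a group of sampled answers and then the group-normalized advantages that GRPO typically uses (each reward minus the group mean, divided by the group standard deviation). The function names, the `eps` constant, and the use of the population standard deviation are assumptions of this example, and the policy-gradient update itself is omitted.

```python
import statistics


def binary_rewards(answers, pseudo_label):
    """Reward 1.0 when a sampled answer matches the pseudo-label, else 0.0."""
    return [1.0 if a == pseudo_label else 0.0 for a in answers]


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize rewards within one group of rollouts for the same question.

    Uses the population standard deviation; `eps` avoids division by zero
    when every rollout receives the same reward.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

When every rollout in a group is correct (or every one is wrong), all advantages are zero and the question contributes no learning signal, which is one practical reason to keep questions near 50% accuracy.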

Theoretical Motivation

The uncertainty-based reward is theoretically justified by curriculum learning theory: the KL divergence between the Solver's policy and the optimal policy is lower-bounded by the reward variance, which is maximized at 50% accuracy. Thus, the Challenger is incentivized to generate maximally informative tasks for the Solver.
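The claim that reward variance peaks at 50% accuracy can be checked directly. This is a short worked derivation under the assumption that the Solver's binary reward $r$ on a fixed question behaves as a Bernoulli variable with success probability $p$:

```latex
\begin{align*}
r &\sim \mathrm{Bernoulli}(p), \qquad p = \Pr[\text{Solver's answer matches the label}] \\
\operatorname{Var}(r) &= p(1-p) \\
\frac{d}{dp}\,p(1-p) &= 1 - 2p = 0 \;\Longrightarrow\; p = \tfrac{1}{2} \\
\operatorname{Var}(r)\big|_{p=1/2} &= \tfrac{1}{4}
\end{align*}
```

The variance falls to zero at $p = 0$ and $p = 1$, the same points where the uncertainty reward $1 - 2|p - 0.5|$ is zero, so both quantities rank questions in the same order of informativeness.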

Experimental Results

Mathematical Reasoning

R-Zero was evaluated on multiple mathematical reasoning benchmarks (AMC, Minerva, MATH-500, GSM8K, Olympiad-Bench, AIME-2024/2025) using Qwen3 and OctoThinker models at 3B, 4B, and 8B scales. Across all architectures, R-Zero consistently improved performance over both the base model and a baseline where the Solver is trained on questions from an untrained Challenger.

- Qwen3-8B-Base: Average score increased from 49.18 (base) to 54.69 (+5.51) after three R-Zero iterations.

- Qwen3-4B-Base: Average score increased from 42.58 to 49.07 (+6.49).

- OctoThinker-3B: Average score increased from 26.64 to 29.32 (+2.68).

Performance rose monotonically across iterations, which is consistent with the co-evolutionary curriculum driving the gains.

General-Domain Reasoning

Despite being trained only on self-generated math problems, R-Zero-trained models exhibited significant transfer to general reasoning benchmarks (MMLU-Pro, SuperGPQA, BBEH):

- Qwen3-8B-Base: General-domain average improved by +3.81 points.

- OctoThinker-3B: General-domain average improved by +3.65 points.

This demonstrates that the reasoning skills acquired via R-Zero generalize beyond the training domain.

Analysis

Ablation Studies

Disabling any core component (RL-based Challenger, repetition penalty, or difficulty-based filtering) led to substantial performance drops. The largest degradation occurred when RL training for the Challenger was removed, underscoring the necessity of the co-evolutionary curriculum.

Evolution of Question Difficulty and Label Quality

As the Challenger improved, the difficulty of generated questions increased, evidenced by declining Solver accuracy on new question sets. However, the accuracy of pseudo-labels (as measured against GPT-4o) decreased from 79% to 63% over three iterations, indicating a trade-off between curriculum difficulty and label reliability.

Synergy with Supervised Fine-Tuning

R-Zero can be used as a mid-training method: models first improved by R-Zero achieved higher performance after subsequent supervised fine-tuning on labeled data, with an observed gain of +2.35 points over direct supervised training.

Implementation Considerations

- Resource Requirements: Each iteration involves generating thousands of questions and multiple rollouts per question, requiring significant compute, especially for larger models.

- Scalability: The framework is model-agnostic and demonstrated effectiveness across different architectures and scales.

- Limitations: The approach is currently best suited for domains with objective correctness criteria (e.g., math). Extension to open-ended generative tasks remains an open challenge due to the lack of verifiable reward signals.

Implications and Future Directions

R-Zero demonstrates that LLMs can self-improve their reasoning abilities from scratch, without any human-provided data. This has significant implications for scalable, autonomous AI development, particularly in domains where labeled data is scarce or unavailable. The framework's transferability to general reasoning tasks suggests that self-evolving curricula can yield broadly capable models.

Future work should address the degradation of pseudo-label quality as task difficulty increases, explore more robust self-labeling mechanisms, and extend the framework to domains lacking objective evaluation criteria. Additionally, integrating model-based verifiers or leveraging external knowledge sources could further enhance the reliability and generality of self-evolving LLMs.

Conclusion

R-Zero establishes a fully autonomous, co-evolutionary framework for reasoning LLMs that requires zero external data. Through iterative Challenger-Solver interactions and uncertainty-driven curriculum generation, R-Zero achieves substantial improvements in both mathematical and general reasoning tasks. The approach is theoretically grounded, empirically validated, and provides a foundation for future research into self-improving, data-independent AI systems.
