RoboReward: General-Purpose Vision-Language Reward Models for Robotics (2601.00675v1)

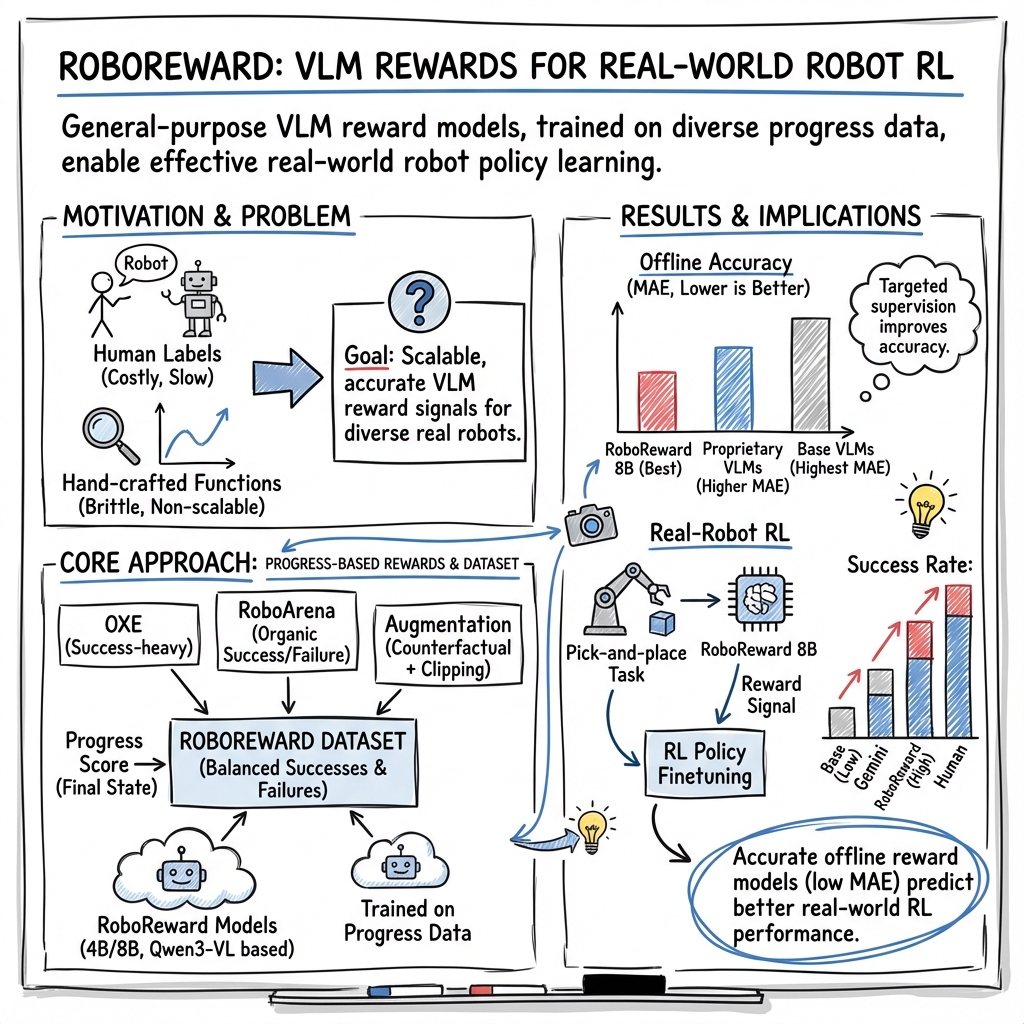

Abstract: A well-designed reward is critical for effective reinforcement learning-based policy improvement. In real-world robotic domains, obtaining such rewards typically requires either labor-intensive human labeling or brittle, handcrafted objectives. Vision-language models (VLMs) have shown promise as automatic reward models, yet their effectiveness on real robot tasks is poorly understood. In this work, we aim to close this gap by introducing (1) **RoboReward**, a robotics reward dataset and benchmark built on large-scale real-robot corpora from Open X-Embodiment (OXE) and RoboArena, and (2) vision-language reward models trained on this dataset (RoboReward 4B/8B). Because OXE is success-heavy and lacks failure examples, we propose a *negative examples data augmentation* pipeline that generates calibrated *negatives* and *near-misses* via counterfactual relabeling of successful episodes and temporal clipping to create partial-progress outcomes from the same videos. Using this framework, we produce an extensive training and evaluation dataset that spans diverse tasks and embodiments and enables systematic evaluation of whether state-of-the-art VLMs can reliably provide rewards for robotics. Our evaluation of leading open-weight and proprietary VLMs reveals that no model excels across all tasks, underscoring substantial room for improvement. We then train general-purpose 4B- and 8B-parameter models that outperform much larger VLMs in assigning rewards for short-horizon robotic tasks. Finally, we deploy the 8B-parameter reward VLM in real-robot reinforcement learning and find that it improves policy learning over Gemini Robotics-ER 1.5, a frontier physical reasoning VLM trained on robotics data, by a large margin, while substantially narrowing the gap to RL training with human-provided rewards.

Explain it Like I'm 14

What is this paper about?

This paper is about teaching robots to learn better by giving them fair, consistent “scores” for how well they do a task. The authors build a new dataset and AI models that watch a robot’s video, read the task (like “put the carrot in the drawer”), and then give a progress score from 1 to 5. These scores act like a report card for the robot’s learning. The big goal: make it possible to use reinforcement learning (RL) on real robots without needing a human to constantly grade every attempt.

What questions did the researchers ask?

They focused on a few simple but important questions:

- What kind of score helps a robot learn best: a simple pass/fail or a progress score (like 1–5)?

- Can today’s vision-language models (AIs that understand both video and text) grade robot attempts accurately across many different tasks and robots?

- Can we train smaller, focused models that grade better than big, general-purpose models?

- If the grading gets more accurate, do robots actually learn to do tasks better in the real world?

How did they do it?

To make this work, the team created both a big training dataset and a clean test benchmark, and then trained and tested grading models.

Here’s the approach, described with everyday analogies:

- Choosing the scoring style: They tested different types of rewards in simulation and found a 1–5 “progress score” works better than pass/fail. Think of this like getting partial credit on a school assignment—it gives more helpful feedback than just “correct” or “wrong.”

- Building a balanced dataset: Real robot datasets mostly show successes (like only watching “perfect” cooking videos). That’s not helpful for learning what mistakes look like. So they added believable “fail” and “almost-there” examples:

  - Counterfactual relabeling: Imagine watching a robot video that correctly puts a pot on a yellow cloth. Now change the task text to “put the fork on the yellow cloth.” With the same video, that’s now a fail or near-miss. This teaches the grader to pay attention to the exact instruction, not just any movement.

  - Negative clipping: They “cut” successful videos earlier (like stopping a cooking video halfway). The same task is now only partially done, which makes for great training examples for scores like 2/5 or 3/5.

- Carefully checking labels: They used AI to propose these labels and then filtered them with strict checks, plus human verification on the final test set, so the benchmark is trustworthy.

- Training reward models: They trained two vision-language grading models (called RoboReward 4B and 8B; “B” means billions of parameters, a way of saying model size) using 45,000 labeled robot episodes. The test set (RoboRewardBench) has 2,831 human-verified episodes across many robots and tasks.

- Measuring accuracy: They scored models by how close their 1–5 prediction was to the human label on average (called MAE, or mean absolute error; smaller is better). An MAE below 1 means the model's predictions are, on average, less than one point away from the human score.
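To make the accuracy measure concrete, here is a minimal sketch of how MAE can be computed for 1–5 progress scores. The function name and the example scores are made up for illustration; they are not taken from the paper or its released code.

```python
def mean_absolute_error(predicted, human):
    """Average absolute gap between predicted and human 1-5 progress scores."""
    if len(predicted) != len(human) or not predicted:
        raise ValueError("need two non-empty lists of equal length")
    return sum(abs(p - h) for p, h in zip(predicted, human)) / len(predicted)


# Made-up scores for five episodes (illustrative only, not from the paper).
predicted_scores = [5, 3, 1, 4, 2]
human_scores = [5, 4, 1, 2, 2]

mae = mean_absolute_error(predicted_scores, human_scores)
print(mae)  # 0.6
```

In this toy example the grader is exactly right on three episodes, off by one on another, and off by two on the last, so the gaps sum to 3 over 5 episodes.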

What did they find, and why does it matter?

The paper reports several clear findings. Here are the highlights:

- Progress scores help robots learn faster than pass/fail. In simulation, giving 1–5 progress rewards made RL training succeed much more quickly than using only success/failure. That’s like getting detailed feedback instead of a simple “yes/no.”

- Better grading accuracy → better robot learning. They showed a strong correlation: when the reward model’s scores matched human labels more closely, RL led to higher real task success. So improving the grader matters in practice.

- Today’s general AI models aren’t consistent graders for robots. The team tested 22 top models and found none were great across all robots, scenes, and tasks. Performance varied a lot depending on camera view, robot type, and environment.

- Their focused models beat much bigger ones. The new RoboReward 8B and 4B models (trained specifically to grade robot videos) outperformed larger, famous models on the benchmark. Training on the right data for the right goal wins over sheer size.

- Real robot improvement: Their 8B model made a real robot learn better. On tasks like “pick up the toy monkey and place it on the yellow towel” and “pull open the drawer,” RL with the RoboReward 8B scores raised success rates a lot, coming closer to using a human grader and clearly beating a strong robot-specialized baseline model.

- Data tricks matter. Without their “counterfactual” and “clipped” negatives, the model looked fine on familiar data but fell apart on new robots and tasks. Those smart negatives taught the grader to handle many kinds of failures and near-misses.
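The two kinds of "smart negatives" mentioned above can be sketched in a few lines. This is only a rough illustration under simplifying assumptions: the `Episode` class, both helper functions, and the scores passed in are hypothetical. In the paper the new labels are proposed and checked by AI models, whereas here the caller simply supplies them.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Episode:
    frames: List[str]   # stand-in for video frames
    instruction: str    # task text shown to the grader
    score: int          # 1-5 progress label


def clip_episode(ep: Episode, keep_fraction: float, new_score: int) -> Episode:
    """Negative clipping: keep only the first part of a successful video."""
    if not 0.0 < keep_fraction < 1.0:
        raise ValueError("keep_fraction must be strictly between 0 and 1")
    n_keep = max(1, int(len(ep.frames) * keep_fraction))
    return replace(ep, frames=ep.frames[:n_keep], score=new_score)


def relabel_episode(ep: Episode, new_instruction: str, new_score: int) -> Episode:
    """Counterfactual relabeling: same video, different task text and label."""
    return replace(ep, instruction=new_instruction, score=new_score)


# A made-up successful episode with ten placeholder frames.
success = Episode(
    frames=[f"frame_{i}" for i in range(10)],
    instruction="put the pot on the yellow cloth",
    score=5,
)

# Hypothetical labels: the clipped video is scored 3, the mismatched task 1.
clipped = clip_episode(success, keep_fraction=0.5, new_score=3)
relabeled = relabel_episode(success, "put the fork on the yellow cloth", new_score=1)

print(len(clipped.frames), clipped.score)       # 5 3
print(relabeled.instruction, relabeled.score)
```

Both helpers leave the original episode untouched, so one successful video can yield several training examples with different labels.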

What is the impact of this work?

This research makes it much easier to use reinforcement learning on real robots by replacing constant human grading with reliable, automatic scoring:

- It shows that progress-based, 1–5 rewards are both learnable and more useful for robots than pass/fail.

- It provides an open dataset, benchmark, and trained models so others can build and compare better graders.

- It provides evidence that improving the grader isn’t just about nice numbers: in the reported experiments, robots learned more and succeeded more often in the real world.

Big picture: If robots can get accurate, automatic feedback on how well they did, they can practice and improve on many tasks without humans standing by. That can speed up progress toward general-purpose home and workplace robots. The next step is making these reward models handle longer, multi-step tasks (like “set the table” with several subtasks), where keeping track of progress is trickier but even more valuable.

Knowledge Gaps

Unresolved gaps, limitations, and open questions

Below is a concise list of what the paper leaves missing, uncertain, or unexplored, phrased to guide actionable follow-up research:

- Long-horizon, multi-stage tasks: RoboReward focuses on short-horizon, end-of-episode scoring; it remains open how well the approach scales to longer, multi-step tasks with complex temporal credit assignment and subgoal tracking.

- Per-step/process rewards vs. end-of-episode: The paper validates discrete end-of-episode progress scores; it does not test step-wise or process rewards, nor whether hybrid dense+sparse signals yield better RL performance.

- Reward calibration and uncertainty: Models output discrete labels without calibrated confidence; evaluating and leveraging uncertainty (e.g., abstention, ECE/ACE calibration, reward variance) in RL is unexplored.

- Reward hacking/exploitability: There is no closed-loop analysis of whether policies learn to exploit systematic biases in the reward model; adversarial evaluations and defenses against reward gaming are needed.

- Generalization across embodiments and views: Large, non-uniform performance gaps across robots, scenes, and camera viewpoints are observed, but the paper does not propose or test domain adaptation strategies (e.g., leave-one-embodiment-out splits, viewpoint augmentation, feature normalization).

- Proprioception and force/tactile cues: Rewards are inferred from video and text only; many manipulation outcomes (e.g., successful latching, contact quality) are not visually observable—integrating proprioceptive/force/tactile/audio inputs remains open.

- Robustness to distribution shift: Systematic tests under lighting changes, occlusions, clutter density, background motion, and camera jitter are missing.

- Negative augmentation validity: The counterfactual relabeling and clipping pipeline generates labels validated by VLMs, but the training set’s label noise rate, bias profile, and error types are not quantified via human audit.

- Circularity risk in label validation: The same class of VLMs is used to propose and validate counterfactual labels; assessing validation reliability with independent human review or alternate modalities is needed.

- Naturalness and ambiguity of counterfactuals: It is unclear how often generated instructions are semantically plausible for the scene versus contrived or ambiguous; measuring instruction “naturalness” and its impact on learning is open.

- Clipped negatives as distribution shift: Temporal clipping can introduce unnatural episode terminations; the effect on model bias and on RL (e.g., incentivizing early termination) is not analyzed.

- Data leakage controls: Although tasks are split by description, potential leakage via shared scenes, objects, or near-duplicate episodes across splits is not rigorously tested (e.g., scene- or embodiment-disjoint splits).

- Annotation reliability: Inter-annotator agreement for the 1–5 progress rubric (both in RoboArena mappings and test-set verification) is not reported; reproducibility of the rubric across annotators is uncertain.

- Granularity of rewards: The 1–5 rubric is chosen for convenience; the trade-offs vs. finer (e.g., 1–10) or continuous scales, or ordinal pairwise preferences, on both accuracy and RL performance remain untested.

- Evaluation metrics: MAE is the sole primary metric; rank correlation, calibration metrics, cost-sensitive errors (e.g., penalizing 1↔5 mistakes more), and alignment with RL sensitivity are not examined.

- Correlation to RL beyond limited settings: The reward-accuracy-to-RL correlation is shown in Robomimic and two real tasks with DSRL; robustness across more tasks, embodiments, RL algorithms (e.g., SAC/PPO/TD3/model-based), and base policies is unknown.

- Real-time deployment constraints: Inference latency, throughput, and compute/energy costs of reward evaluation (especially for 8B models) in online RL loops are not measured; streaming vs. batched evaluation trade-offs are open.

- Training strategy choices: The vision backbone is frozen; whether end-to-end fine-tuning, visual adapters, or stronger video pretraining improve cross-embodiment generalization is not tested.

- Model scaling laws: Effects of scaling beyond 8B, mixture-of-experts, or specialized visual encoders on reward accuracy and RL outcomes are unexplored.

- Data mixture design: The optimal ratio of organic failures (RoboArena) to synthetic counterfactual/clipped negatives, diminishing returns per video, and curriculum scheduling for negatives are not studied.

- Safety-aware rewards: The benchmark does not include safety constraints (e.g., avoiding collisions); designing and evaluating rewards that penalize unsafe behaviors remains open.

- Explainability and debuggability: Models provide scalar scores without rationales; whether textual justifications or visual grounding improve trust, diagnosis, and RL stability is untested.

- Multilingual and compositional instructions: Robustness to multilingual prompts, colloquialisms, synonyms, and compositional goals (novel verb–object combinations) is not evaluated.

- Category- and object-level bias: Per-object/affordance performance (e.g., deformables, transparent objects, reflective surfaces) is not analyzed; targeted failures remain unidentified.

- Mobile manipulation and locomotion: The benchmark largely covers tabletop manipulation; extension to mobile manipulation, navigation, and locomotion tasks is unaddressed.

- Process supervision vs. outcome-only: The study does not compare outcome-only rewards to process-based supervision (e.g., key steps, affordance satisfaction), which may alleviate temporal credit issues.

- Reward drift and continual learning: How to adapt reward models online to new tasks/scenes without catastrophic forgetting, and how drift affects RL, is open.

- Provenance and contamination: Potential overlap between OXE/RoboArena videos and proprietary model pretraining corpora is not assessed; its effect on benchmark fairness is unknown.

- Statistical rigor in real-robot results: Real-world evaluations use 20 trials per condition and two tasks; confidence intervals, effect sizes, and sensitivity to seeds/hyperparameters are not reported.

- Benchmark scale and coverage: RoboRewardBench has 2,831 episodes; scaling the human-verified test set to cover more embodiments, longer horizons, and harder settings is an open engineering task.

- Reward normalization across tasks: How to standardize scores across tasks of varying difficulty so that a single model remains calibrated is not addressed.

- Integration with policy learning signals: Combining reward models with value shaping, hindsight relabeling, or preference learning during RL to mitigate reward errors remains unexplored.
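As an illustration of the statistical-rigor gap above, the sketch below computes a Wilson score interval for a success rate measured over 20 trials. The Wilson interval is a standard choice for small binomial samples and is not something the paper reports. The success count used here is hypothetical.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96):
    """Wilson score interval for a binomial success rate (z=1.96 is ~95%)."""
    if trials <= 0 or not 0 <= successes <= trials:
        raise ValueError("need trials > 0 and 0 <= successes <= trials")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(
        p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)
    ) / denom
    # Clamp to [0, 1] to guard against floating-point overshoot.
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical outcome: 10 successes in 20 trials (not a number from the paper).
low, high = wilson_interval(10, 20)
print(f"{low:.2f} to {high:.2f}")  # 0.30 to 0.70
```

With only 20 trials the interval spans roughly 40 percentage points, which is why reporting intervals alongside success rates would help when comparing reward sources.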

Glossary

Below is a roughly alphabetical list of advanced domain-specific terms from the paper, each with a short definition and a verbatim usage example.

- Behavioral cloning: A supervised learning approach where a policy is trained to imitate expert demonstrations. "Although this is useful for training policies with behavioral cloning, it is suboptimal for training reward models that must discriminate fine-grained partial progress and failure."

- Bimanual: Refers to manipulation using two arms or hands in robotics. "it is comparatively weak on UTokyo xArm Bimanual (absolute error 1.394)"

- Counterfactual relabeling: Generating alternative task labels (including failures or partial successes) for the same video to augment training data. "(i) Counterfactual relabeling."

- Cosine learning-rate decay: A schedule that reduces the learning rate following a cosine curve over training. "We train for 3 epochs with cosine learning-rate decay, a warmup ratio of 0.05, weight decay of 0.05, and max gradient norm 1.0."

- Credit assignment: Determining which actions or states contributed to success or failure, especially challenging in long-horizon tasks. "longer-horizon, multi-stage tasks, where credit assignment and progress estimation become more challenging."

- Data-mixture ablations: Experiments that remove or alter parts of the training data mixture to assess their impact on performance. "We further justify our data mixture and augmentation pipeline via data-mixture ablations that isolate the contributions of counterfactual relabeling and negative clipping to overall reward accuracy (Section 5.3)."

- Diffusion policy: A control policy trained via diffusion models to generate actions for robotic tasks. "finetune a diffusion policy pretrained on a dataset of task demonstrations included in Robomimic"

- Discount factor: The parameter in RL that down-weights future rewards relative to immediate rewards. "where $\gamma \in [0, 1)$ denotes a discount factor, and $r_t$ is the reward at step $t$."

- DSRL: A state-of-the-art RL fine-tuning algorithm used to improve policies. "utilize DSRL (Wagenmaker et al., 2025)—a state-of-the-art RL fine-tuning algorithm—as our RL algorithm"

- Egocentric camera perspectives: Views from the robot’s own perspective (first-person), used for perception and evaluation. "with a mix of exocentric and egocentric camera perspectives."

- Embodiments: Different physical robot platforms or configurations. "spans diverse tasks and embodiments"

- End-of-episode progress labels: Discrete scores assessing task completion at the end of a rollout. "to predict the 5-level end-of-episode progress labels when given a task description and rollout video."

- Episodic rewards: Rewards assigned to entire episodes rather than individual timesteps. "we restrict our investigation to episodic rewards, which assign a reward value to a full episode rather than each individual step"

- Exocentric camera perspectives: External views (third-person) of the robot interacting with the environment. "with a mix of exocentric and egocentric camera perspectives."

- Frontier vision-language models: The most advanced, state-of-the-art VLMs available. "two general-purpose vision-language reward models for robotics that outperform frontier vision-language models."

- Fusion: The module or process that integrates visual and textual information within a multimodal model. "we freeze the vision backbone and fine-tune the fusion and LLM layers."

- Generalist robot policies: Policies trained to handle a wide variety of tasks and environments, rather than task-specific ones. "with the advent of “generalist” robot policies (Octo Model Team et al., 2024; Kim et al., 2024; Black et al., 2024)"

- Gradient accumulation: Technique to simulate larger batch sizes by accumulating gradients over multiple steps before updating. "We use an effective batch size of 32 via gradient accumulation"

- Group-wise MAE: Mean absolute error computed and averaged over defined groups or subsets. "Overall reports the group-wise MAE (lower is better) over all RoboRewardBench subsets"

- Hindsight experience relabeling (HER): A method that relabels failed episodes as successes for the goals they did achieve to improve learning. "popular hindsight experience relabeling technique (HER, Andrychowicz et al. (2017))"

- In-context value learning: Using a VLM to infer value functions or rewards directly from context without explicit training on the task. "Ma et al. (2024) uses a VLM to perform in-context value learning."

- Leaderboard: A public ranking of model performance on a standardized benchmark. "including a leaderboard, prompts, raw generations, and results"

- LLM layers: The LLM components inside a multimodal architecture. "we freeze the vision backbone and fine-tune the fusion and LLM layers."

- MAE (Mean absolute error): The average absolute difference between predicted and true labels; lower is better. "Our primary metric throughout the paper is mean absolute error (MAE) between predicted and ground-truth labels"

- Multimodal settings: Tasks and evaluations that involve multiple data modalities, such as vision and language. "For multimodal settings, VLRewardBench (Li et al., 2024) and Multimodal RewardBench (Yasunaga et al., 2025) probe VLM reward models..."

- Negative clipping: Truncating successful rollout videos early to create partial-progress or failure examples. "(ii) Negative clipping."

- Negative examples data augmentation: Adding synthetic failure or near-miss examples to balance and enrich training data. "1. Negative examples data augmentation."

- Near-miss: An outcome that is close to success but violates one or more requirements. "generates calibrated negatives and near-misses via counterfactual relabeling of successful episodes and temporal clipping"

- Nonprehensile objects: Objects manipulated without grasping (e.g., pushing or sliding). "KAIST Nonprehensile Objects (1.491)."

- OpenGVL leaderboard: A benchmark evaluating VLMs as temporal value estimators. "The closest to our evaluation setting is the OpenGVL leaderboard (OpenGVL Team, 2025)"

- Open X-Embodiment (OXE): A large-scale collection of real robot demonstrations across many embodiments and tasks. "Open X-Embodiment (OXE) episodes (Open X-Embodiment Collaboration, 2023)"

- Oracle human reward: Ground-truth rewards provided by a human, serving as an ideal baseline. "(1) oracle human reward: a human labeler gives a positive reward of +1 on success and the reward is otherwise 0"

- Preference-based approaches: Methods that use comparisons or ratings to learn rewards from preferences. "Preference-based approaches query VLMs over image and trajectory comparisons or ratings to learn reward functions"

- Progress score: A scalar or discrete label indicating how much of the task has been completed. "assign progress scores based on how well each policy's rollout accomplishes the intended instruction."

- Prompt rewriting: Cleaning or normalizing task text while preserving meaning. "Prompt Rewriting. First, we normalize spelling and grammar without altering semantics"

- Relabeling framework: A system for reassigning labels (e.g., tasks or rewards) to existing data to improve training. "We therefore develop a relabeling framework for synthetically augmenting demonstration data."

- RewardBench: A benchmark for evaluating language reward models’ accuracy and bias. "RewardBench (Lambert et al., 2024) and RewardBench 2 (Malik et al., 2025) test reward model accuracy, bias, and correlation with downstream LLM-RL performance."

- RoboArena: A diverse dataset of real-world policy evaluations with human progress scores. "we additionally include RoboArena (Atreya et al., 2025) as a complementary source of real-robot trajectories."

- RoboReward: A dataset and trained models for general-purpose vision-language reward modeling in robotics. "We introduce RoboReward, a dataset for training and evaluating general-purpose vision-language reward models for robotics."

- RoboRewardBench: The human-verified evaluation suite for measuring reward model accuracy on robot rollouts. "We then introduce RoboRewardBench, a comprehensive and standardized evaluation of VLMs as reward models on full robot rollouts"

- Temporal clipping: Cutting videos at earlier time points to produce partial completion outcomes. "temporal clipping to create partial-progress outcomes"

- Temporal value estimators: Models that estimate value functions over time from videos. "evaluates VLMs as temporal value estimators on expert videos"

- Value-Order Correlation metric: A metric assessing whether predicted values preserve the correct ordering. "evaluates VLMs as temporal value estimators on expert videos using a Value-Order Correlation metric."

- Vision backbone: The core visual feature extractor in a multimodal model, often kept frozen during fine-tuning. "we freeze the vision backbone and fine-tune the fusion and LLM layers."

- Vision-language models (VLMs): Models that jointly process visual and textual inputs for reasoning or prediction. "Vision-language models (VLMs) have shown promise as automatic reward models"

- Warmup ratio: The fraction of training steps used to gradually increase the learning rate from zero. "a warmup ratio of 0.05"

- Weight decay: A regularization technique that penalizes large weights to prevent overfitting. "weight decay of 0.05"

- Zero-shot: Using a model without task-specific fine-tuning, relying on generalization from pretraining. "Both VLM reward models are not further fine-tuned and are prompted zero-shot."
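The training-schedule terms in the glossary (cosine learning-rate decay, warmup ratio) can be illustrated with a small sketch. It assumes linear warmup from zero and decay all the way to zero, which the quoted text does not specify. The peak learning rate and total step count are hypothetical placeholders. Only the warmup ratio of 0.05 comes from the quoted hyperparameters.

```python
import math


def lr_at_step(step: int, total_steps: int, peak_lr: float,
               warmup_ratio: float = 0.05) -> float:
    """Learning rate with linear warmup followed by cosine decay to zero."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Linear ramp from 0 up to peak_lr (assumed warmup shape).
        return peak_lr * step / warmup_steps
    # Cosine decay from peak_lr (progress = 0) down to 0 (progress = 1).
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * peak_lr * (1 + math.cos(math.pi * progress))


# Hypothetical settings: 1000 optimizer steps and a peak rate of 1e-5.
TOTAL_STEPS = 1000
PEAK_LR = 1e-5

for step in (0, 25, 50, 525, 1000):
    print(step, lr_at_step(step, TOTAL_STEPS, PEAK_LR))
```

With these placeholder values, warmup covers the first 50 steps, the rate peaks at step 50, and it falls to half the peak midway through the decay phase at step 525.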

Practical Applications

Immediate Applications

The following applications can be deployed now using the released dataset, models, and evaluation suite, especially for short-horizon manipulation tasks and episodic, discrete progress rewards.

- Reward-as-a-Service for robot RL fine-tuning (Robotics, Software)

- Use RoboReward 8B to provide end-of-episode progress scores (1–5) during RL training, replacing manual binary success labels or hand-crafted rewards.

- Workflow: integrate the model into existing RL loops (e.g., DSRL or diffusion policy fine-tuning) to automatically score episodes and drive policy improvement.

- Assumptions/dependencies: short-horizon tasks; video + instruction input; inference latency acceptable; cloud or on-prem compute; model access and licensing.

- Automated benchmarking of robot policies (Academia, Robotics, Industry QA)

- Adopt RoboRewardBench and leaderboard to compare generalist policies and reward models across embodiments and scenes; identify generalization gaps before deployment.

- Tools: the released prompts, videos, and evaluation suite; MAE as a standardized metric correlated with RL gains.

- Assumptions: tasks and camera viewpoints comparable to benchmark; adherence to progress rubric.

- Balanced reward dataset creation from success-heavy logs (Robotics, MLOps)

- Apply the counterfactual relabeling + temporal clipping pipeline to existing demo corpora to synthesize calibrated failures and near-misses, enabling reward model training without expensive failure collection.

- Workflow: run offline pipeline, validate with strict rubric, distill into a compact reward model for deployment.

- Assumptions: access to demonstration videos with clear task context; pipeline compute budget; quality VLM/LLM for generation and validation.

- Replace binary success labels with discrete progress scoring (Academia, Robotics)

- Standardize on 5-level progress labels for episodic rewards in lab experiments and internal QA; improves RL convergence over binary success signals.

- Tools: the rubric and annotation guidance; RoboReward models as labelers when human time is limited.

- Assumptions: annotators or model-in-the-loop capable of consistent application of rubric.

- Robotics courseware and lab exercises (Education)

- Teach practical reward modeling by assigning students the dataset, rubric, and models; demonstrate MAE–RL performance correlation experimentally.

- Tools: Robomimic + DSRL, RoboRewardBench, RoboReward 4B/8B checkpoints.

- Assumptions: basic GPU resources; course alignment with short-horizon manipulation tasks.

- Edge or lab inference for episodic scoring (Robotics)

- Deploy RoboReward 4B on lab GPUs or high-end edge devices to score episodes without API dependence; if you fine-tune locally, keeping the vision backbone frozen (as in the paper's training setup) reduces training memory.

- Workflow: process rollout videos, return a progress score; gate policy updates or resets based on score thresholds.

- Assumptions: sufficient on-device compute; acceptable throughput for your episode cadence.

- Pre-deployment QA for warehouse/manufacturing pick-and-place (Industry: Logistics/Manufacturing)

- Use RoboRewardBench to stress-test generalist arms on typical short-horizon actions (grasp, place, open/close), flagging embodiment/viewpoint weaknesses before production.

- Tools: benchmark subsets similar to your camera setups; log per-subset MAE to guide scene-specific retraining.

- Assumptions: task similarity and camera configuration alignment; episodic evaluation feasible in QA.

- RL training cost reduction via semi-automated rewards (Robotics Startups)

- Replace a portion of human reward labeling with RoboReward 8B during online training; reserve humans for ambiguous episodes, reducing labor while maintaining high success rates.

- Workflow: automatic scoring -> confidence thresholding -> human-in-the-loop escalation.

- Assumptions: robust escalation policies; monitoring to mitigate reward errors in safety-critical moments.

- Continuous policy health monitoring (MLOps, Robotics)

- Monitor rolling MAE on a held-out validation set to predict downstream RL performance; trigger retraining or dataset augmentation when MAE drifts upward.

- Tools: MAE dashboards; automated alerting tied to evaluation suite outputs.

- Assumptions: access to validation episodes representative of production distribution.

- Rapid task adaptation for new scenes (Robotics)

- Fine-tune reward models on small, scene-specific clips using the negative augmentation pipeline; accelerate adaptation of policies to new camera viewpoints or lighting without collecting failures from scratch.

- Assumptions: representative demos available; pipeline validation steps applied to limit label noise.

- Supplier evaluation and procurement (Policy, Industry QA)

- Require reported RoboRewardBench MAE scores across relevant subsets in RFPs for embodied AI systems; use standardized metrics to compare claims.

- Assumptions: vendors can run benchmark or provide reproducible evidence; policy framework recognizes the benchmark.

- Integration with ROS and RL libraries (Software)

- Package the reward model as a ROS node or a PyTorch/TensorFlow wrapper that consumes episode buffers and outputs progress scores; plug into existing training loops.

- Assumptions: ROS2 compatibility or Python envs; video streaming format normalized.
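Several of the workflows above (scoring episodes inside an RL loop, thresholding, human escalation) share the same basic shape, sketched below. Every name here is hypothetical: `reward_model` and `ask_human` are placeholder callables, not the released RoboReward API. The linear mapping from a 1–5 score to a reward in [0, 1] is an assumption rather than the paper's stated choice. The confidence value is also assumed, since the models are described as outputting discrete labels without calibrated confidence.

```python
from typing import Any, Callable, Tuple


def score_to_reward(score: int) -> float:
    """Map a 1-5 progress score linearly onto [0, 1] (assumed mapping)."""
    if score not in (1, 2, 3, 4, 5):
        raise ValueError("progress score must be an integer from 1 to 5")
    return (score - 1) / 4


def episode_reward(
    video: Any,
    instruction: str,
    reward_model: Callable[[Any, str], Tuple[int, float]],
    ask_human: Callable[[Any, str], int],
    min_confidence: float = 0.8,
) -> float:
    """Score an episode automatically; escalate to a human when unsure."""
    score, confidence = reward_model(video, instruction)
    if confidence < min_confidence:
        # Hypothetical escalation path for ambiguous episodes.
        score = ask_human(video, instruction)
    return score_to_reward(score)


# Stub callables standing in for a real model and a real annotator.
def confident_model(video, instruction):
    return 4, 0.9


def unsure_model(video, instruction):
    return 2, 0.3


def human_says_success(video, instruction):
    return 5


r_auto = episode_reward("rollout.mp4", "pull open the drawer",
                        confident_model, human_says_success)
r_escalated = episode_reward("rollout.mp4", "pull open the drawer",
                             unsure_model, human_says_success)
print(r_auto, r_escalated)  # 0.75 1.0
```

Keeping the model and the human behind plain callables means the same wrapper could sit in front of a ROS node, a local checkpoint, or a remote service without changing the training loop.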

Long-Term Applications

These applications require further research, scaling, or development, particularly around longer-horizon tasks, safety-critical deployments, and broader embodiment generalization.

- General-purpose reward modeling for multi-stage tasks (Robotics, Academia)

- Extend from end-of-episode scoring to temporally dense, multi-step rewards for long-horizon procedures (assembly, kitting, household chores).

- Dependencies: improved credit assignment; more diverse datasets with failures across stages; stronger temporal reasoning in VLMs.

- Safety-certified reward models in regulated environments (Policy, Healthcare, Industrial Safety)

- Establish certification regimes where reward model reliability (e.g., MAE thresholds on domain-specific suites) is audited; integrate into safety cases for hospital or factory robots.

- Dependencies: domain-specific benchmarks; conformance testing; regulatory guidance and standards.

- Personalized home robot training with user videos (Consumer Robotics, Daily Life)

- Users record short videos of desired outcomes; system generates counterfactuals/near-misses, trains a personalized reward model that guides RL fine-tuning of a home assistant.

- Dependencies: easy-to-use mobile capture, privacy-safe on-device training, robust performance across diverse home layouts.

- Cross-embodiment, viewpoint-robust reward models (Robotics)

- Achieve uniform accuracy across arms, grippers, mobile manipulators, and egocentric/exocentric cameras, reducing per-platform calibration.

- Dependencies: broader data mixture beyond OXE/RoboArena; domain randomization; viewpoint-invariant visual backbones.

- Real-time reward shaping during episodes (Software, Robotics)

- Move from end-of-episode to low-latency, step-wise progress signals to enable curriculum learning, early termination of failing episodes, and dynamic exploration bonuses.

- Dependencies: efficient streaming inference; stabilized reward shaping that avoids reward hacking; better temporal grounding.

- Autonomous data engine for reward improvement (MLOps, Robotics)

- Close the loop: auto-detect failure modes in deployment, collect targeted data, run augmentation/validation, retrain reward models, and redeploy continuously.

- Dependencies: reliable data pipelines, drift detection, automated rubric validation, governance for updates.

- Domain-specific reward suites (Energy, Agriculture, Lab Automation)

- Create specialized benchmarks and trained reward models for valve turning, tool use, specimen handling, crop manipulation, etc., reflecting sector-specific constraints.

- Dependencies: curated datasets with meaningful failures; sector-specific rubrics; collaboration with domain experts.

- Edge-optimized reward models (Robotics Hardware, Software)

- Quantize and distill RoboReward models for embedded deployment (ARM/NPU), enabling on-robot inference with minimal latency.

- Dependencies: hardware accelerators; quantization-aware training; acceptable accuracy–latency trade-offs.

- Multi-agent and human–robot collaboration rewards (Robotics, HRI)

- Score joint task progress involving humans and multiple robots (handoffs, coordinated assembly), capturing social and spatial constraints.

- Dependencies: multimodal sensing (speech, pose), richer rubrics, datasets with collaborative failures.

- Reward governance and auditing tools (Policy, MLOps)

- Develop audit trails and dashboards tracking reward predictions, disagreements with human labels, and downstream impacts on policy actions; detect reward misspecification.

- Dependencies: standardized logging formats; anomaly detection; incident response protocols.

- Autonomous driving and mobile manipulation rewards (Transportation, Robotics)

- Apply vision-language rewards to navigation/manipulation hybrids (e.g., fetch-and-carry in hospitals, retail restocking), bridging perception and action across spaces.

- Dependencies: long-horizon datasets; robust scene understanding; safety validation.

- Programmatic reward design assistants (Software, Robotics)

- Use VLMs to propose, validate, and refine task-specific rubrics and reward formulations; automate parts of reward engineering with explainable rationales.

- Dependencies: improved reasoning reliability; human-in-the-loop oversight; versioning of rubrics and outcomes.

- Standard-setting around “Reward Quality” metrics (Policy, Standards Bodies)

- Establish sector-wide metrics (e.g., MAE thresholds, per-subset robustness) and reporting requirements for embodied AI deployments; anchor procurement and liability.

- Dependencies: consensus among stakeholders; interop with existing safety standards (e.g., ISO/IEC for robotics).

Notes on feasibility across applications:

- Current strengths: short-horizon tasks; episodic rewards; correlation between offline MAE and RL performance; open models and benchmarks.

- Current limitations: non-uniform generalization across embodiments/viewpoints; sensitivity to instruction clarity; reliance on high-quality video; latency and compute constraints; limited long-horizon capabilities.
