Overview

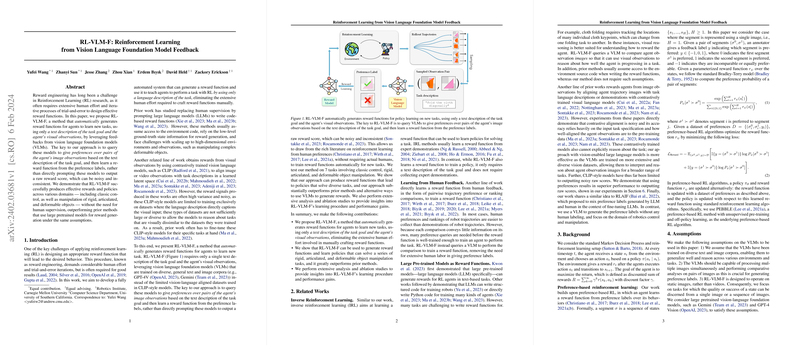

RL-VLM-F is a recent reinforcement learning (RL) method that uses vision language foundation models (VLMs) to generate reward functions automatically from a text description of the task and the agent's visual observations. It departs from traditional reward engineering, which typically demands considerable human effort and repeated trial and error. Because RL-VLM-F asks a VLM to state preferences over pairs of agent observations instead of relying on hand-designed rewards or human labels, it is a step toward efficient, scalable reward generation that requires little human involvement.

The Challenge of Reward Engineering

Crafting effective reward functions is central to successful reinforcement learning applications, but it is difficult. It requires domain expertise and manual effort, which makes the process cumbersome and less accessible to non-experts. Previous methods have tried to ease this burden by using large language models (LLMs) to generate code-based reward functions, or by using contrastively trained vision-language models to derive rewards from visual observations. Limitations remain, including dependence on low-level state information, the need for access to environment code, and difficulty scaling to high-dimensional settings.

Introducing RL-VLM-F

RL-VLM-F automates reward generation using only a high-level task description and the agent's visual observations. It queries a VLM to compare pairs of image observations, and the resulting preference labels are used to learn a reward function that reflects how well each image achieves the described task goal. Rewards scored directly by a VLM for individual images tend to be noisy and inconsistent; relative judgments between two images give a more reliable signal for reward learning.
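
As a rough illustration of the querying step, the sketch below builds a pairwise prompt and maps the VLM's free-form reply to a preference label. The prompt wording, the names `build_query` and `parse_preference`, and the 0 / 1 / -1 answer convention are assumptions made for this example, not RL-VLM-F's actual prompt, and the call to the VLM itself is left out.

```python
from typing import Optional

# Hypothetical prompt template (an assumption): it only illustrates the
# shape of a pairwise query, not the wording RL-VLM-F actually uses.
PROMPT_TEMPLATE = (
    "Image 1 and Image 2 are two observations of an agent.\n"
    "The goal is: {task}.\n"
    "Is the goal better achieved in Image 1 or in Image 2?\n"
    "Reply with a single line: 0 for Image 1, 1 for Image 2, "
    "or -1 if there is no clear difference."
)


def build_query(task_description: str) -> str:
    """Insert the task description into the pairwise comparison prompt."""
    return PROMPT_TEMPLATE.format(task=task_description)


def parse_preference(vlm_answer: str) -> Optional[int]:
    """Map a free-form VLM reply to a preference label.

    Returns 0 if the first image is preferred, 1 if the second is preferred,
    and None if the VLM is unsure or the reply cannot be parsed.
    """
    # Search from the last line, since the label is requested on its own line
    for line in reversed(vlm_answer.strip().splitlines()):
        token = line.strip().rstrip(".")
        if token in ("0", "1"):
            return int(token)
        if token == "-1":
            return None
    return None


# Example: the reasoning comes first, the label on the final line
print(parse_preference("The drawer is more open in Image 2.\n1"))  # prints 1
```

Returning `None` for unsure or unparseable replies lets the reward learner skip ambiguous pairs rather than train on noisy labels.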

Methodology

RL-VLM-F runs as an iterative cycle that starts from a policy with randomly initialized parameters. As the agent interacts with the environment, its image observations are recorded and grouped into pairs. A VLM compares each pair against the textual task description and returns a preference label. The reward function is trained on these labels and then used for policy learning, so the whole loop runs without manual human annotation.
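
The cycle can be sketched in Python under heavy simplifying assumptions: observations are 2-D feature vectors instead of images, `simulated_vlm` is a hypothetical stand-in for a real VLM call on a toy "push the block to the right" task, the reward model is linear, and the policy update is omitted. The reward model is fit with the Bradley-Terry preference loss, a standard choice for learning from pairwise preferences. Treat this as an illustrative sketch, not the authors' implementation.

```python
import math
import random
from typing import List, Optional, Tuple

Obs = List[float]  # hypothetical low-dimensional stand-in for an image observation


def simulated_vlm(obs_a: Obs, obs_b: Obs) -> Optional[int]:
    """Stand-in for a VLM query (an assumption, not a real model call).

    Toy task "push the block to the right": the observation with the larger
    first coordinate better achieves the goal. Returns 0 if obs_a is
    preferred, 1 if obs_b is preferred, None if they are too close to call.
    """
    gap = obs_a[0] - obs_b[0]
    if abs(gap) < 0.02:
        return None
    return 0 if gap > 0 else 1


def reward(w: List[float], obs: Obs) -> float:
    # Linear reward model over the observation features
    return sum(wi * oi for wi, oi in zip(w, obs))


def sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def bradley_terry_step(w: List[float], obs_a: Obs, obs_b: Obs,
                       label: int, lr: float = 0.1) -> List[float]:
    """One SGD step on the Bradley-Terry loss
    -[y log P(a > b) + (1 - y) log P(b > a)], with P(a > b) = sigmoid(r(a) - r(b))."""
    y = 1.0 if label == 0 else 0.0  # y = 1 means obs_a is preferred
    p = sigmoid(reward(w, obs_a) - reward(w, obs_b))
    return [wi - lr * (p - y) * (a - b) for wi, a, b in zip(w, obs_a, obs_b)]


def collect_observations(n: int, rng: random.Random) -> List[Obs]:
    # Placeholder for rolling out the current policy and recording frames
    return [[rng.random(), rng.random()] for _ in range(n)]


def run(iterations: int = 10, pairs_per_iter: int = 40, seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    w = [rng.gauss(0.0, 0.1), rng.gauss(0.0, 0.1)]  # random initialization
    labeled: List[Tuple[Obs, Obs, int]] = []
    for _ in range(iterations):
        frames = collect_observations(2 * pairs_per_iter, rng)
        # Pair up frames and ask the (simulated) VLM which is closer to the goal
        for a, b in zip(frames[::2], frames[1::2]):
            label = simulated_vlm(a, b)
            if label is not None:  # skip "no preference" answers
                labeled.append((a, b, label))
        # Refit the reward model on every preference collected so far
        for a, b, label in labeled:
            w = bradley_terry_step(w, a, b, label)
        # Policy update would go here: relabel stored transitions with
        # reward(w, obs) and run an RL update (omitted in this sketch)
    return w


if __name__ == "__main__":
    print("learned weights:", run())
```

Because the simulated labels depend only on the first coordinate, the learned weight on it should come out positive and dominate the second. With a real VLM, labels would be noisier and the reward model would typically be a neural network over image inputs.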

Empirical Validation

RL-VLM-F was evaluated on a diverse set of tasks, ranging from classic control to manipulation of rigid, articulated, and deformable objects. Across these experiments it outperformed several baselines, including methods that use large pre-trained models and methods based on contrastive alignment. In some tasks, it matched or even exceeded the performance obtained with hand-engineered ground-truth reward functions.

Insights and Contributions

The accompanying analysis sheds light on how RL-VLM-F learns and what drives its performance. Its main contribution is a large reduction in the human labor needed to design reward functions: the method derives rewards from a natural-language task description and visual observations alone. Its robustness across a broad range of domains suggests it could support many RL applications and encourage more intuitive, efficient approaches to reward learning.

Future Directions

RL-VLM-F opens several avenues for future research, particularly extending it to dynamic scenarios and to tasks that require a more nuanced understanding of the environment. Integrating active learning could also make feedback generation more efficient by choosing which observation pairs are worth a VLM query, improving both performance and scalability.
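
One common active-learning heuristic, shown here only as a hypothetical illustration and not as part of RL-VLM-F, is to train an ensemble of reward models and spend the VLM query budget on the pairs where the ensemble disagrees most. The linear reward models and the names `preference_prob` and `select_queries` are assumptions made for this sketch.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Obs = Sequence[float]  # hypothetical feature-vector stand-in for an image


def sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def preference_prob(w: Sequence[float], a: Obs, b: Obs) -> float:
    """Bradley-Terry probability that a is preferred over b under a linear reward."""
    diff = sum(wi * (ai - bi) for wi, ai, bi in zip(w, a, b))
    return sigmoid(diff)


def select_queries(
    ensemble: List[Sequence[float]],
    candidates: List[Tuple[Obs, Obs]],
    budget: int,
) -> List[int]:
    """Return indices of the `budget` candidate pairs the ensemble disagrees on most.

    Disagreement is the variance of the predicted preference probability
    across ensemble members; only these pairs would be sent to the VLM.
    """
    scores = []
    for idx, (a, b) in enumerate(candidates):
        probs = [preference_prob(w, a, b) for w in ensemble]
        scores.append((statistics.pvariance(probs), idx))
    scores.sort(reverse=True)  # highest disagreement first
    return [idx for _, idx in scores[:budget]]
```

For example, with the ensemble `[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]`, a pair that trades one feature against the other splits the members and is selected first, while a pair that improves both features is ranked lower.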

Conclusion

RL-VLM-F is a significant step toward using vision language foundation models to generate reward functions automatically in reinforcement learning. By replacing most manual reward engineering with VLM feedback, it offers a compelling approach to teaching agents complex tasks and makes RL research more flexible, efficient, and accessible.