DiffThinker: Towards Generative Multimodal Reasoning with Diffusion Models

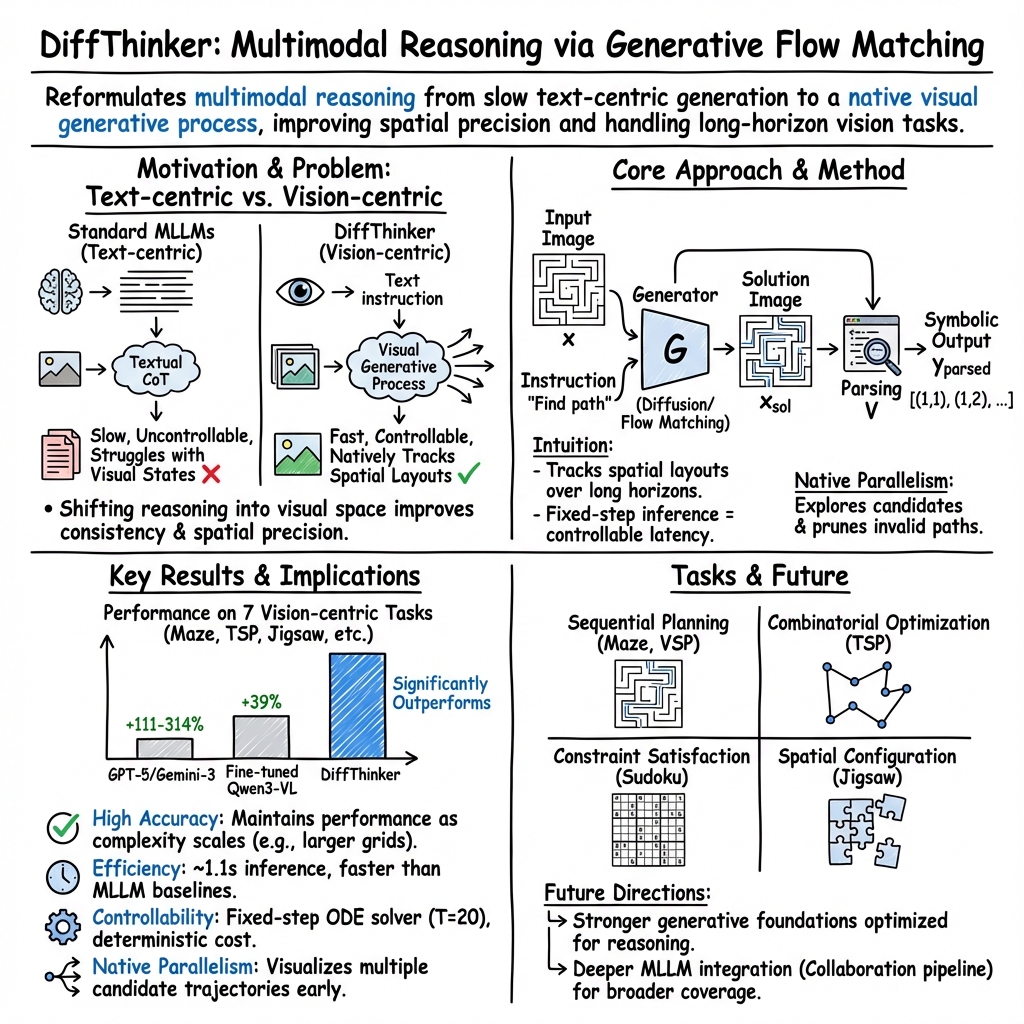

Abstract: While recent Multimodal LLMs (MLLMs) have made significant strides in multimodal reasoning, their reasoning processes remain predominantly text-centric, leading to suboptimal performance in complex long-horizon, vision-centric tasks. In this paper, we establish a novel Generative Multimodal Reasoning paradigm and introduce DiffThinker, a diffusion-based reasoning framework. Conceptually, DiffThinker reformulates multimodal reasoning as a native generative image-to-image task, achieving superior logical consistency and spatial precision in vision-centric tasks. We perform a systematic comparison between DiffThinker and MLLMs, providing the first in-depth investigation into the intrinsic characteristics of this paradigm and revealing four core properties: efficiency, controllability, native parallelism, and collaboration. Extensive experiments across four domains (sequential planning, combinatorial optimization, constraint satisfaction, and spatial configuration) demonstrate that DiffThinker significantly outperforms leading closed-source models, including GPT-5 (+314.2%) and Gemini-3-Flash (+111.6%), as well as the fine-tuned Qwen3-VL-32B baseline (+39.0%), highlighting generative multimodal reasoning as a promising approach for vision-centric reasoning.

Explain it Like I'm 14

What is this paper about?

This paper introduces a new way for AI to “think” using pictures instead of mostly using text. The method is called DiffThinker. Instead of writing out long step-by-step explanations (like a long essay) to solve problems that involve seeing and planning in images, DiffThinker directly creates a solution image that shows the answer. This turns visual reasoning into an image-to-image task and helps the AI stay consistent and precise in tasks that are all about what you see on the screen.

What questions did the researchers ask?

The authors wanted to find out:

- Can we solve vision-heavy problems better by generating solution images directly, rather than generating text first?

- Is this new approach faster, more reliable, and more controllable than current multimodal LLMs (models that handle both text and images)?

- Does this approach naturally explore multiple possible answers at the same time?

- Can this image-thinking method team up with text-focused models to get even better results?

- Does generating videos help reasoning even more than images, or is it too slow?

How did they do it?

Think of a tough visual puzzle—like a maze or a Sudoku grid. Traditional models often read the picture, write a long text plan step by step, and then your app turns that text back into a path or a filled-in puzzle. DiffThinker does something different: it looks at the picture and directly draws the solution back onto the picture.

Here are the key ideas, explained simply:

- Diffusion models: Imagine starting with TV static (random noise) and slowly transforming it into a clear image. DiffThinker uses this idea to “paint” a solution image, guided by the puzzle and the instructions.

- Flow matching: This is like having a map that says “how to move from static noise to the right picture smoothly.” It’s a way to teach the model the best path from messy noise to a correct solution image.

- VAE (Variational Autoencoder): Think of this as an image compressor/decompressor. DiffThinker does most of its work in a compressed space to make it faster, then turns it back into a full-size picture at the end.

- MMDiT (Multimodal Diffusion Transformer): This is the “brain” that understands both text (instructions) and images, and helps guide the drawing of the solution.

- Parsing for fair scoring: After DiffThinker draws the solution, the researchers use a simple reader that converts the solution image back into symbols (like a list of moves or a filled Sudoku grid) so they can fairly compare results with text-based models.

- Task setup: They trained separate versions of DiffThinker for different types of problems and compared it to strong models like GPT-5, Gemini-3-Flash, and Qwen3-VL.
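The "static to picture in a fixed number of steps" idea can be sketched with a toy first-order Euler integrator. This is only an illustration under stated assumptions: `velocity` is a hypothetical closed-form stand-in for a learned velocity field, the time convention (noise at t=0, solution at t=1) is chosen for convenience, and the short lists stand in for image latents. It is not the paper's model or code.

```python
import random


def velocity(x, t, target):
    """Toy stand-in for a learned velocity field (an assumption, not the paper's network).

    On a straight-line path x_t = (1 - t) * noise + t * target, the velocity
    that carries the current point to the target is (target - x) / (1 - t).
    """
    return [(g - xi) / (1.0 - t) for xi, g in zip(x, target)]


def euler_sample(noise, target, num_steps=20):
    """Integrate dx/dt = velocity(x, t) from t=0 to t=1 with a fixed step budget."""
    x = list(noise)
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt  # largest value is 1 - dt, so the division above is safe
        v = velocity(x, t, target)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(4)]
target = [1.0, 0.0, 0.0, 1.0]  # hypothetical "solution" latent
print(euler_sample(noise, target, num_steps=20))
```

The loop runs exactly `num_steps` times whatever the input, which is why a fixed-step sampler has a predictable cost.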

What did they find, and why is it important?

They tested seven tasks across four types of problems:

- Sequential planning: navigating grid worlds (VSP and VSP-Super) and solving mazes

- Combinatorial optimization: the Traveling Salesperson Problem (TSP), finding the shortest loop through cities

- Constraint satisfaction: Sudoku (fill numbers correctly)

- Spatial configuration: reconstructing jigsaw-like puzzles (Jigsaw and VisPuzzle)

Here’s what stood out:

- Much higher accuracy: DiffThinker beat powerful baselines by a large margin. The abstract reports relative improvements of 314.2% over GPT-5, 111.6% over Gemini-3-Flash, and 39.0% over a fine-tuned Qwen3-VL-32B baseline.

- Better at vision-centric problems: Because the solution is an image, the model stays aligned with the visual details (like exact paths through mazes or precise puzzle piece placement), which reduces errors that can come from turning pictures into text and back again.

- Four key properties:

  - Efficiency: Training and solving were fast, similar to or better than some strong baselines.

  - Controllability: The model uses a fixed number of steps (like 20 “brush strokes”) to draw a solution. That means predictable speed and cost, unlike text models that can produce very long answers.

  - Native parallelism: It naturally explores multiple possible solutions at once early on, then prunes the bad ones, like trying many paths in a maze simultaneously and erasing the dead ends.

  - Collaboration: When DiffThinker’s image candidates are checked by a text model (which verifies rules), their combined system beat either one alone, especially on puzzle tasks.

- Practical sweet spot: Around 20 drawing steps gave a good balance of accuracy and speed.

- Video vs. image: They built a video version (DiffThinker-Video) that shows a moving path over time. It can reason, but for now it’s slower and less accurate than the image approach. So images are the more efficient choice today.
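The generate-then-verify pattern behind the collaboration result can be sketched as a best-of-N selection loop. Two substitutions are made here and should be read as assumptions: the verifier is a programmatic Sudoku rule checker rather than a text model, and the candidates are hand-built grids rather than parsed model outputs. All function names are hypothetical.

```python
def is_valid_sudoku(grid):
    """Return True if a completed 9x9 grid satisfies every row, column, and box rule."""
    digits = set(range(1, 10))
    rows = [set(r) for r in grid]
    cols = [set(c) for c in zip(*grid)]
    boxes = [
        {grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)}
        for br in (0, 3, 6)
        for bc in (0, 3, 6)
    ]
    return all(unit == digits for unit in rows + cols + boxes)


def respects_clues(grid, clues):
    """Return True if the grid keeps every given clue (0 marks an empty cell)."""
    return all(clues[r][c] in (0, grid[r][c]) for r in range(9) for c in range(9))


def select_candidate(candidates, clues):
    """Return the first candidate that passes verification, or None."""
    for grid in candidates:
        if respects_clues(grid, clues) and is_valid_sudoku(grid):
            return grid
    return None


# Demo data: a known-valid grid, a corrupted copy, and a clue set derived from the valid grid.
solved = [[(3 * (r % 3) + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]
corrupted = [row[:] for row in solved]
corrupted[0][0], corrupted[0][1] = corrupted[0][1], corrupted[0][0]
clues = [[v if (r + c) % 2 == 0 else 0 for c, v in enumerate(row)] for r, row in enumerate(solved)]

print(select_candidate([corrupted, solved], clues) == solved)
```

A rule checker like this fits only where validity can be computed exactly. The paper's own pipeline uses an MLLM as the verifier.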

What does this mean for the future?

- A new way to reason: For tasks where “seeing” is central—like paths, layouts, puzzles, and spatial planning—generating solution images directly can be more natural and reliable than writing long text explanations.

- Faster, steadier systems: Fixed-step image generation makes run-time predictable, which is useful for apps, games, robotics, and education tools that need quick, dependable reasoning.

- Teamwork wins: DiffThinker can act as a visual “problem solver,” while a text-based model plays the “rule checker.” Together, they can solve more complex tasks.

- Ready for more: As video models get faster, the idea of “thinking with video” may grow. For now, image generation hits the sweet spot between quality and speed.

- Big picture: This paradigm—generative multimodal reasoning—could lead to AI assistants that plan and solve by “drawing” their thoughts, making them better at long, complex, vision-heavy challenges.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a consolidated list of what remains missing, uncertain, or unexplored in the paper, framed as concrete directions for future research.

- Generalization to naturalistic visual scenes: Tasks are synthetic, grid-structured, and highly parseable; validate DiffThinker on real-world images with clutter, occlusions, lighting variations, and semantic complexity (e.g., street navigation from aerial imagery, real jigsaw photos, hand-written Sudoku scans).

- Unified multi-task reasoning: Models are trained per task; investigate a single DiffThinker that can generalize across domains (planning, combinatorial optimization, CSPs, spatial configuration) without task-specific fine-tuning.

- Dependence on MLLM-derived conditioning: The conditioning latent h is produced by an MLLM; quantify sensitivity to the conditioning source, compare against purely visual encoders, and assess cross-model compatibility and robustness when the MLLM is noisy or misaligned.

- Visual-to-symbolic parsing robustness: Provide a formal description and release code for the parser V, measure its error rates, and analyze how minor rendering artifacts, font variations, or path thickness affect correctness and fairness across paradigms.

- Evaluation metrics for ambiguous solutions: TSP and some planning tasks can have multiple valid or near-optimal solutions; define metrics beyond “accuracy,” such as normalized tour length gap, constraint satisfaction rates, and equivalence classes of solutions.

- Comparison with classical solvers: Benchmark against domain-specific algorithms (e.g., Concorde or Lin–Kernighan for TSP, backtracking/constraint propagation for Sudoku, exact maze solvers) to contextualize performance and efficiency vs. established baselines.

- Scaling behavior on extreme problem sizes: Characterize accuracy/latency/memory on very large instances (e.g., TSP with thousands of cities, maze grids >1k×1k, Sudoku with minimal clues) and provide scaling laws.

- Stochasticity and seed sensitivity: Analyze variability across random seeds, quantify the benefit of generating multiple candidates, and study how “native parallelism” interacts with sampling noise and candidate diversity.

- Theoretical grounding of “reasoning via diffusion”: Formalize why rectified flow/ODE integration yields effective constraint satisfaction and global planning; connect to optimization landscapes, energy-based views, or structured priors.

- Data efficiency and domain shift: Beyond the reported 30k samples per task, measure learning curves in low-data regimes, generalization to unseen distributions (beyond VisPuzzle), and transfer learning across related tasks.

- Robustness to input perturbations: Evaluate adversarial noise, rotations, perspective distortions, occlusions, compression artifacts, and font/style changes (e.g., Sudoku digits) to assess resilience of generative reasoning and parsing.

- Interpretability of latent trajectories: Develop tools to inspect and explain the generative trajectory (e.g., constraint activation over steps, path pruning decisions), bridging qualitative figures to quantitative interpretability.

- Enforcing hard constraints during generation: Explore integrating differentiable constraint checkers, plug-in verifiers, or guidance conditioned on symbolic validity to guarantee Sudoku validity or TSP tour correctness during sampling.

- Breadth and cost of collaboration with MLLMs: The collaboration is shown only for Jigsaw level-4; evaluate across all tasks, quantify accuracy-vs-latency tradeoffs as the candidate count N scales, and compare against programmatic verifiers instead of MLLMs.

- Fairness of efficiency comparisons: Training/inference time comparisons are limited in scope; include memory footprint, batch sizes, solver variants (Euler vs. higher-order), token limits for MLLMs, and account for parsing overhead to ensure apples-to-apples evaluation.

- Video-based reasoning scope: DiffThinker-Video is only tested on Maze level-8; study more complex temporal tasks (e.g., multi-step manipulation, dynamic environments), optimize video diffusion efficiency, and clarify when video offers clear advantages over images.

- Agentic, interactive settings: Test in environments where the state evolves from actions (e.g., embodied navigation, game puzzles), assessing closed-loop visual reasoning with observations and replanning over long horizons.

- Safety, reliability, and failure modes: Document and analyze typical failure cases (e.g., off-grid paths, near-miss constraint violations, ambiguous visual outputs), detect hallucinations, and propose safeguards for high-stakes deployments.

- Reproducibility and release details: Clarify dataset generation procedures, difficulty scaling, parser implementations, hyperparameters (e.g., step schedules, noise scales), and provide code/model releases with licenses to enable independent validation.

- Coverage of language-heavy reasoning: Evaluate tasks that require extensive textual reasoning, multi-step instruction following, and logical deduction from long context, to probe how image-centric reasoning interfaces with language.

- Architectural ablations: Compare MMDiT vs. U-Net backbones, latent vs. pixel-space generation, flow matching vs. DDPM/DDIM, different ODE solvers and schedulers, and assess their impact on reasoning quality and cost.

- Beyond Sudoku for CSPs: Extend to richer constraint satisfaction problems (e.g., Kakuro, Nonograms, SAT, graph coloring) and identify representation and parsing strategies for non-grid CSPs.

- Real-time and streaming constraints: Examine early-exit strategies, progressive decoding, and anytime performance under strict latency budgets; quantify the performance-latency frontier beyond the 20-step default.

- Integration with verifiable RL for diffusion: Explore RLHF or verifiable reward mechanisms tailored to diffusion models to reinforce reasoning quality during training, akin to R1/GRPO for MLLMs.

- Long-horizon claims vs. evidence: Provide benchmarks that genuinely require very long sequences of decisions, and measure whether generative trajectories maintain consistency over extended horizons.

- Multimodal conditioning breadth: Test robustness to ambiguous or underspecified instructions, multi-image inputs, and mixed modalities (audio/3D), and quantify alignment failures between textual cues and visual outputs.

- Non-grid and 3D tasks: Investigate reasoning in free-form geometric settings (e.g., 3D path planning, robotics trajectories, continuous optimization) where parseability is harder and constraints are continuous.
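One of the metrics suggested above, the normalized tour length gap for TSP, is simple enough to sketch. The function names and the four-city example are illustrative assumptions. The reference tour is assumed to come from an exact or strong classical solver, which is not shown.

```python
import math


def tour_length(cities, order):
    """Length of the closed tour that visits the cities in the given order."""
    n = len(order)
    return sum(
        math.dist(cities[order[i]], cities[order[(i + 1) % n]])
        for i in range(n)
    )


def is_valid_tour(order, n):
    """A valid tour visits each of the n cities exactly once."""
    return sorted(order) == list(range(n))


def optimality_gap(cities, predicted, reference):
    """Relative excess length of the predicted tour over the reference tour."""
    return tour_length(cities, predicted) / tour_length(cities, reference) - 1.0


cities = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]  # unit square
reference = [0, 1, 2, 3]  # perimeter tour, length 4
predicted = [0, 2, 1, 3]  # crosses both diagonals
print(is_valid_tour(predicted, len(cities)), optimality_gap(cities, predicted, reference))
```

A gap of 0 means the predicted tour matches the reference length. Reporting the gap alongside a validity rate separates "not a tour at all" from "a tour, but longer than necessary".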

Glossary

- Autoregressive MLLMs: Models that generate sequences token-by-token, conditioning each token on prior outputs. "rooted in autoregressive MLLMs"

- Chain-of-Thought (CoT): A technique where models produce intermediate textual reasoning steps to improve problem-solving. "Chain-of-Thought (CoT)"

- Classifier-Free Guidance (CFG): A diffusion guidance method that mixes conditional and unconditional predictions to steer sampling. "Classifier-Free Guidance (CFG)"

- Combinatorial optimization: Optimization over discrete structures to find the best combination under constraints. "combinatorial optimization"

- Constraint satisfaction: Problems that require assignments meeting all specified constraints. "constraint satisfaction"

- Data manifold: The lower-dimensional surface in data space where valid samples lie. "the data manifold"

- Diffusion models: Generative models that transform noise into data via iterative denoising or learned flows. "Diffusion models have emerged as the dominant framework for generative modeling."

- Euler solver: A first-order numerical integration method for ODEs used to step generative flows. "first-order Euler solver"

- Flow Matching: A training framework that learns a velocity field to map noise to data via ODE trajectories. "employs Flow Matching"

- Flow-based methodologies: Generative approaches that transform simple distributions into complex ones via learned flows. "flow-based methodologies"

- GRPO: A reinforcement learning optimization paradigm used to fine-tune reasoning models. "GRPO (Shao et al., 2024)"

- Latent diffusion models: Diffusion models that operate in a compressed latent representation rather than pixel space. "latent diffusion models (Rombach et al., 2022)"

- Latent space: A compact representation space (e.g., from a VAE) where generation and reasoning occur efficiently. "the latent space of a Variational Autoencoder (VAE)"

- Latent visual tokens: Discrete or continuous tokens representing visual content in a model’s latent representation. "latent visual tokens"

- Logit-normal distribution: A distribution where the logit of a variable is normally distributed, used for timestep sampling. "a logit-normal distribution"

- Multimodal Diffusion Transformer (MMDiT): A diffusion architecture using transformers to model cross-modal dependencies. "a Multimodal Diffusion Transformer (MMDiT) (Esser et al., 2024)"

- Multimodal LLMs (MLLMs): Large models that process and reason over multiple modalities, such as text and images. "Multimodal LLMs (MLLMs)"

- Multimodal-to-Image: A reasoning paradigm mapping multimodal inputs directly to an output image. "DiffThinker: Multimodal-to-Image."

- Multimodal-to-Text: A reasoning paradigm mapping multimodal inputs to textual outputs. "Standard MLLMs: Multimodal-to-Text."

- Native parallel reasoning: Exploring multiple candidate solutions simultaneously within the generative process. "DiffThinker as a Native Parallel Reasoner."

- Ordinary Differential Equations (ODEs): Differential equations defining continuous-time dynamics used for generative flows. "Ordinary Differential Equations (ODEs)"

- Reinforcement Learning with Verifiable Reward: RL methods that use programmatically checkable rewards to guide reasoning. "Reinforcement Learning with Verifiable Reward"

- Sequential planning: Tasks requiring a sequence of actions to reach a goal state. "sequential planning"

- Spatial configuration: Tasks involving arranging components in space to form a coherent whole. "spatial configuration"

- Thinking with Images: A paradigm where models iteratively manipulate or generate images during reasoning. "'Thinking with Images' MLLMs interact with multimodal inputs through iterative tool calls."

- Thinking with Video: A paradigm where models reason by interacting with or generating videos. "the 'Thinking with Video' paradigm"

- Traveling Salesperson Problem (TSP): The NP-hard task of finding the shortest tour visiting all cities exactly once. "Traveling Salesperson Problem (TSP) (Jünger et al., 1995)."

- Variational Autoencoder (VAE): A generative model that encodes data into a latent distribution and decodes samples back to data space. "Variational Autoencoder (VAE) (Kingma & Welling, 2013)"

- Velocity field: A vector field indicating how latent points should move over time to transform noise into data. "the velocity field that transforms noise into the data distribution"

- Visual Spatial Planning (VSP): A benchmark assessing spatial planning and reasoning in grid-based environments. "Visual Spatial Planning (VSP) (Wu et al., 2024)"
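Two of the glossary entries reduce to one-line formulas. The sketch below shows their standard textbook forms: a logit-normal timestep is a sigmoid applied to a Gaussian draw, and classifier-free guidance extrapolates from the unconditional prediction toward the conditional one. The default parameters and the guidance scale of 4.0 are placeholders, not settings taken from the paper.

```python
import math
import random


def sample_logit_normal(rng, mean=0.0, std=1.0):
    """Draw t in (0, 1) such that logit(t) is Normal(mean, std)."""
    z = rng.gauss(mean, std)
    return 1.0 / (1.0 + math.exp(-z))


def cfg_combine(v_uncond, v_cond, scale):
    """Classifier-free guidance: v_uncond + scale * (v_cond - v_uncond), elementwise."""
    return [u + scale * (c - u) for u, c in zip(v_uncond, v_cond)]


rng = random.Random(0)
timesteps = [sample_logit_normal(rng) for _ in range(5)]
print(timesteps)

# scale=1 reproduces the conditional prediction; larger values push past it.
print(cfg_combine([0.0, 1.0], [2.0, 3.0], scale=4.0))
```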

Practical Applications

Below is an overview of practical applications enabled by the DiffThinker paradigm (generative multimodal reasoning with diffusion models), grounded in its demonstrated strengths: vision-native reasoning, native parallelism (multi-hypothesis exploration), controllable and bounded inference cost, and effective collaboration with MLLMs for verification.

Immediate Applications

- Warehouse/mobile robot path overlays on mapped floorplans — DiffThinker generates safe paths directly as image overlays that are then parsed into waypoints/actions; its native parallelism yields N alternative routes for a verifier to select. Sector: robotics, logistics. Product/workflow: “Path Overlay Service” integrated into robot stacks (e.g., ROS) with a rule-based or LLM verifier; fixed-step inference guarantees predictable latency on-device or at the edge. Assumptions/dependencies: discretized/grid-aligned maps, robust image-to-action parser, local safety layers and collision checks, domain fine-tuning data.

- Picker route suggestions for small to mid-scale picking (TSP-style) — Visual route loops rendered over store/warehouse maps to minimize walking distance; verifier enforces aisle-direction, one-way zones, or priority items. Sector: retail, logistics. Product/workflow: WMS plug-in that requests N candidates and auto-selects via constraints; integrates with handheld scanners/AR headsets. Assumptions/dependencies: limited number of stops per batch, accurate store topology, dynamic constraints handled via verifier or re-plan triggers.

- 2D packing and layout arrangement (Jigsaw analog) — Arrange items (labels, cartons, images) into printable or cuttable sheets while respecting no-overlap and margin rules; output is a visually parseable layout. Sector: manufacturing/printing/design. Product/workflow: “N-up Layout Assistant” for prepress/CAD that proposes multiple candidate arrangements for operator approval. Assumptions/dependencies: domain parsers for cut lines and bleeds, constraint sets (material, machine bed sizes), task-specific data for fine-tuning.

- Visual QA for assembly and kitting — Compare photographed assemblies to target configurations and render corrective overlays (misplaced component highlighting, suggested swaps). Sector: manufacturing/quality. Product/workflow: camera + DiffThinker cell that outputs “fix-it” overlays; verifier checks bill-of-materials and tolerances. Assumptions/dependencies: consistent imaging conditions, labeled exemplars per SKU, calibrated parsers for pass/fail criteria.

- Education and consumer apps for visual logic — Sudoku, jigsaw, mazes, geometric constructions shown as solution images or step-limited sequences to teach spatial reasoning. Sector: education, gaming. Product/workflow: “Visual Reasoning Tutor” that generates hint overlays and N-step solutions with predictable compute time; can collaborate with an LLM to explain rationale. Assumptions/dependencies: content licenses, age-appropriate safety, anti-cheating modes.

- UI/dashboard auto-layout on grids — Generate visually balanced arrangements (widgets, charts, ads) subject to alignment and spacing constraints, delivered as editable overlays. Sector: software/design. Product/workflow: Figma/PowerPoint plugin that proposes N layouts; designer selects and edits. Assumptions/dependencies: grid or constraint schema, training data mirroring brand/style guides, parser to convert overlays to layout specs.

- Visual hypothesis generator for agentic systems — DiffThinker produces N candidate solution images; an MLLM verifies constraints and chooses the best (as shown in the paper’s collaborative pipeline). Sector: AI platforms, research, software. Product/workflow: microservice (“Visual Solver”) callable from agent frameworks to handle spatial reasoning subtasks. Assumptions/dependencies: reliable verifiers (programmatic or LLM), rate-limited GPU serving, deterministic step budget aligned with SLAs.

- Campus/hospital indoor navigation overlays — Render obstacle-aware routes on building maps for wayfinding apps; fixed-step inference enables predictable on-device latency. Sector: mapping/AR. Product/workflow: mobile SDK generating route overlays with accessibility constraints; optional N-candidate reranking. Assumptions/dependencies: well-registered and discretized floorplans, accurate obstacle updates, strong on-device parsers.

- Game development QA and content tooling — Auto-solve and stress-test puzzle/maze levels; generate solution assets for level designers. Sector: gaming. Product/workflow: Unity/Unreal plugin producing annotated path overlays and “golden” solves. Assumptions/dependencies: game-level export to parseable images, task-specific fine-tuning, version control integration.
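Several of the workflows above depend on a parser that turns a drawn path overlay into executable moves. A minimal sketch follows, assuming some earlier step (not shown) has already reduced the overlay to a grid in which `#` marks path cells. The grid encoding, the move names, and `parse_path` itself are hypothetical and are not the paper's parser.

```python
def parse_path(grid, start, goal):
    """Follow a 4-connected path of '#' cells from start to goal.

    Returns a list of moves ('U', 'D', 'L', 'R'), or None if the path
    dead-ends, branches, or never reaches the goal.
    """
    moves = {"U": (-1, 0), "D": (1, 0), "L": (0, -1), "R": (0, 1)}
    rows, cols = len(grid), len(grid[0])
    pos, prev, out = start, None, []
    for _ in range(rows * cols):  # a simple path has fewer moves than cells
        if pos == goal:
            return out
        options = []
        for name, (dr, dc) in moves.items():
            cell = (pos[0] + dr, pos[1] + dc)
            inside = 0 <= cell[0] < rows and 0 <= cell[1] < cols
            if inside and grid[cell[0]][cell[1]] == "#" and cell != prev:
                options.append((name, cell))
        if len(options) != 1:
            return None  # dead end or ambiguous branch
        name, cell = options[0]
        out.append(name)
        prev, pos = pos, cell
    return None


overlay = [
    "##.",
    ".#.",
    ".##",
]
print(parse_path(overlay, start=(0, 0), goal=(2, 2)))
```

Rejecting ambiguous or broken paths instead of guessing keeps parsing errors visible. A downstream verifier or safety layer can then treat a `None` result as a failed candidate.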

Long-Term Applications

- City-scale logistics with complex constraints (time windows, capacities, traffic) — Multi-candidate route overlays for dispatchers that integrate with operational research solvers and live data streams. Sector: transportation/logistics. Product/workflow: “Visual Route Explorer” that proposes alternative plans for human-in-the-loop selection; verifier handles regulatory/delivery constraints. Assumptions/dependencies: scaling beyond small TSP instances, heterogeneous constraints, robust uncertainty handling, strong telemetry integration.

- Dynamic path planning via video reasoning (DiffThinker-Video) — Predictive sequences that visualize future trajectories around moving obstacles for robots/vehicles. Sector: automotive/robotics. Product/workflow: “Video Planner” that outputs short plan clips; rule-based/LLM verifier confirms safety margins before actuation. Assumptions/dependencies: more efficient and accurate video diffusion models, real-time guarantees, formal safety cases and certification.

- Surgical and interventional planning — Visual overlays showing safe tool paths or access corridors in anatomy; multi-hypothesis plans vetted by clinical rules. Sector: healthcare. Product/workflow: pre-op planning assistant rendering candidate trajectories and constraints; intra-op AR overlays. Assumptions/dependencies: rigorous clinical validation, patient-specific imaging data, regulatory approval, robust parsers for anatomical constraints.

- Facility/architectural layout and chip floorplanning — Generate candidate placements/paths (rooms, machines, nets) that satisfy spatial/constraint rules; native parallelism accelerates design-space exploration. Sector: AEC, semiconductor. Product/workflow: “Layout Explorer” producing N alternatives passed to simulators/DRC; verifier enforces codes and design rules. Assumptions/dependencies: extending to 2.5D/3D representations, complex multi-constraint verification, co-optimization loops with existing CAD/EDA tools.

- Urban planning and policy co-design — Produce multiple pedestrian/bike network or evacuation overlays for participatory workshops; visual-first alternatives improve stakeholder dialogue. Sector: public policy/civil engineering. Product/workflow: “Scenario Studio” that renders N plan overlays for debate and scoring; verifiers evaluate accessibility, equity, and compliance KPIs. Assumptions/dependencies: high-quality geospatial data, governance processes for model transparency, bias and fairness audits.

- Emergency response and evacuation planning — Rapidly explore multi-candidate egress routes and staging layouts under changing hazards. Sector: public safety. Product/workflow: command center tool generating overlays and live re-plans; verifier runs fast simulators before dissemination. Assumptions/dependencies: trustable hazard models, robust comms, certified decision support procedures.

- 3D packing/container loading and warehouse slotting — Extend jigsaw-like spatial configuration to 3D bin packing and pallet/container stowage with stability constraints. Sector: logistics/shipping. Product/workflow: “3D Stowage Assistant” generating N load plans with weight/COG checks. Assumptions/dependencies: 3D generative reasoning capability, physics/stability verification, forklift/handling constraints.

- Multi-robot swarm coordination — Visual multi-agent path overlays that minimize conflicts and idle time. Sector: robotics/automation. Product/workflow: “Swarm Planner” that proposes coordinated trajectories; verifier enforces communication and kinematic limits. Assumptions/dependencies: multi-agent scalability, communication-aware constraints, formal conflict resolution.

- Visual scheduling/timetabling — Paint constraint-satisfying schedules as calendar heatmaps/overlays for classes, staff, or manufacturing cells; verifier ensures hard constraints. Sector: education/operations. Product/workflow: “Visual Scheduler” that enumerates candidate timetables for admin approval. Assumptions/dependencies: robust mapping of symbolic CSPs to image representations, high-quality parsers back to structured schedules, hybrid loops with OR solvers.

- Energy network siting and routing — Overlays of candidate line routes/renewable placements balancing cost, risk, and environmental constraints. Sector: energy. Product/workflow: planning console that proposes N alternatives for stakeholder review; verifier encodes regulatory/environmental rules. Assumptions/dependencies: authoritative GIS layers, multi-criteria verification, public consultation requirements.

Notes on cross-cutting feasibility

- Data and parsers: Many applications require domain-specific datasets and reliable parsers to translate visual solutions into executable actions or structured outputs; the paper’s use of parseable grid tasks is a strong design pattern to replicate.

- Verification is pivotal: The collaborative “generator (DiffThinker) + verifier (rule engine or MLLM)” pattern is central to safety and correctness, especially in regulated or dynamic settings.

- Bounded compute: Fixed-step inference supports predictable SLAs and on-device deployment; however, model size and GPU availability (or quantization to edge accelerators) remain practical constraints.

- Generalization: Reported gains are on vision-centric, parseable tasks (planning, TSP, jigsaw, Sudoku). Extrapolation to open-world or highly non-visual constraints depends on further research and hybridization with symbolic/OR methods.

- Video reasoning: The paper’s results indicate current video models carry higher cost and lower accuracy than image-based reasoning; improvements in video generative efficiency are a prerequisite for time-critical, dynamic applications.
