- The paper introduces a two-stage autoregressive distillation framework that transforms high-fidelity bidirectional diffusion models into low-latency, streaming generators for interactive human avatars.

- It introduces two mechanisms, Reference Sink and Reference-Anchored Positional Re-encoding (RAPR), to maintain long-term stability and identity preservation in full-body video synthesis.

- The system integrates audio-conditioned attention and adversarial refinement, achieving state-of-the-art performance in realistic lip-sync, fluid gestures, and responsive interactions.

StreamAvatar: Streaming Diffusion Models for Real-Time Interactive Human Avatars

Introduction and Scope

The paper "StreamAvatar: Streaming Diffusion Models for Real-Time Interactive Human Avatars" (2512.22065) introduces a two-stage autoregressive framework that turns high-fidelity bidirectional diffusion models into real-time streaming generators for interactive human avatar video synthesis. Prior work is limited by high computational latency, by bidirectional attention that precludes streaming, and by a scope restricted to the head and shoulders; StreamAvatar instead supports full-body generation with coherent talking and listening behaviors. The design addresses three core challenges (efficient streaming, long-duration stability, and interactive responsiveness) and yields avatars with natural transitions, temporal consistency, and rich gestures across interaction phases.

Two-Stage Autoregressive Adaptation and Acceleration Framework

StreamAvatar leverages a two-stage pipeline to transition from a high-quality, non-causal teacher diffusion model to a causal, low-latency, streaming student model.

Stage 1: Autoregressive Distillation

This stage restructures the underlying Diffusion Transformer (DiT) from bidirectional to block-wise causal attention, partitioning the sequence into a reference chunk and generation chunks. Score Identity Distillation (SiD) trains the student to match the teacher's denoised outputs. This is combined with a student-forcing scheme in which each chunk is conditioned on the student's own previous outputs rather than on ground-truth frames, which mitigates train/test mismatch and enables efficient rollouts.
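The two Stage 1 ideas can be sketched in plain Python. The mask assumes the token sequence is split into a reference chunk followed by generation chunks of known sizes, and that a token may attend to every token in its own chunk and in all earlier chunks (the usual block-causal pattern). `block_causal_mask` and `student_forcing_rollout` are hypothetical helpers for illustration, not code from the paper, and `generate_chunk` stands in for one few-step pass of the student DiT.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def block_causal_mask(chunk_sizes: List[int]) -> List[List[bool]]:
    """mask[i][j] is True when query token i may attend to key token j.

    chunk_sizes[0] is the reference chunk; the remaining entries are
    generation chunks in temporal order (hypothetical layout).
    """
    chunk_of: List[int] = []
    for chunk_idx, size in enumerate(chunk_sizes):
        chunk_of.extend([chunk_idx] * size)
    n = len(chunk_of)
    # Full attention inside a chunk, causal attention across chunks:
    # a token sees its own chunk and every earlier chunk, never a later one.
    return [[chunk_of[j] <= chunk_of[i] for j in range(n)] for i in range(n)]


def student_forcing_rollout(
    generate_chunk: Callable[[T, List[T]], T], reference: T, num_chunks: int
) -> List[T]:
    """Roll out chunks, conditioning each on the student's own earlier outputs."""
    history: List[T] = []
    for _ in range(num_chunks):
        # The context is built from what the student generated, not from
        # ground-truth frames, so training sees the same inputs as inference.
        history.append(generate_chunk(reference, list(history)))
    return history
```

For example, `block_causal_mask([1, 2, 2])` yields a 5×5 mask whose first row lets the reference token attend only to itself, while the rows of the final chunk attend to all five tokens.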

Stability and Consistency Mechanisms

- Reference Sink: Ensures persistent access to identity-defining reference features within the KV cache, preventing identity drift in long sequences.

- Reference-Anchored Positional Re-encoding (RAPR): Recalculates and caps positional indices during streaming, which avoids the out-of-distribution (OOD) positions that vanilla RoPE produces on long sequences and keeps attention to the reference frame from decaying, thereby stabilizing long-duration output.
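A minimal sketch of how the two mechanisms could fit together in a streaming loop, under stated assumptions: the cache holds the reference entry permanently plus a rolling window of recent chunks, and RAPR is modeled as re-indexing only the frames still visible in the cache so that the new chunk's temporal distance to the reference (index 0) stays bounded. The class and function names are hypothetical, the paper's exact re-encoding rule may differ, and re-indexing presumes cached keys are stored before rotary embeddings are applied so they can be re-rotated.

```python
from collections import deque
from typing import Any, List


class ReferenceSinkCache:
    """Hypothetical streaming KV cache with a permanent reference sink."""

    def __init__(self, reference_kv: Any, window_chunks: int) -> None:
        self.reference_kv = reference_kv                  # never evicted
        self.window: deque = deque(maxlen=window_chunks)  # oldest chunk dropped when full

    def append(self, chunk_kv: Any) -> None:
        self.window.append(chunk_kv)

    def context(self) -> List[Any]:
        # Entries the next chunk attends to: reference sink first, then recent chunks.
        return [self.reference_kv] + list(self.window)


def vanilla_positions(frames_so_far: int, chunk_frames: int) -> List[int]:
    # Vanilla RoPE: the reference sits at index 0 and each new frame gets a larger
    # index, so long streams reach positions never seen during training.
    start = 1 + frames_so_far
    return list(range(start, start + chunk_frames))


def rapr_positions(frames_so_far: int, chunk_frames: int, window_frames: int) -> List[int]:
    # Assumed RAPR-style rule: count only frames still visible in the cache, so the
    # new chunk's indices (its distance to the reference at 0) are capped at
    # window_frames + chunk_frames however long the stream runs.
    visible = min(frames_so_far, window_frames)
    start = 1 + visible
    return list(range(start, start + chunk_frames))
```

With a 12-frame window, `rapr_positions(1000, 3, 12)` returns `[13, 14, 15]`, while `vanilla_positions(1000, 3)` returns `[1001, 1002, 1003]`; early in the stream, before the window fills, the two agree. Setting `window_frames` to the cache's `window_chunks` times the chunk length keeps the indices consistent with what the cache actually holds.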

Figure 1: Schematic of the two-stage adaptation: the bidirectional DiT is converted into a block-causal DiT with Reference Sink and RAPR, followed by adversarial refinement via a Consistency-Aware Discriminator.

Figure 2: Comparison of vanilla RoPE and RAPR. RAPR resolves long-sequence attention decay and train/inference positional mismatch.

Stage 2: Adversarial Refinement

To counter the visual artifacts and instabilities caused by aggressive distillation (e.g., reducing sampling to 3 steps), an adversarial refinement stage employs a Consistency-Aware Discriminator built from multi-frame Q-Formers. This dual-branch discriminator penalizes frame-level realism discrepancies and global identity inconsistency relative to the reference. Training uses a relativistic adversarial loss with gradient regularization to improve distributional alignment.
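The summary names a relativistic adversarial loss with gradient regularization but not its exact form. The sketch below assumes the common relativistic pairing (RpGAN) formulation with softplus, an R1-style squared-gradient penalty, and a simple weighted fusion of the two discriminator branches; `fuse_branches`, `alpha`, and `gamma` are hypothetical. Logits and gradients are plain Python numbers here; in practice they would come from the Q-Former discriminator and an autodiff framework.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def fuse_branches(frame_logit: float, identity_logit: float, alpha: float = 0.5) -> float:
    # Hypothetical fusion of the frame-realism branch and the
    # reference-identity-consistency branch into one critic score.
    return alpha * frame_logit + (1.0 - alpha) * identity_logit


def relativistic_d_loss(real: Sequence[float], fake: Sequence[float]) -> float:
    # The discriminator is rewarded for scoring each real sample above its paired fake.
    pairs = list(zip(real, fake))
    return sum(softplus(f - r) for r, f in pairs) / len(pairs)


def relativistic_g_loss(real: Sequence[float], fake: Sequence[float]) -> float:
    # The generator (the distilled student) is rewarded for the opposite ordering.
    pairs = list(zip(real, fake))
    return sum(softplus(r - f) for r, f in pairs) / len(pairs)


def gradient_penalty(grads: Sequence[Sequence[float]], gamma: float = 1.0) -> float:
    # R1-style penalty: (gamma / 2) * mean squared norm of dD/dx on real inputs.
    return 0.5 * gamma * sum(sum(g * g for g in v) for v in grads) / len(grads)
```

Because each loss depends only on the difference between paired real and fake scores, the discriminator judges realism relative to real samples rather than in absolute terms, which is the defining property of the relativistic formulation.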

Interactive Human Generation Model Design

The interactive model extends Wan2.2-TI2V-5B [Wan] with audio-conditioned attention modules that support synchronized talking and listening phases. A binary audio mask, derived with TalkNet, separates speaking and listening windows. The mask is applied after feature extraction, so the extractor sees the unmodified waveform and the downstream features do not drift from their expected distribution. The masked Wav2Vec features are then injected as phase-specific cues through dedicated audio-attention layers.
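One reading of post-extraction masking, sketched under assumptions: Wav2Vec features are extracted from the full, unmodified waveform at the video frame rate, and a per-frame 0/1 speaking mask (from an active-speaker detector such as TalkNet) then routes each frame's features to either a talking or a listening stream, zeroing the other. The function name and the zero-fill convention are hypothetical; the paper's exact routing into its audio-attention layers may differ.

```python
from typing import List, Sequence, Tuple

Features = List[List[float]]


def split_features_by_mask(
    features: Sequence[Sequence[float]], speaking_mask: Sequence[int]
) -> Tuple[Features, Features]:
    """Split per-frame audio features into talking and listening streams.

    features: one feature vector per frame, extracted from the *unmasked*
        waveform, so the extractor never sees artificial silence.
    speaking_mask: 1 where the avatar is talking, 0 where it is listening.
    """
    if len(features) != len(speaking_mask):
        raise ValueError("need exactly one mask value per feature frame")
    talking: Features = []
    listening: Features = []
    for vec, speaking in zip(features, speaking_mask):
        zeros = [0.0] * len(vec)
        # Each frame's features go to exactly one stream; the other gets zeros.
        talking.append([float(x) for x in vec] if speaking else zeros)
        listening.append(zeros if speaking else [float(x) for x in vec])
    return talking, listening
```

A pre-mask variant would instead zero waveform segments before extraction, feeding the extractor inputs unlike those it was trained on; the ablation reports that post-extraction masking performs markedly better.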

Figure 3: Transformer block architecture with extended audio-related attention modules for talking/listening conditioning.

The result is a unified system that produces smooth, phase-appropriate transitions with fluid gestures and reactions, in contrast to prior methods that remain static while listening or show little expressiveness beyond the face.

Experimental Results

Qualitative Results

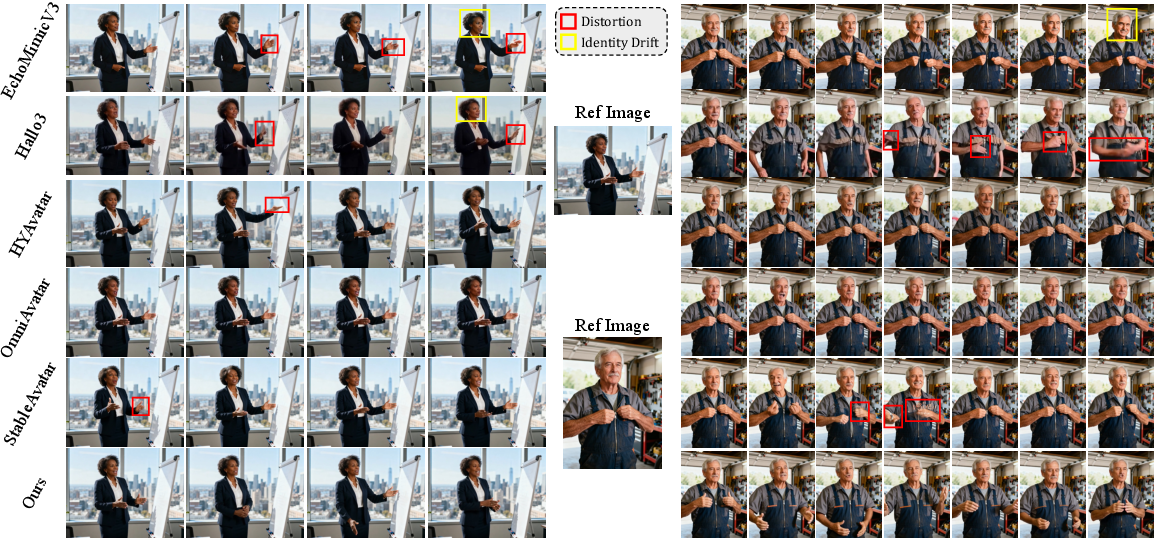

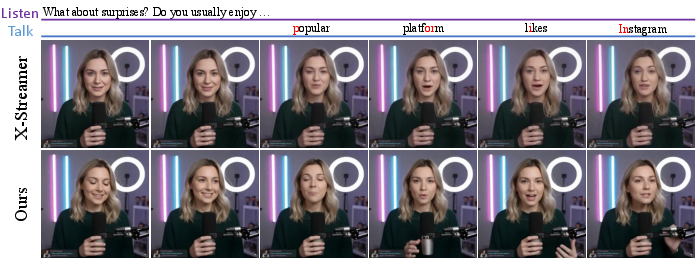

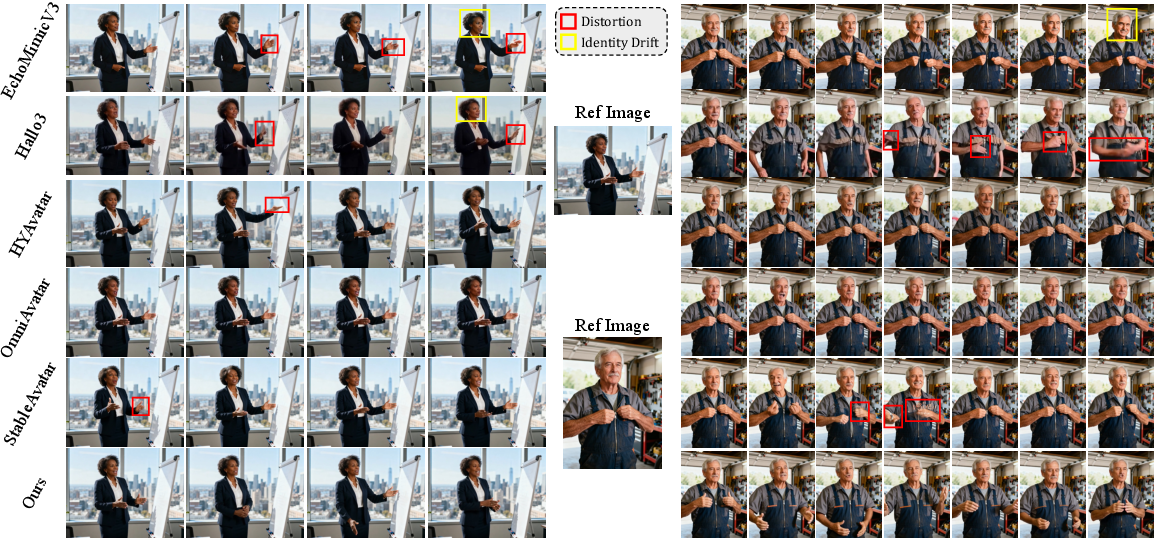

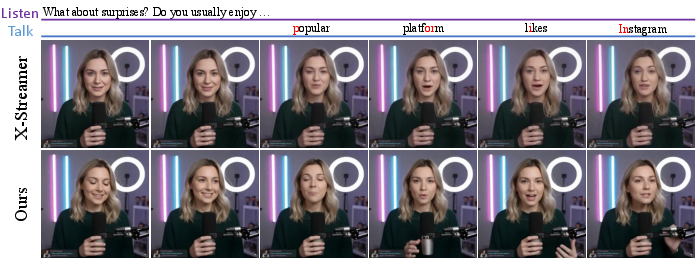

Comparative visual analysis (Figs. 4 and 5) shows StreamAvatar outperforming state-of-the-art (SOTA) methods in both talking and interactive scenarios. Whereas competing methods frequently exhibit distortions, error accumulation, and limited gesture diversity, StreamAvatar avatars maintain consistent identity, stronger motion dynamics, and realistic responses to listening cues.

Figure 4: Qualitative comparison with SOTA talking avatar generation techniques.

Figure 5: Interactive avatar generation; the baseline remains static while StreamAvatar reacts naturally, transitioning between phases with fluid gestures and expressions.

The system performs strongly across multiple datasets and metrics. In talking avatar generation, StreamAvatar outperforms or matches SOTA methods in FID, FVD, ASE, IQA, anomaly rate (HA), and motion dynamics (HKV), despite operating at substantially reduced latency (20 s to generate a 5 s, 720p video with 3 sampling steps). Notably, it achieves the highest listening-phase keypoint variance (LBKV, LHKV, LFKV) among the compared methods, indicating the richest gestures during responsive interaction.
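The exact metric definitions are not given in this summary. Assuming a keypoint-variance metric is the temporal variance of detected 2D keypoint coordinates averaged over keypoints, and that the listening-phase variants restrict it to listening frames and to a keypoint subset (for instance body, hand, or face), a sketch could look like this. `keypoint_variance` is a hypothetical helper, and keypoint detection itself is out of scope.

```python
from statistics import pvariance
from typing import Optional, Sequence, Tuple

Frame = Sequence[Tuple[float, float]]


def keypoint_variance(
    tracks: Sequence[Frame], frame_mask: Optional[Sequence[int]] = None
) -> float:
    """Mean over keypoints of the temporal variance of their x and y coordinates.

    tracks: per frame, the (x, y) positions of the same ordered set of keypoints.
    frame_mask: optional 0/1 per frame selecting which frames count
        (e.g. 1 for listening-phase frames).
    """
    frames = [f for i, f in enumerate(tracks) if frame_mask is None or frame_mask[i]]
    if len(frames) < 2:
        raise ValueError("need at least two selected frames")
    num_kp = len(frames[0])
    total = 0.0
    for k in range(num_kp):
        xs = [frame[k][0] for frame in frames]
        ys = [frame[k][1] for frame in frames]
        # Population variance over time; higher means more motion.
        total += pvariance(xs) + pvariance(ys)
    return total / num_kp
```

A static avatar scores 0, so a method that freezes during listening would score near zero on a listening-only variant of this measure.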

Ablation Studies

Adding Reference Sink, RAPR, and adversarial refinement one at a time consistently improves stability, motion expressiveness, and identity preservation. Applying the audio mask after feature extraction performs markedly better than masking before extraction.

Figure 6: Ablation study showcasing the impact of Reference Sink, RAPR, and consistency-aware GAN refinement on temporal stability and motion realism.

User and Head-to-Head Comparison Studies

Extensive user studies (Fig. 7) confirm StreamAvatar's perceptual superiority over competitive baselines in audio-lip synchronization, dynamics, continuity, quality, and identity. Qualitative comparisons with interactive head generation methods (ARIG, INFP) and recent streaming systems (MIDAS, X-Streamer) further validate StreamAvatar's one-shot, full-body capability and interaction realism.

Figure 7: User preference analysis for StreamAvatar versus state-of-the-art methods.

Figure 8: Comparison with SOTA interactive head generation models, demonstrating competitive visual quality and responsiveness.

Figure 9: Comparison with MIDAS in streaming interactive avatar generation, showing superior lip sync and expression accuracy.

Figure 10: Comparison with X-Streamer, illustrating advanced listening behavior diversity and motion richness.

Practical and Theoretical Implications

StreamAvatar addresses fundamental bottlenecks in real-time avatar generation, enabling streaming synthesis with minimal computational overhead and robust long-term consistency. It also extends interaction beyond facial expressions to full-body expressiveness. This benefits practical deployments in virtual assistants, education, and entertainment, and advances the theoretical understanding of causal distillation and positional encoding in diffusion transformers.

The Reference Sink and RAPR strategies offer generalizable ways to keep outputs anchored to their context in autoregressive sequence modeling, which is relevant to video and sequential generation more broadly. The dual-branch consistency-aware discriminator extends adversarial training by integrating local and global temporal constraints.

Speculation on Future Directions

Despite strong results, limitations remain in modeling occluded regions and in further reducing decoding latency. Incorporating external memory modules or long-term context attention may enable minute-scale generation with better content consistency. More efficient VAE decoding could reduce pipeline latency and support real-time interaction on consumer hardware. Broader integration of multi-modal cues and personalized behavior profiles may make avatar responses more humanlike in dynamic environments.

Conclusion

StreamAvatar sets a new standard for real-time, interactive human avatar video generation by adapting and distilling diffusion transformers into streaming-capable models with stability, consistency, and rich full-body expressiveness. Its design and demonstrated results offer foundational insights for future work on interactive avatar synthesis, streaming generative modeling, and human-centered AI interfaces.